- #1

fluidistic

Gold Member

- 3,924

- 261

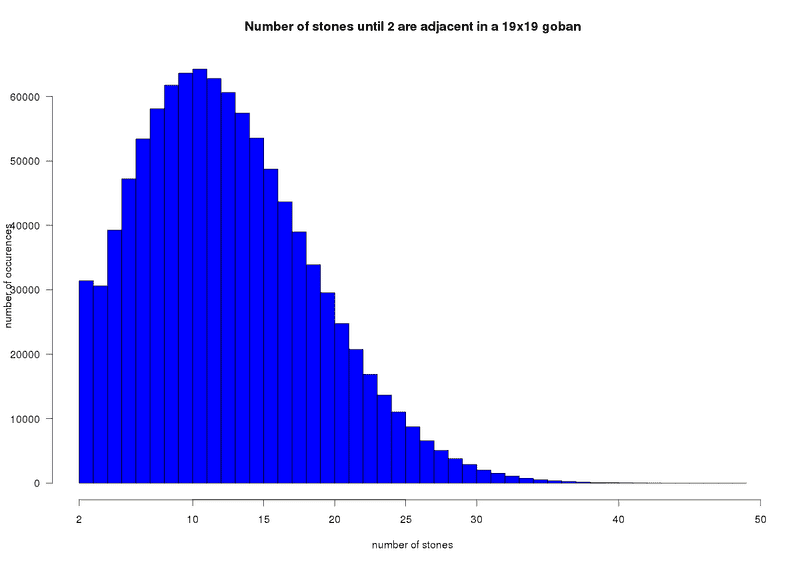

I've written a program that simulates placing pieces randomly on an nxn chessboard, one by one until 2 are adjacent. When 2 are adjacent I stop, and I report the number of pieces it took.

I've plotted a histogram of the number of pieces it took vs number of occurences just to see what kind of distribution it is. To my surprise, with big boards (such as 19x19 like in the game of go), I've noticed a very strange behavior of the histogram/distribution. There is a very slight decrease in probability to stop at 3 pieces compared than to stop at 2 pieces, something that does not happen for smaller boards. I've told this behavior to a friend who studies maths and he didn't trust my results, saying my program is faulty.

So I would like to calculate the exact probability to stop at step 2 and at step 3 for a 19x19 chessboard. I consider that 2 pieces are adjacent when when they are either at the right/left or bottom/top of each other; in diagonal they aren't adjacent.

I'd like to have some guidance on how to proceed, I don't really know where to start.

I've plotted a histogram of the number of pieces it took vs number of occurences just to see what kind of distribution it is. To my surprise, with big boards (such as 19x19 like in the game of go), I've noticed a very strange behavior of the histogram/distribution. There is a very slight decrease in probability to stop at 3 pieces compared than to stop at 2 pieces, something that does not happen for smaller boards. I've told this behavior to a friend who studies maths and he didn't trust my results, saying my program is faulty.

So I would like to calculate the exact probability to stop at step 2 and at step 3 for a 19x19 chessboard. I consider that 2 pieces are adjacent when when they are either at the right/left or bottom/top of each other; in diagonal they aren't adjacent.

I'd like to have some guidance on how to proceed, I don't really know where to start.