- #1

Math Amateur


I am reading Bruce N. Cooperstein's book: Advanced Linear Algebra (Second Edition) ... ...

I am focused on Section 10.1 Introduction to Tensor Products ... ...

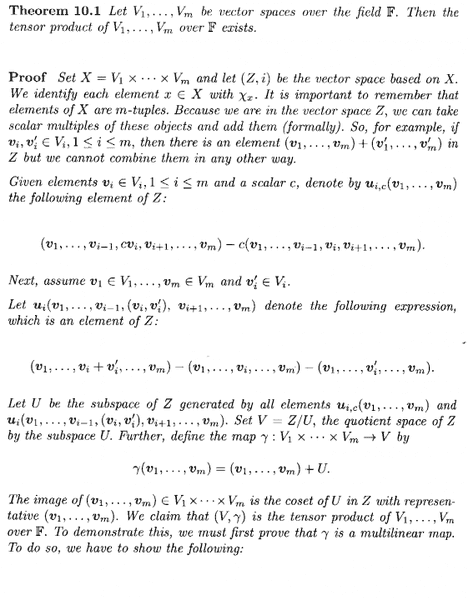

I need help with the proof of Theorem 10.1 on the existence of a tensor product ... ...

Theorem 10.1 reads as follows:

In the above text we read the following:

" ... ... Because we are in the vector space [itex]Z[/itex], we can take scalar multiples of these objects and add them formally. So for example, if [itex]v_i , v'_i \ , \ 1 \leq i \leq m[/itex], then there is an element [itex](v_1, \ ... \ , \ v_m ) + (v'_1, \ ... \ , \ v'_m )[/itex] in [itex]Z[/itex] ... ... "

So it seems that the elements of the vector space [itex]Z[/itex] are of the form [itex](v_1, \ ... \ , \ v_m )[/itex] ... ... the same as the elements of [itex]X[/itex] ... that is, [itex]m[/itex]-tuples ... except that [itex]Z[/itex] is a vector space, not just a set, so that we can add its elements and multiply them by scalars from [itex]\mathbb{F}[/itex] ... ...

... ... BUT ... ...

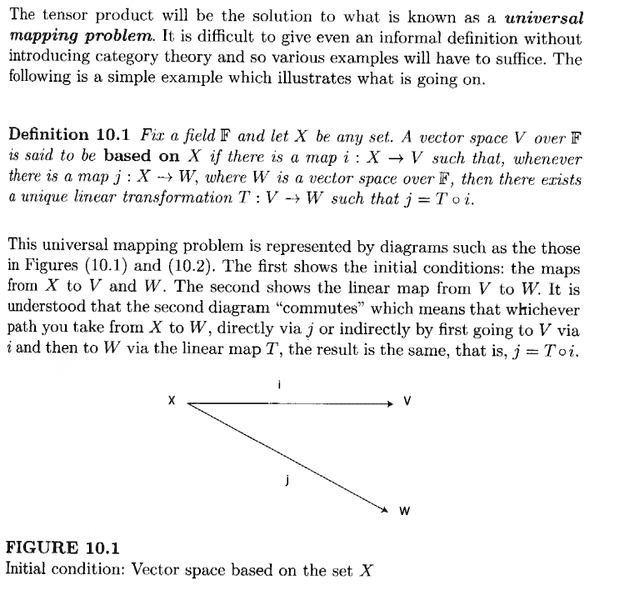

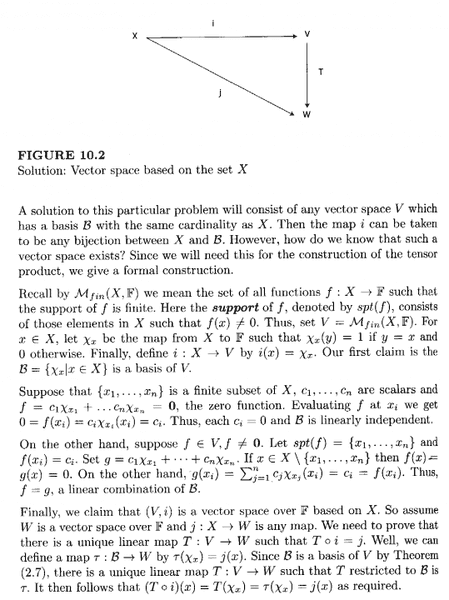

... earlier in 10.1 when talking about a UMP ... Cooperstein discussed a vector space [itex]V[/itex] based on a set [itex]X[/itex] and defined [itex]\lambda_x[/itex] to be a map from [itex]X[/itex] to [itex]\mathbb{F}[/itex] such that

[itex]\lambda_x (y) = 1[/itex] if [itex]y = x[/itex] and [itex]0[/itex] otherwise ...

Then [itex]i \ : \ X \longrightarrow V[/itex] was defined by [itex]i(x) = \lambda_x[/itex]

... as in the Cooperstein text at the beginning of Section 10.1 ...
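To make the definition of [itex]\lambda_x[/itex] and [itex]i[/itex] concrete, here is a minimal Python sketch of a vector space based on a set [itex]X[/itex]. It is only an illustration under stated assumptions: a finitely supported map from [itex]X[/itex] to [itex]\mathbb{F}[/itex] is stored as a dict of its nonzero values, [itex]\mathbb{F}[/itex] is taken to be the rationals, and the names `lam`, `add`, and `scale` are hypothetical helpers, not Cooperstein's notation. Whether [itex]Z[/itex] in Theorem 10.1 is built in exactly this way is what the question is asking, so the sketch assumes it rather than confirms it.

```python
from fractions import Fraction

def lam(x):
    """lambda_x: the map X -> F that is 1 at x and 0 elsewhere.

    Stored as a dict holding only the nonzero values (assumption).
    """
    return {x: Fraction(1)}

def add(f, g):
    """Pointwise sum of two finitely supported maps X -> F."""
    out = dict(f)
    for key, coeff in g.items():
        out[key] = out.get(key, Fraction(0)) + coeff
    # Drop zero coefficients so equal maps have equal dicts.
    return {key: coeff for key, coeff in out.items() if coeff != 0}

def scale(a, f):
    """Pointwise scalar multiple a * f."""
    return {key: a * coeff for key, coeff in f.items() if a * coeff != 0}

# i : X -> V sends x to lambda_x.
i = lam

# Elements of X: here 2-tuples of integers stand in for (v_1, v_2).
x = (1, 2)
y = (3, 4)

formal_sum = add(i(x), i(y))    # lambda_x + lambda_y
componentwise = i((4, 6))       # lambda of the componentwise sum
doubled = scale(Fraction(2), i(x))
```

In this sketch `formal_sum` has two nonzero entries, one at `x` and one at `y`, so it is a different element from `componentwise`. Likewise `doubled` is twice [itex]\lambda_x[/itex], not [itex]\lambda[/itex] of the tuple `(2, 4)`. Under this reading, "adding formally" means adding the maps [itex]\lambda_x[/itex], not adding tuples entry by entry.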

The relevant text from Cooperstein reads as follows:
