An Introduction to Computer Programming Languages

In this Insights Guide, the focus is entirely on types of languages, how they relate to what computers do, and how they are used by people. It is a general discussion and intended to be reasonably brief, so there is very little focus on specific languages. Please keep in mind that for just about any topic mentioned in this article, entire books have been written about it, versus the few sentences it gets here.

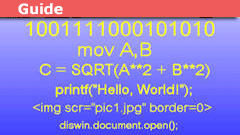

The primary focus is on

• Machine language

• Assembly language

• Higher Level Languages

And then some discussion of

• interpreters (e.g. BASIC)

• markup languages (e.g. HTML)

• scripting languages (e.g. JavaScript, Perl)

• object-oriented languages (e.g. Java)

• Integrated Development Environments (e.g. Visual Studio)


Machine Language

Computers work in binary and their innate language is strings of 1’s and 0’s. In my early days, I programmed computers using strings of 1’s and 0’s, input to the machine by toggle switches on the front panel. You don’t get a lot done this way but fortunately, I only had to input a dozen or so instructions, which made up a tiny “loader” program. That loader program would then read in more powerful programs from magnetic tape. It was possible to do much more elaborate things directly via toggle switches, but remembering EXACTLY (mistakes are not allowed) which string of 1’s and 0’s represented which instruction was a pain.

SO … very early on the concept of assembly language arose.

Assembly Language

This is where you represent each machine instruction’s string of 1’s and 0’s by a human-language word or abbreviation, such as “add” or “ad” and “load” or “ld”. These human-readable “words” are translated to machine code by a program called an assembler.

Basic assemblers have an exact one-to-one correspondence between each assembly language statement and the corresponding machine language instruction. (Macro assemblers let a single statement expand into several instructions, but every machine instruction is still spelled out explicitly by the programmer.) This is quite different from high-level languages, where compilers can generate dozens of machine language instructions for a single high-level language statement. Assemblers are a step away from machine language in terms of usability, but when you program in assembly you are programming in machine language. You are using words instead of strings of 1’s and 0’s, but assembly language is direct programming of a computer, with full control over data flow, register usage, I/O, etc. None of those are directly accessible in most higher-level languages.
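The one-to-one translation described above can be sketched in a few lines of Python. This is only a toy: the mnemonics, the opcode numbers, and the 8-bit word layout (4 bits of opcode, 4 bits of address) are invented for illustration and do not belong to any real machine.

```python
# Toy assembler: each statement becomes exactly one machine word.
# The instruction set below is hypothetical, not that of any real CPU.
OPCODES = {
    "load": 0b0001,
    "add": 0b0010,
    "sub": 0b0011,
    "store": 0b0100,
}


def assemble(source):
    """Translate one statement per line into one 8-bit word per line."""
    words = []
    for line in source.strip().splitlines():
        mnemonic, operand = line.split()
        # High 4 bits: the opcode. Low 4 bits: a memory address from 0 to 15.
        word = (OPCODES[mnemonic] << 4) | int(operand)
        words.append(format(word, "08b"))
    return words


program = """
load 12
add 13
store 14
"""

machine_code = assemble(program)
for word in machine_code:
    print(word)
```

Three statements go in and three strings of 1’s and 0’s come out, which is the whole point: the assembler saves you from memorizing bit patterns, but it does not decide anything for you.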

Every type of computer has its own set of machine language instructions, and so every type of machine has its own assembly language. This can be a bit frustrating when you have to move to a new machine and learn the assembly language all over again. Having learned one, it’s fairly easy to learn another, but it can be very annoying to have to remember that a simple word in one assembly language (e.g. “load”) is slightly different (e.g. “ld”) or even considerably different (e.g. “mov”) in another. Also, machines have slightly different instruction sets, which means you not only have new “words” to learn, you also lose some instructions and gain others. All this means there can be a time-consuming focus on the implementation of an algorithm instead of just being able to focus on the algorithm itself.

The good news about assembly language is that you are not programming some “pillow” that sits between you and the machine, you are programming the machine directly. This is most useful for writing things like device drivers where you need to be down in the exact details of what the machine is doing. It also allows you to do the best possible algorithm optimization, but for complex algorithms you would need a VERY compelling reason indeed to go to the trouble of writing them in assembly, and with today’s very fast computers, that’s not likely to occur.

The bad news about assembly language is that you have to tell the machine EVERY ^$*&@^#*! step that you want it to perform, which is tedious. An algebraic statement such as

A = B + COSINE(C) – D

has to be turned into a string of instructions something like what follows (which is a pseudo assembly language that is MUCH more verbose than actual assembly)

• load memory location C to register 1

• Call Subroutine “Cosine” [thus getting the cosine of what’s in register 1 back into register 1 by calling a subroutine]

• Load memory location B to register 2

• Add registers 1 and 2 [thus putting the sum into register 1]

• load memory location D to register 2

• subtract register 2 from register 1 [thus putting the difference into register 1]

• save register 1 to memory location A [final value]
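The steps above can be traced in Python by treating two variables as registers and a dictionary as memory. This is a sketch of the sequence only, not real assembly; the values stored in B, C, and D are made up so that the result is easy to check by hand.

```python
import math

# Memory locations named in the text; the values in B, C and D are made up.
memory = {"A": 0.0, "B": 2.0, "C": 0.0, "D": 0.5}
registers = {1: 0.0, 2: 0.0}


def cosine_subroutine():
    """Replace the value in register 1 with its cosine."""
    registers[1] = math.cos(registers[1])


registers[1] = memory["C"]                  # load memory location C to register 1
cosine_subroutine()                         # call subroutine "Cosine"
registers[2] = memory["B"]                  # load memory location B to register 2
registers[1] = registers[1] + registers[2]  # add registers 1 and 2, sum in register 1
registers[2] = memory["D"]                  # load memory location D to register 2
registers[1] = registers[1] - registers[2]  # subtract register 2 from register 1
memory["A"] = registers[1]                  # save register 1 to memory location A

print(memory["A"])
```

With these values, cos(0) is 1, so A ends up holding 2 + 1 - 0.5.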

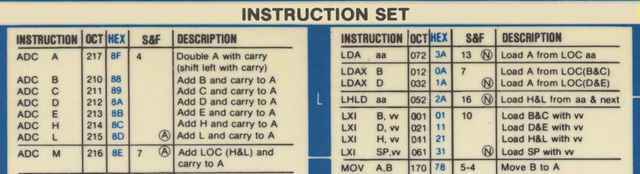

And that’s a VERY simple algebraic statement, and you have to specify each of those steps. In the right order. Without any mistakes. It will make your head hurt. Just to give you some of the flavor of assembly, here’s a very small portion of an 8080 instruction guide (the 8080 was a CPU used in early personal computers). Note that both the octal and hex versions of the binary code are given. These were used during debugging.
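To show why a guide would list both octal and hex, here is a small Python sketch that prints the same opcode byte in binary, octal, and hex. The handful of opcode values are believed to match the 8080 instruction set, but treat them as an assumption and check them against an 8080 reference before relying on them.

```python
# A few 8080 opcodes (assumed values; verify against an 8080 reference).
OPCODES = {
    "NOP": 0x00,
    "MOV A,B": 0x78,
    "HLT": 0x76,
    "ADD B": 0x80,
    "RET": 0xC9,
}


def describe(value):
    """Return the binary, octal and hex spellings of one opcode byte."""
    return format(value, "08b"), format(value, "03o"), format(value, "02X")


for mnemonic, value in OPCODES.items():
    binary, octal, hexadecimal = describe(value)
    print(f"{mnemonic:8} {binary}  {octal}  {hexadecimal}")
```

Octal and hex are just shorthand for the same bits: three bits per octal digit, four bits per hex digit. Either one is far easier to read off a listing than eight 1’s and 0’s.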

Because of the tediousness of all this, compilers were developed.

COMPILERS / HIGHER-LEVEL LANGUAGES

Compilers take away the need for assembly language to specify the minutiae of the machine code-level manipulation of memory locations and registers. This makes it MUCH easier to concentrate on the algorithm that you are trying to implement rather than the details of the implementation. Compilers take complex math and semi-human language statements and translate them into machine code to be executed. The bad news is that when things go wrong, figuring out WHAT went wrong is more complicated than in machine/assembly code because the details of what the machine is doing (which is where things go wrong) are not obvious. This means that sophisticated debuggers are required for error location and correction. As high-level languages add layers of abstraction, the distance between what you as a programmer are doing and what the machine is doing down at the detailed level gets larger and larger. This could make debugging things intolerable, but the good news is that today’s programming environments are just that; ENVIRONMENTS. That is, you don’t usually program a computer language anymore, you program an Integrated Development Environment (IDE) and it includes extensive debug facilities to ease the pain of figuring out where you have gone wrong when you do. And you will. Inevitably.
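To get a feel for how one high-level statement fans out into many low-level steps, Python’s standard `dis` module can list what a single statement compiles to. One caveat: Python compiles to bytecode for its own virtual machine, not to real machine code, so this is an analogy rather than a look at what a C or Fortran compiler emits. The exact instructions listed also vary between Python versions.

```python
import dis

# One high-level statement. The names a, b, c, d and cos are placeholders;
# the statement is only compiled here, never executed.
statement = "a = b + cos(c) - d"

code_object = compile(statement, "<example>", "exec")
instructions = list(dis.get_instructions(code_object))

for instruction in instructions:
    print(instruction.opname, instruction.argrepr)

print(len(instructions), "bytecode instructions for one statement")
```

The listing reads a lot like the pseudo assembly earlier in the article: load this, load that, call, add, subtract, store.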

Early high level languages were:

• Fortran (mid-1950’s)

• Autocode (1952)

• ALGOL (late 1950’s)

• COBOL (1959)

• LISP (late 1950’s)

• APL (1960’s)

• BASIC (1964)

• PL/1 (1964)

• C (early 1970’s)

Since then there have been numerous developments, including

• Ada (late 70’s, early 80’s)

• C++ (1985)

• Python (late 1980’s)

• Perl (1987)

• Java (early 1990’s)

• JavaScript (mid-1990’s)

• Ruby (mid-1990’s)

• PHP (1994)

• C# (2002)

There were others along the way but they had relatively little impact compared to the ones listed above. Even the ones named have had varying degrees of utility, popularity, widespread use, and longevity. One of the lesser-known ones was JOVIAL, a variant of ALGOL (which was originally called the “International Algorithmic Language”). I mention JOVIAL only because the name always tickled me. It was developed by Jules Schwartz and JOVIAL stands for “Jules’ Own Version of the International Algorithmic Language”. I enjoy his lack of modesty.

A special note about the C programming language. C caught on over time as one of the most widely used computer languages of its time, and the syntax rules (and even some of the statements) of several more modern languages come directly from C. The kernels of the UNIX, Windows, and Apple operating systems are written largely in C (with some assembly for device drivers), as is some of the rest of each operating system’s code. Unix is written almost entirely in C and requires a C compiler. Because of the way it was developed and implemented, C is, by design, about as close to assembly language as you can get in a high-level language. Certainly, it is closer than other high-level languages. Java is perhaps more widely used today but C is still a popular language.

INTERPRETERS / SCRIPTING LANGUAGES / COMMAND LANGUAGES

Interpreters are unlike assemblers and compilers in that they do not generate machine code into an object file and then execute that. Rather, they interpret each statement into machine code one statement (or even just parts of statements) at a time and perform the necessary calculations/manipulations, saving intermediate results as necessary. The use of registers and such low-level detail is completely hidden from the user. The bad news about interpreters is that they are slower than mud compared to executable code generated by assemblers and compilers but that’s much less of an issue today given the speed of modern computers. The particularly good news is that they are amenable to on-the-fly debugging. That is, you can break a program at a particular instruction, change the values of the variables being processed, and even change the subsequent instructions and then resume interpretation. This was WAY more important in the early days of computers when doing an edit-compile-run cycle could require an overnight submission of your program to the computer center.

These days computers are so fast and so widely available that the edit-compile-run cycle is relatively trivial, taking minutes or even less as opposed to half a day. One thing to note about interpreted languages is that because of the sequential interpretation of the statements, you can have syntax errors that don’t raise any error flags until the attempt is made to interpret them. Assemblers and compilers process your entire program before creating executable object code and so are able to find syntax errors prior to run-time. Some interpreters do a pre-execution pass and do find syntax errors prior to running the program. Even when they don’t, dealing with syntax errors is less painful than with compilers because, given the nature of interpreters, you can simply fix the syntax error during run-time (“interpret-time” actually) and continue on. The first widely used interpreted language was BASIC (there were others, but they were less widely known). Another type of scripting language is JavaScript, which is interpreted one step at a time like BASIC but is not designed to be changed on the fly the way BASIC statements can be. Rather, it is designed to be embedded in HTML files (see Markup Languages below).
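Here is a minimal sketch of the point about late-detected syntax errors, written in Python. The two-statement mini-language (LET and PRINT) is made up for this example and is not real BASIC. The interpreter handles one line at a time, so the typo on line 3 is not noticed until lines 1 and 2 have already run.

```python
class ToySyntaxError(Exception):
    """Raised when the toy interpreter reaches a line it cannot understand."""


def run(program, output):
    """Interpret a made-up mini-language one line at a time.

    Supported statements:
        LET name = integer
        PRINT name
    Printed values are appended to the output list as they are produced.
    """
    variables = {}
    for number, line in enumerate(program, start=1):
        words = line.split()
        if len(words) == 4 and words[0] == "LET" and words[2] == "=":
            variables[words[1]] = int(words[3])
        elif len(words) == 2 and words[0] == "PRINT":
            output.append(variables[words[1]])
        else:
            # The bad line is only noticed when execution reaches it.
            raise ToySyntaxError(f"line {number}: cannot interpret {line!r}")


program = [
    "LET x = 5",
    "PRINT x",
    "PRNT x",   # typo: a syntax error on line 3
    "PRINT x",
]

printed = []
message = None
try:
    run(program, printed)
except ToySyntaxError as error:
    message = str(error)
    print("stopped:", message)

print("output before the error:", printed)
```

A compiler, or an interpreter with a pre-execution pass, would have rejected the whole program before printing anything.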

A very important type of scripting language is the command script language, used to write what are often called “shell scripts”. Early PC system control was done with the DOS command interpreter, which is one such. Probably the most well-known scripting language for system administration is Perl. In its heyday, it was the language most widely used by system administrators to automate operational and administrative tasks. It is still widely used and there are a lot of applications still running that have been built in Perl. It runs on just about all operating systems and still has a very active following because of its versatility and power.

MARKUP LANGUAGES

The most widely used markup language is HTML (Hypertext Markup Language). It defines a set of rules for encoding documents in a format that is both human-readable and machine-readable. You will often hear the phrase “HTML code”. Technically this is a misnomer since HTML is NOT “code” in the original sense, because its purpose is not to result in any machine code. HTML documents are just data. They do not get translated to machine language and executed. They are read as input data. HTML is read by a program called a browser and is used not to specify an algorithm but rather to describe how a web page should look. HTML doesn’t specify a set of steps, it “marks up” the look and feel of how a browser creates a web page.

To go at this again, because it’s fairly important to understand the difference between markup languages and other languages, consider the following. Assembly and compiled languages result in what is called object code, that is, a direct machine language result of the assembly or compilation, and the object code persists during execution. Interpreted languages are interpreted directly AT execution time so clearly exist during execution. Markup languages are different in that they do not persist during execution and in fact do not themselves result directly in any execution at all. They provide information that allows a program called a browser to create a web page with a particular look and feel. So HTML is not code in the sense that a program is code; it is just data to be used by the browser program. A significant adjunct to HTML is CSS (Cascading Style Sheets), which can be used to further refine the look and feel of a web page. The latest version of HTML, HTML5, is a significant increase in the power and flexibility of HTML but further discussion of CSS and HTML5 is beyond the scope of this article. Another significant markup language is XML, also beyond the scope of this article.
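The “HTML is just data” point can be seen by writing a program that reads some. The sketch below uses `HTMLParser` from Python’s standard library; the `TagLister` class and the tiny document are made up for this example. A browser does vastly more than this, but the relationship is the same: the program runs, the HTML is its input.

```python
from html.parser import HTMLParser


class TagLister(HTMLParser):
    """Collect the opening tags and the text found in an HTML document."""

    def __init__(self):
        super().__init__()
        self.tags = []
        self.text = []

    def handle_starttag(self, tag, attrs):
        self.tags.append(tag)

    def handle_data(self, data):
        # Ignore runs of pure whitespace between tags.
        if data.strip():
            self.text.append(data.strip())


document = "<html><body><h1>Hello</h1><p>Some <b>bold</b> text.</p></body></html>"

lister = TagLister()
lister.feed(document)
lister.close()

print(lister.tags)
print(lister.text)
```

Nothing in the HTML string is ever executed. The only code that runs is the Python program reading it.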

You can embed procedural code inside an HTML file in the form of, for example, JavaScript and the browser will treat it differently than the HTML lines. JavaScript is generally implemented as an interpreted script language but there are compilers for it. The use of JavaScript is pretty much a requirement for web pages that are interactive on the client side. Web servers have their own set of procedural code (e.g. PHP) that can be embedded in HTML and used to change what is served to the client. The browser does not use the JavaScript code as input data but rather treats it as a program to be executed before the web page is presented and even while the web page is active. This allows for dynamic web pages where user input can cause changes to the web page and the manipulation and/or storage of information from the user.

INTEGRATED DEVELOPMENT ENVIRONMENTS

Programming languages these days come with an entire environment surrounding them, including for example object creation by drag and drop. You don’t program the language so much as you program the environment. The number of machine language instructions generated by a simple drag and drop of a control onto the window you are working on can be enormous. You don’t need to worry about such things because the environment takes care of all that. The compiler is augmented in the environment by automatic linkers, loaders, and debuggers so you get to focus on your algorithm and the high-level implementation and not worry about the low-level implementation. These environments are now called “Integrated Development Environments” (IDE’s) which is a very straightforward description of exactly what they are. They include a compiler, a debugger, and a help tool, as well as additional facilities that can be extremely useful (such as a version control system, a class browser, an object browser, support for more than one language, etc.). These extensions will not be explored in this article.

Because the boundary between a full bore IDE and a less powerful programming environment is vague, it is quite arguable just how long IDE’s have existed but I think that it is fair to say that in their current very powerful incarnations they began to evolve seriously in the 1980s and came on even stronger in the ’90s. Today’s incarnations are more evolutionary from the ’80s and ’90s than the more revolutionary nature of what was happening in those decades.

So to summarize, the basic types of computer languages are

• assembler

• compiler

• interpreted

• scripting

• markup

• database (not discussed in this article)

and each has its own set of characteristics and uses.

Within the confines of those general characteristics, there are a couple of other significant categorizations of languages, the most notable being object-oriented languages and event-driven languages. These are not themselves languages but they have a profound effect on how programs work so deserve at least a brief discussion in this article.

OBJECT-ORIENTED LANGUAGES

“Normal” compiled high-level languages have subroutines that are very rigid in their implementation. They can, of course, have things like “if … then” statements that allow for different program paths based on specific conditions but everything is well defined in advance. “Objects” are sets of data and code that work together in a more flexible way than exists in non-object-oriented programming.
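A minimal sketch of “data and code that work together”, in Python. The class names Shape, Rectangle, and Circle are invented for illustration. Each object carries its own data, and the same `describe` call does the right thing for each kind of shape without any “if … then” on the caller’s side.

```python
import math


class Shape:
    """An object bundles data (name) with the code that works on it."""

    def __init__(self, name):
        self.name = name

    def area(self):
        raise NotImplementedError

    def describe(self):
        # Calls whichever area() belongs to the actual object.
        return f"{self.name} with area {self.area():.2f}"


class Rectangle(Shape):
    def __init__(self, width, height):
        super().__init__("rectangle")
        self.width = width
        self.height = height

    def area(self):
        return self.width * self.height


class Circle(Shape):
    def __init__(self, radius):
        super().__init__("circle")
        self.radius = radius

    def area(self):
        return math.pi * self.radius ** 2


shapes = [Rectangle(2, 3), Circle(1)]
descriptions = [shape.describe() for shape in shapes]
for description in descriptions:
    print(description)
```

Adding a new kind of shape means adding a new class. The loop at the bottom does not change, which is a small taste of the flexibility being described.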

C++ tacked object-orientation onto C but object orientation is not enforced. That is, you can write a pure, non-object program in C++ and the compiler is perfectly happy to compile it much as a C compiler would. Data and code are not required to be part of objects. They CAN be but they don’t have to be. Java on the other hand is much closer to a “pure” object-oriented language. All data and code must be part of a class (although primitive values such as integers are not themselves objects). C# (“C sharp”) is Microsoft’s attempt to create a C-family language that is object-oriented in the same way as Java. C# was created by Microsoft and was at first tied to Windows, but the language has since been standardized and the open-source .NET platform also runs on Linux and macOS. I don’t know if it is still true, but an early comment about C# was “C# is sort of Java with reliability, productivity, and security deleted”.

Objects, and OOP, have great flexibility and extensibility but getting into all of that is beyond the scope of this modest discussion of types of languages.

EVENT-DRIVEN PROGRAMMING

“Normal” compiled high-level languages are procedural. That is, they specify a strictly defined set of procedures. It’s like driving a car. You go straight, turn left or right, stop then go again, and so forth, but you are following a specific single path, a procedural map.

“Event-driven” languages such as VB.NET are not procedural, they are, as the name clearly states, driven by events. Such events include, for example, a mouse-click or the press of a key. Having subroutines that are driven by events is not new at all. Even in the early days of computers, things like a keystroke would cause a system interrupt (a hardware action) that would cause a particular subroutine to be called. BUT … the integration of such event-driven actions into the very heart of programming is a different matter and is common in modern IDEs in ways that are far more powerful than early interrupt-driven system calls. It’s somewhat like playing a video game where new characters can pop up out of nowhere and characters can teleport to a different place and the actions of one character affect the actions of other characters. Like OOP, event-driven programming has its power and pitfalls but those are beyond the scope of this article. Both Apple operating systems and Microsoft operating systems strongly support event-driven programming. It’s pretty much a necessity in today’s world of highly interactive, window-based computer screens.
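The core idea can be sketched in a few lines of Python. The `EventDispatcher` class and the event names are made up for this example, and the “events” are simulated by a list rather than coming from a real mouse or keyboard. What matters is the shape of the program: you register subroutines ahead of time, and the order in which they run is decided by what happens, not by the order of your code.

```python
from collections import defaultdict


class EventDispatcher:
    """Keep a list of handler functions for each named event."""

    def __init__(self):
        self._handlers = defaultdict(list)

    def on(self, event_name, handler):
        """Register a handler to be called whenever event_name fires."""
        self._handlers[event_name].append(handler)

    def fire(self, event_name, payload):
        """Call every handler registered for event_name."""
        for handler in self._handlers[event_name]:
            handler(payload)


log = []
dispatcher = EventDispatcher()
dispatcher.on("mouse_click", lambda position: log.append(f"clicked at {position}"))
dispatcher.on("key_press", lambda key: log.append(f"pressed {key}"))

# Simulated user input; in a real program these would come from the OS.
incoming = [("key_press", "a"), ("mouse_click", (10, 20)), ("key_press", "b")]
for event_name, payload in incoming:
    dispatcher.fire(event_name, payload)

for entry in log:
    print(entry)
```

Change the order of the simulated input and the order of the log changes with it, even though the program text is untouched.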

GENERATIONS OF LANGUAGES

You’ll read in the literature about “second-generation”, “third-generation”, and so forth, computer languages. That’s not much discussed these days and I’ve never found it to be particularly enlightening or helpful. However, just for completeness, here’s a rough summary of “generations”. One reason I don’t care for this is that you can get into pointless arguments about exactly where something belongs on this list.

• first generation = machine language

• second generation = assembly language

• third generation = high level languages

• fourth generation = database and scripting languages

• fifth generation = Integrated Development Environments

Newer languages don’t replace older ones. Newer ones extend the capabilities and usability and are sometimes designed for specific reasons, such as database languages, rather than general use, but they don’t replace older languages. C, for example, is now 40+ years old but still in very wide use, and FORTRAN is even older, and yet because of the ENORMOUS number of scientific subroutines that were created for it over decades, it is still in use in some areas of science.

[NOTE: I had originally intended to have a second Insights article covering some of the more obscure topics not covered in this one, but I’m just not going to ever get around to it so I need to stop pretending otherwise]

Studied EE and Comp Sci a LONG time ago, now interested in cosmology and QM to keep the old gray matter in motion.

Woodworking is my main hobby.

Quote: I've got it about 1/3rd done but it's a low priority for me at the moment

No no! high priority! high priority! :biggrin:

Quote: Where's part 2?

I've got it about 1/3rd done but it's a low priority for me at the moment


Quote: Where does something like Scratch fall in these? Just another high-level language? Interpreted, or compiled?

From the comments near the bottom of the wiki page (https://en.wikipedia.org/wiki/Scratch_(programming_language)), it appears to be an interpreted language.


Quote: What about the "dot" directives in MASM (ML) 6.0 and later such as .if, .else, .endif, .repeat, … ?

https://msdn.microsoft.com/en-us/library/8t163bt0.aspx

Quote: Conditional assembly does not at all invalidate Scott's statement. I don't see how you think it does. What am I missing?

It's not conditional assembly (if else endif directives without the period prefix are conditional assembly), it's sort of like a high level language. For example:

    ;...
    .if eax == 1234
        ; ... code for eax == 1234 goes here
    .else
        ; ... code for eax != 1234 goes here
    .endif

The .if usually reverses the sense of the condition and conditionally branches past the code following the .if to the .else (or to a .endif).

Quote: …there are still mission-critical and/or safety-minded industries where "virtual" is a dirty word.

Yeah, I can see how that could be reasonable. OOP stuff can be nasty to debug.

Quote: But that does NOT even remotely take advantage of things like inheritance. Yes, you can have good programming practices without OOP, but that does not change the fact that the power of OOP far exceeds non-OOP in many ways. If you have programmed seriously in OOP I don't see why you would even argue with this.

I guess it's a matter of semantics. There was a time when OOP did not automatically include inheritance or polymorphism. By the way, the full OOP may "far exceed non-OOP", but there are still mission-critical and/or safety-minded industries where "virtual" is a dirty word.

Personally, I am satisfied when the objects are well-encapsulated and divided out in a sane way. Anytime I see code with someone else's "this" pointer used all over the place, I stop using the term "object-oriented".

Quote: What about the "dot" directives in MASM (ML) 6.0 and later such as .if, .else, .endif, .repeat, … ?

https://msdn.microsoft.com/en-us/library/8t163bt0.aspx

Conditional assembly does not at all invalidate Scott's statement. I don't see how you think it does. What am I missing?

Quote: What really defines an assembly language is that all of the resulting machine code is coded for explicitly.

What about the "dot" directives in MASM (ML) 6.0 and later such as .if, .else, .endif, .repeat, … ?

https://msdn.microsoft.com/en-us/library/8t163bt0.aspx

Quote: Paul, how come you didn't include LOLCODE in your summary? :oldbiggrin:

Mark, you are very weird :smile:


Quote: Or polymorphism, another attribute of object-oriented programming.

Right. I didn't want to bother typing out encapsulation and polymorphism so I said "things like … " meaning "there are more". Now you've made me type them out anyway. :smile:

Quote: But that does NOT even remotely take advantage of things like inheritance.

Or polymorphism, another attribute of object-oriented programming.

Quote: With a macro assembler, both the programmer and the computer manufacturer can define macros to be anything – calling sequences, structures, etc. Even the individual instructions were macros, so by changing the macros you could change the target machine.

DOH !!! I used to know that. Totally forgot. Thanks.

Quote: I suppose it depends on how much you include in the term object-oriented. Literally arranging your code into objects, making some "methods" public and others internal, was something I practiced before C++ or the term object-oriented were coined.

But that does NOT even remotely take advantage of things like inheritance. Yes, you can have good programming practices without OOP, but that does not change the fact that the power of OOP far exceeds non-OOP in many ways. If you have programmed seriously in OOP I don't see why you would even argue with this.

Quote: I stopped doing assembly somewhere in the mid-70's but my recollection of macro assemblers is that the 1-1 correspondence was not lost. Can you expand on your point?

With a macro assembler, both the programmer and the computer manufacturer can define macros to be anything – calling sequences, structures, etc. Even the individual instructions were macros, so by changing the macros you could change the target machine.

Quote: I never used Forth so may have shortchanged it.

It's important because it is an interpretive language that is pretty efficient. It contradicts your "slow as mud" statement.

Quote: I totally and completely disagree. OOP is a completely different programming paradigm.

I suppose it depends on how much you include in the term object-oriented. Literally arranging your code into objects, making some "methods" public and others internal, was something I practiced before C++ or the term object-oriented were coined.

Quote: As I read through the article, I had these notes:

Quote: 1) The description of assembler having a one-to-one association with the machine code was a characteristic of the very earliest assemblers. In the mid '70's, macro-assemblers came into vogue – and the 1-to-1 association was lost. What really defines an assembly language is that all of the resulting machine code is coded for explicitly.

I stopped doing assembly somewhere in the mid-70's but my recollection of macro assemblers is that the 1-1 correspondence was not lost. Can you expand on your point?

Quote: 2) It was stated that it is more difficult to debug compiler code than machine language or assembler code. I very much understand this. But it is true under very restrictive conditions that most readers would not understand. In general, a compiler will remove a huge set of details from the programmer's consideration and will thus make debugging easier – if for no other reason than there are fewer opportunities for making mistakes. Also (and I realize that this isn't the scenario considered in the article), when assembler is used today, it is often used in situations that are difficult to debug – such as hardware interfaces.

No argument, but too much info for the article.

Quote: 3) Today's compilers are often quite good at generating very efficient code – often better than what a human would do when writing assembly. However, some machines have specialized optimization opportunities that cannot be handled by the compiler. The conditions that dictate the use of assembly do not always bear on the "complexity". In fact, it is often the more complex algorithms that most benefit from explicit coding.

No argument, but too much info for the article.

Quote: 4) I believe the article misses a big one in the list of interpretive languages: Forth (c. 1970). It breaks the mold in that, although generally slower than compilers of that time, it was far from "slower than mud". It's also worth noting that Forth, and often other interpretive languages such as Basic, encode their source for run-time efficiency. So, as originally implemented, you could render your Forth or Basic as an ASCII "file" (or paper tape equivalent), but you would normally save it in a native form.

I never used Forth so may have shortchanged it. Intermediate code was explicitly left out of this article and will be mentioned in part 2.

Quote: 5) I think it is worth noting that Object-Oriented programming is primarily a method for organizing code.

I totally and completely disagree. OOP is a completely different programming paradigm.

Quote: It is very possible (and unfortunately quite common) to code "object dis-oriented" even when using OO constructs.

I completely agree but that does nothing to invalidate my previous sentence.

Quote: Similarly, it is very possible to keep code object oriented when the language (such as C) does not explicitly support objects.

Well, sort of, but not really. You don't get a true class object in non-OOP languages, nor do you have the major attributes of OOP (inheritance, etc).


Quote (FactChecker): In my defense, I think that the emergence of 5'th generation languages for simulation, math calculations, statistics, etc are the most significant programming trend in the last 15 years. There is even an entire physicsforum section primarily dedicated to it (Math Software and LaTeX). I don't feel that I was just quibbling about a small thing.

Fair enough. Perhaps that's something that I should have included, but there were a LOT of things that I thought about including. Maybe I'll add that to Part 2.

Quote (FactChecker): Sorry. I thought that was a significant category of languages that you might want to mention and that your use of the term "fifth generation" (which refers to that category) was not what I was used to. I didn't mean to offend you.

Oh, I wasn't offended and I'm sorry if it came across that way. My point was exactly what I said … I deliberately left out at least as much as I put in and I had to draw the line somewhere or else put in TONS of stuff that I did not feel was relevant to the thrust of the article and thus make it so long as to be unreadable. EVERYONE is going to come up with at least one area where they are confident I did not give appropriate coverage and if I satisfy everyone, again the article becomes unreadable.

In my defense, I think that the emergence of 5th generation languages for simulation, math calculations, statistics, etc. is the most significant programming trend in the last 15 years. There is even an entire Physics Forums section primarily dedicated to it (Math Software and LaTeX). I don’t feel that I was just quibbling about a small thing.

[QUOTE="phinds, post: 5689826, member: 310841"]To quote myself: […] and […][/quote]
Sorry. I thought that was a significant category of languages that you might want to mention and that your use of the term "fifth generation" (which refers to that category) was not what I was used to. I didn't mean to offend you.

[QUOTE="phinds, post: 5689826, member: 310841"]To quote myself:[/quote]
Although it's a good article, I don't get why you didn't include ____, _____, and _________. Especially _________ since it's my pet language. By the way, what does this word "overview" mean that you keep using?

[QUOTE="FactChecker, post: 5689803, member: 500115"]An excellent article. Thanks. I would make one suggestion: I think of fifth generation languages as including the special-purpose languages like simulation languages (DYNAMO, SLAM, SIMSCRIPT, etc.), MATLAB, Mathcad, etc. I didn't see that mentioned anywhere and I think they are worth mentioning.[/quote]
To quote myself:
[quote]One reason I don’t care for this is that you can get into pointless arguments about exactly where something belongs in this list.[/quote]
and
[quote]This was NOT intended as a thoroughly exhaustive discourse. If you look at the Wikipedia list of languages you'll see that I left out more than I put in but that was deliberate.[/quote]

I think LaTeX can go into one of those language categories, and if it does, markup language looks like a fitting candidate. Anyway, great and enlightening Insight.

An excellent article. Thanks. I would make one suggestion: I think of fifth generation languages as including the special-purpose languages like simulation languages (DYNAMO, SLAM, SIMSCRIPT, etc.), MATLAB, Mathcad, etc. I didn't see that mentioned anywhere and I think they are worth mentioning.

[QUOTE="Jaeusm, post: 5689649, member: 551236"]The entire computer science sub-forum suffers from this.[/quote]
Oh, it's hardly the only one.

[QUOTE="phinds, post: 5689333, member: 310841"]Yes, I've noticed this in many comment threads on Insight articles. People feel the need to weigh in with their own expertise without much regard to whether or not what they have to add is really helpful to the original intent and length of the article supposedly being commented on.[/quote]
The entire computer science sub-forum suffers from this.

[QUOTE="vela, post: 5689416, member: 221963"]You kind of alluded to this point when you mentioned interpreters make debugging easier, but I think the main advantage of interpreters is in problem solving. It's not always obvious how to solve a particular problem, and interpreters allow you to easily experiment with different ideas without all the annoying overhead of implementing the same ideas in a compiled language.[/quote]
Good point.

You kind of alluded to this point when you mentioned interpreters make debugging easier, but I think the main advantage of interpreters is in problem solving. It's not always obvious how to solve a particular problem, and interpreters allow you to easily experiment with different ideas without all the annoying overhead of implementing the same ideas in a compiled language.

[QUOTE="Greg Bernhardt, post: 5689338, member: 1"]No no no it's a great thing to do! Very rewarding and helpful! :)[/quote]
Yeah, that's EXACTLY what the overseers said to the slaves building the pyramids :smile:

[QUOTE="jim mcnamara, post: 5689101, member: 35824"]Your plight is exactly why I am loath to try an insight article.[/quote]
No no no it's a great thing to do! Very rewarding and helpful! :)

[QUOTE="jim mcnamara, post: 5689101, member: 35824"][USER=310841]@phinds[/USER] – Do you give up yet? This kind of scope problem is daunting. You take a generalized tack, people reply with additional detail. Your plight is exactly why I am loath to try an insight article. You are braver, hats off to you![/quote]
Yes, I've noticed this in many comment threads on Insight articles. People feel the need to weigh in with their own expertise without much regard to whether or not what they have to add is really helpful to the original intent of the article supposedly being commented on.

I should add that, regarding Lua, they say it is an interpreted language: "Although we refer to Lua as an interpreted language, Lua always precompiles source code to an intermediate form before running it. (This is not a big deal: Most interpreted languages do the same.)" This is different from a pure interpreter, which translates the source program line by line while it is being run. In any case you can read their explanation here: http://www.lua.org/pil/8.html
BTW, it is easy and instructive to write an interpreter for BASIC in C.
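The "precompile to an intermediate form, then run" behaviour quoted above from the Lua book can be seen directly in Python, which works the same way. This is only a sketch using Python as a stand-in (it says nothing about Lua's internals); `compile`, `exec` and the `dis` module are standard Python, while the one-line program is just an example.

```python
import dis

source = "total = sum(n * n for n in range(4))"

# Step 1: translate the whole source text to a code object (bytecode) up front.
code = compile(source, "<example>", "exec")

# Step 2: the virtual machine executes the precompiled form, not the text.
namespace = {}
exec(code, namespace)
print(namespace["total"])   # 0 + 1 + 4 + 9 = 14

# Optional: list the intermediate instructions (the listing varies by Python version).
dis.dis(code)
```

One practical consequence of the precompile step is that a syntax error anywhere in the source is reported before any of it runs, whereas a pure line-by-line interpreter would only trip over it on reaching that line.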

[QUOTE="nsaspook, post: 5689143, member: 351035"]Also missing is the language Forth.[/quote]
To repeat myself:
[quote]This was NOT intended as a thoroughly exhaustive discourse. If you look at the Wikipedia list of languages you'll see that I left out more than I put in but that was deliberate.[/quote]

[QUOTE="vela, post: 5688952, member: 221963"]Another typo: I believe you meant "fourth generation," not "forth generation."[/quote]
Also missing is the language Forth. The Forth language and dedicated stack processors are still used today for real-time motion control. I had a problem with a semiconductor processing tool this week that still uses a Forth processor to control Y mechanical and X electrical scanning.
https://en.wikipedia.org/wiki/RTX2010
https://web.archive.org/web/20110204160744/http://forth.gsfc.nasa.gov/


[QUOTE="jedishrfu, post: 5688853, member: 376845"]Scripting languages usually can interact with the command shell that you're running them in. They are interpreted and can evaluate expressions that are provided at runtime.[/quote]
[QUOTE="jedishrfu, post: 5688853, member: 376845"]Java is actually compiled into bytecodes that act as machine code for the JVM. This allows Java to span many computing platforms in a write once run anywhere kind of way.[/quote]
And of course something like Python has both these traits. But there are plenty of variations for scripting languages.
For example, some scripting languages are much less handy. So far as I know, AppleScript can't interact in a command shell, which makes it harder to code in; neither could older versions of WinBatch, back when I was cobbling together Windows scripts with it around 1999-2000. Looking at the product pages for WinBatch today, it still doesn't look like this limitation has been removed. Discovering Python after having learned on WinBatch for several years was like a prison break for me.
And I also like the example given above by [USER=608525]@David Reeves[/USER] of Lua, a non-interpreted, non-interactive scripting language: "Within a development team, some programmers may only need to work at the Lua script level, without ever needing to modify and recompile the core engine. For example, how a certain game character behaves might be controlled by a Lua script. This sort of scripting could also be made accessible to the end users. But Lua is not an interpreted language."

Yes, don't give up. Remember the Stone Soup story. You've got the soup in the pot and we're bringing the vegetables and meat. You've inspired me to write an article too. Jedi

[USER=310841]@phinds[/USER] – Do you give up yet? This kind of scope problem is daunting. You take a generalized tack, people reply with additional detail. Your plight is exactly why I am loath to try an insight article. You are braver, hats off to you!

[QUOTE="vela, post: 5688952, member: 221963"]Another typo: I believe you meant "fourth generation," not "forth generation."[/quote]
Thanks.

Another typo: I believe you meant "fourth generation," not "forth generation."

[QUOTE="stevendaryl, post: 5688394, member: 372855"]Something that I was never clear on was what made a "scripting language" different from an "interpreted language"? I don't see that much difference in principle between Javascript and Python, on the scripting side, and Java, on the interpreted side, other than the fact that the scripting languages tend to be a lot more loosey-goosey about typing.[/quote]
One key feature is that you can edit and run a script directly, as opposed to, say, Java, where you compile with one command (javac) and run with another (java). This means that with Java you can't use the shell trick of #!/bin/xxx to indicate that it's an executable script.
Scripting languages usually can interact with the command shell that you're running them in. They are interpreted and can evaluate expressions that are provided at runtime. The loosey-goosiness is important and makes them more suited to quick programming jobs. The most common usage is to glue applications to the session, i.e. to set up the environment for some application, clear away temp files, make working directories, check that certain resources are present and then call the application.
https://en.wikipedia.org/wiki/Scripting_language
Java is actually compiled into bytecodes that act as machine code for the JVM. This allows Java to span many computing platforms in a write once run anywhere kind of way. Java doesn't interact well with the command shell. Programmers unhappy with Java have developed Groovy, which is what Java would be if it were a scripting language. It's often used to create domain specific languages (DSLs) and for running snippets of Java code to see how they work, as most Java code runs unaltered in Groovy. It also has some convenience features so that you don't have to provide import statements for the more common Java classes.
https://en.wikipedia.org/wiki/Groovy_(programming_language)
JavaScript in general works only in web pages. However, there's Node.js, as an example, that can run JavaScript in a command shell. Node provides a means to write a lightweight web application server in JavaScript on the server side instead of in Java as a Java servlet.
https://en.wikipedia.org/wiki/Node.js
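The "glue" usage described above (set up the environment, make a working directory, check that resources are present, call the application, clear away temp files) might look roughly like this in Python. It is only a sketch: `run_app` and the `JOB_WORKDIR` variable are names invented for the example, and the "application" is just the Python interpreter printing one line.

```python
#!/usr/bin/env python3
# The "#!" line above lets a Unix shell run this file directly once it is marked executable.
import os
import shutil
import subprocess
import sys
import tempfile

def run_app(command, required_tools=()):
    """Prepare a scratch directory, check prerequisites, run `command`, then clean up."""
    # Check that the resources the application needs are present.
    missing = [tool for tool in required_tools if shutil.which(tool) is None]
    if missing:
        raise RuntimeError("missing tools: " + ", ".join(missing))

    workdir = tempfile.mkdtemp(prefix="job_")        # make a working directory
    env = dict(os.environ, JOB_WORKDIR=workdir)      # set up the environment (hypothetical variable)
    try:
        # Call the application and hand back its exit status.
        return subprocess.run(command, env=env, cwd=workdir).returncode
    finally:
        shutil.rmtree(workdir, ignore_errors=True)   # clear away temp files

# Stand-in "application": the Python interpreter itself printing one line.
status = run_app([sys.executable, "-c", "print('application ran')"])
print("exit status:", status)
```

Nothing here needs a separate compile step, which is the point being made about scripts: edit the file, run it, and edit it again.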

[QUOTE="David Reeves, post: 5688593, member: 608525"]Speaking of scripting languages, consider Lua, which is the most widely used scripting language for game development. Within a development team, some programmers may only need to work at the Lua script level, without ever needing to modify and recompile the core engine. For example, how a certain game character behaves might be controlled by a Lua script. This sort of scripting could also be made accessible to the end users. But Lua is not an interpreted language.[/quote]
This is a good point. "Scripting" is a pretty loose term.

[QUOTE="stevendaryl, post: 5688394, member: 372855"]Something that I was never clear on was what made a "scripting language" different from an "interpreted language"? I don't see that much difference in principle between Javascript and Python, on the scripting side, and Java, on the interpreted side, other than the fact that the scripting languages tend to be a lot more loosey-goosey about typing.[/quote]
You can read the whole Wikipedia articles on "scripting language" and "interpreted language" but this does not really provide a clear answer to your question. In fact, I think there is no definition that would clearly separate scripting from non-scripting languages or interpreted from non-interpreted languages. Here are a couple of quotes from Wikipedia.
"A scripting or script language is a programming language that supports scripts; programs written for a special run-time environment that automate the execution of tasks that could alternatively be executed one-by-one by a human operator."
"The terms interpreted language and compiled language are not well defined because, in theory, any programming language can be either interpreted or compiled."
Speaking of scripting languages, consider Lua, which is the most widely used scripting language for game development. Within a development team, some programmers may only need to work at the Lua script level, without ever needing to modify and recompile the core engine. For example, how a certain game character behaves might be controlled by a Lua script. This sort of scripting could also be made accessible to the end users. But Lua is not an interpreted language.
LISP is not a scripting language. On the other hand, LISP is an interpreted language, but it can also be compiled. You might spend most of your development time working in the interpreter, but once some code is nailed down you might compile it for greater speed, or because you are releasing a compiled version for use by others. Now perhaps someone will jump in and say "LISP can in fact be a scripting language," etc. I would not respond. ;)

[QUOTE="David Reeves, post: 5688215, member: 608525"]One thing I noticed is that although you mention LISP, you did not mention the topic of languages for artificial intelligence. I did not see any mention of Prolog, which was the main language for the famous 5th Generation Project in Japan.[/quote]
This was NOT intended as a thoroughly exhaustive discourse. If you look at the Wikipedia list of languages you'll see that I left out more than I put in but that was deliberate.
[quote]It could also be useful to discuss functional programming languages or functional programming techniques in general.[/quote]
And yes I could have written thousands of pages on all aspects of computing. I chose not to.
[quote]Since you mention object-oriented programming and C++, how about also mentioning Simula, the language that started it all, and Smalltalk, which took OOP to what some consider an absurd level.[/quote]
See above.
[quote]Finally, I do not see any mention of Pascal, Modula, and Oberon. The work on this family of languages by Prof. Wirth is one of the greatest accomplishments in the history of computer languages.[/quote]
Pascal is listed but not discussed. See above.
[QUOTE="stevendaryl, post: 5688394, member: 372855"]Something that I was never clear on was what made a "scripting language" different from an "interpreted language"? I don't see that much difference in principle between Javascript and Python, on the scripting side, and Java, on the interpreted side, other than the fact that the scripting languages tend to be a lot more loosey-goosey about typing.[/quote]
Basically, I think most people see "scripting" in two ways. First is, for example, BASIC which is an interpreted computer language and second is, for example, Perl, which is a command language. The two are quite different but I'm not going to get into that. It's easy to find on the internet.
[QUOTE="jedishrfu, post: 5688439, member: 376845"]One word of clarification on the history of markup is that while HTML is considered to be the first markup language it was in fact adapted from the SGML(1981-1986) standard of Charles Goldfarb by Sir Tim Berners-Lee:[/quote]
NUTS, again. Yes, you are correct. I actually found that all out AFTER I had done the "final" edit and just could not stand the thought of looking at the article for the 800th time so I left it in. I'll make a correction. Thanks.

[QUOTE="stevendaryl, post: 5688394, member: 372855"]Something that I was never clear on was what made a "scripting language" different from an "interpreted language"? I don't see that much difference in principle between Javascript and Python, on the scripting side, and Java, on the interpreted side, other than the fact that the scripting languages tend to be a lot more loosey-goosey about typing.[/quote]
Others more knowledgeable than me will no doubt reply, but I get the sense that scripting languages are a subset of interpreted. So something like Python gets called both, but Java is only interpreted (compiled into bytecode, as Python is also) and not scripted. https://en.wikipedia.org/wiki/Scripting_language

Nice article, [USER=310841]@phinds[/USER]! I would like to point out that some interpreted languages, such as MATLAB, have moved to the JIT (just-in-time) model, where some parts are compiled instead of simply being interpreted.
Also, Fortran is still in use not only because of legacy code. First, there are older physicists like me who never got the hang of C++ or Python. Second, many physical problems are more simply translated to Fortran than other compiled languages, making development faster.

Something that I was never clear on was what made a "scripting language" different from an "interpreted language"? I don't see that much difference in principle between Javascript and Python, on the scripting side, and Java, on the interpreted side, other than the fact that the scripting languages tend to be a lot more loosey-goosey about typing.

Well done Phinds!

One word of clarification on the history of markup is that while HTML is considered to be the first markup language, it was in fact adapted from the SGML (1981-1986) standard of Charles Goldfarb by Sir Tim Berners-Lee:

https://en.wikipedia.org/wiki/Standard_Generalized_Markup_Language

And the SGML (1981-1986) standard was in fact an outgrowth of GML(1969) found in an IBM product called Bookmaster, again developed by Charles Goldfarb who was trying to make it easier to use SCRIPT(1968) a lower level document formatting language:

https://en.wikipedia.org/wiki/IBM_Generalized_Markup_Language

https://en.wikipedia.org/wiki/SCRIPT_(markup)

in between the time of GML(1969) and SGML(1981-1986), Brian Reid developed SCRIBE(1980) for his doctoral dissertation and both SCRIBE(1980) and SGML(1981-1986) were presented at the same conference (1981). Scribe is considered to be the first to separate presentation from content which is the basis of markup:

https://en.wikipedia.org/wiki/Scribe_(markup_language)

and then in 1981, Richard Stallman developed TEXINFO(1981) because SCRIBE(1980) became a proprietary language:

https://en.wikipedia.org/wiki/Texinfo

These early markup languages, GML (1969), SGML (1981), SCRIBE (1980) and TEXINFO, were the first to separate presentation from content.

Before that there were the page formatting languages SCRIPT (1968) and SCRIPT’s predecessor TYPSET/RUNOFF (1964):

https://en.wikipedia.org/wiki/TYPSET_and_RUNOFF

Runoff was so named from “I’ll run off a copy for you.”

All of these languages derived from printer control codes (1958?):

https://en.wikipedia.org/wiki/ASA_carriage_control_characters https://en.wikipedia.org/wiki/IBM_Machine_Code_Printer_Control_Characters

So basically the evolution was:

– program controlled printer control (1958)

– report formatting via Runoff (1964)

– higher level page formatting macros Script (1968)

– intent based document formatting GML (1969)

– separation of presentation from content via SCRIBE (1980)

– standardized document formatting SGML (1981 finalized 1986)

– web document formatting HTML (1993)

– structured data formatting XML (1996)

– Markdown style, John Gruber and Aaron Swartz (2004)

https://en.wikipedia.org/wiki/Comparison_of_document_markup_languages

and back to pencil and paper…

A Printer Code Story

————————

Lastly, the printer codes were always an embarrassing nightmare for a newbie Fortran programmer who would write a thoroughly elegant program that generated a table of numbers and columnized them to save paper, only to find he’d printed a 1 in column 1 and received a box or two of fanfold paper with a note from the printer operator not to do it again.
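For anyone who never met carriage control, the trap in that story can be sketched in Python. With ASA carriage control the printer consumes the character in column 1 of every line as a command, and "1" means "skip to the top of a new page". The table below is a made-up example, and `count_page_ejects` is just an illustrative helper name.

```python
# Standard ASA carriage-control meanings for the character in column 1.
ASA_ACTIONS = {
    " ": "advance one line, then print",
    "0": "advance two lines, then print",
    "1": "skip to the top of the next page, then print",
    "+": "do not advance (overprint the previous line)",
}

def count_page_ejects(lines):
    """Count the lines whose column 1 tells the printer to start a new page."""
    return sum(1 for line in lines if line[:1] == "1")

# The newbie mistake: a left-aligned table whose values 10..19 all start with "1".
rows = [f"{n:<6d}{n * n:>8d}" for n in range(10, 20)]

# The fix: reserve column 1 for the control character (a blank means single spacing).
safe_rows = [" " + row for row in rows]

print(count_page_ejects(rows))       # 10: every row lands on a fresh page
print(count_page_ejects(safe_rows))  # 0: the table prints on one page
```

Ten rows is only ten wasted pages; a table with thousands of rows starting with a 1 is how you end up with the box of fanfold paper.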

I’m sure I’ve left some history out here.

– Jedi

[QUOTE="phinds, post: 5687821, member: 310841"]Yes, there are a TON of such fairly obscure points that I could have brought in, but the article was too long as is and that level of detail was just out of scope.[/quote]
I think the level you kept it at was excellent. Not easy to do.

This looks like an interesting topic. I hope there will be a focus on which languages are most widely used in the math and science community, since this is after all a physics forum.
One thing I noticed is that although you mention LISP, you did not mention functional languages in general. Also, I did not see any mention of Prolog, which was the main language for the famous 5th Generation Project in Japan.
I think it would be interesting to see the latest figures on which are the most popular languages, and why they are so popular. The last time I looked the top three were Java, C, and C++. But that's just one survey, and no doubt some surveys come up with a different result.
Since you mention object-oriented programming and C++, how about also mentioning Simula, the language that started it all, and Smalltalk, which took OOP to what some consider an absurd level.
Finally, I do not see any mention of Pascal, Modula, and Oberon. The work on this family of languages by Prof. Wirth is one of the greatest accomplishments in the history of computer languages.
In any case, I look forward to the discussion.

[QUOTE="rcgldr, post: 5688122, member: 17595"]No mention of plugboard programming.[/quote]
The number of things that I COULD have brought in, that have little or no relevance to modern computing, would have swamped the whole article.

[QUOTE="rcgldr, post: 5688122, member: 17595"]"8080 instruction guide (the CPU on which the first IBM PCs were based)." The first IBM PCs were based on the 8088, same instruction set as the 8086, but only an 8 bit data bus. The Intel 8080, 8085, and Zilog Z80 were popular in the pre-PC based systems, such as S-100 bus systems, …[/quote]
Nuts. You are right of course. I spent so much time programming the 8080 on CP/M systems that I forgot that IBM went with the 8088. I'll make a change. Thanks.

Some comments:
No mention of plugboard programming.
http://en.wikipedia.org/wiki/Plugboard
"8080 instruction guide (the CPU on which the first IBM PCs were based)." The first IBM PCs were based on the 8088, same instruction set as the 8086, but only an 8 bit data bus. The Intel 8080, 8085, and Zilog Z80 were popular in the pre-PC based systems, such as S-100 bus systems, Altair 8800, TRS (Tandy Radio Shack) 80, …, mostly running CP/M (Altair also had its own DOS). There was some overlap as the PCs didn't sell well until the XT and later AT.
APL and Basic are interpretive languages. The section title is high level language, so perhaps it could mention these languages include compiled and interpretive languages.
RPG and RPG II were high level languages, popular for a while for conversion from plugboard based systems into standard computer systems. Similar to plugboard programming, input to output field operations were described, but there was no determined ordering of those operations. In theory, these operations could be performed in parallel.

[QUOTE="256bits, post: 5688040, member: 328943"]No choice. You have sent an ASCII 06. Full Duplex mode? ASCII 07 will get the attention for part 2.[/quote]
Yeah but part 2 is likely to be the alternate interpretation of the acronym for ASCII 08

[QUOTE="phinds, post: 5687860, member: 310841"]AAACCCKKKKK !!! That reminds me that now I have to write part 2.[/quote]
No choice. You have sent an ASCII 06. Full Duplex mode? ASCII 07 will get the attention for part 2.

[QUOTE="Mark44, post: 5687951, member: 147785"]I thought it was spelled ACK… (As opposed to NAK)[/quote]
No, that's a minor ACK. Mine was a heartfelt, major ACK, generally written as AAACCCKKKKK !!!

Very well done, thanks phinds for this insight! Judging from this part 1, it gives a very good general picture touching upon many interesting things.

[QUOTE="phinds, post: 5687860, member: 310841"]AAACCCKKKKK !!![/quote]
I thought it was spelled ACK… (As opposed to NAK)

[QUOTE="Greg Bernhardt, post: 5687834, member: 1"]Great part 1 phinds![/quote]
AAACCCKKKKK !!! That reminds me that now I have to write part 2. Damn !

Great part 1 phinds!

Yes, there are a TON of such fairly obscure points that I could have brought in, but the article was too long as is and that level of detail was just out of scope.

Mainframe assemblers included fairly advanced macro capability going back to the 1960s. In the case of IBM mainframes, there were macro functions that operated on IBM database types, such as ISAM (indexed sequential access method), which was part of the reason for the strange mix of assembly and COBOL in the same programs, which, due to legacy issues, still exists somewhat today.
Self-modifying code – this was utilized on some older computers, like the CDC 3000 series, which included a store instruction that only modified the address field of another instruction, essentially turning an instruction into an instruction + modifiable pointer.
Event driven programming is part of most pre-emptive operating systems and applications, and time sharing systems / applications, again dating back to the 1960s.

[QUOTE="anorlunda, post: 5687568, member: 455902"][USER=310841]@phinds[/USER] , thanks for the trip down memory lane.[/quote]
Oh, I didn't even get started on the early days. No mention of punched card decks, teletypes, paper tape machines and huge clean rooms with white-coated machine operators, to say nothing of ROM burners for writing your own BIOS in the early PC days, and on and on. I could have done a LONG trip down memory lane without really telling anyone much of any practical use for today's world, but I resisted the urge :smile:
My favorite "log cabin story" is this: In one of my early mini-computer jobs, well after I had started working on mainframes, it was a paper tape I/O machine. To do a full cycle, you had to (and I'm probably leaving out a step or two, and ALL of this is using a very slow paper tape machine) load the editor from paper tape, use it to load your source paper tape, use the teletype to do the edit, output a new source tape, load the assembler, use it to load the modified source tape and output an object tape, load the loader, use it to load the object tape, run the program, realize you had made another code mistake, go out and get drunk.

Thanks Jim. Several people gave me some feedback and [USER=147785]@Mark44[/USER] went through it line by line and found lots of my typos and poor grammar. The one you found is one I snuck in after he looked at it :smile:

[USER=310841]@phinds[/USER] , thanks for the trip down memory lane. That was fun to read.
I too started in the machine language era. My first big project was a training simulator (think of a flight simulator) done in binary on a computer that had no keyboard, no printer. All coding and debugging was done in binary with those lights and switches. I had to learn to read floating point numbers in binary.
Then there was the joy of the most powerful debugging technique of all. Namely, the hex dump (or octal dump) of the entire memory printed on paper. That captured the full state of the code & data and there was no bug that could not be found if you just spent enough tedium to find it.
But even that paled compared to the generation just before my time. They had to work on "stripped program" machines. Instructions were read from the drum one-at-a-time and executed. To do a branch might mean (worst case) waiting for one full revolution of the drum before the next instruction could be executed. To program them, you not only had to decide what the next instruction would be, but also where on the drum it was stored. Choices made an enormous difference in speed of execution. My boss did a complete boiler control system on such a computer that had only 3×24 bit registers, and zero RAM memory. And he had stories about the generation before him that programmed the IBM 650 using plugboards.
In boating we say, "No matter how big your boat, someone else has a bigger one." In this field we can say, "No matter how crusty your curmudgeon credentials, there's always an older crustier guy somewhere."

Very well done. Every language discussion thread – 'what language should I use' – should have a reference back to this article. Too many statements in those threads are off target because posters have no clue about origins.
Typo in the Markup language section: "Markup language are"
Thanks for a good article.