An Introduction to Computer Programming Languages

In this Insights Guide, the focus is entirely on types of languages, how they relate to what computers do, and how they are used by people. It is a general discussion and intended to be reasonably brief, so there is very little focus on specific languages. Please keep in mind that for just about any topic mentioned in this article, entire books have been written about that topic, versus a few sentences here.

The primary focus is on

• Machine language

• Assembly language

• Higher Level Languages

And then some discussion of

• interpreters (e.g. BASIC)

• markup languages (e.g. HTML)

• scripting languages (e.g. JavaScript, Perl)

• object-oriented languages (e.g. Java)

• Integrated Development Environments (e.g. Visual Studio for the “.NET Framework”)

Machine Language

Computers work in binary and their innate language is strings of 1’s and 0’s. In my early days, I programmed computers using strings of 1’s and 0’s, input to the machine by toggle switches on the front panel. You don’t get a lot done this way but fortunately, I only had to input a dozen or so instructions, which made up a tiny “loader” program. That loader program would then read in more powerful programs from magnetic tape. It was possible to do much more elaborate things directly via toggle switches, but remembering EXACTLY (mistakes are not allowed) which string of 1’s and 0’s represented which instruction was a pain.

SO … very early on the concept of assembly language arose.

Assembly Language

This is where you represent each machine instruction’s string of 1’s and 0’s by a human-language word or abbreviation, such as “add” or “ad” and “load” or “ld”. These sets of human-readable “words” are translated to machine code by a program called an assembler.

Assemblers have an exact one-to-one correspondence between each assembly language statement and the corresponding machine language statement. This is quite different from high-level languages where compilers can generate dozens of machine language statements for a single high-level language statement. Assemblers are a step away from machine language in terms of usability but when you program in assembly you are programming in machine language. You are using words instead of the strings of 1’s and 0’s but assembly language is direct programming of a computer, with full control over data flow, register usage, I/O, etc. None of those are directly accessible in higher-level languages.
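The one-to-one translation described above can be sketched with a toy assembler. This is only an illustration: the mnemonics, the opcode values, and the 8-bit word layout (4 bits of operation, 4 bits of address) are invented for the example and do not belong to any real machine.

```python
# Toy assembler: each assembly statement becomes exactly one machine word.
# The mnemonics and opcode values are invented for illustration only.
OPCODES = {
    "load": 0b0001,
    "add": 0b0010,
    "sub": 0b0011,
    "store": 0b0100,
}

def assemble(source):
    """Translate one statement per line into one 8-bit word per line."""
    words = []
    for line in source.strip().splitlines():
        mnemonic, operand = line.split()
        # High 4 bits: the operation. Low 4 bits: a memory address (0-15).
        word = (OPCODES[mnemonic] << 4) | int(operand)
        words.append(format(word, "08b"))
    return words

program = """
load 5
add 6
store 7
"""

machine_code = assemble(program)
for word in machine_code:
    print(word)
```

Three statements go in and three machine words come out, which is the property that separates an assembler from a compiler.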

Every computer has its own set of machine language instructions, and so every machine has its own assembly language. This can be a bit frustrating when you have to move to a new machine and learn the assembly language all over again. Having learned one, it’s fairly easy to learn another, but it can be very annoying to have to remember that a simple word in one assembly language (e.g. “load”) is slightly different (e.g. “ld”) or even considerably different (e.g. “mov”) in another. Also, machines have slightly different instruction sets, which means you not only have new “words” to learn, you also lose some instructions and gain others. All this means there can be a time-consuming focus on the implementation of an algorithm instead of just being able to focus on the algorithm itself.

The good news about assembly language is that you are not programming some “pillow” that sits between you and the machine, you are programming the machine directly. This is most useful for writing things like device drivers where you need to be down in the exact details of what the machine is doing. Assembly also allows you to do the best possible algorithm optimization, but for complex algorithms, you would need a VERY compelling reason indeed to go to the trouble of writing them in assembly, and with today’s very fast computers, that’s not likely to occur.

The bad news about assembly language is that you have to tell the machine EVERY ^$*&@^#*! step that you want it to perform, which is tedious. An algebraic statement such as

A = B + COSINE(C) – D

has to be turned into a string of instructions something like what follows (which is a pseudo assembly language that is MUCH more verbose than actual assembly)

• Load memory location C to register 1

• Call subroutine “Cosine” [thus getting the cosine of what’s in register 1 back into register 1]

• Load memory location B to register 2

• Add registers 1 and 2 [thus putting the sum into register 1]

• Load memory location D to register 2

• Subtract register 2 from register 1 [thus putting the difference into register 1]

• Save register 1 to memory location A [final value]
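The steps above can be followed one at a time in a short simulation. This is a sketch under stated assumptions: the two-register “machine”, the dictionary standing in for memory, and the sample values of B, C, and D are all made up for the demonstration.

```python
import math

# Hypothetical memory contents; the values are arbitrary demo inputs.
memory = {"A": 0.0, "B": 2.0, "C": 0.0, "D": 0.5}
registers = {1: 0.0, 2: 0.0}

def cosine_subroutine():
    """Replace the value in register 1 with its cosine."""
    registers[1] = math.cos(registers[1])

registers[1] = memory["C"]                  # load C to register 1
cosine_subroutine()                         # call subroutine "Cosine"
registers[2] = memory["B"]                  # load B to register 2
registers[1] = registers[1] + registers[2]  # add, sum goes to register 1
registers[2] = memory["D"]                  # load D to register 2
registers[1] = registers[1] - registers[2]  # subtract, difference to register 1
memory["A"] = registers[1]                  # save register 1 to A

print(memory["A"])  # prints 2.5 for the sample values above
```

Seven explicit steps are needed for what a high-level language writes as a single line.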

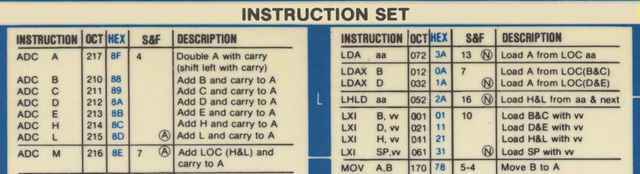

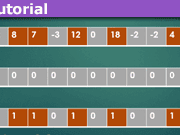

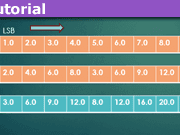

And that’s a VERY simple algebraic statement, and you have to specify each of those steps. In the right order. Without any mistakes. It will make your head hurt. Just to give you some of the flavor of assembly, here’s a very small portion of an 8080 instruction guide (the 8080 was a CPU used in early personal computers). Note that both the octal and hex versions of the binary code are given. These were used during debugging.
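To show how the same instruction looks in binary, octal, and hex, here is a small sketch that prints a few 8080 opcodes in all three forms. The four opcode values are from the published Intel 8080 instruction set; the helper name describe is made up for this example.

```python
# A few Intel 8080 opcodes, each one byte long.
OPCODES = {
    "NOP": 0x00,      # do nothing
    "MOV A,B": 0x78,  # copy register B into register A
    "ADD B": 0x80,    # add register B to the accumulator
    "HLT": 0x76,      # halt the processor
}

def describe(opcode):
    """Return the opcode as (binary, octal, hex) strings."""
    return format(opcode, "08b"), format(opcode, "03o"), format(opcode, "02X")

for mnemonic, opcode in OPCODES.items():
    binary, octal, hexadecimal = describe(opcode)
    print(f"{mnemonic:8} {binary}  {octal}  {hexadecimal}")
```

Octal suited the 8080 because its opcode byte splits into fields of 2, 3, and 3 bits, so each octal digit corresponds to one field: MOV A,B is 01 111 000, or 170 in octal.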

Because of the tediousness of all this, compilers were developed.

COMPILERS / HIGHER-LEVEL LANGUAGES

Compilers take away the need for assembly language to specify the minutiae of the machine code-level manipulation of memory locations and registers. This makes it MUCH easier to concentrate on the algorithm that you are trying to implement rather than the details of the implementation. Compilers take complex math and semi-human language statements and translate them into machine code to be executed. The bad news is that when things go wrong, figuring out WHAT went wrong is more complicated than in machine/assembly code because the details of what the machine is doing (which is where things go wrong) are not obvious. This means that sophisticated debuggers are required for error location and correction. As high-level languages add layers of abstraction, the distance between what you as a programmer are doing and what the machine is doing down at the detailed level gets larger and larger. This could make debugging things intolerable, but the good news is that today’s programming environments are just that; ENVIRONMENTS. That is, you don’t usually program a computer language anymore, you program an Integrated Development Environment (IDE) and it includes extensive debug facilities to ease the pain of figuring out where you have gone wrong when you do. And you will. Inevitably.
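The way one high-level statement expands into many low-level instructions can be glimpsed with Python’s standard dis module. This is an analogy only: Python compiles to bytecode for its own virtual machine, not to machine code, and the exact instructions differ from one Python version to another. The variable names and the function name cosine are placeholders; nothing is executed, so they need not exist.

```python
import dis

# One high-level statement, compiled but never run.
source = "a = b + cosine(c) - d"
code = compile(source, "<example>", "exec")

# List the low-level instructions the compiler produced for it.
instructions = list(dis.get_instructions(code))
for instruction in instructions:
    print(instruction.opname, instruction.argrepr)

print(len(instructions), "bytecode instructions for 1 statement")
```

A compiler that targets real hardware does the same kind of expansion, with register allocation and memory addressing added on top.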

Early high level languages were:

• Fortran (mid-1950’s)

• Autocode (1952)

• ALGOL (late 1950’s)

• COBOL (1959)

• LISP (late 1950’s)

• APL (1960’s)

• BASIC (1964)

• PL/1 (1964)

• C (early 1970’s)

Since then there have been numerous developments, including

• Ada (late 70’s, early 80’s)

• C++ (1985)

• Python (begun in the late 1980’s, first released in 1991)

• Perl (1987)

• Java (early 1990’s)

• JavaScript (mid-1990’s)

• Ruby (mid-1990’s)

• PHP (1994)

• C# (2002)

There were others along the way but they were of relatively little impact compared to the ones listed above. Even the ones named have had varying degrees of utility, popularity, widespread use, and longevity. One of the lesser-known ones was JOVIAL, a variant of ALGOL (which was originally called the “International Algorithmic Language”). I mention JOVIAL only because the name always tickled me. It was developed by Jules Schwartz and JOVIAL stands for “Jules’ Own Version of the International Algorithmic Language”. I enjoy his lack of modesty.

A special note about the C programming language. C caught on over time as one of the most widely used computer languages of its time, and the syntax rules (and even some of the statements) of several more modern languages come directly from C. The kernels of the UNIX, Windows, and Apple operating systems are written in C (with some assembly for device drivers), as is some of the rest of each operating system’s code. Unix is written almost entirely in C and requires a C compiler. Because of the way it was developed and implemented, C is, by design, about as close to assembly language as you can get in a high-level language. Certainly, it is closer than other high-level languages. Java is perhaps more widely used today but C is still a popular language.

INTERPRETERS / SCRIPTING LANGUAGES / COMMAND LANGUAGES

Interpreters are unlike assemblers and compilers in that they do not generate machine code into an object file and then execute that. Rather, they interpret each statement into machine code one statement (or even just parts of statements) at a time and perform the necessary calculations/manipulations, saving intermediate results as necessary. The use of registers and such low-level detail is completely hidden from the user. The bad news about interpreters is that they are slower than mud compared to executable code generated by assemblers and compilers but that’s much less of an issue today given the speed of modern computers. The particularly good news is that they are amenable to on-the-fly debugging. That is, you can break a program at a particular instruction, change the values of the variables being processed, and even change the subsequent instructions and then resume interpretation. This was WAY more important in the early days of computers when doing an edit-compile-run cycle could require an overnight submission of your program to the computer center.

These days computers are so fast and so widely available that the edit-compile-run cycle is relatively trivial, taking minutes or even less as opposed to half a day. One thing to note about interpreted languages is that because of the sequential interpretation of the statements, you can have syntax errors that don’t raise any error flags until the attempt is made to interpret them. Assemblers and compilers process your entire program before creating executable object code and so are able to find syntax errors prior to run-time. Some interpreters do a pre-execution pass and do find syntax errors prior to running the program. Even when they don’t, dealing with syntax errors is less painful than with compilers because, given the nature of interpreters, you can simply fix the syntax error during run-time (“interpret-time” actually) and continue on. The first widely used interpreted language was BASIC (there were others, but they were less widely known). Another type of scripted language is JavaScript, which is interpreted one step at a time like BASIC. It is not designed to be changed on the fly the way BASIC statements can be, but rather to be embedded in HTML files (see Markup Languages below).
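The point about syntax errors going unnoticed until the interpreter reaches them can be sketched with a tiny statement-at-a-time interpreter. The three-command language here (set, add, print) is invented for the example and is not BASIC or any real language.

```python
def run(lines):
    """Interpret statements one at a time; return the variables and any error."""
    variables = {}
    for number, line in enumerate(lines, start=1):
        parts = line.split()
        if len(parts) == 3 and parts[0] == "set":
            variables[parts[1]] = int(parts[2])
        elif len(parts) == 3 and parts[0] == "add":
            variables[parts[1]] += int(parts[2])
        elif len(parts) == 2 and parts[0] == "print":
            print(variables[parts[1]])
        else:
            # The bad statement is only noticed when execution reaches it.
            return variables, f"syntax error on line {number}: {line!r}"
    return variables, None

program = [
    "set x 1",
    "add x 41",
    "print x",   # runs fine and prints 42
    "ad x 1",    # misspelled command, not detected until this point
    "print x",
]

variables, error = run(program)
print(error)
```

The first three statements run and produce output before the misspelled fourth one is ever examined, and the variables are still intact at that point. That is what makes fix-and-continue debugging possible in an interpreter.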

A very important type of scripting language is the command script language, whose programs are also called “shell scripts”. Early PC system control was done with the DOS command interpreter, which is one such. Probably the most well-known scripting language used for this kind of work is Perl. In its heyday, it was the most widely used system administrator’s language for automating operational and administrative tasks. It is still widely used and there are a lot of applications still running that have been built in Perl. It runs on just about all operating systems and still has a very active following because of its versatility and power.

MARKUP LANGUAGES

The most widely used markup language is HTML (Hypertext Markup Language). It defines sets of rules for encoding documents in a format that is both human-readable and machine-readable. You will often hear the phrase “HTML code”. Technically this is a misnomer since HTML is NOT “code” in the original sense because its purpose is not to result in any machine code. HTML is just data. It does not get translated to machine language and executed. It is read as input data. HTML is read by a program called a browser and is used not to specify an algorithm but rather to describe how a web page should look. HTML doesn’t specify a set of steps, it “marks up” the look and feel of how a browser creates a web page.

To go at this again, because it’s fairly important to understand the difference between markup languages and other languages, consider the following. Assembled and compiled languages result in what is called object code. That is, a direct machine language result of the assembly or compilation, and the object code persists during execution. Interpreted languages are interpreted directly AT execution time so clearly exist during execution. Markup languages are different in that they do not persist during execution and in fact do not themselves result directly in any execution at all. They provide information that allows a program called a browser to create a web page with a particular look and feel. So HTML is not code in the sense that a program is code; it is just data to be used by the browser program. A significant adjunct to HTML is CSS (Cascading Style Sheets) which can be used to further refine the look and feel of a web page. The latest version of HTML, HTML5, is a significant increase in the power and flexibility of HTML but further discussion of CSS and HTML5 is beyond the scope of this article. Another significant markup language is XML, also beyond the scope of this article.
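The idea that HTML is data read by a program, rather than a program itself, can be sketched with Python’s standard html.parser module. The class name TagLister and the sample page are made up for the example, and a real browser does vastly more than this.

```python
from html.parser import HTMLParser

class TagLister(HTMLParser):
    """Reads HTML as input data, recording the tags and the text it finds."""

    def __init__(self):
        super().__init__()
        self.tags = []
        self.text = []

    def handle_starttag(self, tag, attrs):
        self.tags.append(tag)

    def handle_data(self, data):
        if data.strip():
            self.text.append(data.strip())

page = "<html><body><h1>Hello</h1><p>Some <b>bold</b> text.</p></body></html>"

reader = TagLister()
reader.feed(page)
reader.close()

print(reader.tags)
print(reader.text)
```

Nothing in the page “runs”. All of the behavior lives in the program doing the reading, which decides what each tag means.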

You can embed procedural code inside an HTML file in the form of, for example, JavaScript and the browser will treat it differently than the HTML lines. JavaScript is generally implemented as an interpreted script language but there are compilers for it. The use of JavaScript is pretty much a requirement for web pages that are interactive on the client side. Web servers have their own set of procedural code (e.g. PHP) that can be embedded in HTML and used to change what is served to the client. The browser does not use the JavaScript code as input data but rather treats it as a program to be executed before the web page is presented and even while the web page is active. This allows for dynamic web pages where user input can cause changes to the web page and the manipulation and/or storage of information from the user.

INTEGRATED DEVELOPMENT ENVIRONMENTS

Programming languages these days come with an entire environment surrounding them, including for example object creation by drag and drop. You don’t program the language so much as you program the environment. The number of machine language instructions generated by a simple drag and drop of a control onto the window you are working on can be enormous. You don’t need to worry about such things because the environment takes care of all that. The compiler is augmented in the environment by automatic linkers, loaders, and debuggers so you get to focus on your algorithm and the high-level implementation and not worry about the low-level implementation. These environments are now called “Integrated Development Environments” (IDEs) which is a very straightforward description of exactly what they are. They include a compiler, a debugger, and a help tool, as well as additional facilities that can be extremely useful (such as a version control system, a class browser, an object browser, support for more than one language, etc.). These extensions will not be explored in this article.

Because the boundary between a full-bore IDE and a less powerful programming environment is vague, it is quite arguable just how long IDEs have existed but I think that it is fair to say that in their current very powerful incarnations they began to evolve seriously in the 1980s and came on even stronger in the ’90s. Today’s incarnations are more evolutionary from the ’80s and ’90s than the more revolutionary nature of what was happening in those decades.

So to summarize, the basic types of computer languages are

• assembler

• compiler

• interpreted

• scripting

• markup

• database (not discussed in this article)

and each has its own set of characteristics and uses.

Within the confines of those general characteristics, there are a couple of other significant categorizations of languages, the most notable being object-oriented languages and event-driven languages. These are not themselves languages but they have a profound effect on how programs work so deserve at least a brief discussion in this article.

OBJECT-ORIENTED LANGUAGES

“Normal” compiled high-level languages have subroutines that are very rigid in their implementation. They can, of course, have things like “if … then” statements that allow for different program paths based on specific conditions but everything is well defined in advance. “Objects” are sets of data and code that work together in a more flexible way than exists in non-object-oriented programming.
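The idea of data and code working together in one object can be sketched as follows. The Account and SavingsAccount classes are hypothetical examples, not taken from any real library.

```python
class Account:
    """Bundles data (owner, balance) with the code that works on it."""

    def __init__(self, owner, balance=0):
        self.owner = owner
        self.balance = balance

    def deposit(self, amount):
        self.balance += amount

    def describe(self):
        return f"{self.owner}: {self.balance}"

class SavingsAccount(Account):
    """Extends Account without changing any of the existing code."""

    def __init__(self, owner, balance=0, rate=0.05):
        super().__init__(owner, balance)
        self.rate = rate

    def describe(self):
        return super().describe() + f" (earning {self.rate:.0%})"

accounts = [Account("Ann", 100), SavingsAccount("Bob", 200)]
for account in accounts:
    account.deposit(50)
    # The same call does the right thing for each kind of account.
    print(account.describe())
```

The loop does not need to know which kind of account it is handling. That is one form of the flexibility referred to above.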

C++ tacked object orientation onto C but object orientation is not enforced. That is, you can write a pure, non-object program in C++ and the compiler is perfectly happy to compile it much as a C compiler would. Data and code are not required to be part of objects. They CAN be but they don’t have to be. Java, on the other hand, is much closer to a “pure” object-oriented language. All code, and nearly all data, must be part of a class (the exceptions are a handful of primitive types such as int). C# (“C sharp”) is Microsoft’s attempt to create a C-family language that is object-oriented in the same way as Java. C# originated at Microsoft and was at first tied to Windows, but the language has since been standardized and open-source implementations run on Linux and macOS as well. I don’t know if it is still true, but an early comment about C# was “C# is sort of Java with reliability, productivity, and security deleted”.

Objects, and OOP, have great flexibility and extensibility but getting into all of that is beyond the scope of this modest discussion of types of languages.

EVENT-DRIVEN PROGRAMMING

“Normal” compiled high-level languages are procedural. That is, they specify a strictly defined set of procedures. It’s like driving a car. You go straight, turn left or right, stop then go again, and so forth, but you are following a specific single path, a procedural map.

“Event-driven” languages such as VB.NET are not procedural, they are, as the name clearly states, driven by events. Such events include, for example, a mouse-click or the press of a key. Having subroutines that are driven by events is not new at all. Even in the early days of computers, things like a keystroke would cause a system interrupt (a hardware action) that would cause a particular subroutine to be called. BUT … the integration of such event-driven actions into the very heart of programming is a different matter and is common in modern IDEs in ways that are far more powerful than early interrupt-driven system calls. It’s somewhat like playing a video game where new characters can pop up out of nowhere and characters can teleport to a different place and the actions of one character affect the actions of other characters. Like OOP, event-driven programming has its power and pitfalls but those are beyond the scope of this article. Both Apple operating systems and Microsoft operating systems strongly support event-driven programming. It’s pretty much a necessity in today’s world of highly interactive, window-based computer screens.
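The core of event-driven programming, subroutines that run when an event arrives rather than in a fixed order, can be sketched in a few lines. The functions on and fire and the event names are invented for the example; real GUI frameworks provide their own, much richer, versions.

```python
handlers = {}  # event name -> list of subroutines to call
log = []       # record of what the handlers did

def on(event_name, handler):
    """Register a subroutine to be called when the named event occurs."""
    handlers.setdefault(event_name, []).append(handler)

def fire(event_name, payload):
    """Deliver an event to every subroutine registered for it."""
    for handler in handlers.get(event_name, []):
        handler(payload)

on("click", lambda position: log.append(f"clicked at {position}"))
on("key", lambda key: log.append(f"pressed {key}"))

# Events arrive in whatever order the user produces them,
# not in an order fixed by the program.
for name, payload in [("key", "a"), ("click", (10, 20)), ("key", "b")]:
    fire(name, payload)

print(log)
```

The program defines what should happen for each kind of event, and the order in which things actually happen is decided by the events themselves.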

GENERATIONS OF LANGUAGES

You’ll read in the literature about “second-generation”, “third-generation”, and so forth, computer languages. That’s not much discussed these days and I’ve never found it to be particularly enlightening or helpful. However, just for completeness, here’s a rough summary of “generations”. One reason I don’t care for this is that you can get into pointless arguments about exactly where something belongs on this list.

• first generation = machine language

• second generation = assembly language

• third generation = high level languages

• fourth generation = database and scripting languages

• fifth generation = Integrated Development Environments

Newer languages don’t replace older ones. Newer ones extend the capabilities and usability and are sometimes designed for specific reasons, such as database languages, rather than general use, but they don’t replace older languages. C, for example, is now 40+ years old but still in very wide use. Fortran is even older, and yet because of the ENORMOUS number of scientific subroutines that were created for it over decades, it is still in use in some areas of science.

[NOTE: I had originally intended to have a second Insights article covering some of the more obscure topics not covered in this one, but I’m just not going to ever get around to it so I need to stop pretending otherwise]

Studied EE and Comp Sci a LONG time ago, now interested in cosmology and QM to keep the old gray matter in motion.

Woodworking is my main hobby.
