LHC Part 3: Protons as Large as a Barn


Part 1: LHC about to restart – some FAQs

Part 2: Commissioning

Part 3: Protons as Large as a Barn

Part 4: Searching for New Particles and Decays


Introduction

See also part 1 and part 2 of the series.

The LHC commissioning phase is coming to an end. On Wednesday, May 20th 2015, the first collisions at full energy (13 TeV) took place, a world record and very exciting for the experiments. The collision rate was still low, of course. To predict it, physicists use the concept of cross-sections: the cross-section is the effective area of the target, so the smaller it is, the harder the target is to hit and the fewer collisions you get. Cross-sections can also be given for specific collision processes, like the cross-section for a collision that produces a Higgs boson. This is not a classical area, but the same formulas still apply.

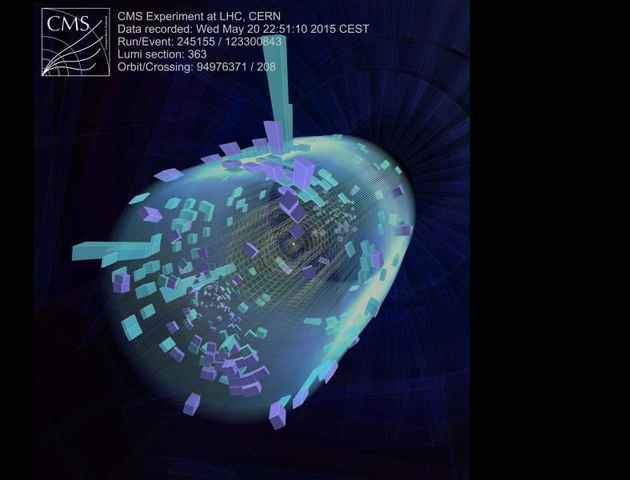

One of the first 13 TeV collisions at the LHC, seen by CMS.

Such event displays are mainly used for press releases.

Before particle physics used it, this concept first became important for nuclear reactors and weapons. Fission produces neutrons that have to hit other uranium nuclei to induce more fission. The area of a uranium nucleus (viewed from one side) is about ##10^{-28}\,m^2##. Working with such powers of ten is impractical, so physicists invented a new unit: for nuclear physics, a whole uranium nucleus is “as large as a barn”. Therefore, ##1\,b = 10^{-28}\,m^2##. This is combined with SI prefixes to make millibarn (mb), microbarn (µb), nanobarn (nb) and so on.
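A minimal Python sketch of the unit bookkeeping, assuming only the definition ##1\,b = 10^{-28}\,m^2## and the standard SI prefixes. The `PREFIX` table and the helper names are illustrative, not taken from any library.

```python
# Illustrative helpers for converting (prefixed) barns to SI areas.
BARN_M2 = 1e-28  # definition: 1 b = 10^-28 m^2

# SI prefixes applied to the barn ("ub" stands in for microbarn, µb).
PREFIX = {"b": 1.0, "mb": 1e-3, "ub": 1e-6, "nb": 1e-9, "pb": 1e-12, "fb": 1e-15}


def to_m2(value: float, unit: str) -> float:
    """Convert an area given in (prefixed) barns to square meters."""
    return value * PREFIX[unit] * BARN_M2


def to_cm2(value: float, unit: str) -> float:
    """Convert an area given in (prefixed) barns to square centimeters."""
    return to_m2(value, unit) * 1e4  # 1 m^2 = 10^4 cm^2


# The proton-proton cross-section quoted below, 100 mb, is about 1e-29 m^2.
pp_m2 = to_m2(100, "mb")
```

For example, `to_cm2(1, "nb")` gives about ##10^{-33}\,cm^2##, the conversion that links the two forms of the design luminosity quoted below.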

At the LHC

Proton-proton collisions at the LHC have a cross-section of about 100 mb.

Physicists describe the LHC capability with the concept of luminosity: the collision rate divided by the cross-section. As an example, a luminosity of ##\frac{10}{b \cdot s}## combined with the proton-proton cross-section of 100 mb gives ##\frac{10}{b \cdot s} \cdot 100\,mb = \frac{1000\,mb}{b \cdot s} = \frac{1}{s}##, or 1 collision per second. If you multiply the luminosity by time, you get the so-called “integrated luminosity”. Another multiplication by the cross-section gives the total number of events.
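The same bookkeeping as a small Python sketch, working in barns and seconds so that the units cancel by hand. It assumes a constant luminosity over the whole time interval, which is a simplification, and the function names are hypothetical.

```python
# Luminosity bookkeeping in barns and seconds (illustrative helper names).
MB_IN_B = 1e-3  # 1 mb = 1e-3 b


def collision_rate(lumi_per_b_s: float, sigma_b: float) -> float:
    """Events per second = luminosity [1/(b s)] * cross-section [b]."""
    return lumi_per_b_s * sigma_b


def expected_events(lumi_per_b_s: float, seconds: float, sigma_b: float) -> float:
    """Integrated luminosity (luminosity * time, in 1/b) times the cross-section."""
    integrated_lumi_per_b = lumi_per_b_s * seconds
    return integrated_lumi_per_b * sigma_b


# The example from the text: 10/(b s) and 100 mb give 1 collision per second.
rate = collision_rate(10, 100 * MB_IN_B)
# One hour at that constant luminosity: about 3600 collisions.
events = expected_events(10, 3600, 100 * MB_IN_B)
```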

The LHC design luminosity is ##\frac{10^{34}}{cm^2 \cdot s} = \frac{10}{nb \cdot s}##, or about 1 billion collisions per second (all these numbers apply to ATLAS and CMS separately). 75% of the design value was achieved in 2012; the plan for 2015 is about 150%.
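A short Python check of the unit conversion and the resulting collision rates, using only numbers from the text. The names are illustrative; `rate_2012` and `rate_2015_plan` simply scale the design rate by 75% and 150%.

```python
# Convert the design luminosity from 1/(cm^2 s) to 1/(nb s) and estimate the rate.
NB_IN_CM2 = 1e-33  # 1 nb = 1e-9 b = 1e-9 * 1e-24 cm^2


def per_cm2_s_to_per_nb_s(lumi_per_cm2_s: float) -> float:
    """1/(cm^2 s) -> 1/(nb s): multiply by the number of cm^2 in one nb."""
    return lumi_per_cm2_s * NB_IN_CM2


design_lumi = per_cm2_s_to_per_nb_s(1e34)  # about 10 per nb per second
sigma_pp_nb = 100e6                        # 100 mb = 1e8 nb
design_rate = design_lumi * sigma_pp_nb    # about 1e9 collisions per second

rate_2012 = 0.75 * design_rate             # 75% of design
rate_2015_plan = 1.5 * design_rate         # planned ~150% of design
```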

Searching for particles

Most collisions are boring, so let’s look at rare, interesting collisions:

The cross-section for producing a Higgs boson is about 20 pb at the 2012 energy and 50 pb at the 2015 energy. Multiply this by the design luminosity and you get a Higgs boson every 5 seconds (2012) or one every 2 seconds (2015).
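The waiting time between Higgs bosons is just the inverse of the rate. A minimal Python sketch, assuming the design luminosity of ##\frac{10}{nb \cdot s}## from above; the helper name is hypothetical.

```python
# Mean time between Higgs bosons at the LHC design luminosity.
DESIGN_LUMI_PER_NB_S = 10.0  # 1e34 / (cm^2 s) expressed in 1/(nb s)
PB_IN_NB = 1e-3              # 1 pb = 1e-3 nb


def seconds_per_event(sigma_nb: float, lumi_per_nb_s: float = DESIGN_LUMI_PER_NB_S) -> float:
    """Average waiting time = 1 / rate = 1 / (luminosity * cross-section)."""
    return 1.0 / (lumi_per_nb_s * sigma_nb)


wait_2012 = seconds_per_event(20 * PB_IN_NB)  # about 5 s
wait_2015 = seconds_per_event(50 * PB_IN_NB)  # about 2 s
```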

If you multiply the cross-section by the integrated luminosities of 2011 and 2012, you find that the LHC produced about 80 000 and 400 000 Higgs bosons, respectively. So why did it take until mid-2012 to discover it? Well, those events were hidden among 2 500 000 000 000 000 (2.5 quadrillion) other collisions! Finding a needle in a haystack is trivial compared to that. To make it worse, most Higgs decays are indistinguishable from other processes. Only a few rare decay types are clear enough to be separated from other events.
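A back-of-the-envelope Python sketch for 2012, using only the cross-sections and Higgs count quoted above. The integrated luminosity here is inferred by dividing those numbers, not an official figure; the resulting ~2 quadrillion collisions for 2012 alone is of the same order as the 2.5 quadrillion quoted for 2011 and 2012 together.

```python
# Back-of-the-envelope numbers for 2012, using only values quoted in the text.
SIGMA_HIGGS_PB = 20.0  # Higgs production cross-section at the 2012 energy
SIGMA_PP_PB = 1e11     # 100 mb = 0.1 b = 1e11 pb

# Fraction of collisions that produce a Higgs boson: about 2e-10,
# i.e. roughly one Higgs boson per 5 billion collisions.
higgs_fraction = SIGMA_HIGGS_PB / SIGMA_PP_PB

# Integrated luminosity implied by "about 400 000 Higgs bosons" in 2012
# (an inferred value, in 1/pb): about 2e4 / pb, i.e. about 20 / fb.
implied_lumi_inv_pb = 400_000 / SIGMA_HIGGS_PB

# Total collisions for that integrated luminosity: about 2e15.
collisions_2012 = implied_lumi_inv_pb * SIGMA_PP_PB
```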

There are searches for particles with even smaller cross-sections, where the experiments would be happy to get a few of them in a year. Those searches would be impossible without the very high luminosity of the LHC.

Plans for 2015

There are still some analyses ongoing with the 2011 and 2012 collisions, but the main focus is now on new data. As mentioned in part 2, commissioning takes time. The experiments will start regular data taking in early June at a low collision rate, and the machine operators will increase the number of protons, and with it the collision rate, over time. The experiments hope to collect about 1/20 of the 2012 number of collisions in June and July. After a short break in August, the plan is to reach about half the 2012 number of collisions by November. The higher energy still makes the 2015 collisions much more valuable than those recorded so far: new particles could be discovered even with the data from June and July!

Working on a PhD for one of the LHC experiments. Particle physics is great, but I am interested in other parts of physics as well.

“I can imagine that a higher production rate is more helpful to produce larger statistics, which makes it easier to spot specific types of particles such as the Higgs boson, but doesn’t it add ‘noise’ and hinder finding more subtle particles with a longer lifespan?” Better statistics is the stronger argument. Models with long-lived heavy particles are very exotic: you have to tune the interactions in just the right way to suppress decays by orders of magnitude. And you would only gain for lifetimes between 10 and 30 nanoseconds, a tiny range within the ~20 orders of magnitude spanned by known particle lifetimes. The focus is on possible new short-lived particles and on studies of the known particles.

There is a different negative effect: not all detectors are fast enough. The large LHCb tracker, for example, includes drift tubes that need up to ~100 nanoseconds to collect their full signal. With a 25 ns bunch spacing, they see the signals from four bunch crossings combined.

“I mentioned it more vaguely, in the sense of a [URL='http://en.wikipedia.org/wiki/Centuria#Military']Centuria[/URL] going into battle … the ones clashing in the front … but thanks for clearing it up precisely.” To get the scale right, you have about one man per light second (300 000 km) of your “front line”.

Great reply, thanks! This could have been a ‘PF Insights post’ on its own.

“so far most of the time the LHC used 50 nanoseconds but we had 25 ns and it is planned for the future”

I can imagine that a higher production rate is more helpful to produce larger statistics, which makes it easier to spot specific types of particles such as the Higgs boson, but doesn’t it add ‘noise’ and hinder finding more subtle particles with a longer lifespan?

“What do you mean by “front of the 100 mb”? A cross-section is not a distance.”

I mentioned it more vaguely, in the sense of a [URL='http://en.wikipedia.org/wiki/Centuria#Military']Centuria[/URL] going into battle … the ones clashing in the front … but thanks for clearing it up precisely.

“With so many collisions going on, there could be some exotic quark-gluon plasma being formed that has a certain lifespan and breaks up into new particles emerging a fraction later” All those processes happen at the timescales of the strong and electromagnetic interactions, about ##10^{-22}## seconds or shorter. The ratio to the time between bunch crossings (25 ns – so far most of the time the LHC used 50 nanoseconds but we had 25 ns and it is planned for the future – I’ll use 25 here) is more than 14 orders of magnitude.

Produced particles are extremely short-lived (as above) and/or ultrarelativistic, which means they or their decay products fly away at nearly the speed of light. By the time the next bunch crossing occurs, they are 7.5 meters away from the collision point. They are still in the detector at that time: the inner detectors start seeing new collisions while the products from previous collisions are still flying through the outer detector, but the two are well separated by those 7.5 meters. ATLAS is 46 m long with the collision point at its center, so particles travel up to about 23 m along the beam axis before leaving it; products from three to four bunch crossings are therefore in the detector at the same time. This is taken into account in the readout and data processing, of course.
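The timing arithmetic from this reply as a short Python sketch. Treating all products as moving at the speed of light and using the ATLAS half-length (collision point at the center) are simplifying assumptions.

```python
import math

C_M_PER_S = 299_792_458.0   # speed of light
BUNCH_SPACING_S = 25e-9     # bunch spacing used in this reply
STRONG_TIMESCALE_S = 1e-22  # order of magnitude of strong/EM processes

# Orders of magnitude between the interaction timescale and the bunch spacing (~14.4).
orders = math.log10(BUNCH_SPACING_S / STRONG_TIMESCALE_S)

# Distance an ultrarelativistic particle covers between two crossings (~7.5 m).
spacing_m = C_M_PER_S * BUNCH_SPACING_S

# ATLAS is 46 m long with the collision point at its center, so particles
# travel at most about 23 m along the beam axis before leaving it.
half_length_m = 46.0 / 2

# Number of 7.5 m "shells" of collision products that fit inside (~3.1),
# i.e. three to four bunch crossings in the detector at once.
crossings_in_detector = half_length_m / spacing_m
```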

What do you mean by “front of the 100 mb”? A cross-section is not a distance.

The bunches are a few centimeters long, and all collisions in a bunch crossing (up to 30-40, see above) happen within a fraction of a nanosecond. The detectors record all their products together, and the separation of the collisions along the beam axis is used to figure out which track came from which collision.
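A toy Python sketch of both points. The 7.5 cm bunch length is a hypothetical value within “a few centimeters”, and the nearest-vertex rule is a deliberately simplified stand-in for the dedicated vertex-finding and track-to-vertex association the experiments actually use; the positions are made up for illustration.

```python
C_M_PER_S = 299_792_458.0  # speed of light

# Hypothetical bunch length; the text only says "a few centimeters".
bunch_length_m = 0.075
# Time for such a bunch to pass a fixed point at about c (~0.25 ns).
bunch_passage_s = bunch_length_m / C_M_PER_S


def assign_tracks(track_z_mm, vertex_z_mm):
    """Assign each track to the vertex closest to it along the beam axis (z).

    Toy nearest-neighbour rule: returns, for every track, the index of
    the vertex with the smallest |z_track - z_vertex|.
    """
    return [
        min(range(len(vertex_z_mm)), key=lambda i: abs(z - vertex_z_mm[i]))
        for z in track_z_mm
    ]


# Hypothetical example: three collisions spread along z, five tracks.
vertices = [-32.0, 4.5, 51.0]
tracks = [-31.2, 5.1, 3.8, 50.4, -33.0]
print(assign_tracks(tracks, vertices))  # [0, 1, 1, 2, 0]
```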

There could be new unstable particles that live relatively long compared to 25 nanoseconds. If they are charged, they would probably get stuck in the calorimeters and decay there at a random time, not synchronized with bunch crossings. There are dedicated searches for those particles as well. Examples: [url=http://arxiv.org/abs/1310.6584]ATLAS[/url], [url=http://arxiv.org/abs/1011.5861]CMS[/url]

If they live a bit shorter, they might look similar to heavy mesons and produce a secondary vertex in the tracker.

Finding unknown [i]stable[/i] particles is much harder, because there is no decay to see. They would have to be so heavy that they move slower than other particles at the same energy, or get detected by kinematics (energy/momentum conservation).

Interesting series of articles, thanks.

One thing that puzzles me is how timing is handled.

With so many collisions going on, there could be some exotic quark-gluon plasma being formed that has a certain lifespan and breaks up into new particles emerging a fraction later … all this with bunches traveling only a couple of meters behind each other, with a dozen nanoseconds between them … how is this measured when some collisions happen at the front of the 100 mb and their products could be mixed with things that happen at the end of the 100 mb long bunch, also taking into account that two bunches are colliding, which extends the collision zone … how long do collisions last … a mini black hole evaporates very quickly … but at the other end of the spectrum a newly created proton would last forever … how is what lies in between taken into account?

“So, how big is the side of an actual barn in barns?” An African or European barn?

“I’ve enjoyed reading your LHC series, and am looking forward to the next installment.” Thanks :)

“Yet another interesting 3rd part about this topic… Thank you!

‘75% of the design value has been achieved in 2012, the plan for 2015 is about 150%.’

What does this practically mean?” What do you mean by “practically”? ##0.77 \cdot 10^{34}/(cm^2 \cdot s)## was the record in 2012.

In terms of collision rate, about 750 million per second in 2012, 1.5 billion per second in 2015. The bunch spacing goes down from 50 to 25 nanoseconds (40 instead of 20 million bunch crossings per second), so the number of collisions per bunch crossing will be similar, about 30-40 at peak luminosity. A bit lower if you consider the inelastic collisions only, as the elastic collisions are usually too close to the beam pipe to be visible (the small [url=http://arxiv.org/abs/1204.5689]TOTEM experiment can see them[/url]).
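The pileup estimate above is the collision rate divided by the bunch crossing rate. A minimal Python sketch, assuming every bunch slot is filled; real filling schemes leave gaps, so the true number of collisions per filled crossing is somewhat higher. The helper names are illustrative.

```python
def crossing_rate_hz(bunch_spacing_s: float) -> float:
    """Bunch crossings per second, assuming every bunch slot is filled."""
    return 1.0 / bunch_spacing_s


def mean_pileup(collision_rate_hz: float, bunch_spacing_s: float) -> float:
    """Average number of collisions per bunch crossing."""
    return collision_rate_hz / crossing_rate_hz(bunch_spacing_s)


pileup_2012 = mean_pileup(750e6, 50e-9)  # 50 ns spacing: about 37.5
pileup_2015 = mean_pileup(1.5e9, 25e-9)  # 25 ns spacing: about 37.5
```

Halving the spacing doubles the crossing rate, which is why doubling the collision rate leaves the pileup roughly unchanged.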

“So, how big is the side of an actual barn in barns?

I remember having an “aha” moment after playing around with some dimensional analysis on extinction coefficients and determining that ##M^{-1}\,cm^{-1}## is equivalent to an area, so extinction coefficients are just molar absorption cross sections.

I’ve enjoyed reading your LHC series, and am looking forward to the next installment.”

1×10^(-24) cm^2 per barn, and an average barn is 2 stories high and about 75′ long.

Assuming about 10′ per storey, the side is roughly 75′ × 20′ ≈ 1500 ft² ≈ 1.4×10^6 cm², so putting it all together we get about 1.4×10^30 barns/barn.

Or we could get really complicated on this and go with the atomic make-up of wood and figure out the ‘real’ barns/barn!

So, how big is the side of an actual barn in barns?

I remember having an “aha” moment after playing around with some dimensional analysis on extinction coefficients and determining that ##M^{-1}\,cm^{-1}## is equivalent to an area, so extinction coefficients are just molar absorption cross sections.

I’ve enjoyed reading your LHC series, and am looking forward to the next installment.

Yet another interesting 3rd part about this topic… Thank you! “75% of the design value has been achieved in 2012, the plan for 2015 is about 150%.” What does this practically mean?