Superdeterminism and the Mermin Device

Superdeterminism as a way to resolve the mystery of quantum entanglement is generally not taken seriously in the foundations community, as explained in this video by Sabine Hossenfelder (posted in Dec 2021). In her video, she argues that superdeterminism should be taken seriously; indeed, she argues it is what quantum mechanics (QM) is screaming for us to understand about Nature. Using the twin-slit experiment as her example, she says superdeterminism simply means the particles must have known at the outset of their trip whether to go through the right slit, the left slit, or both slits, based on what measurement was going to be done on them. Thus, she defines superdeterminism this way:

Superdeterminism: What a quantum particle does depends on what measurement will take place.

In Superdeterminism: A Guide for the Perplexed she gives a somewhat more technical definition:

Theories that do not fulfill the assumption of Statistical Independence are called “superdeterministic” … .

where Statistical Independence in the context of Bell’s theory means:

There is no correlation between the hidden variables, which determine the measurement outcome, and the detector settings.

Sabine points out that Statistical Independence should not be equated with free will and I agree, so a discussion of free will in this context is a red herring and will be ignored.

Since the behavior of the particle depends on a future measurement of that particle, Sabine writes:

This behavior is sometimes referred to as “retrocausal” rather than superdeterministic, but I have refused and will continue to refuse using this term because the idea of a cause propagating back in time is meaningless.

Ruth Kastner argues similarly here and we agree. Simply put, if the information is coming from the future to inform particles at the source about the measurements that will be made upon them, then that future is co-real with the present. Thus, we have a block universe and since nothing “moves” in a block universe, we have an “all-at-once” explanation per Ken Wharton. Huw Price and Ken say more about their distinction between superdeterminism and retrocausality here. I will focus on the violation of Statistical Independence and not worry about these semantics.

So, let me show you an example of the violation of Statistical Independence using Mermin’s instruction sets. If you are unfamiliar with the mystery of quantum entanglement illustrated by the Mermin device, read about the Mermin device in this Insight, “Answering Mermin’s Challenge with the Relativity Principle” before continuing.

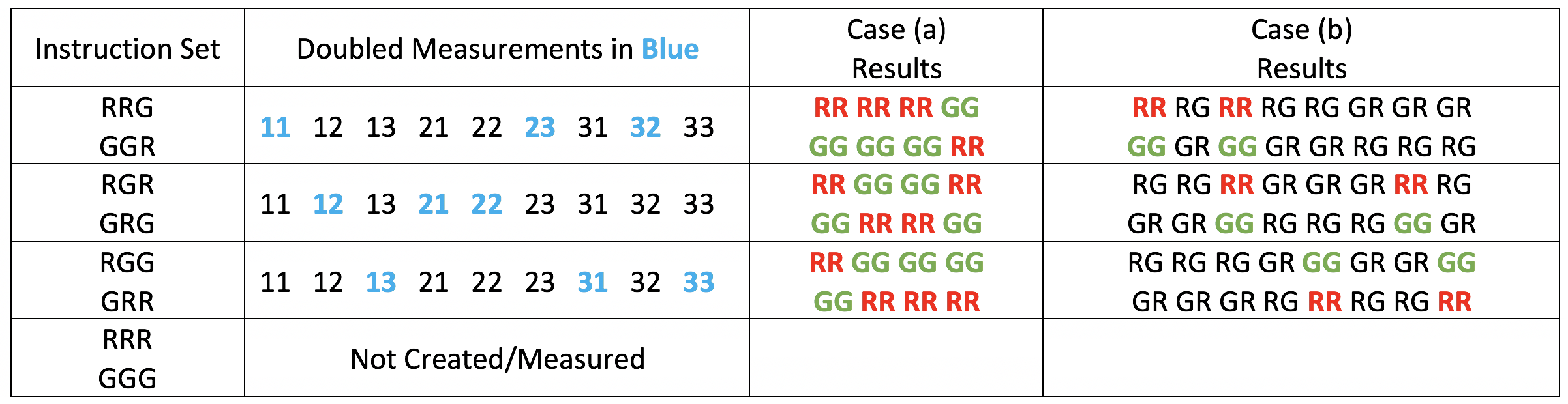

In using instruction sets to account for quantum-mechanical Fact 1 (same-color outcomes in all trials when Alice and Bob choose the same detector settings, case (a)), Mermin notes that quantum-mechanical Fact 2 (same-color outcomes in ##\frac{1}{4}## of all trials when Alice and Bob choose different detector settings, case (b)) must be violated. In making this claim, Mermin is assuming that each instruction set produced at the source is measured with equal frequency in all nine detector setting pairs (11, 12, 13, 21, 22, 23, 31, 32, 33). That assumption is called Statistical Independence. Table 1 shows how Statistical Independence can be violated so as to allow instruction sets to reproduce quantum-mechanical Facts 1 and 2 per the Mermin device.


Table 1

In row 2 column 2 of Table 1, you can see that Alice and Bob select (by whatever means) setting pairs 23 and 32 with twice the frequency of 21, 12, 31, and 13 in those case (b) trials where the source emits particles with the instruction set RRG or GGR (produced with equal frequency). Column 4 then shows that this disparity in the frequency of detector setting pairs would indeed allow our instruction sets to satisfy Fact 2. However, the detector setting pairs would not occur with equal frequency overall in the experiment and this would certainly raise red flags for Alice and Bob. Therefore, we introduce a similar disparity in the frequency of the detector setting pair measurements for RGR/GRG (12 and 21 frequencies doubled, row 3) and RGG/GRR (13 and 31 frequencies doubled, row 4), so that they also satisfy Fact 2 (column 4). Now, if these six instruction sets are produced with equal frequency, then the six case (b) detector setting pairs will occur with equal frequency overall. In order to have an equal frequency of occurrence for all nine detector setting pairs, let detector setting pair 11 occur with twice the frequency of 22 and 33 for RRG/GGR (row 2), detector setting pair 22 occur with twice the frequency of 11 and 33 for RGR/GRG (row 3), and detector setting pair 33 occur with twice the frequency of 22 and 11 for RGG/GRR (row 4). Then, we will have accounted for quantum-mechanical Facts 1 (column 3) and 2 (column 4) of the Mermin device using instruction sets with all nine detector setting pairs occurring with equal frequency overall.
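The bookkeeping in this paragraph can be checked mechanically. Below is a minimal Python sketch, not part of the original Insight. The names `DOUBLED`, `weight`, and `same_color_fraction` are hypothetical, and the weights (2 for a doubled setting pair, 1 otherwise) are relative frequencies read off the description above, assumed to match rows 2 through 4 of Table 1.

```python
from fractions import Fraction
from itertools import product

# Setting pairs whose frequency is doubled for each instruction set,
# as described in the text (assumed to match rows 2-4 of Table 1).
DOUBLED = {
    "RRG": {(2, 3), (3, 2), (1, 1)},
    "GGR": {(2, 3), (3, 2), (1, 1)},
    "RGR": {(1, 2), (2, 1), (2, 2)},
    "GRG": {(1, 2), (2, 1), (2, 2)},
    "RGG": {(1, 3), (3, 1), (3, 3)},
    "GRR": {(1, 3), (3, 1), (3, 3)},
}
PAIRS = list(product((1, 2, 3), repeat=2))  # 11, 12, ..., 33


def weight(inst, pair, violate_si=True):
    """Relative number of trials in which instruction set `inst` meets `pair`."""
    if violate_si and pair in DOUBLED[inst]:
        return 2
    return 1


def same_color_fraction(violate_si=True):
    """Fraction of case (b) trials (different settings) with same-color outcomes."""
    same = total = 0
    for inst, (a, b) in product(DOUBLED, PAIRS):
        if a == b:
            continue  # case (a) trials are not part of Fact 2
        w = weight(inst, (a, b), violate_si)
        total += w
        if inst[a - 1] == inst[b - 1]:
            same += w
    return Fraction(same, total)


# Number of trials per setting pair, summed over the six instruction sets.
pair_totals = {p: sum(weight(i, p) for i in DOUBLED) for p in PAIRS}

print(same_color_fraction(violate_si=True))   # skewed frequencies
print(same_color_fraction(violate_si=False))  # Mermin's assumption: equal frequencies
print(pair_totals)
```

With these weights the sketch gives ##\frac{1}{4}## for the skewed frequencies and ##\frac{1}{3}## when every instruction set meets every setting pair equally often, while each of the nine setting pairs is used the same number of times overall. Fact 1 holds automatically, since identical settings read the same entry of the instruction set.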

Since the instruction set (hidden variable values of the particles) in each trial of the experiment cannot be known by Alice and Bob, they do not suspect any violation of Statistical Independence. That is, they faithfully reproduced the same QM state in each trial of the experiment and made their individual measurements randomly and independently, so that measurement outcomes for each detector setting pair represent roughly ##\frac{1}{9}## of all the data. Indeed, Alice and Bob would say their experiment obeyed Statistical Independence, i.e., there is no (visible) correlation between what the source produced in each trial and how Alice and Bob chose to make their measurement in each trial.

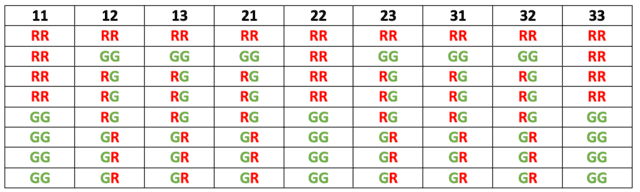

Here is a recent (2020) argument against such violations of Statistical Independence by Eddy Chen. And, here is a recent (2020) argument that superdeterminism is “fine-tuned” by Indrajit Sen and Antony Valentini. So, the idea is contested in the foundations community. In response, Hance, Sabine, and Palmer recently (2022) proposed a different version of superdeterminism here. Thinking dynamically (which they don’t; more on that later), one could say the previous version of superdeterminism has the instruction sets controlling Alice and Bob’s measurement choices (Table 1). The new version (called “supermeasured theory”) has Alice and Bob’s measurement choices controlling the instruction sets. That is, each instruction set is only measured in one of the nine measurement pairs (Table 2). Indeed, there are 72 instruction sets for the 72 trials of the experiment shown in Table 2. That removes the complaint about superdeterminism being “conspiratorial” or “fine-tuned” or “violating free will.”

Table 2

Again, that means you need information from the future controlling the instruction set sent from the source, if you’re thinking dynamically. However, Hance et al. do not think dynamically, writing:

In the supermeasured models that we consider, the distribution of hidden variables is correlated with the detector settings at the time of measurement. The settings do not cause the distribution. We prefer to use find [sic] Adlam’s terms—that superdeterministic/supermeasured theories apply an “atemporal” or “all-at-once” constraint—more apt and more useful.

Indeed, they voice collectively the same sentiment about retrocausality that Sabine voiced alone in her quote above. They write:

In some parts of the literature, authors have tried to distinguish two types of theories which violate Bell-SI. Those which are superdetermined, and those which are retrocausal. The most naive form of this (e.g. [6]) seems to ignore the prior existence of the measurement settings, and confuses a correlation with a causation. More generally, we are not aware of an unambiguous definition of the term “retrocausal” and therefore do not want to use it.

In short, there does seem to be an emerging consensus between the camps calling themselves superdeterministic and retrocausal that the best way to view violations of Statistical Independence is in “all-at-once” fashion as in Geroch’s quote:

There is no dynamics within space-time itself: nothing ever moves therein; nothing happens; nothing changes. In particular, one does not think of particles as moving through space-time, or as following along their world-lines. Rather, particles are just in space-time, once and for all, and the world-line represents, all at once, the complete life history of the particle.

Regardless of the terminology, I would point out that Sabine is not merely offering an interpretation of QM, but she is proposing the existence of a more fundamental (deterministic) theory for which QM is a statistical approximation. In this paper, she even suggests “what type of experiment has the potential to reveal deviations from quantum mechanics.” Specifically:

This means concretely that one should make measurements on states prepared as identically as possible with devices as small and cool as possible in time-increments as small as possible.

According to this article in New Scientist (published in May 2021):

The good news is that Siddharth Ghosh at the University of Cambridge has just the sort of set-up that Hossenfelder needs. Ghosh operates nano-sensors that can detect the presence of electrically charged particles and capture information about how similar they are to each other, or whether their captured properties vary at random. He plans to start setting up the experiment in the coming months.

We’ll see what the experiments tell us.

PhD in general relativity (1987), researching foundations of physics since 1994. Coauthor of “Beyond the Dynamical Universe” (Oxford UP, 2018).

We have dozens of posts of pointless argument which has nothing to do with the original Insight.

It's so disrespectful, IMHO.

In order to disagree, you must be able to name one fact about a quantum system that is accessible for humans but not predicted by the Born rule.

"

You have it backwards. My point is that there are many situations (like the orbit of the Moon 4 billion years ago) to which the Born rule can perfectly well be applied but which don't involve human measurements or observations.

Just look at Wikipedia:

"

Wikipedia is not a valid reference. You need to reference a textbook or peer-reviewed paper. (You do that for Boltzmann so that part is fine, although I don't have those books so I can't personally check the references.)

Gibbs requires dynamic coarse graining by the laws of motion.

"

"

this is why Gibbs' H-theorem doesn't work as a derivation of statistical mechanics

"

I don't think these claims are true. From posts others have made in this thread, I don't think I'm the only one with that opinion.

What reference are you using for your understanding of Gibbs' derivation of statistical mechanics? (And for that matter, Boltzmann's?)

If the facts about a quantum system that can in principle be measured by humans are in 1 to 1 correspondence with the facts that are predicted by the Born rule (which they are)

"

No, they're not. This seems to be a fundamental disagreement we have. I don't think we're going to resolve it.

An explanation for the "Tsirelson Bound"?

"An explanation is a set of statements, a set of facts, which states the causes, context, and consequences of those facts. It may establish rules or laws"


"

Yes, here is the explanatory sequence:

1. No preferred reference frame + h → average-only projection for qubits

2. Average-only projection for qubits → average-only conservation per the Bell states

3. Average-only conservation per the Bell states → Tsirelson bound

In short, the Tsirelson bound obtains due to "conservation per no preferred reference frame".
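For readers who want the number behind step 3: the Tsirelson bound is the value ##2\sqrt{2}## of the CHSH quantity, compared with 2 for local instruction sets. Here is a minimal numerical sketch, assuming the textbook singlet-state correlation ##E(a,b) = -\cos(a-b)## and the usual optimal angles. It only evaluates the bound and is not the derivation from "conservation per no preferred reference frame"; the function names are hypothetical.

```python
import math


def E(a, b):
    """Singlet-state correlation for measurement angles a and b (radians)."""
    return -math.cos(a - b)


def chsh(a, a2, b, b2):
    """CHSH combination E(a,b) - E(a,b') + E(a',b) + E(a',b')."""
    return E(a, b) - E(a, b2) + E(a2, b) + E(a2, b2)


# Standard angle choice that maximizes |CHSH| for the singlet state.
S = chsh(0.0, math.pi / 2, math.pi / 4, 3 * math.pi / 4)
print(abs(S), 2 * math.sqrt(2))
```

The two printed numbers agree to floating-point precision.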

Basically, there are two ways to arrive at statistical mechanics

"

Neither of these looks right to me.

It is true that "the system is always and only in one pure state". And if we could measure with exact precision which state it was in, at any instant, according to classical physics, we would know its state for all time, since the dynamics are fully deterministic.

However, we can't measure the system's state with exact precision. In fact, we can't measure its microscopic state (the individual positions and velocities of all the particles) at all. We can only measure macroscopic variables like temperature, pressure, and volume. So in order to make predictions about what the system will do, we have to coarse grain the phase space into "cells", where each cell represents a set of phase space points that all have the same values for the macroscopic variables we are measuring. Then, roughly speaking, we build theoretical models of the system, for the purpose of making predictions, using these coarse grained cells instead of individual phase space points: we basically assume that, at an instant of time where the macroscopic variables have particular values, the system's exact microscopic state is equally likely to be any of the phase space points inside the cell that corresponds to those values for the macroscopic variables. That gives us a distribution and enables us to do statistics.

Gibbs' H-theorem requires intermediate coarse graining as a physical process, i.e. it must be in the equations of motion.

"

I don't see how this can be true since the classical equations of motion are fully deterministic. A trajectory in phase space is a 1-dimensional curve (what you describe as "delta functions evolving to delta functions"), it does not start out as a 1-dimensional curve but then somehow turn into a 2-dimensional area.

The picture shows how an initial small uncertainty evolves to an uncertainty that, upon coarse graining, looks like a larger uncertainty.

"

I think this description is a little off. What your series of pictures shows is a series of "snapshots" at single instants of time of one "cell" of a coarse graining of the phase space (i.e., all of the phase space points in the cell have the same values for macroscopic variables like temperature at that instant of time). At ##t = 0## the cell looks nice and neat and easy to distinguish from the rest of the phase space even with measurements of only finite precision (the exact location of the boundary of the cell will be uncertain, but the boundary is simple and that uncertainty doesn't have too much practical effect). As time evolution proceeds, however, ergodicity (I think that's the right term) causes the shape of the cell in phase space to become more and more convoluted and makes it harder and harder to distinguish, by measurements with only finite precision, what part of the phase space is in the cell and what part is not.

"

There is no coarse graining in your picture.

"

Yes, there is. The blue region in his picture is not a single trajectory. It's a set of phase space points that correspond to one "cell" of a coarse graining of the phase space at a single instant of time. See above.

Pointless discussion. To know whether anything is anthropocentric is akin to knowing whether, when a tree falls in a forest and no one is around to hear it, it makes a sound.

"

That would be more arboreocentric!

I don't know if it does

"

In other words, your claim is not "standard QM". It's your opinion.

"

You claim that collapse happens all the time, independent of measurement.

"

No, that's not what I have been claiming. I have been claiming that "measurement" is not limited to experiments run by humans; an object like the Moon is "measuring" itself constantly, whether a human looks at it or not.

"

The no communication theorem involves many other things, but the crucial ingredient that makes it anthropocentric is the Born rule.

"

This doesn't change anything I have said.

I don't think we're going to make any further progress in this discussion.

Anyway, if you don't like the ergodic argument, why do you bring it up then? I'm only telling you that you argue based on ergodicity here.

"

You mentioned ergodicity first. We obviously disagree on foundations of classical statistical mechanics, even on meaning of the words such as "ergodicity" and "coarse graining", so I think it's important to clear these things up.

It just shows the trajectory eventually filling the whole phase space.

"

No it doesn't. The final pink region has the same area as the initial one, it covers only a fraction of the whole gray disc. It's only if you look at the picture with blurring glasses (which is coarse graining) that it looks as if the whole disc is covered.
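The distinction between the conserved fine-grained area and its coarse-grained appearance can be illustrated with a toy model. The sketch below is an assumption for illustration only, not the system in the attached picture: it uses the Arnold cat map on a 64 x 64 lattice as a stand-in for area-preserving Hamiltonian flow, and the names `cat_map` and `coarse_entropy` are hypothetical. The number of occupied lattice points plays the role of the pink area, and the entropy over 8 x 8 cells plays the role of the "blurring glasses".

```python
import math
from collections import Counter

N = 64      # lattice points per side of the toy "phase space"
CELL = 8    # side length of one coarse-graining cell


def cat_map(point):
    """Arnold cat map on the N x N lattice; invertible, so no two points merge."""
    x, y = point
    return ((2 * x + y) % N, (x + y) % N)


def coarse_entropy(points):
    """Entropy -sum p ln p of the occupation fractions of the coarse cells."""
    counts = Counter((x // CELL, y // CELL) for x, y in points)
    n = len(points)
    return sum(-(c / n) * math.log(c / n) for c in counts.values())


# Initial "uncertainty": all lattice points of a single coarse cell.
points = {(x, y) for x in range(CELL) for y in range(CELL)}
history = []
for step in range(5):
    history.append((step, len(points), coarse_entropy(points)))
    points = {cat_map(p) for p in points}

for step, fine_count, entropy in history:
    print(step, fine_count, round(entropy, 3))
```

The fine-grained count stays fixed at every step, while the coarse-grained entropy starts at zero and becomes positive as the region is stretched across many cells.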

Ergodic theory works just fine for deriving statistical mechanics

"

Except that it doesn't.

https://www.jstor.org/stable/1215826?seq=1#page_scan_tab_contents

https://arxiv.org/abs/1103.4003

https://arxiv.org/pdf/cond-mat/0105242v1.pdf

What you are presenting is not coarse graining. This is the argument based on ergodic theory and it works fine.

"

No, that's coarse graining, not ergodicity. Ergodicity involves an average over a long period of time, while the fourth picture shows filling the whole phase-space volume at one time. And it fills the whole space only in the coarse grained sense.

No, they are completely different derivations. Boltzmann's H-theorem is based on the Stosszahlansatz. Gibbs' H-theorem is based on coarse graining in phase space.

"

I see, thanks!

"

The theorem works in classical statistical physics, if you can supply a reason for why this coarse graining happens, i.e. there must be a physical process. The Liouville equation alone is reversible and therefore can't increase entropy. Similarly, the Bohmian equations of motion are reversible and can't increase entropy.

"

It seems that we disagree on what is coarse graining, so let me explain how I see it, which indeed agrees with all textbooks I am aware of, as well as with the view of Jaynes mentioned in your Wikipedia quote. Coarse graining is not something that happens, it's not a process. It's just a fact that, in practice, even in classical physics we cannot measure position and momentum with perfect precision. The coarse graining is the reason why we use statistical physics in the first place. Hence there is always some practical uncertainty in the phase space. The picture shows how an initial small uncertainty evolves to an uncertainty that, upon coarse graining, looks like a larger uncertainty.

View attachment 298912

No, because this is not Boltzmann's H-theorem. Valentini appeals to Gibbs' H-theorem, which is well-understood not to work without a physical mechanism for the coarse-graining.

"

By Gibbs H-theorem, I guess you mean H-theorem based on Gibbs entropy, rather than Boltzmann entropy. But I didn't know that Gibbs H-theorem in classical statistical mechanics does not work for the reasons you indicated. Can you give a reference?

Because it doesn't work. Bohmian time evolution doesn't involve the coarse graining steps that are used in his calculation. A delta distribution remains a delta distribution at all times and does not decay into ##|\Psi|^2##.

"

If your argument is correct, then an analogous argument should apply to classical statistical mechanics: The Hamiltonian evolution doesn't involve the coarse graining steps that are used in the Boltzmann H-theorem. A delta distribution in phase space remains a delta distribution at all times and does not decay into a thermal equilibrium. Would you then conclude that thermal equilibrium in classical statistical mechanics also requires fine tuning?

I am using standard textbook quantum mechanics as described e.g. in Landau.

"

And where does that say the no communication theorem is anthropomorphic?

"

you are apparently not using standard QM. Instead you seem to be arguing with respect to an objective collapse model.

"

You apparently have a misunderstanding as to what "standard QM" is. "Standard QM" is not any particular interpretation. It is just the "shut up and calculate" math.

I have mentioned collapse interpretations, but not "objective collapse" ones specifically. Nothing I have said requires objective collapse. The only interpretation I have mentioned in this thread that I do not think is relevant to the discussion (because in it, measurements don't have single outcomes, and measurements having single outcomes seems to me to be a requirement for the discussion we are having) is the MWI.

"

My argument depends only on the Born rule

"

No, it also depends on the no communication theorem. I know you claim that the no communication theorem is only about the Born rule, and that the Born rule is anthropomorphic. I just disagree with those claims. I have already explained why multiple times. You haven't addressed anything I've actually said. You just keep repeating the same claims over and over.

"

You can read up on this e.g. in Nielsen, Chuang

"

Do you mean this?

https://www.amazon.com/dp/1107002176/?tag=pfamazon01-20

due to the deferred measurement principle, it can be shifted arbitrarily close to the present.

"

Well, this, at least, is a new misstatement you hadn't made before. The reference you give does not say you can shift collapse "arbitrarily close to the present". It only says you can shift it "to the end of the quantum computation" (and if you actually read the details it doesn't even quite say that–it's talking about a particular result regarding equivalence of quantum computing circuits, not a general result about measurement and collapse). That's a much weaker claim (and is also irrelevant to this discussion). If a quantum computation happened in someone else's lab yesterday, I can't shift any collapses resulting from measurements made in that computation "arbitrarily close to the present".

collapse has nothing to do with decoherence

"

I have already agreed multiple times that they are not the same thing.

"

I don't see why you bring it up.

"

This whole subthread started because you claimed that the no communication theorem was anthropomorphic. I am trying to get you to either drop that claim or be explicit about exactly what kind of QM interpretation you are using to make it. I have repeatedly stated what kind of interpretation I think is necessary to make that claim: a "consciousness causes collapse" interpretation. I have brought up other interpretations, such as "decoherence and collapse go together" only in order to show that, under those interpretations, the no communication theorem is not anthropomorphic, because measurement itself is not. Instead of addressing that point, which is the only one that's relevant to the subthread, you keep complaining about irrelevancies like whether or not collapse and decoherence are the same thing (of course they're not, and I never said they were).

At this point I'm not going to respond further since you seem incapable of addressing the actual point of the subthread we are having. I've already corrected other misstatements of yours and I'm not going to keep correcting the same ones despite the fact that you keep making them.

See atyy's post (although I don't agree that Valentini's version fixes this).

"

OK, so why do you not agree that Valentini's version fixes this?

So where exactly is fine tuning in the Bohmian theory?

"

The fine tuning is in the quantum equilibrium assumption. But maybe Valentini's version is able to overcome the fine tuning, at the cost and benefit of predicting violations of present quantum theory without fine tuning. There's a discussion by Wood and Spekkens on p21 of https://arxiv.org/abs/1208.4119.

As can be seen in this paper, such a non-local explanation of entanglement requires even more fine tuning than superdeterminism.

"

I can't find this claim in the paper. Where exactly does the paper say that?

It doesn't matter though, whether it is well understood. That doesn't make it any less fine tuned.

"

So where exactly is fine tuning in the Bohmian theory?

Can you give a citation that says that in bona fide Copenhagen, an object can collapse itself?

"

Do you consider the literature on decoherence, when it is applied in the context of a collapse interpretation, to be "bona fide Copenhagen"? Because the fact that macroscopic objects are continually decohering themselves is an obvious consequence of decoherence theory in general. And, as I have argued in prior posts, unless you are going to say that collapse only happens when a human is looking, any collapse event in a macroscopic object is going to be close to a decoherence event.

"

Copenhagen says that collapse happens after a measurement. It is silent on what a measurement is, so we don't understand when collapse happens.

"

We can't prove when collapse happens. But that doesn't mean we can't say anything at all about when it happens.

"

But if a system would just continuously collapse, it would be frozen in time, as per the quantum Zeno effect, so we can also exclude this possibility.

"

No, the quantum Zeno effect does not say the system is "frozen in time". It only says that the probability of a quantum system making "quantum jumps" from one eigenstate of an observable to another can be driven to zero by measuring that observable at shorter and shorter time intervals. But there is nothing that says that the eigenstate it is being "held" in by continuous measurement has to be "frozen in time." If the system is a macroscopic object, then the "eigenstate" it starts out in is just a set of classical initial conditions, and being "held" in that eigenstate by continuous collapse just means it executes the classical motion that starts from those initial conditions.

To put it another way, a macroscopic object behaving classically does not have to make quantum jumps. It just behaves classically. If it is collapsing continuously, that just means it's following the classical trajectory that was picked out by its initial classical state.
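The statement that the jump probability can be driven to zero can be illustrated with a standard two-level toy model. This is an assumption for illustration and not a model of a macroscopic object: a system whose unmeasured evolution would take it completely out of its initial eigenstate is instead measured ##N## times at equal intervals, so it survives each interval with probability ##\cos^2(\pi/2N)##. The function name and parameters are hypothetical.

```python
import math


def survival_probability(n_measurements, total_angle=math.pi):
    """Probability of being found in the initial eigenstate at every one of
    n equally spaced projective measurements, for a two-level system whose
    unmeasured evolution is a rotation by total_angle (pi = full flip)."""
    step_amplitude = math.cos(total_angle / (2 * n_measurements))
    return (step_amplitude ** 2) ** n_measurements


for n in (1, 10, 100, 1000):
    print(n, survival_probability(n))
```

With a single measurement at the end the system has essentially left its initial state, while the survival probability approaches 1 as the measurements become more frequent.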

as I said, by shifting the split between system and environment, you can shift the collapse almost arbitrarily in time.

"

But within a pretty narrow window of time in any actual case. Unless, as I said, you're going to take a position that, for example, the Moon only has a single trajectory when humans are looking at it. If we limit ourselves to reasonable places to put the collapse for macroscopic objects, so that they behave classically regardless of whether they are being looked at, any place you put the collapse is going to be pretty close to a decoherence event, because macroscopic objects are always decohering themselves.

"

I was just claiming that the Born rule is not needed for time evolution and this is just true.

"

You're quibbling. Collapse is needed for time evolution on any collapse interpretation. You agree with that in your very next sentence:

"

Time evolution is a concatenation of projections and unitary evolutions.

"

And this being the case is already sufficient to disprove your claim, which originally started this subthread, that the no communication theorem is anthropomorphic. The theorem applies to any collapse, not just one triggered by a human looking.

"

my point is not that the collapse postulate is anthropocentric, but that the Born rule is anthropocentric

"

The Born rule can't be anthropocentric if collapse isn't. Except in the (irrelevant for this discussion) sense that humans are the ones who invented the term "Born rule" and so that term can only be applied to human discussions of QM, not QM itself. And if you are going to take that position, then your claim that the no communication theorem has to do with the Born rule is false: the theorem has to do with QM itself, not human discussions of QM. And, as I said above, the theorem applies to any collapse events, not just ones that humans are looking at.

"

I have been arguing that postulate II.b is anthropocentric.

"

See above.

you can't just define what counts as a measurement this way.

"

Basically, you seem to be expounding a "consciousness causes collapse" interpretation. In such an interpretation, yes, you could call the no communication theorem anthropomorphic, because anything to do with measurement at all is anthropomorphic. But once again, you are then saying that no measurements at all took place anywhere in the universe until the first conscious human looked at something.

If that is your position, once again, we are talking past each other and can just drop this subthread.

If it is not your position, then pretty much everything you are saying looks irrelevant to me as far as the discussion in this thread is concerned. Yes, I get that decoherence doesn't cause collapse, and collapse can't be proven to occur in a collapse interpretation whenever decoherence occurs, but once you've adopted a collapse interpretation, and once you've adopted an interpretation that says collapse doesn't require consciousness to occur, so a macroscopic object like the Moon can be continually collapsing itself, you are going to end up having measurements and decoherence events be pretty much the same thing. And neither decoherence nor measurements nor anything associated with them will be anthropomorphic.

The system would evolve just fine if we didn't ascribe meaning to the relative frequencies. They play no role in the evolution laws of the system. All the universe needs is the wave function and the Hamilton operator.

"

This is basically assuming the MWI. Again, see my post #79 above.

On any interpretation where measurements have single outcomes, no, just the wave function and the Hamiltonian are not enough.

Are there any entities in the universe, other than humans, that make use of the Born rule?

"

Any time Nature has to decide what single outcome a measurement has when there are two or more quantum possibilities, it is making use of the Born rule.

(For why I am assuming that measurements have single outcomes, see my post #79 just now.)

Decoherence itself doesn't collapse the wave function into a single branch

"

I know that. That's why I said that what we are discussing is ultimately a matter of interpretation. You are free to adopt an interpretation like the MWI, in which there is no collapse at all, and in such an interpretation it would not be true that the Moon has a single position.

However, in such an interpretation your claim that the no communication theorem is anthropocentric is still not true, because in an interpretation like the MWI it makes no sense to even talk about whether Bob can do something to affect Alice's measurement results and thus send Alice a signal, since measurements don't even have single outcomes to begin with. This is just an aspect of the issue that it's not clear how the Born rule even makes sense in the MWI.

In any case, as I said before, if you are using an interpretation like the MWI, then you should say so explicitly. Then we can simply drop this whole subthread since we have been talking past each other: I was assuming we were using an interpretation in which measurements have single outcomes, so the Born rule makes sense and it makes sense to talk about whether or not Bob can send Alice a signal using measurement results on entangled particles.

(Note also that this thread is about superdeterminism, which also makes no sense in an interpretation like the MWI, since the whole point of superdeterminism is to try to reconcile violations of the Bell inequalities with an underlying local hidden variable theory in which measurements have single outcomes determined by the hidden variables, by violating the statistical independence assumption of Bell's theorem instead of the locality assumption. So the MWI as an interpretation is really off topic for this thread.)

If you are not using the MWI, and you are using an interpretation in which measurements do have single outcomes, then your statement quoted above, while true, is pointless. Yes, decoherence by itself does not make measurements have single outcomes, but in any interpretation where we are assuming that measurements do have single outcomes anyway, decoherence is an excellent way of defining what counts as a "measurement". That is the only use I am making of decoherence in my arguments, and you have not responded to that at all.

It's not about information theory at all. The no communication theorem is about measurement, which is an anthropocentric notion. It says that no local operation at Bob's distant system (in the sense of separation by tensor products) can cause any disturbance of measurement results at Alice's local system, or more succinctly:

"

Alice and Bob are anthropocentric notions, I'll give you that!

Things like well-defined positions and well-defined momenta don't exist in quantum mechanics.

"

Objects with a huge number of quantum degrees of freedom, like the Moon, can have center of mass positions and momenta that, while not delta functions, will both have widths that are much, much narrower than the corresponding widths for a single quantum particle (narrower by roughly the same order of magnitude as the number of particles in the object). That is what I mean by "well-defined" in this connection.

"

Decoherence just ensures that the probability distributions given by the wave function have always been peaked on one or more trajectories, rather than being spread across the universe.

"

But the Moon doesn't have "one or more" trajectories. It has one. And that was true 4 billion years ago. The Moon didn't have to wait for humans to start observing it to have one trajectory. Or at least, if you are taking the position that it did–that the Moon literally had multiple trajectories (each decohered, but still multiple ones) until the first human looked at it and collapsed them into one, then please make that argument explicitly.

"

So if someone had measured the Moon 4 billion years ago, he would have found it orbiting Earth. It doesn't matter whether somebody was there, though.

"

"It doesn't matter whether someone was there" in the sense that the Moon had one single trajectory back then?

"

The wave function evolves independent of whether someone is there or not and may also decohere in that process. You don't need the Born rule for that.

"

You do in order for the Moon to have just one trajectory instead of multiple ones. More precisely, you need the Born rule to tell you what the probabilities were for various possible trajectories 4 billion years ago. And then you need to realize that, because the Moon is constantly measuring itself, it will have picked just one of those trajectories 4 billion years ago. And then you need the Born rule to tell you what the probabilities were for various possible trajectories 4 billion years minus one second (let's say–I think the decoherence time for the Moon is actually much shorter than that, but let's say one second for this discussion) ago–and then the Moon picks one of those. And then you're at 4 billion years minus two seconds ago…etc., etc.

I wouldn't consider modern special relativity less constructive than classical mechanics. SR replaces Galilei symmetry with Poincaré symmetry. There is no reason why Galilei symmetry should be preferred; nature just made a different choice. We can't get around postulating some basic principles in any case.

For me, a satisfactory explanation of QM would have to explain why we have to use this weird Hilbert space formalism and non-commutative algebras in the first place. Sure, we have learned to perform calculations with it, but at the moment, it's not something a sane person would come up with if decades of weird experimental results hadn't forced them to. I hope someone figures this out in my lifetime.

"

SR is a principle theory, per Einstein. Did you read the Mainwood article with Einstein quotes?

And, yes, the information-theoretic reconstructions of QM, whence the relativity principle applied to the invariant measurement of h, give you the Hilbert space formalism with its non-commutative algebra. So, it's figured out in principle fashion. Did you read our paper?

the Born rule captures everything that is in principle accessible to humans

"

Again, this is a matter of interpretation. According to decoherence theory, the Moon had a perfectly well-defined orbit 4 billion years ago, when there were no humans around. In terms of the Born rule, the probabilities for the Moon to be at various positions were perfectly well-defined 4 billion years ago, and the no communication theorem would apply to them.

Whereas on the viewpoint you are taking here, the Born rule was meaningless until humans started doing QM experiments.

The no communication theorem is about measurement, which is an anthropocentric notion.

"

I think that is a matter of interpretation. In decoherence theory, for example, "measurement" can be defined as any situation in which decoherence occurs. For example, the Moon is constantly measuring itself by this definition, whether or not a human being looks at it. And the no communication theorem would therefore apply to the Moon (and would say, for example, that the Moon's orbit cannot be affected by what is happening on Pluto at a spacelike separated event, even if particles in the Moon and particles in Pluto are quantum entangled).

If you insist that by mechanism we mean an explanation in terms of hidden variables. I rather expect that we need to refine our notion of what constitutes a mechanism. Before Einstein, people already knew the Lorentz transformations, but they were stuck believing in an ether explanation. Even today, there are some eccentrics left who can't wrap their heads around the modern interpretation. I think with quantum theory, we are in a quite similar situation. We know the correct formulas, but we are stuck in classical thinking. I guess we need another Einstein to sort this out.

"

Yes, and even Einstein tried "mechanisms" aka "constructive efforts" to explain time dilation and length contraction before he gave up and went with his principle explanation (relativity principle and light postulate). Today, most physicists are content with that principle explanation without a constructive counterpart (no "mechanisms", see this article), i.e., what you call "the modern interpretation." So, if you're happy with that principle account of SR, you should be happy with our principle account of QM (relativity principle and "Planck postulate"). All of that is explained in "No Preferred Reference Frame at the Foundation of Quantum Mechanics". Here is my APS March Meeting talk on that paper (only 4 min 13 sec long) if you don't want to read the paper.

You need to tune the equations in such a way that they obey the no communication property, i.e. it must be impossible to use the non-local effects to transmit information.

"

For me, it is like a 19th-century critique of the atomic explanation of thermodynamics based on the idea that it requires fine tuning of the rules of atomic motion to fulfill the 2nd law (that the entropy of the full system cannot decrease) and the 3rd law (that you cannot reach the state of zero entropy). And yet, as Boltzmann understood back then and many physics students understand today, those laws are FAPP laws that do not require fine tuning at all.

Indeed, in Bohmian mechanics it is well understood why nonlocality does not allow (in the FAPP sense) communication faster than light. It is a consequence of quantum equilibrium, for more details see e.g. https://arxiv.org/abs/quant-ph/0308039

"

communication is an anthropocentric notion

"

Not in the sense in which it is used in the no communication theorem. That sense is basically the information theoretic sense, which in no way requires humans to process or even be aware of the information.

"Quantum nonlocality" is just another word for Bell-violating, which is of course accepted, because it has been demonstrated experimentally and nobody has denied that. The open question is the mechanism and superdeterminism is one way to address it.

"

Right, as Sabine points out in her video:

"

Hidden Variables + Locality + Statistical Independence = Obeys Bell inequality

"

Once you've committed to explaining entanglement with a mechanism (HV), you're stuck with violating at least one of Locality or Statistical Independence.
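The quoted relation can be checked by brute force for the CHSH form of Bell's inequality. What follows is only a minimal sketch, not something taken from the video or the thread. It assumes that each value of the hidden variables fixes an outcome of +1 or -1 for each of Alice's two settings and each of Bob's two settings (Locality), and that the distribution over hidden variables does not depend on which settings are chosen (Statistical Independence). Under those assumptions the CHSH quantity S is an average over deterministic strategies, so its magnitude cannot exceed the largest value found among them. The function names are illustrative.

```python
from itertools import product


def chsh_value(a0, a1, b0, b1):
    """CHSH combination S for one deterministic local strategy.

    a0, a1: Alice's predetermined outcomes (+1 or -1) for her two settings.
    b0, b1: Bob's predetermined outcomes (+1 or -1) for his two settings.
    """
    return a0 * b0 + a0 * b1 + a1 * b0 - a1 * b1


def max_local_chsh():
    """Largest |S| over all 16 deterministic local strategies."""
    return max(abs(chsh_value(*s)) for s in product((+1, -1), repeat=4))


print(max_local_chsh())  # 2
```

Any statistically independent mixture of these 16 strategies therefore satisfies |S| <= 2. Obtaining a larger value requires giving up at least one of the assumptions stated above.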

I disagree. Copenhagen's "there are limits to what we can know about reality, quantum theory is the limit we can probe" is no different from "reality is made up of more than quantum theory" which SD implies. It's semantics.

"

Maybe. But with this notion of semantics the question is: what topic regarding interpretations and foundations of established physics isn't semantics?

"

As for MWI, yes, but by doing away with unique outcomes (at least in the most popular readings of Everettian QM) you literally state: "The theory only makes sense if you happen to be part of the wavefunction where the theory makes sense, but you are also part of maverick branches where QM is invalidated, thus nothing can really be validated as it were pre-QM". I would argue that this stance is equally radical in its departure from what we consider the scientific method to be.

"

I find it difficult to discuss this because there are problems with the role of probabilities in the MWI which may or may not be solved. For now, I would say that I don't see the difference between my experience of the past right now belonging to a maverick branch vs. a single world view where by pure chance, I and everybody else got an enormous amount of atypical data which led us to infer the wrong laws of physics.

Can you prove this?

"

Isn't it obvious? We are talking about a lack of statistical independence between a photon source at A and light sources in two quasars, each a billion light-years from A in opposite directions. How else could that possibly be except by some kind of pre-existing correlation?

"

Is this not similar to claiming that in the scenario occurring in controlled medical studies, any correlations would be due to correlations in the sources of randomness and the selected patient?

"

Sort of. Correlations between the "sources of randomness" and the patient would be similar, yes. (And if we wanted to be extra, extra sure to eliminate such correlations, we could use light from quasars a billion light-years away as the source of randomness for medical experiments, just as was done in the scenario I described.) But that has nothing to do with the placebo effect, and is not what I understood you to be talking about before.

Then the proponents of “superdeterminism” should tackle the radioactive decay.

"

I don't study SD, so I don't know what they're looking for exactly. Maybe they can't control sample preparation well enough for radioactive decay? Just guessing, because that seems like an obvious place to look.

Consider the following experimental setup:

I have a source that produces two entangled photons at point A. The two photons go off in opposite directions to points B and C, where their polarizations are measured. Points B and C are each one light-minute away from point A.

At each polarization measurement, B and C, the angle of the polarization measurement is chosen 1 second before the photon arrives, based on random bits of information acquired from incoming light from a quasar roughly a billion light-years away that lies in the opposite direction from the photon source at A.

A rough diagram of the setup is below:

Quasar B — (1B LY) — B — (1 LM) — A — (1 LM) — C — (1B LY) — Quasar C

In this setup, any violation of statistical independence between the angles of the polarizations and the results of the individual measurements (not the correlations between the measurements, those will be as predicted by QM, but the statistics of each measurement taken separately) would have to be due to some kind of pre-existing correlation between the photon source at A and the distant quasars at both B and C. This is the sort of thing that superdeterminism has to claim must exist.

"

Exactly. On p. 3 of Superdeterminism: A Guide for the Perplexed, Sabine writes:

"

What does it mean to violate Statistical Independence? It means that fundamentally everything in the universe is connected with everything else, if subtly so.

"

Copenhagen's "there are limits to what we can know about reality, quantum theory is the limit we can probe" is no different from "reality is made up of more than quantum theory" which SD implies. It's semantics.

"

I disagree. There could be a superdeterministic theory underlying QM which isn

"

As for MWI, yes, but by doing away with unique outcomes (at least in the most popular readings of Everettian QM) you literally state: "The theory only makes sense if you happen to be part of the wavefunction where the theory makes sense, but you are also part of maverick branches where QM is invalidated, thus nothing can really be validated as it were pre-QM". I would argue that this stance is equally radical in its departure from what we consider the scientific method to be.

"

I find this difficult to discuss because the MWI still has the problem of how to make sense of probabilities. This might be a problem in which case I'm inclined to agree with you. But

I only have trouble "seeing" where that scenario occurring in controlled medical studies was excluded in what Shimony, Clauser and Horne wrote.

"

I don't see how the two have anything to do with each other.

"

I also tried to guess what they actually meant

"

Consider the following experimental setup:

I have a source that produces two entangled photons at point A. The two photons go off in opposite directions to points B and C, where their polarizations are measured. Points B and C are each one light-minute away from point A.

At each polarization measurement, B and C, the angle of the polarization measurement is chosen 1 second before the photon arrives, based on random bits of information acquired from incoming light from a quasar roughly a billion light-years away that lies in the opposite direction from the photon source at A.

A rough diagram of the setup is below:

Quasar B — (1B LY) — B — (1 LM) — A — (1 LM) — C — (1B LY) — Quasar C

In this setup, any violation of statistical independence between the angles of the polarizations and the results of the individual measurements (not the correlations between the measurements, those will be as predicted by QM, but the statistics of each measurement taken separately) would have to be due to some kind of pre-existing correlation between the photon source at A and the distant quasars at both B and C. This is the sort of thing that superdeterminism has to claim must exist.
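The timing in this setup can be checked with a few lines of arithmetic. This is a minimal sketch, assuming flat spacetime, photons traveling at c along a single line, and event coordinates chosen here purely for illustration (emission at A at t = 0, x = 0, with B at x = -60 light-seconds and C at x = +60 light-seconds).

```python
def interval_type(event_1, event_2):
    """Classify the separation between two events given as (t, x).

    Units: t in seconds, x in light-seconds, so that c = 1.
    """
    dt = event_2[0] - event_1[0]
    dx = event_2[1] - event_1[1]
    s2 = dt * dt - dx * dx  # sign convention: positive means timelike
    if s2 > 0:
        return "timelike"
    if s2 < 0:
        return "spacelike"
    return "lightlike"


# Hypothetical coordinates for the setup described above.
emission_at_a = (0, 0)           # photon pair leaves A
setting_chosen_at_b = (59, -60)  # angle chosen 1 s before arrival at B
detection_at_b = (60, -60)       # photon arrives at B
detection_at_c = (60, 60)        # partner photon arrives at C

print(interval_type(emission_at_a, setting_chosen_at_b))   # spacelike
print(interval_type(setting_chosen_at_b, detection_at_c))  # spacelike
print(interval_type(emission_at_a, detection_at_b))        # lightlike
```

Spacelike separation rules out a signal at or below c from the source to the setting choice, or from one wing's setting choice to the other wing's detection. It does not rule out a common cause sufficiently far in the past, which is why the settings are taken from quasar light.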

But naively we would not expect it to matter whether the experimenter (i.e. the doctor) knows which patient received which treatment. Yet apparently it has some influence.

"

Whether this is true or not (that question really belongs in the medical forum for discussion, not here), this is not the placebo effect. The placebo effect is where patients who get the placebo (but don't know it–and in double-blind trials the doctors don't know either) still experience a therapeutic effect.

I don't see how this has an analogue in physics.

I have never understood why there is such a visceral oppositional response to superdeterminism while the Many Worlds Interpretation and Copenhagen Interpretation enjoy tons of support. In Many Worlds the answer is essentially: "Everything happens, thus every observation is explained, you just happen to be the observer observing this outcome". In Copenhagen it's equally lazy: "Nature is just random, there is no explanation". How are these any less anti-scientific than Superdeterminism?

"

I think that all three somewhat break with simple ideas about how science is done and what it tells us but to a different degree.

Copenhagen says that there are limits to what we can know because we need to split the world into the observer and the observed. The MWI does away with unique outcomes. Superdeterminism does away with the idea that we can limit the influence of external degrees of freedom on the outcome of our experiment. These might turn out to be different sides of the same coin but taken at face value, the third seems like the most radical departure from the scientific method to me.

There's also the point that unlike Copenhagen and the MWI, superdeterminism is not an interpretation of an existing theory. It is a property of a more fundamental theory which doesn't make any predictions to test it (NB: Sabine Hossenfelder disagrees) because it doesn't even exist yet.

If I understand you correctly you are saying that it is (or might be) possible but it has not been found yet.

"

In principle, yes.

"

Doesn't this contradict the no communication theorem?

"

No, because in theories such as Bohmian mechanics, the no communication theorem is a FAPP (for all practical purposes) theorem.

Roughly, this is like the law of large numbers. Is it valid for ##N=1000##? In principle, no. In practice, yes.
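The law-of-large-numbers comparison can be made concrete. This is a minimal sketch, assuming a fair coin. It illustrates what "valid in practice but not in principle" means for a statistical law in general and is not a model of Bohmian mechanics or quantum equilibrium. It computes the exact probability that the observed fraction of heads in N flips misses 1/2 by more than a given tolerance. The function name and the integer percentage parameter are illustrative choices.

```python
from math import comb


def prob_large_deviation(n, tol_percent):
    """Exact probability that |heads/n - 1/2| > tol_percent/100 for n fair flips.

    The comparison is done in integer arithmetic to avoid floating-point
    edge cases: |k/n - 1/2| > p/100 is equivalent to 50*|2k - n| > p*n.
    """
    favorable = sum(
        comb(n, k)
        for k in range(n + 1)
        if 50 * abs(2 * k - n) > tol_percent * n
    )
    return favorable / 2 ** n


# The deviation probability is never exactly zero, but it falls quickly with n.
for n in (10, 100, 1000):
    print(n, prob_large_deviation(n, 5))
```

For any finite N the probability is strictly positive, so the law does not hold "in principle". By N = 1000 it is already small enough to ignore for most practical purposes.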

There is no conspiracy. See https://www.physicsforums.com/threa…unterfactual-definiteness.847628/post-5319182

"

If I understand you correctly, you are saying that it is (or might be) possible but it has not been found yet. Doesn't this contradict the no communication theorem? It would require QM to be modified completely, or maybe superdeterminism is the explanation.

I find both non-locality and superdeterminism equally unappealing. Both mechanisms require fine-tuning.

"

How does non-locality require fine tuning?

"

However, if I had to choose, I would probably pick superdeterminism, just because it is less anthropocentric.

"

How is non-locality anthropocentric?

"

There are non-local cause and effect relations everywhere but nature conspires in such a way to prohibit communication? I can't make myself believe that, but that's just my personal opinion.

"

There is no conspiracy. See https://www.physicsforums.com/threa…unterfactual-definiteness.847628/post-5319182

You'd think so! But these photons never existed in a common light cone because their lifespan is short. In fact, in this particular experiment, one photon was detected (and ceased to exist) BEFORE its entangled partner was created.

"

It is probably the phrasing that you use that confuses me, but I still don't understand. For example the past light cone of the event = production of the second pair of photons, contains the whole life of the first photon, that no longer exists. All the possible light cones that you can pick intersect.

Can you clarify? How can there be no common overlap? Any two past light cones overlap.

"

You'd think so! But these photons never existed in a common light cone because their lifespan is short. In fact, in this particular experiment, one photon was detected (and ceased to exist) BEFORE its entangled partner was created.

In this next experiment, the entangled photon pairs are spatially separated (and did coexist for a period of time). However, they were created sufficiently far apart that they never occupied a common light cone.

High-fidelity entanglement swapping with fully independent sources (2009)

https://arxiv.org/abs/0809.3991

"Entanglement swapping allows to establish entanglement between independent particles that never interacted nor share any common past. This feature makes it an integral constituent of quantum repeaters. Here, we demonstrate entanglement swapping with time-synchronized independent sources with a fidelity high enough to violate a Clauser-Horne-Shimony-Holt inequality by more than four standard deviations. The fact that both entangled pairs are created by fully independent, only electronically connected sources ensures that this technique is suitable for future long-distance quantum communication experiments as well as for novel tests on the foundations of quantum physics."
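For readers unfamiliar with the inequality named in that abstract, here is a minimal sketch of what "violating" it means numerically. It assumes the textbook spin-singlet correlation E(a, b) = -cos(a - b) and a standard choice of four setting angles. Neither is taken from the paper, which uses photon polarization and reports measured rather than ideal values.

```python
import math


def singlet_correlation(a, b):
    """Textbook quantum correlation for spin measurements along angles a and b
    (in radians) on a spin-singlet pair. Assumed here for illustration."""
    return -math.cos(a - b)


def chsh(a0, a1, b0, b1, corr=singlet_correlation):
    """CHSH combination S = E(a0,b0) + E(a0,b1) + E(a1,b0) - E(a1,b1)."""
    return corr(a0, b0) + corr(a0, b1) + corr(a1, b0) - corr(a1, b1)


# Standard settings that maximize |S| for the singlet state.
s = chsh(0.0, math.pi / 2, math.pi / 4, -math.pi / 4)

print(abs(s))            # about 2.828
print(2 * math.sqrt(2))  # Tsirelson bound, about 2.828
print(abs(s) > 2)        # True
```

The classical (local hidden variable) limit is |S| <= 2, and the ideal quantum value for these settings is 2√2. An experiment "violates" the inequality when its measured |S| exceeds 2 by a statistically significant margin.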

And from a 2009 paper that addresses the theoretical nature of entanglement swapping with particles with no common past, here is a quote that indicates that in fact this entanglement IS problematic for any theory claiming the usual locality (local causality):

"It is natural to expect that correlations between distant particles are the result of causal influences originating in their common past — this is the idea behind Bell’s concept of local causality [1]. Yet, quantum theory predicts that measurements on entangled particles will produce outcome correlations that cannot be reproduced by any theory where each separate outcome is locally determined by variables correlated at the source. This nonlocal nature of entangled states can be revealed by the violation of Bell inequalities.

"However remarkable it is that quantum interactions can establish such nonlocal correlations, it is even more remarkable that particles that never directly interacted can also become nonlocally correlated. This is possible through a process called entanglement swapping [2]. Starting from two independent pairs of entangled particles, one can measure jointly one particle from each pair, so that the two other particles become entangled, even though they have no common past history. The resulting pair is a genuine entangled pair in every aspect, and can in particular violate Bell inequalities.

"Intuitively, it seems that such entanglement swapping experiments exhibit nonlocal effects even stronger than those of usual Bell tests. To make this intuition concrete and to fully grasp the extent of nonlocality in entanglement swapping experiments, it seems appropriate to contrast them with the predictions of local models where systems that are initially uncorrelated are described by uncorrelated local variables. This is the idea that we pursue here."

Despite the comments from Nullstein to the contrary, such swapped pairs are entangled without any qualification – as indicated in the quote above.

Personally I wouldn't bet on any classical deterministic mechanism in the first place. I find both non-locality and superdeterminism equally unappealing.

"

As Sabine points out, if you want a constructive explanation of Bell inequality violation, it's nonlocality and/or SD. That's why we pivoted to a principle account (from information-theoretic reconstructions of QM). We cheated a la Einstein for time dilation and length contraction.

If DrChinese claimed that it was wrong

"

As I have already said, he didn't. He never said anything in the Deutsch paper you referenced was wrong. He just said it wasn't relevant to the entanglement swapping experiments he has referenced. He also gave a specific argument for why (in post #11, repeated in post #24).

Your only response to that, so far, has been to assert that the Deutsch paper is relevant to the entanglement swapping experiments @DrChinese referenced, without any supporting argument and without addressing the specific reason @DrChinese gave for saying it was not.

"

Since I quoted that paper

"

I don't see any quotes from that paper in any of your posts. You referenced it, but as far as I can tell you gave no specifics about how you think that paper addresses the issues @DrChinese is raising.

"Quantum nonlocality" is just another word for Bell-violating, which is of course accepted, because it has been demonstrated experimentally and nobody has denied that. The open question is the mechanism and superdeterminism is one way to address it.

"

It's perhaps not a fair question but what odds would you give on SD being that mechanism? Would you bet even money on this experiment proving QM wrong? Or, 10-1 against or 100-1 against?

I wonder what Hossenfelder would be prepared to bet on it?

I'm not aware of a rebuttal to Deutsch's paper, so his argument must be taken to be the current state of our knowledge.

"

This is not a valid argument. There are many papers that never get rebuttals but are still incorrect.

"

If there was a mistake in Deutsch's paper, one should be able to point it out.

"

This is not a valid argument either; many papers have had mistakes that are not easily pointed out. Nor is it relevant to what @DrChinese is saying. @DrChinese is not claiming there is a mistake in Deutsch's paper. He is saying he doesn't see how Deutsch's paper addresses the kind of experiment he is talking about (entanglement swapping) at all. If you think Deutsch's paper does address that, the burden is on you to explain how.

…I just wanted to defend Deutsch's reputation and point out that DrChinese did not address the argument in the article. I considered his response quite rude, given that I just argued based on a legit article by a legit researcher. The article contains detailed calculations, so one could just point to a mistake if there was one.

"

I read the 1999 Deutsch article, and unfortunately it did not map to my 2012 reference. There is actually no reason it should (or would), as the experiment had not been conceived at that time.

As I mentioned in another post, Deutsch's reputation does not need defending. However, I would not agree that his well-known positions on MWI should be considered as representing generally accepted science. Some are, and some are not, and I certainly think it is fair to identify positions as "personal" vs "generally accepted" when it is relevant. There is nothing wrong with having personal opinions that are contrary to orthodox science, but I believe those should be identified.

Show me where Zeilinger (or any of the authors of similar papers) says there are local explanations for the kind of swapping I describe, and we'll be on to something. From the paper: "The observed quantum correlations manifest the non-locality of quantum mechanics in spacetime." I just don't see how SD can get around this: it is supposed to restore realism and locality to QM.

3. Of course swapping adds to the mystery. And it certainly rules out some types of candidate theories. How can there be a common local cause when there is no common light cone overlap?

"

Can you clarify? How can there be no common overlap? Any two past light cones overlap.

1. That's an ad hominem argument and also a personal opinion. Deutsch is a well-respected physicist working in quantum foundations and quantum computing at Oxford University who has received plenty of awards.

2. That is not necessary, because entanglement swapping isn't really a physical process. It's essentially just an agreement between two distant parties about which photons to use for subsequent experiments. These two parties need information from a third party who has access to particles from both Bell pairs and who communicates with them over a classical channel at sub-light speed.

3. Again, nobody denied this. The statement is that entanglement produced by entanglement swapping can be explained if the correlations in the original Bell pairs can be explained. Entanglement swapping doesn't add to the mystery.

"

1. Relevant here if he is stating something that is not generally accepted (and the part in quotes was actually from another physicist). Local explanations of swapping are not, and clearly that is the tenor of the paper. Deutsch is of course a respected physicist (and respected by me), that's not the issue.

2. I specifically indicated how your citation differed from swapping using independent sources outside of a common light cone (which does not occur in Deutsch's earlier paper). There are no "local" explanations for how 2 photons that have never existed within a common light cone manage to be perfectly correlated (entangled) a la Bell. That you might need information from a classical channel to postselect pairs is irrelevant. No one is saying there is FTL signaling or the like.

3. Of course swapping adds to the mystery. And it certainly rules out some types of candidate theories. How can there be a common local cause when there is no common light cone overlap?

From the experiment that they are trying to perform, the hope seems to be that if the detectors are prepared similarly enough, then statistical independence will be violated. In other words, that what we've seen as the statistical independence of measurements is an artifact of detectors in different states. Is that a correct reading here?

"

Well, my understanding is that the experiments will check for violations of randomness where QM predicts randomness. That means we should look for a theory X underwriting QM so that QM is just a statistical approximation to theory X. Then you can infer the existence of hidden variables (hidden in QM, but needed in theory X), whence nonlocality, the violation of Statistical Independence, or both. Just my guess, I don't study SD.

1. Certainly, RBW is local in the sense that Bohmian Mechanics is not. Bohmian Mechanics does not feature superluminal signaling either, and we know we don't want to label BM as "local".

So I personally wouldn't label RBW as you do ("local"); I label it "quantum nonlocal" when a quantum system (your block) has spatiotemporal extent (your 4D contextuality) – as in the Bell scenario. But I see why you describe it as you do: it's because c is respected when you draw context vertices on a block (not sure that is the correct language to describe that).

2. Thanks for the references.

"

I should be more precise, sorry. In the quantum-classical contextuality of RBW, "local" means "nothing moving superluminally" and that includes "no superluminal information exchange." That's because in our ontology, quanta of momentum exchanged between classical objects don't have worldlines.

Notice also that when I said RBW does not entail violations of Statistical Independence because the same state is always faithfully produced and measured using fair sampling, I could have said that about QM in general. Again, what I said follows from the fact that there are no "quantum entities" moving through space, there is just the spatiotemporal (4D) distribution of discrete momentum exchanges between classical objects. And that quantum-classical contextuality conforms to the boundary of a boundary principle and the relativity principle.

So, how do we explain violations of Bell's inequality without nonlocal interactions, violations of Statistical Independence, or "shut up and calculate" (meaning "the formalism of QM works, so it's already 'explained'")? Our principle explanation of Bell state entanglement doesn't entail an ontology at all, so there is no basis for nonlocality or violations of Statistical Independence. And, it is the same (relativity) principle that explains time dilation and length contraction without an ontological counterpart and without saying, "the Lorentz transformations work, so it's already 'explained'". So, we do have an explanation of Bell state entanglement (and therefore of the Tsirelson bound).

That's an ad hominem argument

"

So is your response (although since your statements about Deutsch are positive, it might be better termed a "pro homine" argument):

"

Deutsch is a well-respected physicist working in quantum foundations and quantum computing at Oxford University who has received plenty of awards.

"

None of these are any more relevant to whether Deutsch's arguments are valid than the things you quoted from @DrChinese. What is relevant is what Deutsch actually says on the topic and whether it is logically coherent and consistent with what we know from experiments.

1. Locality is not violated because there is no superluminal signaling.

"

"

2. RBW is nonetheless contextual in the 4D sense, see here and here for our most recent explanations of that.

"

1. Certainly, RBW is local in the sense that Bohmian Mechanics is not. Bohmian Mechanics does not feature superluminal signaling either, and we know we don't want to label BM as "local".

So I personally wouldn't label RBW as you do ("local"); I label it "quantum nonlocal" when a quantum system (your block) has spatiotemporal extent (your 4D contextuality) – as in the Bell scenario. But I see why you describe it as you do: it's because c is respected when you draw context vertices on a block (not sure that is the correct language to describe that).

2. Thanks for the references.

[And of course I know you are not arguing in favor of superdeterminism in your Insight.]

"

Correct, I'm definitely not arguing for SD. If Statistical Independence is violated and QM needs to be underwritten as Sabine suggests, physics is not going to be fun for me anymore.

"

Could just as rationally be:

-Row 2 occurs 75% of the time when 23 measurement occurs.

-Row 3 occurs 0% of the time when 23 measurement occurs. This is so rows 2 and 3 add to 75%.

-Row 4 occurs 25% of the time when 23 measurement occurs.

Total=100%

…Since the stats add up the same. After all, that would cause the RRG & GGR cases to be wildly overrepresented, but since we are only observing the 23 measurements, we don't see that the other (unmeasured) hidden variable (1) occurrence rate is dependent on the choice of the 23 basis. Since we are supposed to believe that it exists (that is the purpose of a hidden variable model) but has a value that makes it inconsistent with the 23 pairing (of course this inconsistency is hidden too).

"

Right, we have no idea exactly how Statistical Independence is violated because it's all "hidden." My Table 1 is just an example to show what people refer to as the "conspiratorial nature" of SD. Sabine addresses that charge in her papers, too.

"

Obviously, in this table, we have statistical independence as essentially being the same thing as contextuality. Which more or less orthodox QM is anyway. Further, it has a quantum nonlocal element, as the Bell tests with entanglement swapping indicate*. That is because the "23" choice was signaled to the entangled source so that the Row 2 case can be properly overrepresented. But in an entanglement swapping setup, there are 2 sources! So now we need to modify our superdeterministic candidate to account for a mechanism whereby the sources know to synchronize in such a way as to yield the expected results. Which is more or less orthodox QM anyway.

My point is that the explicit purpose of a Superdeterministic candidate is to counter the usual Bell conclusion, that being: a local realistic theory (read: noncontextual causal theory) is not viable. Yet we now are stuck with a superdeterministic theory which features local hidden variables, except they are also contextual and quantum nonlocal. I just don't see the attraction.

"

Sabine points out that to evade the conclusion of Bell, you need to violate Statistical Independence, locality, or both. She also points out that SD could violate both, giving you the worst of both worlds. I'll let the experimental evidence decide, but I'm definitely hoping for no SD.

"

*Note that RBW does not have any issue with this type of quantum nonlocal element. The RBW diagrams can be modified to account for entanglement swapping, as I see it (at least I think so). RBW being explicitly contextual, as I understand it: "…spatiotemporal relations provide the ontological basis for our geometric interpretation of quantum theory…" And on locality: "While non-separable, RBW upholds locality in the sense that there is no action at a distance, no instantaneous dynamical or causal connection between space-like separated events [and there are no space-like worldlines.]" [From one of your papers (2008).]

"

We uphold locality and Statistical Independence in our principle account of QM as follows. Locality is not violated because there is no superluminal signaling. Statistical Independence is not violated because Alice and Bob faithfully reproduce the most accurate possible QM state and make their measurements in accord with fair sampling (independently and randomly). In this principle account of QM (ultimately based on quantum information theorists' principle of Information Invariance & Continuity, see the linked paper immediately preceding), the state being created in every trial of the experiment is the best one can do, given that everyone must measure the same value for Planck's constant regardless of the orientation of their measurement device. RBW is nonetheless contextual in the 4D sense, see here and here for our most recent explanations of that.

I thought that the motivation for superdeterminism wasn't to avoid nonlocality but nonclassicality. The claim being that the world is really classical, but somehow (through the special initial conditions and evolution laws) it only seems like quantum theory is a correct description.

"

What exactly do you mean by non-classicality?

Super-determinism is super-nonlocal

In her "Guide for the Perplexed", Sabine Hossenfelder writes:

"What does it mean to violate Statistical Independence? It means that fundamentally everything in the universe is connected with everything else, if subtly so. You may be tempted to ask where these connections come from, but the whole point of superdeterminism is that this is just how nature is. It’s one of the fundamental assumptions of the theory, or rather, you could say one drops the usual assumption that such connections are absent. The question for scientists to address is not why nature might choose to violate Statistical Independence, but merely whether the hypothesis that it is violated helps us to better describe observations."

I think this quote perfectly explains how super-determinism avoids quantum nonlocality. Not by replacing it with locality, but by replacing it with super-nonlocality!

In ordinary nonlocality, things get correlated over distance due to nonlocal forces. In super-nonlocality one does not need forces for that. They are correlated just because the fundamental laws of Nature (or God if you will) say so. You don't need to fine tune the initial conditions to achieve such correlations, it's a general law that does not depend on initial conditions. You don't need any complicated conspiracy for that, in principle it can be a very simple general law.

"

I thought that the motivation for superdeterminism wasn't to avoid nonlocality but nonclassicality. The claim being that the world is really classical, but somehow (through the special initial conditions and evolution laws) it only seems like quantum theory is a correct description.

In her "Guide for the Perplexed", Sabine Hossenfelder writes:

"What does it mean to violate Statistical Independence? It means that fundamentally everything in the universe is connected with everything else, if subtly so. You may be tempted to ask where these connections come from, but the whole point of superdeterminism is that this is just how nature is. It’s one of the fundamental assumptions of the theory, or rather, you could say one drops the usual assumption that such connections are absent. The question for scientists to address is not why nature might choose to violate Statistical Independence, but merely whether the hypothesis that it is violated helps us to better describe observations."

I think this quote perfectly explains how super-determinism avoids quantum nonlocality. Not by replacing it with locality, but by replacing it with super-nonlocality!

In ordinary nonlocality, things get correlated over distance due to nonlocal forces. In super-nonlocality one does not need forces for that. They are correlated just because the fundamental laws of Nature (or God if you will) say so. You don't need to fine tune the initial conditions to achieve such correlations, it's a general law that does not depend on initial conditions. You don't need any complicated conspiracy for that, in principle it can be a very simple general law.

I don't see the relevance of this article. If you can locally explain the correlations of an ordinary Bell pair, you can also locally explain the results of this paper. This is just entanglement swapping, which can be explained locally (https://arxiv.org/abs/quant-ph/9906007).

"

In your reference (1999), its Figure 3 is not a fair representation of the experiment I presented (2012). Deutsch is not what I would call a universally accepted writer on the subject, and "is a legendary advocate for the MWI". "Quantum nonlocality" is generally accepted in current science. And we've had this discussion here many a time. The nature of that quantum nonlocality has no generally accepted mechanism, however. That varies by interpretation.

The modern entanglement swapping examples have entangled particles which never exist in a common backward light cone – so they cannot have had an opportunity for a local interaction or synchronization. The A and B particles are fully correlated like any EPR pair, even though far apart and having never interacted*. That is quantum nonlocality, plain and simple. There are no "local" explanations for entanglement swapping of this type (that I have seen), of course excepting interpretations that claim to be fully local and forward in time causal (which I would probably dispute). Some MWI proponents make this claim, although not all.

*Being from independent photon sources, and suitably distant to each other.

If the instruction sets are produced in concert with the settings as shown in the Table, the 23/32 setting would produce agreement in 1/4 of the trials, which matches QM.

"

[And of course I know you are not arguing in favor of superdeterminism in your Insight.]

My mistake, yes, I see now that is a feature of the table. So basically, that would be equivalent to saying that for the 23 case (to be specific), rows 2/3/4 in the table add to 100% as follows:

-Row 2 occurs 50% of the time when 23 measurement occurs. Because this is doubled by design/assumption.

-Row 3 occurs 25% of the time when 23 measurement occurs.

-Row 4 occurs 25% of the time when 23 measurement occurs.

Total=100%

Could just as rationally be:

-Row 2 occurs 75% of the time when 23 measurement occurs.

-Row 3 occurs 0% of the time when 23 measurement occurs. This is so rows 2 and 3 add to 75%.

-Row 4 occurs 25% of the time when 23 measurement occurs.

Total=100%

…Since the stats add up the same. After all, that would cause the RRG & GGR cases to be wildly overrepresented, but since we are only observing the 23 measurements, we don't see that the other (unmeasured) hidden variable (1) occurrence rate is dependent on the choice of the 23 basis. Since we are supposed to believe that it exists (that is the purpose of a hidden variable model) but has a value that makes it inconsistent with the 23 pairing (of course this inconsistency is hidden too).
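The arithmetic behind the two weightings can be checked with a short sketch. Only "Row 2 = RRG & GGR" is stated in this thread; the assignments Row 3 = RGR/GRG and Row 4 = RGG/GRR are assumptions made here for illustration, as is the helper name `agreement_rate`. Under those assumptions, only Row 4 gives matching outcomes for settings 2 and 3, so shifting weight between Rows 2 and 3 leaves the observed 23 agreement rate unchanged.

```python
from fractions import Fraction

# One representative instruction set per row: the outcome (R or G) for
# settings 1, 2, 3. Row 2 = RRG/GGR comes from the thread; Rows 3 and 4
# are ASSUMED labels for illustration (the color-swapped partner of each
# set agrees or disagrees in exactly the same way).
ROWS = {
    2: "RRG",
    3: "RGR",
    4: "RGG",
}


def agreement_rate(weights, settings=(2, 3)):
    """Fraction of trials in which the two detectors show the same color.

    weights maps row number -> relative frequency of that row when the
    given pair of settings is chosen.
    """
    a, b = settings
    total = sum(weights.values())
    agree = sum(
        w for row, w in weights.items()
        if ROWS[row][a - 1] == ROWS[row][b - 1]
    )
    return Fraction(agree, total)


table_weights = {2: 50, 3: 25, 4: 25}  # the weighting read off the table
alt_weights = {2: 75, 3: 0, 4: 25}     # the alternative weighting

print(agreement_rate(table_weights))  # 1/4
print(agreement_rate(alt_weights))    # 1/4
```

Both weightings give the same 1/4 agreement for the 23 setting, which is why the 23 data alone cannot distinguish them.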

Obviously, in this table, we have statistical independence as essentially being the same thing as contextuality. Which more or less orthodox QM is anyway. Further, it has a quantum nonlocal element, as the Bell tests with entanglement swapping indicate*. That is because the "23" choice was signaled to the entangled source so that the Row 2 case can be properly overrepresented. But in an entanglement swapping setup, there are 2 sources! So now we need to modify our superdeterministic candidate to account for a mechanism whereby the sources know to synchronize in such a way as to yield the expected results. Which is more or less orthodox QM anyway.

My point is that the explicit purpose of a Superdeterministic candidate is to counter the usual Bell conclusion, that being: a local realistic theory (read: noncontextual causal theory) is not viable. Yet we now are stuck with a superdeterministic theory which features local hidden variables, except they are also contextual and quantum nonlocal. I just don't see the attraction.

*Note that RBW does not have any issue with this type of quantum nonlocal element. The RBW diagrams can be modified to account for entanglement swapping, as I see it (at least I think so). RBW being explicitly contextual, as I understand it: "…spatiotemporal relations provide the ontological basis for our geometric interpretation of quantum theory…" And on locality: "While non-separable, RBW upholds locality in the sense that there is no action at a distance, no instantaneous dynamical or causal connection between space-like separated events [and there are no space-like worldlines.]" [From one of your papers (2008).]

In your example, the 32/23 combinations produce different outcome sets for certain hidden variable combinations. In an actual experiment with the 32 or 23 settings as static, you'd notice that immediately (since the results must still match prediction) – so there must be more to the SI violation so that this result does not occur. And just like that, the Superdeterminism candidate must morph yet again.

"

If the instruction sets are produced in concert with the settings as shown in the Table, the 23/32 setting would produce agreement in 1/4 of the trials, which matches QM.
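For contrast, here is a minimal sketch of what happens when Statistical Independence holds, assuming the standard Mermin-device setup: both particles carry the same three-letter instruction set, and the two settings are chosen uniformly and independently of that set. The names `SETTING_PAIRS` and `agreement_for` are hypothetical helpers, not anything from the Insight.

```python
from fractions import Fraction
from itertools import product

# All ordered pairs of DIFFERENT settings (0-based indices for settings 1-3).
SETTING_PAIRS = [(a, b) for a in range(3) for b in range(3) if a != b]


def agreement_for(instruction_set):
    """Agreement rate over unequal settings for one fixed instruction set,
    with every setting pair equally likely (Statistical Independence)."""
    hits = sum(instruction_set[a] == instruction_set[b] for a, b in SETTING_PAIRS)
    return Fraction(hits, len(SETTING_PAIRS))


# Enumerate all eight instruction sets RRR, RRG, ..., GGG.
rates = {"".join(s): agreement_for(s) for s in product("RG", repeat=3)}
min_rate = min(rates.values())

print(min_rate)  # 1/3
```

Every instruction set agrees in at least 1/3 of unequal-setting trials, so any mixture of them does too. Reaching the 1/4 rate quoted above therefore requires the instruction sets to be correlated with the settings, as in the Table.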

In

Superdeterminism: A Guide for the Perplexed

one reads:

"The two biggest problem with superdeterminism at the moment are (a) the lack of a generally applicable fundamental theory and (b) the lack of experiment."

"

In the New Scientist article, it did say Ghosh was planning an experiment proposed by Sabine.

When I click on the link to "Mermin's Challenge", it says I'm not allowed to edit that item.

"

Sorry, I accidentally inserted my link as an author to that Insight. Hopefully, it's fixed now :-)