Superdeterminism and the Mermin Device

Superdeterminism as a way to resolve the mystery of quantum entanglement is generally not taken seriously in the foundations community, as explained in this video by Sabine Hossenfelder (posted in Dec 2021). In her video, she argues that superdeterminism should be taken seriously; indeed, it is what quantum mechanics (QM) is screaming for us to understand about Nature. Using the twin-slit experiment as her example, she explains that superdeterminism simply means the particles must have known at the outset of their trip whether to go through the right slit, the left slit, or both slits, based on what measurement was going to be done on them. Thus, she defines superdeterminism this way:

Superdeterminism: What a quantum particle does depends on what measurement will take place.

In Superdeterminism: A Guide for the Perplexed, she gives a somewhat more technical definition:

Theories that do not fulfill the assumption of Statistical Independence are called “superdeterministic” … .

where Statistical Independence in the context of Bell’s theory means:

There is no correlation between the hidden variables, which determine the measurement outcome, and the detector settings.

Sabine points out that Statistical Independence should not be equated with free will and I agree, so a discussion of free will in this context is a red herring and will be ignored.

Since the behavior of the particle depends on a future measurement of that particle, Sabine writes:

This behavior is sometimes referred to as “retrocausal” rather than superdeterministic, but I have refused and will continue to refuse using this term because the idea of a cause propagating back in time is meaningless.

Ruth Kastner argues similarly here and we agree. Simply put, if the information is coming from the future to inform particles at the source about the measurements that will be made upon them, then that future is co-real with the present. Thus, we have a block universe and since nothing “moves” in a block universe, we have an “all-at-once” explanation per Ken Wharton. Huw Price and Ken say more about their distinction between superdeterminism and retrocausality here. I will focus on the violation of Statistical Independence and not worry about these semantics.

So, let me show you an example of the violation of Statistical Independence using Mermin’s instruction sets. If you are unfamiliar with the mystery of quantum entanglement illustrated by the Mermin device, read about the Mermin device in this Insight, “Answering Mermin’s Challenge with the Relativity Principle” before continuing.

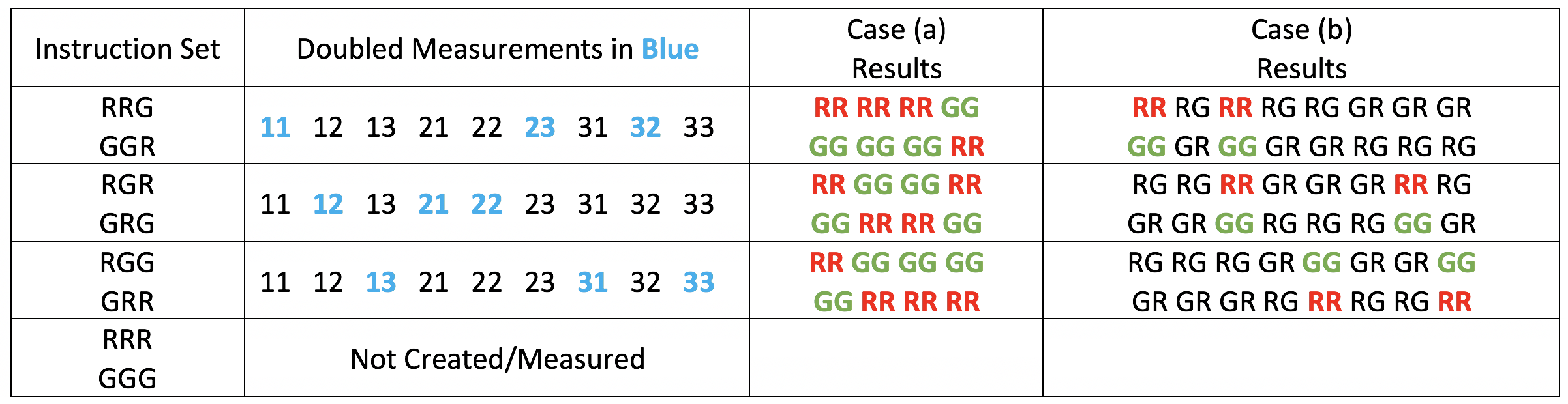

In using instruction sets to account for quantum-mechanical Fact 1 (same-color outcomes in all trials when Alice and Bob choose the same detector settings, case (a)), Mermin notes that quantum-mechanical Fact 2 (same-color outcomes in ##\frac{1}{4}## of all trials when Alice and Bob choose different detector settings, case (b)) must be violated. In making this claim, Mermin is assuming that each instruction set produced at the source is measured with equal frequency in all nine detector setting pairs (11, 12, 13, 21, 22, 23, 31, 32, 33). That assumption is called Statistical Independence. Table 1 shows how Statistical Independence can be violated so as to allow instruction sets to reproduce quantum-mechanical Facts 1 and 2 per the Mermin device.
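To make the conflict with Fact 2 concrete, here is a minimal sketch that enumerates all eight instruction sets and computes the fraction of case (b) trials with same-color outcomes when every setting pair is used equally often (i.e., when Statistical Independence holds). The encoding of instruction sets as three-letter strings and the names `CASE_B_PAIRS`, `same_color_fraction`, and `fractions_by_set` are illustrative assumptions, not notation from Mermin's paper.

```python
from fractions import Fraction
from itertools import product

# Case (b) setting pairs: Alice and Bob choose different settings.
CASE_B_PAIRS = [(a, b) for a in (1, 2, 3) for b in (1, 2, 3) if a != b]

def same_color_fraction(instruction_set):
    """Fraction of case (b) trials with same-color outcomes, assuming
    each of the six setting pairs is used equally often."""
    same = sum(
        instruction_set[a - 1] == instruction_set[b - 1]
        for a, b in CASE_B_PAIRS
    )
    return Fraction(same, len(CASE_B_PAIRS))

# All eight instruction sets: RRR, RRG, ..., GGG.
fractions_by_set = {
    "".join(colors): same_color_fraction("".join(colors))
    for colors in product("RG", repeat=3)
}

for name, frac in fractions_by_set.items():
    print(name, frac)
```

Under this equal-frequency assumption, no instruction set gives same-color outcomes in fewer than ##\frac{1}{3}## of case (b) trials, so no mixture of instruction sets can reach the ##\frac{1}{4}## required by Fact 2.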


Table 1

In row 2 column 2 of Table 1, you can see that Alice and Bob select (by whatever means) setting pairs 23 and 32 with twice the frequency of 21, 12, 31, and 13 in those case (b) trials where the source emits particles with the instruction set RRG or GGR (produced with equal frequency). Column 4 then shows that this disparity in the frequency of detector setting pairs would indeed allow our instruction sets to satisfy Fact 2. However, the detector setting pairs would not occur with equal frequency overall in the experiment and this would certainly raise red flags for Alice and Bob. Therefore, we introduce a similar disparity in the frequency of the detector setting pair measurements for RGR/GRG (12 and 21 frequencies doubled, row 3) and RGG/GRR (13 and 31 frequencies doubled, row 4), so that they also satisfy Fact 2 (column 4). Now, if these six instruction sets are produced with equal frequency, then the six case (b) detector setting pairs will occur with equal frequency overall. In order to have an equal frequency of occurrence for all nine detector setting pairs, let detector setting pair 11 occur with twice the frequency of 22 and 33 for RRG/GGR (row 2), detector setting pair 22 occur with twice the frequency of 11 and 33 for RGR/GRG (row 3), and detector setting pair 33 occur with twice the frequency of 22 and 11 for RGG/GRR (row 4). Then, we will have accounted for quantum-mechanical Facts 1 (column 3) and 2 (column 4) of the Mermin device using instruction sets with all nine detector setting pairs occurring with equal frequency overall.
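The bookkeeping in the paragraph above can be checked with a short sketch. It assumes relative weights of 1 and 2 for the setting pairs (only the ratios are stated in the text), and the names `weights`, `TABLE_1`, `case_b_same_color`, and `overall_setting_frequencies` are hypothetical helpers introduced for illustration.

```python
from fractions import Fraction

def weights(doubled_b, doubled_a):
    """Relative frequency of each (Alice, Bob) setting pair for one row.
    Assumption: every pair has weight 1 except the doubled ones."""
    w = {(a, b): 1 for a in (1, 2, 3) for b in (1, 2, 3)}
    w[doubled_b] = 2
    w[doubled_b[::-1]] = 2   # e.g. 23 and 32 are doubled together
    w[doubled_a] = 2         # the doubled same-setting pair
    return w

# Rows 2-4 of Table 1 as described in the text.
TABLE_1 = {
    ("RRG", "GGR"): weights(doubled_b=(2, 3), doubled_a=(1, 1)),
    ("RGR", "GRG"): weights(doubled_b=(1, 2), doubled_a=(2, 2)),
    ("RGG", "GRR"): weights(doubled_b=(1, 3), doubled_a=(3, 3)),
}

def case_b_same_color(instruction_set, w):
    """Weighted fraction of same-color outcomes over different settings."""
    total = sum(n for (a, b), n in w.items() if a != b)
    same = sum(
        n for (a, b), n in w.items()
        if a != b and instruction_set[a - 1] == instruction_set[b - 1]
    )
    return Fraction(same, total)

def overall_setting_frequencies(table):
    """Overall frequency of each setting pair, assuming all six
    instruction sets are produced equally often."""
    totals = {}
    for sets, w in table.items():
        for pair, n in w.items():
            totals[pair] = totals.get(pair, 0) + n * len(sets)
    grand_total = sum(totals.values())
    return {pair: Fraction(n, grand_total) for pair, n in totals.items()}

for sets, w in TABLE_1.items():
    for s in sets:
        print(s, case_b_same_color(s, w))
print(overall_setting_frequencies(TABLE_1))
```

With these weights, each of the six instruction sets gives same-color outcomes in ##\frac{1}{4}## of its case (b) trials, while each of the nine setting pairs still accounts for ##\frac{1}{9}## of all trials.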

Since the instruction set (hidden variable values of the particles) in each trial of the experiment cannot be known by Alice and Bob, they do not suspect any violation of Statistical Independence. That is, they faithfully reproduced the same QM state in each trial of the experiment and made their individual measurements randomly and independently, so that measurement outcomes for each detector setting pair represent roughly ##\frac{1}{9}## of all the data. Indeed, Alice and Bob would say their experiment obeyed Statistical Independence, i.e., there is no (visible) correlation between what the source produced in each trial and how Alice and Bob chose to make their measurement in each trial.

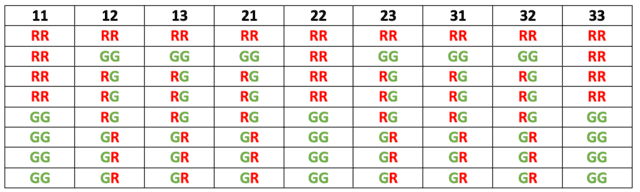

Here is a recent (2020) argument against such violations of Statistical Independence by Eddy Chen. And, here is a recent (2020) argument that superdeterminism is “fine-tuned” by Indrajit Sen and Antony Valentini. So, the idea is contested in the foundations community. In response, Hance, Sabine, and Palmer recently (2022) proposed a different version of superdeterminism here. Thinking dynamically (which they don’t; more on that later), one could say the previous version of superdeterminism has the instruction sets controlling Alice and Bob’s measurement choices (Table 1). The new version (called “supermeasured theory”) has Alice and Bob’s measurement choices controlling the instruction sets. That is, each instruction set is only measured in one of the nine measurement pairs (Table 2). Indeed, there are 72 instruction sets for the 72 trials of the experiment shown in Table 2. That removes the complaint about superdeterminism being “conspiratorial” or “fine-tuned” or “violating free will.”

Table 2

Again, that means you need information from the future controlling the instruction set sent from the source, if you’re thinking dynamically. However, Hance et al. do not think dynamically, writing:

In the supermeasured models that we consider, the distribution of hidden variables is correlated with the detector settings at the time of measurement. The settings do not cause the distribution. We prefer to use find [sic] Adlam’s terms—that superdeterministic/supermeasured theories apply an “atemporal” or “all-at-once” constraint—more apt and more useful.

Indeed, they voice collectively the same sentiment about retrocausality that Sabine voiced alone in her quote above. They write:

In some parts of the literature, authors have tried to distinguish two types of theories which violate Bell-SI. Those which are superdetermined, and those which are retrocausal. The most naive form of this (e.g. [6]) seems to ignore the prior existence of the measurement settings, and confuses a correlation with a causation. More generally, we are not aware of an unambiguous definition of the term “retrocausal” and therefore do not want to use it.

In short, there does seem to be an emerging consensus between the camps calling themselves superdeterministic and retrocausal that the best way to view violations of Statistical Independence is in “all-at-once” fashion as in Geroch’s quote:

There is no dynamics within space-time itself: nothing ever moves therein; nothing happens; nothing changes. In particular, one does not think of particles as moving through space-time, or as following along their world-lines. Rather, particles are just in space-time, once and for all, and the world-line represents, all at once, the complete life history of the particle.

Regardless of the terminology, I would point out that Sabine is not merely offering an interpretation of QM, but she is proposing the existence of a more fundamental (deterministic) theory for which QM is a statistical approximation. In this paper, she even suggests “what type of experiment has the potential to reveal deviations from quantum mechanics.” Specifically:

This means concretely that one should make measurements on states prepared as identically as possible with devices as small and cool as possible in time-increments as small as possible.

According to this article in New Scientist (published in May 2021):

The good news is that Siddharth Ghosh at the University of Cambridge has just the sort of set-up that Hossenfelder needs. Ghosh operates nano-sensors that can detect the presence of electrically charged particles and capture information about how similar they are to each other, or whether their captured properties vary at random. He plans to start setting up the experiment in the coming months.

We’ll see what the experiments tell us.

PhD in general relativity (1987), researching foundations of physics since 1994. Coauthor of “Beyond the Dynamical Universe” (Oxford UP, 2018).

I am interested in what people get from those papers also, in addition to this paper:

Hidden assumptions in the derivation of the Theorem of Bell

https://arxiv.org/abs/1108.3583

Maybe a new thread should be started though?

"

The work of De Raedt et al. has nothing to do with this thread. So yes, a new thread would be appropriate if you choose to pursue it. You should also be specific about what element of their team's work you are interested in. Keep in mind that it is not generally accepted science, as there are literally dozens of papers that make similar assertions about Bell's Theorem. Those have yet to gain traction within the community, any more than Superdeterminism* has.

Their work (Hess, De Raedt, Michielsen) approaches things from a different perspective, and there is a lot of computer science involved. I know a bit about it, and would be happy to discuss where I can add something useful.

*I would say that Superdeterminism ("The Great Conspiracy") as a hypothesis has received virtually nil serious consideration to date. For the obvious reason that its very premise means it can never add anything to our understanding of the quantum world, either theoretically or experimentally. (The same is true of all conspiracy theories, of course.) As FRA says in post #141 above: "If the laws or rules and initial conditions required for the SD to work, can not be learned by an actual inside observer, then it's predictive and explanatory value is essentially zero (even if it, in some sense would be true)."

Which leads us to the topic: what "paradigm" does make sense for an agent, if the Newtonian paradigm does not?

"

And the only answer at this point is that this is an open area of research. We're certainly not going to resolve that issue here.

"

Compare the task of Riemannian geometry to define curvatures in terms of intrinsic curvatures only, rather than imagining the curved surface embedded in a flat environment. The perspective with intrinsic vs extrinsic information theory is the same here.

"

No, it isn't, because modeling a spacetime in GR using intrinsic curvature does not require the spacetime to be embedded in any higher dimensional space, and we have no evidence that our universe is so embedded.

Whereas, we know all agents in our universe are embedded in the universe as a whole. And we also know that all agents process information from the rest of the universe and act on the rest of the universe. So there is a huge difference from the case of modeling a spacetime using intrinsic curvature.

"

So by information I include also the "prior implicit information"

"

Do you have a reference for this?

the above comments assume that the concept of "information" being used (a) makes sense, and (b) is the relevant one to use for analyzing, say, learning by humans. I would like to see more than just an assertion from you of those points.

"

Perhaps it will help if I describe a different concept of "information".

Suppose I write a computer program to accomplish some non-trivial task. Consider the bits in my computer's memory that store the program code. Before I write and execute the program, those bits store arbitrary values that do not enable the accomplishment of anything useful. Afterwards, those bits store a very particular set of values that do enable the accomplishment of something useful.

I might describe this process as storing information in those bits. The whole process could be perfectly deterministic. But it certainly seems like information is there in the computer's memory that wasn't there before. Where did the information come from?

At least one common answer is that "information" here means negentropy: the process of setting the bits in the computer's memory to the particular values that encode my program is a large decrease of entropy, because instead of being random bits, corresponding to a large phase space volume (the phase space volume containing all of the bit patterns storable in those bits that don't accomplish anything useful), they are now a very particular bit sequence, corresponding to a tiny phase space volume (the phase space volume of all the bit patterns storable in those bits that accomplish exactly the same thing as my program does). And, according to the second law, I must have increased my own entropy by at least as much in order to accomplish this: all the pizza and soda and Doritos I consumed while writing the program that then got converted into the energy to operate my brain and body, with this metabolic process involving a large entropy increase. And again, this whole process could be perfectly deterministic.

@Fra, whatever concept of "information" you are using, it would not appear to be the concept described above. So what is it?

If the future state is implied by the past, the past encodes the same information as the future.

"

These statements only apply to the entire universe. They do not apply to tiny subsystems of the entire universe.

"

So wherein lies the learning or information gain??

"

Information can get transferred between subsystems even if, over the entire universe, the total information is constant.

Btw, the above comments assume that the concept of "information" being used (a) makes sense, and (b) is the relevant one to use for analyzing, say, learning by humans. I would like to see more than just an assertion from you of those points.

Even QM as it stands today follows the Newtonian paradigm, which is characterised by

1. timeless state space (q,p) in classical physics and the quantum state space in QM

2. fixed (timeless, nonchanging) dynamical LAWS (i.e., Hamiltonian etc.)

3. The state that changes within the state space as per dynamical evolution laws

"

Quantum field theory doesn't fit this pattern. #1 can sort of apply if you have a closed system, but most systems of interest are not closed. #2 applies only in a very weak sense, as phase transitions can change the effective dynamical laws. #3 doesn't apply because, as a relativistic theory, QFT does not have a "state" that changes with "time"; it can't, because that would require an invariant concept of "now", and there is none in relativity.

in this paradigm there is no regular learning in time

"

Sure there is. "Learning" is a process that happens as part of your #3, the state changing in accordance with dynamical laws.

"

The implicit conjecture, though, is that the "chosen" actions of an IGUS/agent are independent of what input appears to it as random noise

"

Only in the sense that the agent won't perceive any pattern in random noise, so it won't take any action that depends on perceiving a pattern.

But if, for example, I have a white noise sound generator and I turn up the volume very high, you're going to do something in response. It won't be anything based on perceiving a pattern in the noise, but that doesn't mean you won't choose any action at all. You sort of acknowledge this:

"

except possibly to the extent that the processing of the noise itself has indirect influences (as it's hard to screen yourself from noise, even if its useless)

"

But doing something to avoid the noise isn't the same as "processing" the noise.

"

This, for example, would suggest that agents that can't communicate (because they see each other's messages as just noise) also decouple in their physical interactions.

"

This doesn't follow at all. I don't need to communicate with you to hit you over the head with a baseball bat and take your wallet. "Physical interactions" is much, much broader than just communication.

By that term is meant that from the perspective of any real agent (ie a coherent part of the universe having finite mass etc), it is not possible to encode the state space of the universe

"

Yes.

"

this is why a real agent learning means the statespace and theory space is constantly revised

"

Meaning, the agent's model of the rest of the universe? Of course, this is obvious.

"

This is how information in biological systems is stored over evolution.

"

Information in the genes, yes.

"

it's not how information is stored in the newtonian paradigm

"

Why not? It's perfectly possible in Newtonian physics to have one piece of the universe encoding some finite amount of information about other parts of the universe, or some finite model of the rest of the universe.

"

This argument is related to Lee Smolin's arguments https://arxiv.org/abs/1201.2632, which are also a critique of the "Newtonian paradigm" as he calls it.

"

This isn't an argument, it's a speculative hypothesis which we have no way of testing, now or in the foreseeable future.

"

all one can infer is that it's random

"

One can't even infer that. The choices could be pseudo-random, in the sense that computers have "random number generators" which are deterministic (give them the same starting seed and they will output the same sequence of numbers), but whose output meets all statistical tests of randomness when properly seeded. Human brains could use the same sort of thing to generate "random" choices when needed; there's no need for "intrinsic" randomness in the sense of some interpretations of QM.
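As a minimal sketch of the point about deterministic "random number generators", the following uses Python's standard `random.Random` class; the function name `pseudo_random_choices` and the "R"/"G" choice labels are illustrative assumptions.

```python
import random

def pseudo_random_choices(seed, n=10):
    """Return n 'random' choices from a generator started with the given seed.
    The sequence is fully determined by the seed."""
    rng = random.Random(seed)
    return [rng.choice(["R", "G"]) for _ in range(n)]

first_run = pseudo_random_choices(seed=42)
second_run = pseudo_random_choices(seed=42)

# Same seed, same sequence: nothing intrinsically random is involved.
print(first_run)
print(first_run == second_run)
```

An observer who sees only the output, and not the seed, has no way to distinguish this sequence from an intrinsically random one by inspection alone.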

If my memory serves me right, I've seen a number of authors indicate free will being incompatible with determinism.

"

Yes, that's correct. Incompatibilism (Dennett sometimes calls it "libertarianism", not to be confused with the political party or philosophy of that name) vs. compatibilism is one of the long-running debates in this field.

As a philosophy student, I take a great deal of interest in this topic. Do you have any specific references on it, that I could attempt to access via my university library?

"

Actually most of my knowledge of the literature comes from the books on free will by Daniel Dennett, Elbow Room and Freedom Evolves, both of which have extensive bibliographies listing other books and papers. The point of view I have been describing is basically the one Dennett argues for in those books.

it seems to me the notion of true novel learning, resonates badly with determinism

"

Of course, throwing in vague terms like "true novel learning" can make it seem that way, but that's a problem with you using vague terms, not with determinism.

Everything we know about how actual learning works in living things is completely consistent with the underlying laws of physics being deterministic. If you want to claim that that kind of learning isn't "true novel learning", of course I can't stop you, but I don't see why I should care. The kind of learning that enabled me to learn, say, General Relativity or quantum mechanics is the kind of learning I care about, and that kind of learning is perfectly consistent with determinism.

"

if one really believes in eternal timeless laws that are deterministic at fundamental level, then the notion of experimenters free choice must be an illusion

"

This is false. There are cogent concepts of free will that are perfectly consistent with determinism. The literature on compatibilism, which is what I have just described, is voluminous. You can, of course, just decide not to accept any of that literature as valid, but once again, I don't see why I should care. The kind of free will that enables me to choose what to post here, or what job to take, or what house to buy, or what person to marry, is the kind of free will I care about, and is the same kind of free will that enables experimenters to choose what experiments to run. And the literature on compatibilism shows that that kind of free will is perfectly consistent with determinism.

No, not if you don't mind fine tuning

"

So then you are claiming that if our universe is deterministic, we can only learn things if it's also superdeterministic?

In “Quantum measurements and new concepts for experiments with trapped ions”, Ch. Wunderlich and Ch. Balzer remark:

"

As I have already pointed out, "negative result measurements" are irrelevant to this thread since no such measurements are involved in the experiments being discussed.

Here, @RUTA is completely right. What are the details of the "open system" explanation?

"

As I pointed out to @RUTA, this is backwards. The claim that angular momentum conservation is violated is an extraordinary claim, so that claim is the one that needs to have a detailed explanation that takes into account that the measured systems are open systems. Pointing out that the measured systems are open systems is just making clear what any valid supporting argument for the claim that angular momentum is not conserved would have to include.

Why the systems being open systems is relevant, as I have already pointed out multiple times, should be obvious: angular momentum (and other conserved quantities) can be exchanged during the measurement.

If you believe in "determinism", I would be the first to happily argue that it seems inconsistent to not go all the way and suggest superdeterminism.

"

I don't see why. Superdeterminism adds to ordinary determinism a claim (in my view highly implausible) about precise fine-tuning of initial conditions, in order to ensure that all measurement results come out just right to make us believe that the correct laws of physics are quantum mechanics, when in fact they are completely different.

"

This is in contrast to, say, a real computable algorithm for how a real observer can LEARN from its interaction and make predictions about its environment and own future.

"

Are you claiming that this is only possible if the actual physics of our world is not deterministic?

In negative-result measurements

"

Which are irrelevant to this discussion since no such measurements are involved in the experiments being discussed.

No one here has shown me how the Bell states account for the missing conserved quantities per this "open system" explanation of entanglement (via classical thinking) only when Alice and Bob make different measurements.

"

The Bell states don't account for it because they only describe the measured particles, not the measurement apparatus and its environment. In other words, the mathematical model consisting of the Bell states is simply inadequate to even analyze the question of whether angular momentum is conserved in Alice's and Bob's measurements, because it does not capture all of the physical systems involved in those measurements.

To even analyze conservation laws during measurements, you need to build a model that includes all of the physical systems involved, since the interactions between them can exchange conserved quantities. Surely this is obvious?

"

"open system" explanation (which I cannot follow at all)

"

I find this statement astounding. You cannot follow the simple fact that, during the measurements, the particles being measured interact with the measuring devices and their environments, and therefore are open systems (open systems being systems that are not isolated and interact with other systems), and that these interactions can exchange angular momentum?

Measuring the single-particle spins in different directions, you only have probabilities for the possible outcomes, and of course some correlation between these outcomes, but you cannot prove or disprove angular-momentum conservation with those measurements, because the correlations are not 100% anymore. That's at the heart of the entire issue of entanglement and also of the content of the Bell inequalities for local realistic HV theories being in contradiction to the predictions of QT.

Do you have a more precise definition and argument that would clarify what you mean here?

"

LOL, you tell me! All I can figure out is:

– It's gotta be local, by definition (that's the point after all). So the predetermined "answer" for say, an electron having its spin measured today: it must be able to consult that local object (which conceivably could be hidden inside the electron, making the electron a composite particle).

– It must also have something that says what its spin will be when measured tomorrow. And if it has existed since the big bang, then it needed instructions for 13.8 billion years of measurements (interactions).

“Why the Tsirelson Bound? Bub’s Question and Fuchs’ Desideratum,” W.M. Stuckey, Michael Silberstein, Timothy McDevitt, and Ian Kohler. Entropy 21(7), 692 (2019).

“Re-Thinking the World with Neutral Monism: Removing the Boundaries Between Mind, Matter, and Spacetime,” Michael Silberstein and W.M. Stuckey. Entropy 22(5), 551 (2020).

“Answering Mermin’s Challenge with Conservation per No Preferred Reference Frame,” W.M. Stuckey, Michael Silberstein, Timothy McDevitt, and T.D. Le. Scientific Reports 10, 15771 (2020).

“The Completeness of Quantum Mechanics and the Determinateness and Consistency of Intersubjective Experience: Wigner’s Friend and Delayed Choice,” Michael Silberstein and W.M. Stuckey. In Consciousness and Quantum Mechanics, edited by Shan Gao (Oxford University Press, 2022) 198–259.

“Beyond Causal Explanation: Einstein’s Principle Not Reichenbach’s,” Michael Silberstein, W.M. Stuckey, and Timothy McDevitt. Entropy 23(1), 114 (2021).

“Introducing Quantum Entanglement to First-Year Students: Resolving the Trilemma,” W.M. Stuckey, Timothy McDevitt, and Michael Silberstein.

“No Preferred Reference Frame at the Foundation of Quantum Mechanics,” W.M. Stuckey, Timothy McDevitt, and Michael Silberstein. Entropy 24(1), 12 (2022).

“Einstein’s Missed Opportunity to Rid Us of ‘Spooky Actions at a Distance’,” W.M. Stuckey. Science X Dialogs (12 October 2020).

“Quantum Information Theorists Produce New ‘Understanding’ of Quantum Mechanics,” W.M. Stuckey. Science X Dialogs (6 January 2022).

How Quantum Information Theorists Revealed the Relativity Principle at the Foundation of Quantum Mechanics

A Principle Explanation of the “Mysteries” of Modern Physics

Answering Mermin’s Challenge with the Relativity Principle

Exploring Bell States and Conservation of Spin Angular Momentum

The Unreasonable Effectiveness of the Popescu-Rohrlich Correlations

Why the Quantum | A Response to Wheeler’s 1986 Paper

Beyond Causal Explanation: Einstein's Principle Not Reichenbach's

No Preferred Reference Frame in Quantum Mechanics (Non-technical)

No Preferred Reference Frame in Quantum Mechanics (Technical)

Making Sense of Quantum Mechanics per Its Information-Theoretic Reconstructions (invited talk at the Institute for Quantum Optics and Quantum Information in Vienna, April 2022).

Again, this explanation is straightforward and in perfect accord with textbook QM. In the photon polarizer example, classical physics says half of a vertically polarized photon should pass through a polarizer at 45 deg. Since QM says the photon either passes or it doesn't, it is impossible to satisfy our classical model of a polarizing filter in that case, but on average, half of all photons do pass. So we see that QM satisfies classical expectations via average-only transmission. When that photon is one of a pair in a Bell state, that leads to average-only conservation of spin angular momentum (spin-1 in this case) when Alice and Bob are making different measurements.
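The average-only transmission in the polarizer example can be illustrated with a small simulation. This is a sketch under stated assumptions: each photon is taken to pass independently with the standard probability ##\cos^2\theta## for a polarizer at angle ##\theta## to the photon's polarization, and the names `pass_probability` and `simulate_photons` are hypothetical.

```python
import math
import random

def pass_probability(angle_deg):
    """Probability that a single photon passes a polarizer set at
    angle_deg relative to the photon's polarization (cos^2 rule)."""
    return math.cos(math.radians(angle_deg)) ** 2

def simulate_photons(angle_deg, n_photons, seed=0):
    """Fraction of photons that pass, each one either passing or not.
    Assumption: photons behave independently; seed fixes the run."""
    rng = random.Random(seed)
    p = pass_probability(angle_deg)
    passed = sum(rng.random() < p for _ in range(n_photons))
    return passed / n_photons

# No single photon is "half transmitted", but the average approaches 1/2.
print(pass_probability(45))
print(simulate_photons(45, 100_000))
```

Each trial yields only pass or no pass, yet the fraction of photons that pass approaches the classical value of one half as the number of trials grows.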

This also maps to the key difference between classical probability theory and quantum probability theory per Information Invariance & Continuity. A classical bit (e.g., opening one of a pair of boxes to find a ball or not) has only discrete measurement options while a quantum bit has continuous measurement options (pure states connected via continuously reversible transformations). There are only the two boxes to open for the classical bit example yielding a ball or no ball, but the polarizer can be rotated continuously in space yielding pass or no pass in every direction.

So, everything I'm sharing on PF has been thoroughly vetted in the foundations community and agrees perfectly with conventional QM thinking (which violates classical thinking in this case). I'm doing my best to explain that here, but the reader must set aside their classical prejudices when dealing with QM in order to follow what I'm saying.

If those who are trying to explain the Bell states via "open systems" ever publish, post or otherwise provide the details, please send me a link via a Physics Forums Conversation. Now I have to get back to work … on our corresponding book :-)

And as I explained to you in those discussions, everything I am saying follows mathematically from the Bell states. There is absolutely nothing wrong with my statements. That's why it's been published numerous times in various contexts now. I have no idea what confuses you about it, so I can't help you there. Sorry.

"

It's a misconception about the meaning of conservation laws on your side. The point is, as I tried to explain to you several times, that if you have prepared the singlet state of two spin-1/2 spins and you measure the angular-momentum components in non-collinear directions, you cannot say more concerning angular-momentum conservation than the probabilities for getting each of the four possible outcomes since all that angular-momentum conservation tells you is that spin components when measured in the same direction are always opposite to each other, given the preparation of the two spins in the spin-singlet state. Analogous statements of course also hold when you have prepared the system in one of the three spin-1 states.

It's also clear that when the system interacts with something else ("the environment"), i.e., when you have the system as an open system, then angular momentum can be exchanged with the environment, and the angular momentum of the system under consideration need not be conserved. That's the same as in classical mechanics.
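For reference, the probabilities of the four possible outcomes mentioned above for the spin-singlet state can be sketched as follows, using the standard QM result that the outcomes agree with probability ##\sin^2(\theta/2)## when the measurement directions differ by the angle ##\theta##. The function name `singlet_joint_probabilities` and the outcome labels are illustrative assumptions.

```python
import math

def singlet_joint_probabilities(theta_deg):
    """Outcome probabilities for a spin-singlet pair when Alice and Bob
    measure along directions separated by the angle theta_deg."""
    half = math.radians(theta_deg) / 2
    p_same = 0.5 * math.sin(half) ** 2      # each of (+,+) and (-,-)
    p_opposite = 0.5 * math.cos(half) ** 2  # each of (+,-) and (-,+)
    return {"++": p_same, "--": p_same, "+-": p_opposite, "-+": p_opposite}

for theta in (0, 60, 90, 180):
    print(theta, singlet_joint_probabilities(theta))
```

At ##\theta = 0## the outcomes are always opposite, which is the only case in which the measurement results alone fix the relation between the two spin components.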

I'm simply pointing out the QM facts.

"

"The QM facts" do not include a claim that angular momentum is not conserved. Only you are making that claim, not "standard QM".

Indeed, when we pass to quantum field theory as the basis for "standard QM", we find that QFT asserts that angular momentum is conserved, by Noether's theorem, as long as the Lagrangian is rotationally invariant–which it is for the cases you are discussing.

If it's not conserved, where is it going on a trial-by-trial basis? And why does it not disappear when they make the same measurement?

"

My answers are already implicit in what I've said before. I don't see the point of belaboring it.

I would, however, point out that you are looking at this backwards. The one making an extraordinary claim in this discussion is you, not me. Consequently, the burden of proof is on you to demonstrate that angular momentum is not conserved in the processes you described; it is not on me to show how it is conserved. And to meet that burden, it should be obvious that you cannot rely on a model that does not include all interactions involved that can exchange angular momentum. If you want to claim that angular momentum is not conserved in these measurements, you need to build a model that includes all the relevant interactions and shows how angular momentum is not conserved when they are all taken into account. You have not done that.

"

A measurement destroys the Bell state regardless of whether or not they measure at the same angle.

"

Yes, that is true. But you are basing your claim of non-conservation of angular momentum only on what happens when the angles are not the same. As far as I can tell, you are saying that angular momentum is conserved when the angles are the same.

"

what do you mean "stays in the eigenstate in which it was prepared"? The state refers to any direction in the symmetry plane because it's rotationally invariant.

"

Yes; that rotationally invariant state is the state that is prepared. Are you saying this state is not an eigenstate of angular momentum? If so, how can you possibly make any claim about angular momentum being conserved or not conserved, when it doesn't even start out with a well-defined value?

I've already given my answer several times: you are only evaluating conservation of angular momentum using the measured particles. But the measured particles are not a closed system. So you should not expect angular momentum to always be conserved if you only look at the measured particles. So the fact that you find that it isn't is not a problem.

"

If it's not conserved, where is it going on a trial-by-trial basis? And why does it not disappear when they make the same measurement? Where is that information in the wave function? How could it possibly be in the wave function, since you're talking about any number of possible measurement techniques? You're being way too vague here. Again, the physical measurement outcome is always the same, there is no variation in the amplitude of the outcome as Bob and Alice rotate their SG magnets. You haven't addressed that issue at all.

You can do the same thing with polarizers and photons. When you send a single vertically polarized photon through a polarizer at 45 deg, either it passes or it doesn't, but classically we're supposed to get 1/2 a photon. It's quantum, so half photons don't exist. That's why we have average-only transfer of momentum. It has nothing to do with measurement devices per se; average-only conservation (for entangled photon pairs) is all about discrete (average only) versus continuous (exact) outcomes.
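The polarizer example can be sketched numerically. The following is a minimal Python illustration, assuming Malus's law gives the single-photon pass probability ##\cos^2## of the relative angle; the function names `pass_probability` and `simulate` are hypothetical, chosen only for this sketch. Every trial yields a whole photon or none, and only the average approaches the classical fraction of 1/2.

```python
import math
import random


def pass_probability(angle_deg):
    """Malus's law for one photon: probability of passing a polarizer
    rotated by angle_deg relative to the photon's polarization."""
    return math.cos(math.radians(angle_deg)) ** 2


def simulate(angle_deg, trials, seed=0):
    """Return a list of 0/1 outcomes: each photon passes whole or not at all."""
    rng = random.Random(seed)  # seeded so the run is repeatable
    p = pass_probability(angle_deg)
    return [1 if rng.random() < p else 0 for _ in range(trials)]


outcomes = simulate(45.0, 100_000)
mean_transmission = sum(outcomes) / len(outcomes)
print(pass_probability(45.0), mean_transmission)
```

The sketch only reproduces the statistics of discrete outcomes; it does not model the polarizer or any exchange with it.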

"

The correlations between Bob's and Alice's measurements are explained by the entangled states that you prepared them in. I am not saying that the prepared states are not the states you use in your model. Of course they are. And those prepared states are sufficient to account for the measurement results. So I don't know what you are asking me to answer with regard to how the measurement results are to be explained. "Standard QM" of course explains them just fine, and I have not said otherwise.

"

Then you should have no concern at all with what I said. But, for some reason, you think what I'm saying is crazy or wrong. I'm simply pointing out the QM facts.

"

The claim you are making, however, goes beyond using the prepared state to explain the measurement results. Your claim is basically this: the two-particle system is prepared in an eigenstate of total angular momentum (parallel spin and zero orbital angular momentum–the latter is not explicitly specified, but is implicit in your model). But conservation of angular momentum then requires that the two-particle system stays in that eigenstate after measurement–and this is not what we observe in cases where Alice's and Bob's measurement angles for spin are different. So conservation of angular momentum must be violated in those cases; all we have is "average conservation" over many trials.

"

A measurement destroys the Bell state regardless of whether or not they measure at the same angle. I don't know what you're trying to say here.

"

My response to this is that your claim is based on a false premise. It is not true that the two-particle system must stay in the eigenstate of angular momentum in which it was prepared, after the measurement. The system interacts with measuring devices, and this interaction can exchange angular momentum between the system and the measuring devices (and their environments). So it is not valid to argue that angular momentum is not conserved on the basis that, when Alice's and Bob's measurement angles are different, the two-particle system does not end up in the same eigenstate of angular momentum in which it started.

Nothing you have said responds to this argument.

"

Again, what do you mean "stays in the eigenstate in which it was prepared"? The state refers to any direction in the symmetry plane because it's rotationally invariant. Therefore, I can let Alice's direction be the direction of the Bell state or I can let it be Bob's. Who is measuring the "right" direction in your explanation?

What's your answer?

"

I've already given my answer several times: you are only evaluating conservation of angular momentum using the measured particles. But the measured particles are not a closed system. So you should not expect angular momentum to always be conserved if you only look at the measured particles. So the fact that you find that it isn't is not a problem.

The correlations between Bob's and Alice's measurements are explained by the entangled states that you prepared them in. I am not saying that the prepared states are not the states you use in your model. Of course they are. And those prepared states are sufficient to account for the measurement results. So I don't know what you are asking me to answer with regard to how the measurement results are to be explained. "Standard QM" of course explains them just fine, and I have not said otherwise.

The claim you are making, however, goes beyond using the prepared state to explain the measurement results. Your claim is basically this: the two-particle system is prepared in an eigenstate of total angular momentum (parallel spin and zero orbital angular momentum–the latter is not explicitly specified, but is implicit in your model). But conservation of angular momentum then requires that the two-particle system stays in that eigenstate after measurement–and this is not what we observe in cases where Alice's and Bob's measurement angles for spin are different. So conservation of angular momentum must be violated in those cases; all we have is "average conservation" over many trials.

My response to this is that your claim is based on a false premise. It is not true that the two-particle system must stay in the eigenstate of angular momentum in which it was prepared, after the measurement. The system interacts with measuring devices, and this interaction can exchange angular momentum between the system and the measuring devices (and their environments). So it is not valid to argue that angular momentum is not conserved on the basis that, when Alice's and Bob's measurement angles are different, the two-particle system does not end up in the same eigenstate of angular momentum in which it started.

Nothing you have said responds to this argument.

The ##\pm 1## prediction at all angles means that the net angular momentum exchange between the measured particles and the measuring devices (i.e., the vector sum of the exchanges from both measurements) must vary by angle (more precisely, by the difference in angle between the two measurements) if total angular momentum is to be conserved. (Note that the angular momentum that is exchanged does not have to be spin; it can be orbital, since what needs to be conserved is total angular momentum, not spin alone.) And that is what we would expect since we expect the angular momentum vector describing the exchange in each individual measurement to vary with the orientation of the measuring device.

"

Alice and Bob obtain the same physical outcomes ##\pm 1## at all angles. When they happen to make a measurement at the same angle, they always get the same result, both get +1 or both get -1, per conservation of spin angular momentum. Now suppose in trial 1, Alice and Bob measured at the same angle and both obtained +1. In trial 2 Bob changed to ##\theta## with respect to Alice, who got +1, and he got +1. In trial 3, they did the same measurements as in trial 2 with Alice getting +1 and Bob getting -1. How does conservation of spin angular momentum per the Bell states account for trials 2 and 3?

My answer to that question is just the standard understanding of QM. That is, classically speaking, if our source is producing a pair of particles with equal and aligned angular momenta in some direction (magnitude +1) and Alice measures +1 and Bob measures in the same direction as Alice, he will get +1. If Bob measures at ##\theta## relative to Alice when she gets +1, then of course Bob will measure ##\cos{\theta}##. All of that is in accord with conservation of angular momentum. Since QM results typically average to what is expected from classical physics, it's no surprise at all (at least for me and the referees of our papers) that QM predicts Bob's ##\pm 1## outcomes will average to ##\cos{\theta}## in those trials when Alice measured +1, per conservation of angular momentum. Ditto in reverse.

What's your answer?

Is that what you would say about the astronaut?

"

In the case of the astronaut, the astronaut plus the flashlight is a closed system, at least as far as the astronaut throwing the flashlight is concerned. So I don't see the analogy with the case we are discussing.

So we do need to find a valid theory that works. Sean Carroll advocates for the “many worlds” interpretation. … But I have to believe that, since there are legitimate scientists who believe SD is a possible reality, it is at least POSSIBLE that a theory can be developed. It just seems odd that most of the arguments I have read by physicists against SD are emotional, opinionated arguments dealing with free will, and that “many worlds” is considered a better alternative to SD, even though Bell recognized SD as a possible loophole to his theorem. Did he just not think it through before he made that statement? Is there something in the points that you make above that John Bell was not aware of? Specifically, something that has been discovered after Bell that invalidates his claim?

"

1. I won't defend MWI, you can see the reasoning in favor of it in papers about it. I think an honest assessment will admit it is viable, and as best I understand it there is no net creation of matter/energy involved regardless of the number of worlds. But I could be wrong.

2. I am not sure what you mean about "emotional arguments", but for SD to work as a local realistic solution:

There must be a locally accessible "master plan" particle/field/property/object that instructs each quantum interaction how to act (i.e. to provide the outcome of every measurement). This master plan would have object copies in every region of space (to be local), and must provide "answers" (measurement outcomes) for at least 13.8 billion years of history of particles/energy being created/destroyed/transformed, etc. And it must do so in a manner so that the "true" quantum statistics (to explain Bell's result) are hidden from inquiring human experimentalists investigating Quantum Theory, which provides an accurate prediction of the observed statistics.

Really, I don't know where one starts to develop this from "handwaving speculation" to a credible hypothesis or theory. But I guess it is "possible" someone might do so in the future, in which case we would have something to critique (or perhaps test, although that is not really a requirement for an interpretation). As it is now, SD is impossible to critique precisely because its supporters give it such amazing elements/powers – of course constructed on the fly – that no criticism can topple it. This is no different than invoking the existence of an omniscient omnipotent deity, by the way.

3. Bell was not a believer in Superdeterminism. Like most, he threw that out to demonstrate how far you would need to go to keep local realism (post Bell's Theorem). He didn't need to come up with any details, leaving it to the audience to draw their own conclusions. (He could just as easily have invoked the above deity making the same decisions.)

Bell (1985ish): "There is a way to escape the inference of superluminal speeds and spooky action at a distance. But it involves absolute determinism in the universe, the complete absence of free will. Suppose the world is super-deterministic, with not just inanimate nature running on behind-the-scenes clockwork, but with our behavior, including our belief that we are free to choose to do one experiment rather than another, absolutely predetermined, including the "decision" by the experimenter to carry out one set of measurements rather than another, the difficulty disappears. There is no need for a faster than light signal to tell particle A what measurement has been carried out on particle B, because the universe, including particle A, already "knows" what that measurement, and its outcome, will be."

Unsaid: what created the original map (master plan); and how did it calculate, store and hide all of the future outcomes? How does an entangled particle know how to read the map so it can acquire the proper spin (when spin correlations are being studied in a lab)?

Is that what you would say about the astronaut?

"

What astronaut?

"

Now it looks like you want to invoke counterfactual definiteness for the particles' spins

"

I don't know where you are getting that from. I am only talking about the spin measurement results that are actually observed, not about any counterfactual ones.

"

How would your explanation account for the ##\pm 1## prediction at all angles

"

The ##\pm 1## prediction at all angles means that the net angular momentum exchange between the measured particles and the measuring devices (i.e., the vector sum of the exchanges from both measurements) must vary by angle (more precisely, by the difference in angle between the two measurements) if total angular momentum is to be conserved. (Note that the angular momentum that is exchanged does not have to be spin; it can be orbital, since what needs to be conserved is total angular momentum, not spin alone.) And that is what we would expect since we expect the angular momentum vector describing the exchange in each individual measurement to vary with the orientation of the measuring device.

Which means you cannot use them as a basis for claims about conservation laws.

"

Is that what you would say about the astronaut?

"

This is much too vague. I would say that the exchange of angular momentum between the measured particle and the measuring device would vary based on the orientation of the measuring device. Which is precisely the kind of variation that could maintain conservation of total angular momentum in cases where the two entangled particles have their spins measured in different orientations.

"

Now it looks like you want to invoke counterfactual definiteness for the particles' spins (like Alice and Bob in my story). The reason I do that is precisely to show how it differs from the QM prediction of ##\pm 1## at all angles. How would your explanation account for the ##\pm 1## prediction at all angles, given it is supposedly accounting for transfer to the environment, which would certainly vary with angle?

the Bell spin states certainly do not attempt to capture such losses

"

Which means you cannot use them as a basis for claims about conservation laws.

"

Such losses would vary from situation to situation

"

This is much too vague. I would say that the exchange of angular momentum between the measured particle and the measuring device would vary based on the orientation of the measuring device. Which is precisely the kind of variation that could maintain conservation of total angular momentum in cases where the two entangled particles have their spins measured in different orientations.

Only if you assume that angular momentum conservation can be applied to the combined spin angular momentum of the measured systems taken in isolation, even though they are open systems during measurement and even though spin angular momentum is not conserved separately. But that assumption is false.

"

See post #114

See the bolded qualifier I added. My point is that you can't ignore what is being ignored in the definition of the Bell spin states, if you are going to make claims about conservation laws. Conservation laws don't apply to open systems in isolation. They also don't apply to particular pieces of a conserved quantity in isolation. Spin angular momentum is not conserved by itself; only total angular momentum is conserved. But only spin angular momentum of the measured particles is captured in the mathematical model using Bell states.

"

The bolded statement is exactly correct, assuming no losses to the measurement device. Such losses would vary from situation to situation even though the source of spin-entangled particles was the same in every experimental arrangement. Therefore, the Bell spin states certainly do not attempt to capture such losses, as they are not written in a form where one can enter specific experimental details.

everything I am saying follows mathematically from the Bell states

"

Only if you assume that angular momentum conservation can be applied to the combined spin angular momentum of the measured systems taken in isolation, even though they are open systems during measurement and even though spin angular momentum is not conserved separately. But that assumption is false.

I didn't mean that you are wrong; I meant the statements by @RUTA. We have had extended discussions about this repeatedly!

"

And as I explained to you in those discussions, everything I am saying follows mathematically from the Bell states. There is absolutely nothing wrong with my statements. That's why it's been published numerous times in various contexts now. I have no idea what confuses you about it, so I can't help you there. Sorry.

The Bell spin states are chosen to model conserved spin angular momentum if you ignore any exchange of angular momentum between the measured systems and measuring devices and environments, and if you ignore that spin angular momentum is not the same as total angular momentum.

"

See the bolded qualifier I added. My point is that you can't ignore what is being ignored in the definition of the Bell spin states, if you are going to make claims about conservation laws. Conservation laws don't apply to open systems in isolation. They also don't apply to particular pieces of a conserved quantity in isolation. Spin angular momentum is not conserved by itself; only total angular momentum is conserved. But only spin angular momentum of the measured particles is captured in the mathematical model using Bell states.

To my mind, you can find some relevant thoughts on MathPages in the article “On Cumulative Results of Quantum Measurements”.

https://www.mathpages.com/home/kmath419/kmath419.htm

"

This article doesn't talk at all about what I was talking about, namely, the exchange of conserved quantities (such as angular momentum) between measured systems and measuring devices (and environments).

How do you know? You're not measuring the exchange of angular momentum with the environment. That doesn't mean you can assume it doesn't happen. It means you don't know.

"

The Bell spin states are chosen to model conserved spin angular momentum. It's totally analogous to having an astronaut throw her flashlight in outer space so that conservation of momentum makes her move toward her spaceship. You write ##\vec{P}_{astronaut} + \vec{P}_{flashlight} = 0##. Of course if you wanted to confirm this you'd have to make measurements and that would introduce experimental uncertainty because momentum would be lost relative to the equation. But, that is not conveyed in the equation itself.

https://www.physicsforums.com/threa…elers-1986-paper-comments.952665/post-6056948

I didn't mean that you are wrong; I meant the statements by @RUTA. We have had extended discussions about this repeatedly!

"

Can you give any links to threads/posts?

I don't know. The point I have made is not one I have seen addressed in the literature. But that doesn't make it wrong.

"

I didn't mean that you are wrong; I meant the statements by @RUTA. We have had extended discussions about this repeatedly!

The question is: Does a quantum spin "exhibition" actually impart quantum spin to the surroundings?

"

"Impart quantum spin" is too narrow; it should be "exchange angular momentum". Quantum spin can be inter-converted with other forms of angular momentum.

I would be interested in seeing any references in the literature to analyses of measurement interactions that address this question.

Should this really be part of the Insights?

"

I don't know. The point I have made is not one I have seen addressed in the literature. But that doesn't make it wrong.

Sorry, these statements are simply false as a matter of what actually happens in an experiment. Measurement involves interaction between the measured system and the measuring device. That interaction can exchange conserved quantities. So it is simply physically invalid to only look at the measured systems when evaluating conservation laws.

"

I don't know how often we have discussed these wrong statements in the forum. Should this really be part of the Insights?

The Bell spin states obtain due to conservation of spin angular momentum without regard to any loss to the environment.

"

How do you know? You're not measuring the exchange of angular momentum with the environment. That doesn't mean you can assume it doesn't happen. It means you don't know.

"

the theoretical results I shared are independent of experimental uncertainties, which is what you're trying to invoke.

"

I don't know where you're getting this from. There can't be any experimental uncertainty in something that's not being measured. The fact that measurement involves interaction between the measured system and the measuring device is basic QM. But it does not imply that all aspects of that interaction are captured in the measurement result. In fact they practically never are.

Sorry, these statements are simply false as a matter of what actually happens in an experiment. Measurement involves interaction between the measured system and the measuring device. That interaction can exchange conserved quantities. So it is simply physically invalid to only look at the measured systems when evaluating conservation laws.

"

The Bell spin states obtain due to conservation of spin angular momentum without regard to any loss to the environment. Therefore, the theoretical results I shared are independent of experimental uncertainties, which is what you're trying to invoke.

It is impossible to conserve spin angular momentum exactly according to either Alice or Bob because they both always measure ##\pm 1## (in accord with the relativity principle), never a fraction. However, their results do average ##\pm \cos{\theta}## under these data partitions. It has nothing to do with momentum transfer with the measurement device.

"

Sorry, these statements are simply false as a matter of what actually happens in an experiment. Measurement involves interaction between the measured system and the measuring device. That interaction can exchange conserved quantities. So it is simply physically invalid to only look at the measured systems when evaluating conservation laws.

As I said, this can't be correct because during the measurement process angular momentum is exchanged between the measured particles, which the formalism you refer to describes, and the measuring devices and environment, which the formalism does not describe. So the formalism is incomplete and cannot support any claims about conservation laws.

My point has nothing to do with experimental uncertainty. It has to do with the fact that during measurement, the measured particles are open systems, not closed systems.

"

Look at a Bell spin triplet state in the symmetry plane. When Alice and Bob both measure in the same direction, they both get the same outcome, +1 or -1. That is due to conservation of spin angular momentum. Now suppose Bob measures at an angle ##\theta## with respect to Alice and they do many trials of the experiment. When Alice partitions the data according to her +1 or -1 results, she expects Bob to measure ##+\cos{\theta}## or ##-\cos{\theta}##, respectively, because she knows he would have also measured +1 or -1 if he had measured in her direction. Therefore, she knows his true, underlying value of spin angular momentum is +1 or -1 along her measurement direction, so he should be measuring the projection of that true, underlying value along his measurement direction at ##\theta## to conserve spin angular momentum. Of course, Bob can partition the data according to his ##\pm 1## equivalence relation and say it is Alice who should be measuring ##\pm \cos{\theta}## in order to conserve spin angular momentum. It is impossible to conserve spin angular momentum exactly according to either Alice or Bob because they both always measure ##\pm 1## (in accord with the relativity principle), never a fraction. However, their results do average ##\pm \cos{\theta}## under these data partitions. It has nothing to do with momentum transfer with the measurement device. All of this follows strictly from the Bell spin state formalism.
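The data-partition argument above can be checked numerically. Here is a minimal Python sketch, assuming the triplet state ##(|\uparrow\uparrow\rangle + |\downarrow\downarrow\rangle)/\sqrt{2}## with both measurement directions in the x-z plane; that state choice and the function names (`eigvec`, `joint_prob`, `bob_average_given_alice`) are assumptions made for illustration, not taken from the posts. It computes Bob's average outcome in the trials where Alice obtained a given result.

```python
import math


def eigvec(angle, outcome):
    """Eigenvector (real 2-tuple) of the spin operator along `angle`
    in the x-z plane, for outcome +1 or -1."""
    h = angle / 2.0
    if outcome == +1:
        return (math.cos(h), math.sin(h))
    return (-math.sin(h), math.cos(h))


def joint_prob(alpha, beta, a, b):
    """Probability that Alice (angle alpha) gets a and Bob (angle beta)
    gets b, for the assumed state (|up,up> + |down,down>)/sqrt(2)."""
    ea, eb = eigvec(alpha, a), eigvec(beta, b)
    amplitude = (ea[0] * eb[0] + ea[1] * eb[1]) / math.sqrt(2)
    return amplitude ** 2


def bob_average_given_alice(alpha, beta, a):
    """Average of Bob's +/-1 outcomes over the trials where Alice got a."""
    p_plus = joint_prob(alpha, beta, a, +1)
    p_minus = joint_prob(alpha, beta, a, -1)
    return (p_plus - p_minus) / (p_plus + p_minus)


theta = math.pi / 3  # Bob's angle relative to Alice
print(bob_average_given_alice(0.0, theta, +1), math.cos(theta))
print(bob_average_given_alice(0.0, theta, -1), -math.cos(theta))
```

Each individual outcome is still ##\pm 1##; only the conditional averages equal ##\pm \cos{\theta}##. The sketch uses the two-particle state alone, so it cannot address whether angular momentum is exchanged with the measuring devices.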

My claim is a mathematical fact that follows from the Bell state formalism alone.

"

As I said, this can't be correct because during the measurement process angular momentum is exchanged between the measured particles, which the formalism you refer to describes, and the measuring devices and environment, which the formalism does not describe. So the formalism is incomplete and cannot support any claims about conservation laws.

"

It has nothing to do with experimental uncertainty.

"

My point has nothing to do with experimental uncertainty. It has to do with the fact that during measurement, the measured particles are open systems, not closed systems.

I don't think this claim can be asserted as fact at our current level of knowledge. When we make measurements on quantum systems, we bring into play huge sinks of energy and momentum (measuring devices and environments). But we don't measure the change in energy and momentum of the sinks. We only look at the measured systems. But if a measurement takes place, the measured systems are not closed systems and we should not in general expect them to obey conservation laws in isolation; they can exchange energy and momentum with measuring devices and environments. To know that conservation laws were violated we would have to include the changes in energy and momentum of the measuring devices and environments. But we don't. So I don't see that we have any basis to assert what you assert in the above quote. All we can say is that we have no way of testing conservation laws for such cases at our current level of technology.

"

My claim is a mathematical fact that follows from the Bell state formalism alone. It has nothing to do with experimental uncertainty.

a deterministic Universe we have give up free will for

"

You are aware that the MWI is 100% deterministic, correct?

Speculating that every time a “measurement” is made a new Universe comes into existence.

"

This is not what the MWI says. The "universe" in the MWI is the universal wave function, and there is always just one universal wave function. The wave function doesn't "split" when a measurement is made; that would violate unitary evolution, and the MWI says that the wave function always evolves in time by unitary evolution.

What you need to show is an example where a conservation law was VIOLATED when the observation is made.

"

No, you need to show that a conservation law must be violated if the universe is not fully 100% deterministic because you are the one who is making that claim. I am simply pointing out that you have not shown that. You have simply assumed it, and you can't just assume it. You have to show it.

The rest of your post is irrelevant to mine because I did not say any of the things you are talking about.

all forces have corresponding particles – the Standard Model.

"

The Standard Model is a quantum field theory. Certain quantum field states are described as "particles", but there are many quantum field states that cannot be described that way. The fundamental entities are fields.

If we

1. Assume conservation laws hold everywhere for all time and are exact

2. Assume speed of light is universal.

3. Assume causality depends on particles

then the Universe MUST be pre-determined…

"

You are completely ignoring Bell's Theorem. I realize that Bell himself has mentioned Superdeterminism (SD) as an "out" for his own theorem (as you point out). However, SD requires substantially more assumptions than the 3 you have above. In other words: unless you have substantially more (and progressively more outrageous) assumptions than those 3, then at least one of those 3 must not hold true.

And I get tired of saying this, but: There is no candidate SD theory in existence. By this I mean: one which explains why any choice of measurement basis leads to a violation of a Bell Inequality, in any of the following scenarios:

a. Measurement basis does not vary between pairs. This is the most common Bell test, and violates a Bell inequality.

b. Measurement basis does vary:

i. By random selection, such as by computers or by radioactive samples. This too has been done, and violates a Bell inequality.

ii. By human choice, such as in the Big Bell Test. This too violates a Bell inequality.

If there were such a theory, it could easily be falsified by suitable variations on the above. Further, there is no particular rationale to invoke SD as an explanation for observed results in the area of entanglement, but nowhere else in all of science. You may as well claim that the "true" value of c is 2% higher than the observed value… due to Superdeterminism.
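For readers who want to see what "violates a Bell inequality" means quantitatively, here is a minimal Python sketch of the CHSH form of the inequality. It assumes the quantum correlation ##E(a,b) = \cos(a-b)## (the prediction for a triplet Bell state measured in its symmetry plane) and a standard choice of four angles; the function names `correlation` and `chsh` are hypothetical. Any local hidden-variable model satisfying Statistical Independence is bounded by ##|S| \le 2##.

```python
import math


def correlation(a, b):
    """Assumed quantum prediction for the average product of outcomes
    at analyzer angles a and b (radians)."""
    return math.cos(a - b)


def chsh(a, a_prime, b, b_prime):
    """CHSH combination S = E(a,b) - E(a,b') + E(a',b) + E(a',b')."""
    return (correlation(a, b) - correlation(a, b_prime)
            + correlation(a_prime, b) + correlation(a_prime, b_prime))


# Standard settings that maximize the quantum value
S = chsh(0.0, math.pi / 2, math.pi / 4, 3 * math.pi / 4)
print(S, 2 * math.sqrt(2))
```

With these settings the quantum value is ##2\sqrt{2}##, above the local bound of 2. A candidate SD theory would have to reproduce this value for every method of choosing the settings listed above.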

that means conservation of spin angular momentum is not exact when Alice and Bob are making different measurements. Conservation holds only on average

"

I don't think this claim can be asserted as fact at our current level of knowledge. When we make measurements on quantum systems, we bring into play huge sinks of energy and momentum (measuring devices and environments). But we don't measure the change in energy and momentum of the sinks. We only look at the measured systems. But if a measurement takes place, the measured systems are not closed systems and we should not in general expect them to obey conservation laws in isolation; they can exchange energy and momentum with measuring devices and environments. To know that conservation laws were violated we would have to include the changes in energy and momentum of the measuring devices and environments. But we don't. So I don't see that we have any basis to assert what you assert in the above quote. All we can say is that we have no way of testing conservation laws for such cases at our current level of technology.

A non-pre-determined Universe needs to violate conservation laws somewhere.

"

No, it doesn't. Events that are not pre-determined can still happen in a way that obeys conservation laws.

without particles we have no causality

"

This is not correct; field theories that do not contain any particles still have causality.

I am a novice here, but I have been studying SD for about 8 months now. Going back to the basic issue Einstein had with non-locality: no one seems to be addressing a point in this discussion – assume that causality cannot travel faster than the speed of light (we have never observed a violation of the speed of light, and without particles we have no causality).

We see measurements that SEEM to violate locality, BUT we do have a way out by SD (John Bell himself said so). We do believe in symmetry laws, conservation of energy, momentum, etc. We CAN predict in a real sense where planets, baseballs, etc. will be in the future. I think, prior to QM, we would all agree that if all factors were considered in initial conditions and all particles (heat=photons, etc.) could be taken into account, we could predict a small system in its entirety. I have never seen an argument where someone says “conservation of energy is only approximate”, because they would need to demonstrate this experimentally.

So given that conservation laws are all assumed to be exact (pre QM), what happened to the argument that all events are pre-determined? Not predictable, as that is impossible, but pre-determined, given the belief that we have discovered all the laws of motion and with the assumption that particles are real (again, particles became non-real after QM; only when the wave function was available did we consider that balls are not real).

So here is my question: If the Universe is not pre-determined, where did the differences in energy, momentum, etc go? A non-pre-determined Universe needs to violate conservation laws somewhere. Either we have not discovered all the laws, or there is a leak in Energy somewhere we have not discovered yet. Do we not believe our own laws of Physics?

If we

1. Assume conservation laws hold everywhere for all time and are exact

2. Assume speed of light is universal.

3. Assume causality depends on particles

then the Universe MUST be pre-determined; there is no way around it that we have actually observed. NONE. It seems to me that SD does not need to prove itself; rather, SD must be disproven, as it is an obvious result of the above assumptions. Which of the 3 assumptions would we abandon?

Now enter QM. We see entanglement experiments confirm QM predictions. But if we also believe in the above 3 assumptions, then why do we need to introduce non-local interpretations at all? SD is the way the Universe works based on the 3 assumptions, so what is the problem? Based on what I have read, the only argument seems to be the objection that we would not be free to do Science. That is the only argument I have heard – that we FEEL that we are free to make decisions on our own and this somehow invalidates all our scientific evidence for SD.

But this was a problem early on – yes we SEE that our laws work, we understand we cannot take into account all factors when trying to predict an outcome – but the ASSUMPTION underneath was that there are laws that govern the Universe and therefore, unless someone can demonstrate how these laws are violated the Universe is pre-determined down to the last photon.

There are those who think we live in a simulation – pre-determined again. No evidence for that really, BUT the world view helps explain why we THINK we have free will. An AI living in a simulation may go through its life believing it had free will without ever realizing otherwise. If we ditch the FEELING that we are making free decisions, then SD is absolutely the simplest way to explain the seemingly non-local results of QM. Not “many worlds” which has no observational evidence. I do not see an alternative to SD that is consistent with all our laws and measurements, and the AI worldview easily explains at least ONE way we can be fooled into believing we have free will. But in any case, FEELINGS have been the bane of science forever.

Non-local QM theories are not necessary, as far as I can tell, if SD is considered an option. If AIs and simulations had been around BEFORE QM was discovered I do not think that non-local theories would ever have been seriously considered.

"

As I showed in this Insight, the indeterminism we have in QM is unavoidable according to the relativity principle. And, yes, that means conservation of spin angular momentum is not exact when Alice and Bob are making different measurements. Conservation holds only on average (Bob saying Alice must average her results and Alice saying the same about Bob) when they make different measurements.
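As a rough numerical illustration of "conservation holds only on average" (a sketch under stated assumptions, not the derivation from the Insight): assume a spin-singlet pair, outcomes of ±1 in units of ħ/2, and the textbook probabilities that Alice and Bob get the same result, sin²(θ/2), or opposite results, cos²(θ/2), when their measurement directions differ by an angle θ. The function names and the choice θ = 120° are hypothetical and only for illustration.

```python
import math

def bob_probs_given_alice_up(theta):
    """Return (P(Bob=+1), P(Bob=-1)) given that Alice obtained +1,
    for a singlet pair with settings differing by theta (radians)."""
    p_same = math.sin(theta / 2) ** 2      # Bob also gets +1
    p_opposite = math.cos(theta / 2) ** 2  # Bob gets -1
    return p_same, p_opposite

def bob_average_given_alice_up(theta):
    """Bob's mean outcome over many trials in which Alice obtained +1."""
    p_plus, p_minus = bob_probs_given_alice_up(theta)
    return (+1) * p_plus + (-1) * p_minus

theta = math.radians(120)  # illustrative angle between the two settings
avg = bob_average_given_alice_up(theta)
print(avg, -math.cos(theta))  # the average matches -cos(theta)
```

On any single trial Bob can only record +1 or -1, never the value -cos θ that would exactly cancel the projection of Alice's +1 along his direction; only his average over many such trials equals -cos θ. When the settings agree (θ = 0) the average is -1 and the cancellation is exact on every trial.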

https://www.physicsforums.com/threads/derivation-of-statistical-mechanics.1013629/

https://www.physicsforums.com/threads/interpretations-of-the-no-communication-theorem.1013630/

This thread is now reopened, with a reminder to please keep it focused on discussion of the article about superdeterminism referenced in the OP.

It seems to me that poor Ruta's insight has been well and truly hijacked here.

We have dozens of posts of pointless argument which has nothing to do with the original Insight.

It's so disrespectful, IMHO.

"

A thread split might be warranted here, yes.

For future reference, a better way to prompt that kind of consideration is the Report button.

The orbit of the moon is in principle measurable by humans

"

Then what isn't in principle measurable by humans? Your basic rule seems to be that anything to which the Born rule applies is "in principle measurable by humans" by definition, which is arguing in a circle.