Views On Complex Numbers


Abstract

Why do we need yet another article about complex numbers? This is a valid question and I have asked it myself. I could mention that I wanted to gather the many different views that can be found elsewhere: Euler’s and Gauß’s perspectives, i.e. various historical views in the light of the traditionally parallel development of mathematics and physics, e.g. the use of complex coordinates in kinematics; the analytical or topological views, e.g. the Radish or Liouville’s mysterious theorem that bounded entire functions are already constant; or the algebraic view that led to the many non-algebraic proofs of the fundamental theorem of algebra. The complex numbers have so many faces and appear in so many contexts that I could as well have written a list of bookmarks. All of that is true to some extent. The real reason is that I want to break the automatic association of complex numbers with, and their factual reduction to, points in the Gaußian plane

$$

\mathbb{C}=\{a+i b\,|\,(a,b)\in \mathbb{R}^2\}\neq \mathbb{R}^2.

$$

We need two dimensions to visualize complex numbers but that doesn’t make them two-dimensional. They are a one-dimensional field in the first place, i.e. a single set of certain elements that obey the same axiomatic arithmetic rules as the rational numbers do. They are one set that is not just a plane! The reason they exist and bar us from visual access is finally a tiny positive distance we can see.

The Algebraic View

Let ##\mathbb{F}## be a field of characteristic zero with an Archimedean ordering. This is algebra talk. A field means that we can add, subtract, multiply, and divide the way we are used to. Characteristic zero means that

$$

1+1+ \ldots + 1 \neq 0

$$

no matter how many ones we add. Don’t laugh, you are – right now – using a device that has ##1+1=0## as its most fundamental law! An Archimedean ordering only means

$$

\forall \;a\in \mathbb{F} \;\exists \; n \in \mathbb{N}\, : \,n>a.

$$

And once again, don’t laugh, some fields contain the rational numbers and are not Archimedean. For the sake of simplicity, imagine ##\mathbb{F}=\mathbb{Q}## or ##\mathbb{F}=\mathbb{R}.## The following algebraic constructions work with rational numbers, too, i.e. the algebraic perspective does not require real numbers. We can have the algebraic closure first and the topological closure next, or vice versa.

$$

\begin{matrix}&&\overline{\mathbb{Q}}[i]&&\\

&\nearrow_{alg.} && \searrow^{top.} &\\

\mathbb{Q}&&&&\mathbb{C} \\

&\searrow^{top.} && \nearrow_{alg.} &\\

&&\mathbb{R}&&

\end{matrix}

$$

The central observation is that the polynomial ring ##\mathbb{F}[x]## is an integral domain and a principal ideal domain, i.e. any ideal in ##\mathbb{F}[x]## is already generated by a single polynomial. The reason for this is that ##\mathbb{F}[x]## is a Euclidean ring in which we can perform long division, with the polynomial degree as the quantity that decreases in the process. In the usual Euclidean algorithm it is the size of the remainder that decreases, and the size of a polynomial is its degree.
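The long division mentioned above can be sketched in a few lines. This is only an illustration over ##\mathbb{Q}##, not part of the original argument; the function name `poly_divmod` and the convention of listing coefficients from the highest degree down are assumptions made for this example.

```python
from fractions import Fraction

def poly_divmod(num, den):
    """Divide polynomials given as coefficient lists, highest degree first.

    Returns (quotient, remainder) with deg(remainder) < deg(den).
    Coefficients are converted to Fraction, so the arithmetic is exact in Q.
    """
    num = [Fraction(c) for c in num]
    den = [Fraction(c) for c in den]
    if not den or den[0] == 0:
        raise ValueError("leading coefficient of the divisor must be non-zero")
    quotient = []
    remainder = num[:]
    # Each step cancels the leading term, so the degree of the remainder drops.
    while len(remainder) >= len(den):
        factor = remainder[0] / den[0]
        quotient.append(factor)
        for i, c in enumerate(den):
            remainder[i] -= factor * c
        remainder.pop(0)  # the leading coefficient is now zero
    return quotient, remainder

# (x^3 + 2x + 5) = x * (x^2 + 1) + (x + 5)
q, r = poly_divmod([1, 0, 2, 5], [1, 0, 1])
```

The loop terminates because the length of `remainder` shrinks by one in every pass, which is exactly the decreasing degree that makes ##\mathbb{F}[x]## Euclidean.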

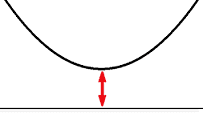

The availability of the Euclidean algorithm, however, has far-reaching consequences. The possibility of dividing polynomials allows the distinction between polynomials that have factors and those which do not. The latter are called irreducible polynomials. The tiny distance ##d\in \mathbb{F}_{>0}## with the red arrow in the image above guarantees that

$$

x^2+d

$$

is an irreducible polynomial over ##\mathbb{F}.## We cannot write it as a product of polynomials of degree one. A typical algebraic scheme of proof would be:

Assume ##x^2+d=(x+a)(x+b)=x^2+(a+b)x+ab.## Then ##a+b=0## and ##ab=-a^2=d.## Therefore ##x^2+d=x^2-a^2=(x-a)(x+a).## This polynomial has two zeros at ##x=a## and ##x=-a,## or one zero in case ##a=0.## But the image shows that ##x^2+d## does not cross the line ##y=0,## i.e. the polynomial has no zeros. This contradiction means that ##x^2+d## is indeed irreducible.

Since ##\mathbb{F}[x]## is a principal ideal domain, the irreducible polynomial ##x^2+d## is automatically a prime element, and it generates a prime ideal ##\bigl\langle x^2+d \bigr\rangle ## that is automatically a maximal ideal, so that the factor ring

$$

\mathbb{F}[x]/\bigl\langle x^2+d \bigr\rangle = \mathbb{F}\left[ \sqrt{-d}\right]

$$

is automatically a field. Now that ##x^2+d## is made zero, we can identify ##x## with ##\sqrt{-d}## and

$$

x^2+d=\left(x-\sqrt{-d}\right)\cdot \left(x+\sqrt{-d}\right)\equiv 0

$$

has two new zeros ##\pm \sqrt{-d}## which, however, lie outside of ##\mathbb{F}.## If ##d=1## and ##\mathbb{F}=\mathbb{R}## then we call this field the complex numbers

$$

\mathbb{R}[x]/\bigl\langle x^2+1 \bigr\rangle =\mathbb{R}\left[ \sqrt{-1}\right]=\mathbb{C}.

$$
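To make the factor ring a little more tangible, here is a minimal sketch of its arithmetic. An element ##a_0+a_1x## is stored as the pair `(a0, a1)`, and every ##x^2## is replaced by ##-d##. The function names `mul_mod` and `inverse_mod` are hypothetical and chosen only for this illustration.

```python
from fractions import Fraction

def mul_mod(p, q, d=1):
    """Multiply a0 + a1*x and b0 + b1*x in F[x]/<x^2 + d>.

    Elements are pairs (constant term, coefficient of x); x^2 is replaced by -d.
    """
    a0, a1 = p
    b0, b1 = q
    return (a0 * b0 - d * a1 * b1, a0 * b1 + a1 * b0)

def inverse_mod(p, d=1):
    """Inverse of a non-zero element a0 + a1*x in F[x]/<x^2 + d> for d > 0."""
    a0, a1 = p
    norm = a0 * a0 + d * a1 * a1  # positive unless p is zero, because d > 0
    return (Fraction(a0) / norm, Fraction(-a1) / norm)

x = (0, 1)
x_squared = mul_mod(x, x)                            # (-1, 0), i.e. x^2 = -1
one = mul_mod((3, 4), inverse_mod((3, 4)))           # (1, 0)
```

With ##d=1## the product rule is the familiar ##(a_0+ia_1)(b_0+ib_1)##, and the fact that `norm` can only vanish for the zero element is the computational shadow of ##\bigl\langle x^2+d \bigr\rangle## being maximal.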

The Arithmetic Rules

When I said that complex numbers and rational numbers obey the same axiomatic rules, I referred to the fact that both have an additive and a multiplicative group connected by the distributive laws as in any field. Derived rules, abbreviations, or interpretations are no longer automatically true, simply because ##z^2\geqq 0## is no longer true. This has consequences. The most prominent example is

$$

-1=\sqrt{-1}\cdot \sqrt{-1} \neq \sqrt{(-1)\cdot (-1)}=\sqrt{1}=1.

$$

The derived rule ##\sqrt{a\cdot b}=\sqrt{a}\cdot \sqrt{b}## for real numbers does not hold anymore. But how can we know which ones still hold and which ones do not without searching for a proof in every single case? Well, we could learn what is written in this article (Things Which Can Go Wrong with Complex Numbers) or use the definition we just learned. This means that we identify ##i=\sqrt{-1}## with the indeterminate ##x## of the real polynomial ring ##\mathbb{R}[x]## and establish the law ##x^2+1 \equiv 0.## The equation above becomes

$$

x\cdot x \equiv -1 \neq 1=\sqrt{1}=\sqrt{(-1)^2}

$$

We can write the equations on the right because ##(-1)^2\geqq 0## for real numbers, however, ##x^2\ngeqq 0## in ##\mathbb{R}[x]/\bigl\langle x^2+1 \bigr\rangle ,## and ##\sqrt{x^2}## isn’t even defined in ##\mathbb{R}[x]/\bigl\langle x^2+1 \bigr\rangle .## Hence, the algebraic view on complex numbers can prevent us from making arithmetic mistakes. All we have is a field of scalars of characteristic zero. Any functions like square roots, logarithms, etc. have to be reconsidered. ##\mathbb{C}## doesn’t even have an Archimedean ordering any longer.
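The failure of the derived square root rule can also be observed numerically. The following sketch uses the principal square root from Python's standard `cmath` module; the helper name `sqrt_product_rule_holds` is an assumption made for this example.

```python
import cmath

def sqrt_product_rule_holds(a, b):
    """Check whether sqrt(a) * sqrt(b) equals sqrt(a * b) for principal roots."""
    return cmath.isclose(cmath.sqrt(a) * cmath.sqrt(b), cmath.sqrt(a * b))

lhs = cmath.sqrt(-1) * cmath.sqrt(-1)   # i * i
rhs = cmath.sqrt((-1) * (-1))           # sqrt(1)

holds_for_positive_reals = sqrt_product_rule_holds(4, 9)
holds_for_minus_one = sqrt_product_rule_holds(-1, -1)
```

Here `lhs` is ##-1## and `rhs` is ##1##, so the rule holds for the positive reals ##4## and ##9## but fails for ##a=b=-1.##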

Reconsidered Analysis

As much as the algebraic view helps to avoid arithmetic mistakes, it has a significant disadvantage if we want to perform analysis on ##\mathbb{R}[x]/\bigl\langle x^2+1 \bigr\rangle .## It is inconvenient and ambiguous since we will need polynomials in their analytical meaning as functions, too. Hence, even if I may not like the view of complex numbers as points in the Gaußian plane, we have to consider the complex numbers as real vectors, too. I’m not too fond of it because it supports the impression that complex numbers are only real vectors. They are not, they are scalars, and especially complex analysis is full of examples where this fact is important. Nevertheless, we need help in the form of visualization and we can only see the real world.

The Real Vector Space

\begin{align*}

\mathbb{C}&=\mathbb{R}[x]/\bigl\langle x^2+1 \bigr\rangle = \{p(x)=a+bx\,|\,(a,b)\in \mathbb{R}^2 \wedge x^2+1\equiv 0\} \\[12pt]

\mathbb{C}& =\{z=a+i b\,|\,(a,b)\in \mathbb{R}^2\}=\mathbb{R} \oplus i\cdot \mathbb{R}

\end{align*}

are both representations of the complex numbers as primarily a two-dimensional real vector space with the – in my opinion a bit hidden – additional property ##x^2=-1,## resp. ##i^2=-1.## The two components ##(a,b)## of a complex number ##z## are called

\begin{align*}

a&=\mathfrak{Re}(z)\text{, real part of }z\text{ and}\\[6pt]

b&=\mathfrak{Im}(z)\text{, imaginary part of }z.

\end{align*}

They are the Cartesian coordinates in the Gaußian plane. The corresponding polar coordinates $$z=r\cdot e^{i \varphi }=r\cdot (\cos \varphi +i \sin \varphi ),$$ which are very important in physics but often a bit neglected in mathematics, are called

\begin{align*}

r&=\sqrt{a^2+b^2}\text{, the absolute value of }z\text{ and}\\[6pt]

\varphi &= \sphericalangle (a,b)\text{, the argument of }z.

\end{align*}

The absolute value is the Euclidean distance from the origin of the Gaußian plane, and the argument is the direction to ##(a,b)## measured as an angle from the positive real axis. However, the additional arithmetic law

$$

(i\cdot \mathbb{R})\cdot (i\cdot \mathbb{R}) \subseteq \mathbb{R}

$$

which has no counterpart in an ordinary real Euclidean vector space makes a crucial difference and should not be forgotten. I think the connection between real and complex numbers can best be memorized by a formula many mathematicians consider the most beautiful equation of all

$$

e^{i\cdot\pi}+1=0 .

$$

The Radish

The formula ##(i\cdot \mathbb{R})^2 \subseteq \mathbb{R} ## is better written as

$$

( i \cdot \mathbb{R})^{2n} \subseteq \mathbb{R}\, ,\,( i \cdot \mathbb{R})^{2n+1} \subseteq i \cdot \mathbb{R}\, , \,n \in \mathbb{Z}

$$

to note that we could switch as often as we want between the two dimensions by a simple multiplication. It reflects the more general case of multiplication which becomes obvious in polar coordinates

$$

\left(r\cdot e^{i \varphi }\right)\cdot \left(s\cdot e^{i \psi }\right) = (rs)\cdot e^{i(\varphi + \psi )}.

$$
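A small numerical sketch of this rule, using the standard `cmath` functions `polar` and `rect`: absolute values are multiplied and arguments are added. The function name `multiply_polar` is an assumption for this illustration, and the comparison is only up to floating-point rounding.

```python
import cmath

def multiply_polar(z, w):
    """Multiply two complex numbers via their polar coordinates."""
    r, phi = cmath.polar(z)   # z = r * e^(i*phi)
    s, psi = cmath.polar(w)   # w = s * e^(i*psi)
    return cmath.rect(r * s, phi + psi)

z, w = 1 + 1j, 2j
same_product = cmath.isclose(multiply_polar(z, w), z * w, rel_tol=1e-12)

# Euler's identity, up to rounding: e^(i*pi) + 1 is numerically zero.
euler_residual = cmath.exp(1j * cmath.pi) + 1
```

Multiplying by ##2i## doubles the length of ##1+i## and turns it by a quarter rotation, which is what the sum of the angles ##\frac{\pi}{4}+\frac{\pi}{2}## expresses.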

Multiplication is a rotation of directions and we can all of a sudden count how often we pass the gauge line, the positive real axis. We have a radish.

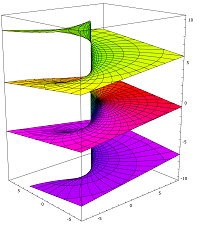

This picture is particularly important for the complex logarithm function since

$$

\log z = \log \left(re^{i \varphi }\right)= (\log r) + i \varphi

$$

does not tell us on which slice ##n ## of the radish we are. We only know it up to full rotations

$$

\varphi = \varphi_0 + 2n\pi .

$$
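The slices can be made explicit in a short sketch. Python's `cmath.log` returns the value with argument in ##(-\pi,\pi]##, and every other slice differs from it by a multiple of ##2\pi i##. The function name `log_branch` is hypothetical.

```python
import cmath
import math

def log_branch(z, n=0):
    """Logarithm of z on slice n: the cmath.log value plus 2*pi*i*n."""
    return cmath.log(z) + 2j * math.pi * n

z = -1 + 1j
# Every slice is mapped back to the same z by the exponential function.
all_slices_agree = all(
    cmath.isclose(cmath.exp(log_branch(z, n)), z, rel_tol=1e-9)
    for n in (-2, -1, 0, 1, 3)
)
```

The exponential function forgets the slice number ##n##, which is why the logarithm cannot recover it from ##z## alone.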

The range ##\varphi_0 \in (-\pi,\pi]## is called the principal value, and the corresponding slice of the radish ##n=0## is called the principal branch. The radish is cut along the negative real axis and the origin is called a branch point. Mathematicians prefer to speak of branches instead of radish slices but the picture helps to understand what is going on. An official picture of the radish would be the Riemann surface of the complex logarithm [1].

The Functions

Complex function theory goes far beyond our subject of complex numbers. We have just seen in the example of the complex logarithm that winding numbers and poles play a central role. Note that the origin is a singularity of the logarithm, strictly speaking a branch point rather than a pole, and no value can be attached to ##\log (0).## One could call complex function theory Cauchy’s winding and residue calculus because of the residue theorem, a generalization of Cauchy’s integral theorem and integral formula,

$$

\oint_\gamma f(z)\,dz=2\pi i\cdot \sum_{\substack{\text{poles}\\[2pt]p_k}} \underbrace{\operatorname{Ind}_\gamma (p_k)}_{\substack{\text{windings }\\[3pt] \text{around }p_k\text{ of} \\[3pt] \text{integration path }\gamma }}\cdot \underbrace{\operatorname{Res}_{p_k}(f)}_{\substack{\text{coefficient }-1\text{st}\\[3pt] \text{ in the Laurent }\\[3pt] \text{series of }f\text{ at }p_k}}.

$$
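As a plausibility check of the formula, one can integrate numerically along a circle that winds several times around a pole. The sketch below uses a simple midpoint rule; the function name `contour_integral` and its parameters are assumptions made for this example, and the results agree with the theorem only up to numerical error.

```python
import cmath
import math

def contour_integral(f, center=0j, radius=1.0, windings=1, steps=20000):
    """Approximate the integral of f along a circle traversed `windings` times."""
    total = 0j
    for k in range(steps):
        t = 2 * math.pi * windings * (k + 0.5) / steps  # midpoint of the k-th arc
        z = center + radius * cmath.exp(1j * t)
        dz = 1j * radius * cmath.exp(1j * t) * (2 * math.pi * windings / steps)
        total += f(z) * dz
    return total

# f(z) = 1/z has a single pole at 0 with residue 1.
once = contour_integral(lambda z: 1 / z)                # close to 2*pi*i
twice = contour_integral(lambda z: 1 / z, windings=2)   # close to 4*pi*i
no_pole = contour_integral(lambda z: z * z)             # close to 0
```

A negative value of `windings` traverses the circle clockwise and flips the sign of the result, in line with the winding number ##\operatorname{Ind}_\gamma (p_k).##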

But this isn’t the only property of complex functions we are not used to from real calculus. Any complex function that is complex differentiable on an open set is already smooth there, i.e. it is infinitely often complex differentiable. And if it is differentiable on all of ##\mathbb{C}## and in addition bounded, say ##|f(z)|\leqq C,## then it is already constant (Liouville’s theorem):

\begin{align*}

\left|f'(z)\right|&=\left|\dfrac{1}{2\pi i}\oint_{\partial U_r(z)}\dfrac{f(\zeta)}{(\zeta-z)^2}d\zeta\right|\leqq \dfrac{1}{2\pi}\cdot 2 \pi r \cdot \dfrac{C}{r^2}\stackrel{r\to \infty }{\longrightarrow }0.

\end{align*}

We can write every complex function as

$$

f(z)=f(a+ib)=\mathfrak{Re}(f(z))+i\cdot \mathfrak{Im}(f(z))

=u(a,b)+i\cdot v(a,b)

$$

with two real functions ##u,v\, : \,\mathbb{R}^2\rightarrow \mathbb{R}.## I have learned that the complex function ##f## is differentiable if the real functions ##u,v## are differentiable and the Cauchy-Riemann equations hold

$$

\dfrac{\partial u}{\partial a}=\dfrac{\partial v}{\partial b}

\ ,\ \dfrac{\partial u}{\partial b}=-\dfrac{\partial v}{\partial a}.

$$

As true as it is, it is in my mind an insufficient perspective. I like Weierstraß’s decomposition formula

$$

f(z)=f(a) + D_a(f) \cdot (z-a) +o(z-a)

$$

to define differentiability. It displays all necessary aspects and puts the limit where it belongs, into the remainder term ##o(z-a).## Differentiability at a point ##a\in \mathbb{C}## – a local property(!) – is then the existence of the ##\mathbb{C}##-linear function ##f'(a)=D_a(f),## the derivative at ##a##. The emphasis on ##\mathbb{C}##-linearity is crucial here. After all, it is the reason behind the Cauchy-Riemann equations and why I prefer to consider complex numbers as a field of scalars rather than a real vector space with extras. The clumsy definition by real differentiability plus Cauchy-Riemann equations is entirely contained in the simple requirement that ##D_a(f)## is ##\mathbb{C}##-linear, see [3].
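The Cauchy-Riemann equations can be tested numerically with central differences. In this sketch the function name `cauchy_riemann_residuals` and the step size `h` are assumptions; it returns the two quantities that must vanish, so a function like ##z\mapsto z^2## passes while complex conjugation, which is only ##\mathbb{R}##-linear, does not.

```python
def cauchy_riemann_residuals(f, a, b, h=1e-6):
    """Central-difference estimates of u_a - v_b and u_b + v_a at the point a + ib."""
    df_da = (f(complex(a + h, b)) - f(complex(a - h, b))) / (2 * h)
    df_db = (f(complex(a, b + h)) - f(complex(a, b - h))) / (2 * h)
    u_a, v_a = df_da.real, df_da.imag   # partial derivatives with respect to a
    u_b, v_b = df_db.real, df_db.imag   # partial derivatives with respect to b
    return u_a - v_b, u_b + v_a

square = cauchy_riemann_residuals(lambda z: z * z, 1.0, 2.0)
conjugate = cauchy_riemann_residuals(lambda z: z.conjugate(), 1.0, 2.0)
```

For the square both residuals are numerically zero. For the conjugation the first residual is close to ##2##, because ##u=a## and ##v=-b## give ##\partial u/\partial a=1## but ##\partial v/\partial b=-1.##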

FTA And The Two Closures

The fundamental theorem of algebra (FTA), that every complex polynomial ##f(z)## of degree at least one has at least one zero, can be proven quite elegantly with the help of Liouville’s theorem. Since

$$

\lim_{n \to \infty}\inf_{|z|=n}|f(z)|=\infty

$$

there is a real number ##r## such that ##|f(0)|\leqq |f(z)|## for all ##z\in \{z\in \mathbb{C}\,|\,|z|>r\}##. Since ##f## is continuous, so is ##|f|,## and it takes a minimum, say at ##z_0,## on the compact disc ##D=\{z\in \mathbb{C}\,|\,|z|\leqq r\}## according to Weierstraß’s theorem about the minimum and maximum. This means that

$$

0\leqq C:=|f(z_0)|\leqq |f(z)|\;\text{ for all }z\in D.

$$

This value is already a global minimum by construction. If ##C>0## then

$$

f^{-1}\, : \,z\longmapsto \dfrac{1}{f(z)}\; ,\;\left|\dfrac{1}{f(z)}\right| \leqq \dfrac{1}{C}

$$

would be a holomorphic, bounded function defined on ##\mathbb{C}.## Liouville’s theorem now says that ##f^{-1}## has to be constant, i.e. ##f## is also constant, contradicting our choice of the polynomial ##f## of degree at least one. Thus ##C=0=f(z_0)## and we have found a zero ##z_0## of ##f.\; \square ##

Note that we used purely analytical tools to prove the fundamental theorem of algebra. We also needed both closures of ##\mathbb{C}.## What does that mean? A sequence ##(a_n)_{n\in\mathbb{N}}## is called a Cauchy sequence if

$$

\displaystyle{\lim_{n,m \to \infty}|a_n-a_m|=0}.

$$

Unfortunately, this does not mean that ##\displaystyle{\lim_{n \to \infty}a_n}## exists. If we define for instance

$$

a_1=2\;\text{ and }\; a_{n+1}=\dfrac12 \left(a_n+\dfrac{2}{a_n}\right)\text{ for }n\in \mathbb{N}

$$

we get a decreasing Cauchy sequence of rational numbers converging to ##\sqrt2.## But this limit does not exist in ##\mathbb{Q}.## To make all limits available, we topologically complete the rational numbers by adding all possible limits of Cauchy sequences, thereby obtaining the real numbers. The existence of ##z_0,## i.e. the existence of a Cauchy limit ##z_0## in the proof above, has been provided by a topological argument about real numbers hidden in Weierstraß’s theorem.
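The sequence can be computed with exact rational arithmetic, which shows that every term stays in ##\mathbb{Q}## while the squares approach ##2## from above. This is only a sketch; the function name `heron_sequence` is an assumption.

```python
from fractions import Fraction

def heron_sequence(count):
    """First `count` terms (count >= 1) of a_1 = 2, a_{n+1} = (a_n + 2/a_n) / 2."""
    terms = [Fraction(2)]
    while len(terms) < count:
        a = terms[-1]
        terms.append((a + 2 / a) / 2)
    return terms

terms = heron_sequence(4)                  # 2, 3/2, 17/12, 577/408
excess = [a * a - 2 for a in terms]        # 2, 1/4, 1/144, 1/166464
```

Each excess ##a_n^2-2## is a positive rational number, so no term ever equals ##\sqrt{2},## although the terms get arbitrarily close to each other.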

The topological closure is not the only closure that we need. If we think about our first example ##f(x)=x^2+d \;(d>0),## then we have a parabola – a polynomial of degree two – that does not have a real zero. It does not cross the real axis. Completion of the square

$$

0=x^2+px+q=\left(x+\dfrac{p}{2}+\sqrt{\dfrac{p^2}{4}-q}\right)\cdot \left(x+\dfrac{p}{2}-\sqrt{\dfrac{p^2}{4}-q}\right)

$$

is a standard method to find the zeros of quadratic polynomials. This means for our original example

$$

0=x^2+d=\left(x+\sqrt{-d}\right)\left(x-\sqrt{-d}\right)=\left(x+i \cdot \sqrt{d}\right)\left(x-i \cdot \sqrt{d}\right)

$$

that we have two complex roots ##\pm i\cdot \sqrt{d}.## In general, we have the situation that

$$

\sqrt{\dfrac{p^2}{4}-q}=\dfrac{1}{2}\sqrt{p^2-4q}=\begin{cases}

\dfrac{1}{2}\sqrt{p^2-4q} \in \mathbb{R}&\text{ if }p^2>4q\\[6pt]

\quad \quad \quad 0&\text{ if }p^2=4q\\[6pt]

\dfrac{i}{2}\sqrt{4q-p^2}\in i\mathbb{R}&\text{ if }p^2<4q\\[6pt]

\end{cases}

$$

decides whether we have two real, one real, or two complex solutions. The term ##\Delta=p^2-4q## is called the discriminant of ##x^2+px+q.## If we have a real, monic (highest coefficient is one) polynomial ##f(x)## of degree ##2n+1## then

$$

\lim_{x \to -\infty}f(x)=-\infty \;\text{ and }\;\lim_{x \to +\infty}f(x)=+\infty

$$

and by topological completeness of ##\mathbb{R}## we have a real zero ##x_0\in \mathbb{R}## and may write

$$

f(x)=g(x)(x-x_0) \;\text{ with }\;\deg g(x)=2n.

$$

It can be proven now that the non-real zeros of a real polynomial always appear in pairs of complex conjugates

$$

\left(-\dfrac{p}{2}\right) + \left(\dfrac{\sqrt{\Delta}}{2}\right) \;\text{ and }\;\left(-\dfrac{p}{2}\right) - \left(\dfrac{\sqrt{\Delta}}{2}\right).

$$
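The case distinction by the discriminant ##\Delta=p^2-4q## and the conjugate pairs can be reproduced in a few lines. The function name `quadratic_zeros` is hypothetical, and `cmath.sqrt` is used so that a negative discriminant yields a purely imaginary root instead of an error.

```python
import cmath

def quadratic_zeros(p, q):
    """Zeros of x^2 + p*x + q for real p, q, via the discriminant p^2 - 4q."""
    delta = p * p - 4 * q
    root = cmath.sqrt(delta)   # real if delta >= 0, purely imaginary if delta < 0
    return (-p + root) / 2, (-p - root) / 2

two_real = quadratic_zeros(-3, 2)    # delta = 1:   zeros 2 and 1
one_real = quadratic_zeros(2, 1)     # delta = 0:   double zero -1
conjugates = quadratic_zeros(2, 5)   # delta = -16: zeros -1+2i and -1-2i
parabola = quadratic_zeros(0, 4)     # x^2 + d with d = 4: zeros 2i and -2i
```

In the last two cases the second zero is the complex conjugate of the first, as in the pair displayed above.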

This means that the example with the parabola is a typical one, and we only have to attach ##\pm i\cdot\sqrt{d}## to the real numbers to decompose any polynomial into linear factors. Since ##\sqrt{d}\in \mathbb{R},## it is sufficient to attach ## i ## as the formal solution to the quadratic polynomial equation ##x^2+1=0.## This formal symbol is the reason why we first considered

$$

\mathbb{C}=\mathbb{R}[x]/\bigl\langle x^2+1 \bigr\rangle =\mathbb{R}[i].

$$

The field extension ##\mathbb{R}\subseteq \mathbb{R}[ i ]## is called the algebraic closure of ##\mathbb{R}.## Which closure comes first and which one next doesn’t matter as long as we arrive at the field of complex numbers. The algebraic closure necessary to find all polynomial zeros is even better hidden in the above proof of the FTA than the topological closure. It is ultimately hidden in Cauchy’s integral formula that is used to prove Liouville’s theorem. For those who prefer an axiomatic description of the complex numbers, see [5] which cites Spivak’s calculus book. For a formal algebraic construction of real and complex numbers, I recommend van der Waerden’s book on Algebra [7].

Sources


[1] Image Source: https://upload.wikimedia.org/wikipedia/commons/a/ab/Riemann_surface_log.svg

Attribution: Leonid 2, CC BY-SA 3.0 <https://creativecommons.org/licenses/by-sa/3.0>, via Wikimedia Commons

[2] https://www.physicsforums.com/insights/things-can-go-wrong-complex-numbers/

[3] https://www.physicsforums.com/insights/an-overview-of-complex-differentiation-and-integration/

[4] Jean Dieudonné, Geschichte der Mathematik 1700-1900, Vieweg Verlag 1985

[5] https://math.stackexchange.com/questions/257184/defining-the-complex-numbers

[6] https://www.physicsforums.com/insights/pantheon-derivatives-part-v/#Liouvilles-Theorem-2425

[7] B.L. van der Waerden, Algebra Vol.1, 8-th ed., Springer-Verlag, Berlin 1971 https://www.amazon.de/Algebra-German-B-van-Waerden/dp/3642855288/

@jbriggs444, "one way" of solving the problem in your post was to use vectors (quantity + direction). There was a snippet on eigenvalues, which was stated succinctly as a situation where vector computation is reduced/equivalent to scalar computation, the scalar components being eigenvalues. Am I correct? How now this? Thanks for engaging with me.

@FactChecker, thanks for the examples. I failed to ask the right question, which is: why did the orientation of the triangles used in the proof of the Pythagorean Theorem matter? I was penalized for marking (say) ##\triangle ABC \cong \triangle DEF## as a valid step in the proof; the correct answer was ##\triangle CBA \cong \triangle DEF##. From the bits and pieces I remember, the corresponding angles had to be equal and in the former statement ##\triangle ABC \cong \triangle DEF## this was not the case, while in the latter ##\triangle CBA \cong \triangle DEF## it was.

"

In the bottom case, congruence doesn't depend on orientation, but by a combination of relations between sizes of sides, angles. I'm not sure the ancient Greeks who laid out such notions were even aware of general notions of orientation, orientability.

When does orientation become important? The example above was a step in the proof of the Pythagorean Theorem, cogito (fairly certain).

"

Any motion, force, acceleration, etc in 3-space has a direction in 3-space.

When does orientation become important?

"

One example — which may not be what you are thinking about at all…

Suppose that you are contemplating a three dimensional surface enclosing a radiant source. This three dimensional surface may not be convex. It may be pretty twisty.

You may want to think about how much total radiant flow goes through the surface. So you decide to integrate. You integrate incremental cross-sectional area ##a## times the radiating charge ##q## divided by ##r^2## for the inverse square law. And you get the wrong answer.

Because not all of the area elements are facing the radiating source. And the shape is folded enough that some area elements are facing the wrong way — the radiant flux is actually flowing "in" rather than "out" through some elements.

So you invent the idea of an oriented area element. So that the area element is ##\vec{a}## rather than just ##a##. And stick a vector dot product into your integral so that you can correctly track whether the area element is pointed "in", "out" or "mostly sideways".

I've not been exposed to such an idea, but it seems to me that one could calculate the oriented area of triangle ##\bigtriangleup {ABC}## in terms of the vector cross product of two oriented sides: $$\vec{a} = \frac {(\vec{C} - \vec{B}) \times (\vec{B} - \vec{A})} {2} = \frac{(\vec{A} - \vec{C}) \times (\vec{C} - \vec{B})} {2} = \frac{(\vec{B} - \vec{A}) \times (\vec{A} - \vec{C})} {2}$$Meanwhile, for the mirror image triangle ##\bigtriangleup {CBA}## we have

$$-\vec{a} = \vec{a_\text{mirror}} = \frac {(\vec{A} - \vec{B}) \times (\vec{B} - \vec{C})} {2} = \frac{(\vec{C} - \vec{A}) \times (\vec{A} - \vec{B})} {2} = \frac{(\vec{B} - \vec{C}) \times (\vec{C} - \vec{A})} {2}$$

In two dimensions, the notion of a winding number depends on the orientation of a closed planar curve. If you follow the curve from one end to the other, it will have (at least locally) a right side and a left side. You trace a path from a chosen point to infinity and count the number of times the path goes through the curve from left to right minus the number of times it passes from right to left. The result is the winding number.

Not to contradict you, but you mean to say that by adding direction to a length it now can be transformed?

"

It can be rotated if it has an initial direction. Vectors have length and direction. A length (eg length=5 feet) does not. I wouldn't say that all transformations require a direction.

"

Transformations can be/done on line segments, no?

"

A straight line segment would need to be given an orientation before you could say that it was "rotated".

"

How does adding a direction enable transformations? Do triangles have direction?

"

Every side of a given triangle has a length and two endpoints. We would have to do more. Each side would need a starting point and an endpoint so that they have directions. For instance, there would be clockwise and counterclockwise orientations of the sides. And some might have mixed combinations of side orientations that are neither all clockwise nor all counterclockwise.

Not to contradict you, but you mean to say that by adding direction to a length it now can be transformed?

"

Maybe rather than direction, you may say they have ( or be given), an orientation.

As long as ##e^{i \varphi}## is a rotation of ##1## by an angle ##\varphi##, it's ok. Is it?

The general formula being ##re^{i \varphi}##, where the length ##r## is being rotated counterclockwise by ##\varphi##.

"

My concern is that you can not really say that a "length" is rotated. A "length" does not have a direction, so it can not be rotated. A vector has both a length and a direction. It is better to say that ##re^{i \varphi}## is a vector in the complex plane of length ##r## pointing in the direction ##\varphi##. The vector from the origin to the positive number ##r## on the real line is rotated. A "rotation" happens when you multiply another complex number, ##z##, by ##e^{i \varphi}## and there will be the usual change in length if you also multiply by a real number, ##r##.

As long as ##e^{i \varphi}## is a rotation of ##1## by an angle ##\varphi##, it's ok. Is it?

The general formula being ##re^{i \varphi}##, where the length ##r## is being rotated counterclockwise by ##\varphi##.

"

Correct.

As long as ##e^{i \varphi}## is a rotation of ##1## by an angle ##\varphi##, it's ok. Is it?

The general formula being ##re^{i \varphi}##, where the length ##r## is being rotated counterclockwise by ##\varphi##.

"

You mean scaling by a factor of ##1##? But, yes, Complex multiplication is equivalent to scaling and rotation.

What is its geometric meaning, if I may ask?

"

I'm afraid that I did you a disservice by concentrating on the geometry of Euler's formula. The geometric properties of general analytic and harmonic functions are beautiful.

I have no problem with the Gaußian plane. It is useful and necessary. I have a problem with the fact that its inadequacy is lost. It cannot represent ##(\mathrm{i}\mathbb{R})\cdot (\mathrm{i}\mathbb{R})\subseteq \mathbb{R}## in a sufficient way. It is information that got lost by representing a one-dimensional field in a two-dimensional Euclidean plane. But it is very important information. Starting with the plane does not adequately take this into account and must be painfully corrected later on.

"

I am not sure what you mean. The information is not lost, no one defines the complex numbers without the multiplication. So surely the fact that the product of imaginary numbers is real is clear no matter what the definition is. By the way, what was your preferred way of defining the complex numbers?

Exactly, that's my point. This is the arrow world and ##\mathbb{F}[T]/ \bigl\langle T^2+1 \bigr\rangle## something completely different.

"

What exactly do you mean by an arrow?

"

I conclude that you are so hardened in your personal opinions that you aren't willing to even consider my point of view. There is no way to reason against "We always did it this way!".

"

How? How do you know my opinion? I haven't expressed it!

"

What's next? "If everybody would do this?" or "I have my rules."?

You could at least accept that not everybody thinks that "We always did it this way!" is a satisfactory argument.

"

It is funny you should say that, because the dual numbers ##\mathbb{F}[T]/ \bigl\langle T^2 \bigr\rangle## can be used to define the arrows that you objected to.

"

Exactly, that's my point. This is the arrow world and ##\mathbb{F}[T]/ \bigl\langle T^2+1 \bigr\rangle## something completely different.

I conclude that you are so hardened in your personal opinions that you aren't willing to even consider my point of view. There is no way to reason against "We always did it this way!".

What's next? "If everybody would do this?" or "I have my rules."?

You could at least accept that not everybody thinks that "We always did it this way!" is a satisfactory argument.

But you have a valid point, imo. If ##z## is a Complex variable, why is it broken into Real and Complex parts in so many settings?

Are there intermediate extensions between ## \mathbb Q \subset \mathbb R \subset \mathbb C ##?

I think that if you stress that the complex numbers are a field, complex analysis will still look like a miracle.

"

Very well said. I can't think of a good way to go from field properties to geometric properties like conformal mappings, although it does set the stage for inner product spaces.

The complex numbers and the real numbers carry many structures. I wanted to point out the field structure because that is what is necessary for doing complex calculus.

"

The field structure is not enough, you need the absolute value. Otherwise you could do calculus in any field, which is not the case.

"

The vector space structure is insufficient. Two-dimensional real analysis and complex analysis are very different.

"

Yes, but the two dimensional real space has additional structure, the complex structure. It is possible, and often done, to view any complex manifold as a real manifold with a complex structure.

"

Yet, students are introduced to complex numbers via the Gaußian plane. They start with a handicap for no reason and everything appears to be miraculous when complex analysis starts – only because some teachers prefer to draw little arrows instead of teaching the algebra as a field behind it.

"

I don't think the first introduction of complex numbers is always done with the idea that they are needed for complex analysis. Some think of them from a more algebraic point of view. I think the choice to emphasize the complex plane is purely pedagogical. Also, I think that if you stress that the complex numbers are a field, complex analysis will still look like a miracle.

"

And the algebraic part of an algebraic closure and the field axioms aren't even difficult. But no, little arrows rule. This is in my opinion a bad approach. The little arrows are necessary, but after the algebraic subject is settled, not before. There is a reason people come up with equations like

$$

-1=\sqrt{-1}\cdot \sqrt{-1} =\sqrt{(-1)\cdot(-1)}= \sqrt{1}=1 \quad (*)

$$

The reason is that in the world of little arrows, everything looks real, just two-dimensional. I know that my opinion is inconvenient since it is against the inertia of the old argument: We always did it this way.

"

No, I don't think your opinion is inconvenient, but I also don't see the problem with the standard approach to complex numbers. The more ways to see something the better the understanding.

"

I need the two-dimensional view, too, by writing ##\mathbb{C}\cong \mathbb{R}[T]/ \bigl\langle T^2+1 \bigr\rangle ,## of course not without mentioning that ## \bigl\langle T^2+1 \bigr\rangle## is a maximal ideal. My imaginary unit would be the homomorphic image ##t## of the variable ##T## and ##(*)## would be impossible to set equal:

$$

-1 \equiv t^2 \equiv t\cdot t \not\equiv 1\cdot 1 =1.

$$

The algebraic view is also the key to understanding that complex numbers don't have an Archimedean order and that squares are not automatically positive anymore. If these concepts are settled, let's speak about our handicapped possibilities to visualize complex numbers despite the fact that

$$

\mathbb{R}[T]/ \bigl\langle T^2+1 \bigr\rangle \neq \mathbb{R}[T]/ \bigl\langle T^2 \bigr\rangle

$$

"

It is funny you should say that, because the dual numbers ##\mathbb{F}[T]/ \bigl\langle T^2 \bigr\rangle## can be used to define the arrows that you objected to.

It requires Taylor series.

"

That is one good way. There are a couple of ways to define the complex exponent and each definition of ##e^{i\theta}## has its own proof of Euler's formula. Wikipedia has a few options here.

An interesting question is whether we can define a multiplication in ## \mathbb R^2## to turn it into an algebra. The standard inner product won't work, but why not another operation? And ##\langle T^2 \rangle## is not maximal, so the quotient won't be a field.

"

You could multiply it componentwise, or use a Lie multiplication of a two-dimensional real Lie algebra. However, you won't get a field, except if you use the graduation ##\mathbb{R}\oplus i \mathbb{R}## with ##(i\mathbb{R})\cdot (i\mathbb{R}) \subseteq \mathbb{R}.##

The complex numbers and the real numbers carry many structures. I wanted to point out the field structure because that is what is necessary for doing complex calculus. The vector space structure is insufficient. Two-dimensional real analysis and complex analysis are very different. Yet, students are introduced to complex numbers via the Gaußian plane. They start with a handicap for no reason and everything appears to be miraculous when complex analysis starts – only because some teachers prefer to draw little arrows instead of teaching the algebra as a field behind it. And the algebraic part of an algebraic closure and the field axioms aren't even difficult. But no, little arrows rule. This is in my opinion a bad approach. The little arrows are necessary, but after the algebraic subject is settled, not before. There is a reason people come up with equations like

$$

-1=\sqrt{-1}\cdot \sqrt{-1} =\sqrt{(-1)\cdot(-1)}= \sqrt{1}=1 \quad (*)

$$

The reason is, that in the world of little arrows, everything looks real, just two-dimensional. I know, that my opinion is inconvenient since it is against the inertia of the old argument: We always did it this way.
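To see concretely where ##(*)## breaks, here is a minimal numerical sketch in Python. The helper names `product_of_roots` and `root_of_product` are made up for this illustration, and `cmath.sqrt` returns the principal square root. The rule ##\sqrt{a}\cdot\sqrt{b}=\sqrt{a\cdot b}## holds for non-negative reals but fails for ##a=b=-1##.

```python
import cmath

def product_of_roots(a, b):
    # sqrt(a) * sqrt(b), using the principal complex square root
    return cmath.sqrt(a) * cmath.sqrt(b)

def root_of_product(a, b):
    # sqrt(a * b), using the principal complex square root
    return cmath.sqrt(a * b)

# For non-negative reals both expressions agree.
print(product_of_roots(4, 9), root_of_product(4, 9))

# For a = b = -1 they differ: i * i = -1, but sqrt(1) = 1.
print(product_of_roots(-1, -1), root_of_product(-1, -1))
```

The second equality sign in ##(*)## is the one that silently carries a rule over from the positive reals.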

I need the two-dimensional view, too, by writing ##\mathbb{C}\cong \mathbb{R}[T]/ \bigl\langle T^2+1 \bigr\rangle ,## of course not without mentioning that ## \bigl\langle T^2+1 \bigr\rangle## is a maximal ideal. My imaginary unit would be the homomorphic image ##t## of the variable ##T##, and the two ends of ##(*)## could no longer be set equal:

$$

-1 \equiv t^2 \equiv t\cdot t \not\equiv 1\cdot 1 =1.

$$

The algebraic view is also the key to understanding that complex numbers don't have an Archimedean order and that squares are not automatically positive anymore. If these concepts are settled, let's speak about our handicapped possibilities to visualize complex numbers despite the fact that

$$

\mathbb{R}[T]/ \bigl\langle T^2+1 \bigr\rangle \neq \mathbb{R}[T]/ \bigl\langle T^2 \bigr\rangle

$$

"

An interesting question is whether we can define a multiplication on ##\mathbb R^2## to turn it into an algebra. The standard inner product won't work, but why not some other operation? Edit: And ##\langle T^2 \rangle## is not maximal, so the quotient won't be a field. But, good point, the two aren't order-isomorphic. Similar for ##\mathbb C^{n}## and ##\mathbb R^{2n}##.

At what level of structure? Clearly one is an Algebra, while Euclidean space isn't. Though they are Topologically but not Geometrically identical.

"

The complex numbers and the real numbers carry many structures. I wanted to point out the field structure because that is what is necessary for doing complex calculus. The vector space structure is insufficient. Two-dimensional real analysis and complex analysis are very different. Yet, students are introduced to complex numbers via the Gaußian plane. They start with a handicap for no reason and everything appears to be miraculous when complex analysis starts – only because some teachers prefer to draw little arrows instead of teaching the algebra as a field behind it. And the algebraic part of an algebraic closure and the field axioms aren't even difficult. But no, little arrows rule. This is in my opinion a bad approach. The little arrows are necessary, but after the algebraic subject is settled, not before. There is a reason people come up with equations like

$$

-1=\sqrt{-1}\cdot \sqrt{-1} =\sqrt{(-1)\cdot(-1)}= \sqrt{1}=1 \quad (*)

$$

The reason is, that in the world of little arrows, everything looks real, just two-dimensional. I know, that my opinion is inconvenient since it is against the inertia of the old argument: We always did it this way.

I need the two-dimensional view, too, by writing ##\mathbb{C}\cong \mathbb{R}[T]/ \bigl\langle T^2+1 \bigr\rangle ,## of course not without mentioning that ## \bigl\langle T^2+1 \bigr\rangle## is a maximal ideal. My imaginary unit would be the homomorphic image ##t## of the variable ##T##, and the two ends of ##(*)## could no longer be set equal:

$$

-1 \equiv t^2 \equiv t\cdot t \not\equiv 1\cdot 1 =1.

$$
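The arithmetic in such a quotient can be sketched in a few lines of Python. This is only an illustration under my own conventions: a residue class ##a+bT## is stored as the pair `(a, b)`, and the hypothetical helper `mul_mod` takes the value that ##T^2## is reduced to as a parameter, so ##-1## gives ##\mathbb{R}[T]/\langle T^2+1\rangle## and ##0## gives the dual numbers ##\mathbb{R}[T]/\langle T^2\rangle##.

```python
def mul_mod(p, q, t_squared):
    """Multiply a + b*T and x + y*T, then reduce with T^2 = t_squared."""
    a, b = p
    x, y = q
    # (a + bT)(x + yT) = ax + (ay + bx) T + by T^2
    return (a * x + t_squared * b * y, a * y + b * x)

t = (0, 1)  # the residue class of T

print(mul_mod(t, t, -1))            # t*t in R[T]/<T^2+1>: (-1, 0)
print(mul_mod(t, t, 0))             # t*t in R[T]/<T^2>:   (0, 0)
print(mul_mod((1, 2), (3, 4), -1))  # (1+2i)(3+4i) = -5+10i: (-5, 10)
```

In the dual numbers the class of ##T## is a nonzero element whose square is zero, so it has no inverse. That is the computational face of ##\langle T^2\rangle## not being maximal.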

The algebraic view is also the key to understanding that complex numbers don't have an Archimedean order and that squares are not automatically positive anymore. If these concepts are settled, let's speak about our handicapped possibilities to visualize complex numbers despite the fact that

$$

\mathbb{R}[T]/ \bigl\langle T^2+1 \bigr\rangle \neq \mathbb{R}[T]/ \bigl\langle T^2 \bigr\rangle

$$

Some people call certain objects "comex curves", other people call them "Riemann surfaces".

"

Before getting into more advanced subjects like Riemann surfaces, there are conformal mappings between simply connected open sets in the complex plane.

Separating complex numbers into real and imaginary parts is exactly what this article wants to put into the second row of consideration, and viewing complex numbers as elements of one field in the first place rather than reducing them to a simple, real vector space.

This narrowed view as ##a+ib## is in my opinion what hides the beauty of complex analysis, or the algebraic background of complex numbers. It is a widespread disease and not really helpful. This article was all about

$$

\mathbb{C} \neq \mathbb{R}^2.

$$

"

At what level of structure? Clearly one is an Algebra, while Euclidean space isn't. Though they are Topologically but not Geometrically identical.

Some people call certain objects "comex curves", other people call them "Riemann surfaces".

"

Ah, the commercial exchange. They got great curves ;).

I still don't get it. When I say ##y = 2 + 3## I know that ##y = 5## and that ##5 = 2 + 3##. I can, looks like, unpack the ##5## into ##3 + 2##. Can I do something to ##e^{i \varphi}## (is there an algebraic algorithm?) to get to ##a + ib##?

I do know that ##e^{i \varphi} = \cos \varphi + i \sin \varphi##, but it's the same issue here too. Is there summation of a series or a limit of a function or something else involved in proving this equality?

"

Imagine any point in a planar, two-dimensional coordinate system, except the origin that requires particular handling since the distance between the origin and the origin is zero and we cannot measure an angle between two lines of length zero.

So given any point ##z## in that grid, the coordinate system of the plane. How would you describe it to me if I asked you to show me a way from the origin to the point ##z##?

As I see it, you could either tell me to go ##a## units to the right and ##b## units to the top, which means in equations that ##z=(a,b)=a+ib## if we identify the plane with complex numbers, or you could tell me to look horizontally to the right, then turn by an angle of ##\varphi ## counterclockwise and walk ##r## units in the new direction, which means ##z=(a,b)= r\cdot e^{i \varphi }.##

##r=|z|=\sqrt{a^2+b^2}## (Pythagoras) and times ##e^{i \varphi }## is the turning from looking horizontally to looking towards ##z.##

There are several ways to see why times ##e^{i \varphi }## is a counterclockwise rotation of the point ##(r,0)## to the point ##(a,b).## One is to look at Euler's formula (as linked to twice in previous posts) or by the study of complex multiplication (see picture in post #59). The only ambiguity here is, that the vertical coordinate is once the second in ##(a,b),## the ##b## and at the same time identified with the complex number ##i b##. You can imagine this as units. We have the unit ##[1]## horizontally and the unit ## [\mathrm{i}]## vertically. We do not write the units in ##(a,b)## if we refer to a point on the grid, and do write the units if we identify such a point with a complex number: ##(a,b)=a+ i b.##

Gracias, but do I have a case if I say that the equality ##re^{i\varphi} = a + ib## is not as evident/obvious as the one you see in ##5 = 5##?

"

Good point. In fact, the definition of what a complex exponent means is not so simple. The correct meaning of ##e^{i\theta}## can only be accepted after some work. The work is not elementary. I think that the definition that stays within the more basic facts is the limit definition. The associated proofs are still not elementary. That is why it took a genius like Euler to discover Euler's formula.

PS. You should get comfortable in knowing that there are solid proofs that Euler's formula, ##e^{i\theta}=\cos(\theta)+i \sin(\theta)##. Then you are free to use it and forget the details of the proof. It is very useful.
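For readers who want to see the limit definition at work before trusting the proofs, here is a rough numerical sketch in Python. It assumes the limit definition meant above is ##e^{i\theta}=\lim_{n\to\infty}\left(1+\frac{i\theta}{n}\right)^n##, and the function name `exp_i_limit` is invented for this example. It is a sanity check, not a proof.

```python
import math

def exp_i_limit(theta, n):
    # n-th term of the sequence (1 + i*theta/n)^n
    return (1 + 1j * theta / n) ** n

theta = math.pi / 3
exact = complex(math.cos(theta), math.sin(theta))  # cos(theta) + i sin(theta)

# The distance to cos(theta) + i sin(theta) shrinks as n grows.
for n in (10, 1000, 10**6):
    print(n, abs(exp_i_limit(theta, n) - exact))
```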

@fresh_42 I see. I'm amazed that a "simple" expression like ##e^{i \theta}## equals ##a + bi##. How??

"

By Euler's formula, or if you prefer that, by expressing the same (complex) number ##z## once in Cartesian coordinates ##z=(x,y)=x+iy## and then in polar coordinates ##z=r\cdot e^{i \varphi }## where ##r=|z|=\sqrt{x^2+y^2}## and ##\varphi ## is determined by ##\dfrac{x}{r}=\cos \varphi ## and ##\dfrac{y}{r}=\sin \varphi .## It uses the fact that we can represent complex numbers in a planar coordinate system with a real axis (##x##) and a purely imaginary axis (##i y ##).
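The change of coordinates can be tried out with Python's standard `cmath` module. This is just a sketch, and the wrapper names `to_polar` and `from_polar` are my own. `cmath.polar` returns the pair ##(r,\varphi)##, and the way back uses Euler's formula explicitly.

```python
import cmath
import math

def to_polar(z):
    # returns (r, phi) with r = |z| and phi the argument of z
    return cmath.polar(z)

def from_polar(r, phi):
    # r * e^{i phi} written out via Euler's formula
    return r * complex(math.cos(phi), math.sin(phi))

z = complex(1, 1)
r, phi = to_polar(z)
print(r, phi)              # approximately sqrt(2) and pi/4
print(from_polar(r, phi))  # approximately 1+1j again
```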

"

So if ##z = 1## then ##1 = |1| \times e^{i \times 0} = e^0 = 1##?

"

Yes.

Is there software online where I can test this?

So ##e^{i \theta} \times z## will rotate ##z## counterclockwise by ##\theta##?

"

You can use https://www.wolframalpha.com/ for such calculations, although it isn't really designed for calculations this basic.

Once you studied/learned/accepted Euler's formula, and the Wikipedia link above has several proofs of the identity

$$

e^{i\varphi }=\cos \varphi + i \sin \varphi

$$

then it is quite easy. Every complex number ##z=x+iy## can be written as ##z=|z|\cdot e^{i\varphi }## where ##|z|=\sqrt{x^2+y^2}## is the absolute value of ##z## that is the distance from the origin ##0+i\cdot 0=0## of the coordinate system, and ##\varphi ## the so-called argument of ##z,## the counterclockwise measured angle from the positive real axis, the ##x##-axis.

Now we get

$$

e^{i \theta} \cdot z = e^{i \theta} \cdot |z|\cdot e^{i\varphi }=|z|\cdot e^{i\theta + i\varphi }=|z|\cdot e^{i(\theta + \varphi)} .

$$

The result is therefore a complex number of absolute value ##|z|## and an argument ##\theta + \varphi ## which is the original ##\varphi ## rotated counterclockwise (we always measure from the positive real axis counterclockwise, of course, if the angles are positive) to ##\theta + \varphi ## which is just a rotation by ##\theta.##
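If you would rather test this locally than online, a few lines of Python will do. This is a small sketch with a hypothetical helper `rotate`. It multiplies ##z## by ##e^{i\theta}## and compares the absolute value and the argument before and after.

```python
import cmath
import math

def rotate(z, theta):
    # multiplication by e^{i theta}
    return cmath.exp(1j * theta) * z

z = 1 + 1j
theta = math.pi / 2
w = rotate(z, theta)

print(w)                                # approximately -1+1j
print(abs(z), abs(w))                   # the absolute value is unchanged
print(cmath.phase(w) - cmath.phase(z))  # approximately theta
```

Note that `cmath.phase` returns values in ##(-\pi,\pi]##, so for other inputs the difference of the two arguments may come out as ##\theta - 2\pi## instead of ##\theta##.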

View attachment 348656

Source: https://de.wikipedia.org/wiki/Komplexe_Zahl#Multiplikation

The pictures in the English version were a bit messy.

Is there software online where I can test this?

So ##e^{i \theta} \times z## will rotate ##z## counterclockwise by ##\theta##?

"

It looks like Wolfram|Alpha can help, but I am not familiar with it. See this.

That's really kind of you to explain what seems to be the relationship between complex numbers ##z = x + iy## and ##e^{i \theta}##. What does ##e^{i\theta}## do? Rotate ##1## by angle of ##\theta##?

"

Yes. That would be the position of ##e^{i\theta}## in the complex plane. The rotation is counter-clockwise. In addition, multiplication of any complex number, ##z##, by ##e^{i\theta}## will rotate ##z## counter-clockwise by the angle ##\theta##.

What is its geometric meaning, if I may ask?

"

I can only give some rather vague intuitive reactions.

1) I see it as relating the XY coordinate system to the polar coordinate system.

2) The way that complex multiplication relates to rotations around the origin, then leads to the rotations in the other direction relating to division. So there is always a complex multiplicative inverse (unlike general matrices).

3) Rotations are significant in cyclic functions.

4) The behavior of the exponential function under differentiation is very significant in differential equations.

I'm sure that others can give a more concrete and coherent answer.

What is its geometric meaning, if I may ask?

"

View attachment 348633

We have the complex number ##z=x+iy = e^{i \theta}.## Its real ##x##-component is ##x=\cos \theta## and its imaginary ##iy##-component is ##y=\sin \theta## by the definition of cosine and sine at the right triangle. Together, this becomes

$$

z=x+iy=\cos \theta +i \sin \theta

$$

That the RHS equals ##e^{i \theta }## is Euler's formula.

Square roots of negative numbers are imaginary numbers e.g. ##\sqrt {-3}##

"

If you ignore multiplication and consider only addition, the imaginary numbers are indistinguishable from the reals.

Yes, but he probably meant vector spaces over ##\mathbb R##.

"

This is at the same level as saying they are both additive groups. ##\mathbb{C}## and ##\mathbb{R}^2## are commonly understood as a field and a two-dimensional real vector space, respectively. Of course, one can always write them as ##\mathbb{C}=\left(\mathbb{C},s_1,\ldots,s_n\right)## or ##\mathbb{R}^2=\left( \mathbb{R}^2, t_1, \ldots , t_m \right) ## where ##s_i , t_j## represent the countless structures they can carry, but nobody does this.

This [##\mathbb{C}\neq \mathbb{R}^2##, ed.] is not true without any context. The opposite is true.

"

What??? This is clear misinformation!

$$

\underbrace{\mathbb{C}}_{\nearrow \text{ field }} \quad \neq \quad \underbrace{\mathbb{R}^2}_{\text{ no field }\nwarrow}

$$

The Gaussian integers are primarily a ring and not a ##\mathbb{Z}##-module. I guess, I have the same objections.

Separating complex numbers into real and imaginary parts is exactly what this article wants to put into the second row of consideration, and viewing complex numbers as elements of one field in the first place rather than reducing them to a simple, real vector space.

"

It is not reducing, it is using it.

"

This narrowed view as ##a+ib## is in my opinion what hides the beauty of complex analysis, or the algebraic background of complex numbers. It is a widespread disease and not really helpful.

"

Why is it not helpful? It definitely is for some things.

"

This article was all about

$$

\mathbb{C} \neq \mathbb{R}^2.

$$

"

This is not true without any context. The opposite is true.

Ps. Do you have a similar objection for the Gaussian integers?

The geometry of the complex plane is very important in many applications of complex analysis. IMO, a lot is lost when it is only considered algebraically.

"

It is a tool, and shouldn't be the central view that it often unfortunately is. The complex plane supports the perspective of a two-dimensional real vector space. You can throw complex analysis in the trash with this limited view (sounded better in German).

$$

\begin{pmatrix}0\\1\end{pmatrix}\cdot \begin{pmatrix}0\\1\end{pmatrix}=\begin{pmatrix}-1\\0\end{pmatrix}

$$

is crucial!

This narrowed view as ##a+ib## is in my opinion what hides the beauty of complex analysis, or the algebraic background of complex numbers. It is a widespread disease and not really helpful. This article was all about

$$

\mathbb{C} \neq \mathbb{R}^2.

$$

"

The geometry of the complex plane is very important in many applications of complex analysis. IMO, a lot is lost when it is only considered algebraically.

It's in the Bible and everything – be fruitful and multiply!

The concept being discussed is called closure. If I have c = a ⊗ b where ⊗ is addition, subtraction, multiplication or division, and a and b are both real, so is c. If a and b are both complex, so is c. If a and b are purely imaginary, c might or might not be – it is not closed.

This makes purely imaginary numbers less useful.
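The closure statement is easy to check by hand, or with a short Python sketch like the following. The predicate `is_purely_imaginary` is my own helper and simply tests whether the real part is zero, so it also counts ##0## as purely imaginary.

```python
def is_purely_imaginary(z):
    # True if the real part vanishes (this includes z = 0)
    return complex(z).real == 0

a, b = 2j, 3j

print(a + b, is_purely_imaginary(a + b))  # stays on the imaginary axis
print(a - b, is_purely_imaginary(a - b))  # stays on the imaginary axis
print(a * b, is_purely_imaginary(a * b))  # (2i)(3i) = -6 is real
print(a / b, is_purely_imaginary(a / b))  # (2i)/(3i) = 2/3 is real
```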

"

Separating complex numbers into real and imaginary parts is exactly what this article wants to put into the second row of consideration, and viewing complex numbers as elements of one field in the first place rather than reducing them to a simple, real vector space.

This narrowed view as ##a+ib## is in my opinion what hides the beauty of complex analysis, or the algebraic background of complex numbers. It is a widespread disease and not really helpful. This article was all about

$$

\mathbb{C} \neq \mathbb{R}^2.

$$

So you're one of those people who believe in multiplication?

"

It's in the Bible and everything – be fruitful and multiply!

The concept being discussed is called closure. If I have c = a ⊗ b where ⊗ is addition, subtraction, multiplication or division, and a and b are both real, so is c. If a and b are both complex, so is c. If a and b are purely imaginary, c might or might not be – it is not closed.

This makes purely imaginary numbers less useful.

Je ne sais pas, but we could exclude these anomalies from our study, oui? Even division seems to be a problem since ##\frac{ai}{bi} = \frac{a}{b}##. We would be restricted to addition and subtraction, i.e. the set of purely imaginary numbers would be closed under addition and subtraction only. Is ##0## both real AND imaginary? The imaginary and real lines do intersect at ##(0, 0)##.

"

Then what is the difference from the real numbers?

So you're one of those people who believe in multiplication? It's a Deep State trick! ;-)

"

Multiplication is the key to survival.

When you multiply two of those you get a real number ##i\cdot i =-1##.

"

So you're one of those people who believe in multiplication? It's a Deep State trick! ;-)

Why not study purely imaginary numbers? Drop the real part and simply investigate the imaginary part. It is a number line too, just like the real number line, oui? Is there an imaginary π, a πi? Don't we have LaTeX on this site? An imaginary circle, it looks like, will have to have a real circumference or diameter.

"

When you multiply two of those you get a real number ##i\cdot i =-1##.

That is not what I meant. I meant the Krull dimension of a ring, then any field has dimension 0.

"

Added to the list. It's hard to find a dimension without ideals.

I have meanwhile 21 keywords. And I haven't even looked up the history which should be exciting, too, especially topology and fractals, let alone …

"

… some linked papers that do exhibit confusion.

"

And Greg said that he does not like articles split into parts.

https://de.wikipedia.org/wiki/Krulldimension#Beispiele

"

That is not what I meant. I meant the Krull dimension of a ring, then any field has dimension 0.

Which wiki page is that?

"

https://de.wikipedia.org/wiki/Krulldimension#Beispiele

Just a remark on the Krull dimension. I haven't done the math but Wiki says that ##\dim_K(\mathbb{C},\text{Hausdorff})=0## and ##\dim_K(\mathbb{C},\text{Zariski})=1.##

That projected article about dimensions gets longer the closer I look at it.

"

Which wiki page is that?

This is not an argument. I am not trying to prove anything. This is just another way to look at things.

"

Just a remark on the Krull dimension. I haven't done the math but Wiki says that ##\dim_K(\mathbb{C},\text{Hausdorff})=0## and ##\dim_K(\mathbb{C},\text{Zariski})=1.##

That projected article about dimensions gets longer the closer I look at it.

This argument is circular. You start with a real Riemann plane and conclude that it is two-dimensional.

"

This is not an argument. I am not trying to prove anything. This is just another way to look at things.

A topological manifold is a topological space which is locally homeomorphic to ##\mathbb R^n##. This ##n## is the dimension of the topological manifold. As such, ##\mathbb C## is two-dimensional.

"

This argument is circular. You start with a real Riemann plane and conclude that it is two-dimensional.

Could you elaborate on what you meant @martinbn in post #7?

"

A topological manifold is a topological space which is locally homeomorphic to ##\mathbb R^n##. This ##n## is the dimension of the topological manifold. As such, ##\mathbb C## is two-dimensional.

My favourite is Analytic Continuation and its use in summing divergent or otherwise meaningless sums. It may seem a pure math pastime, but Borel Summation (which is justified by analytic continuation – reversing the sum and integral can only be done on a region, but by analytic continuation can be extended to the whole complex plane) is very useful in differential equations:

https://math.osu.edu/~costin/adiab.pdf

For this reason, Borel Summation is included in many Engineering Mathematics textbooks such as Advanced Engineering Mathematics by Lopez and Benders Lectures on Mathematical Physics (a treasure trove of all sorts of interesting stuff). But, naughty, naughty, they do not explain why you can reverse the sum and integral.

Thanks

Bill

As to your initial question, not really. But inspired by some of the discussion in this thread, it could be interesting to survey the different ways dimension is used in mathematics and/or how the same object, e.g., the complex numbers, has many different dimensions depending on how you look at it. It doesn't have to be exhaustive or advocate a point of view. The latter is probably what usually invites the most nitpicking (well, more nitpicking than I already expect from mathematicians).

"

Dimension is indeed an interesting topic! There came at least half a dozen keywords to my mind without doing any research (17 so far). And it is not as trivial as it sounds! Let me take the chance and make a little survey here:

a) Does any of the participants, critics, or readers here want to write that article instead of me?

b) Would you mind giving me some keywords I should not forget to mention?

I have already read Krull, and topology. However, the latter was a bit imprecise and I'm not sure I know what had been meant in post #7, i.e. which objects and topologies had been meant. I mean, since zero wasn't excluded, it sounded like the comparison of additive groups, but that is guesswork from my side. Could you elaborate on what you meant @martinbn in post #7?

Perhaps the next insight article should be about the not-so-trivial dimensions of mathematical objects.

"

The Krull dimension of any field is zero. From that point of view the field of complex numbers is a point.

Perhaps the next insight article should be about the not-so-trivial dimensions of mathematical objects.

"

Do you have some specific objects in mind? I'd prefer a topic that isn't in the category of "religion" like arithmetic rules or complex numbers. Those topics are of the kind that literally everybody has to say something about, everybody has a different view and complains if you don't hit their perspective, everybody knows it better, but none of those critics wrote it down before. It is tedious.

How about history? I have a rather thick book about the history of mathematics between 1700 and 1900. It is a bit biased towards French mathematicians since it is by a French author, but not as much as the Britannica is biased towards British people.

One has to keep in mind that the set of complex numbers is not just a field. It is also a topological space and as such is homeomorphic to the plane and in this context dimension two is the correct one.

"

I haven't said that this is an incorrect view, except if it is reduced to ##\mathbb{R}^2##. I think, it just shouldn't be the first view. But, hey, let's consider it as vector space over the rationals.

It is written for students who aren't trained to accept only one view. It was written to keep in mind that ##\mathbb{C}## is a field in the first place, not a vector space and even less a plane. Cauchy's work is the real achievement in calculus, neither Newton's nor Leibniz's. And Cauchy's theorems are not linear algebra.

Complex numbers contain two independent quantities so they are two-dimensional. An Excel spreadsheet with a hundred columns can be one-hundred dimensional. And so forth.

"

Sorry, but this is wrong, and why I wrote that article. Complex numbers are scalars (one quantity) and as such one-dimensional. Every field is a one-dimensional vector space over itself. Please read the article and its purpose before you spread misinformation. They can be viewed as a two-dimensional real vector space, but this is a reduction. A reduction that doesn't allow complex analysis. Moreover, the complex numbers are an infinite-dimensional rational vector space. So claiming they are two-dimensional is as right and wrong as it is to claim they are infinite-dimensional. Both perspectives are insufficient to perform analysis and physics.

A spreadsheet in Excel is two-dimensional, no matter how many columns it has. You can make an Excel file three-dimensional by adding more sheets to the same file, but that's it.