- #1

Negatron

- 73

- 0

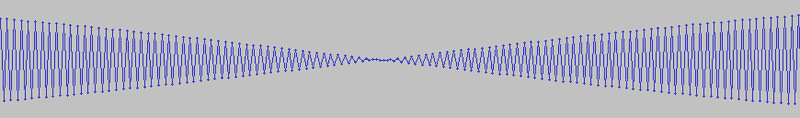

I used Audacity to generate a 5500 Hz tone at a sampling rate of 11025 Hz.

This is the result:

To me this appears to be a tone at a frequency of 11025/2 Hz (5512.5 Hz) with oscillating amplitude, rather than a tone at 5500 Hz.

The tone also sounds like it looks. It has audible oscillations in amplitude.
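To make that observation concrete, here is a rough Python sketch (my own illustration, not anything Audacity does internally). It checks numerically that the samples of a 5500 Hz sine at 11025 Hz are the same numbers as an alternating-sign sequence at the half-sample-rate frequency multiplied by a slow sine envelope. The function names and the zero starting phase are assumptions made for the example.

```python
import math

FS = 11025.0             # sampling rate in Hz (from the post)
F_TONE = 5500.0          # generated tone in Hz (from the post)
F_HALF = FS / 2          # 5512.5 Hz, half the sampling rate
DELTA = F_HALF - F_TONE  # 12.5 Hz gap between the two


def tone_sample(n):
    """Sample n of a 5500 Hz sine, assuming zero starting phase."""
    return math.sin(2 * math.pi * F_TONE * n / FS)


def modulated_half_rate_sample(n):
    """Alternating-sign sequence (period of two samples) times a slow envelope."""
    carrier = -1.0 if n % 2 == 0 else 1.0               # equals -(-1)^n
    envelope = math.sin(2 * math.pi * DELTA * n / FS)   # 12.5 Hz sine
    return carrier * envelope


# Compare the two descriptions over one second of samples.
max_diff = max(
    abs(tone_sample(n) - modulated_half_rate_sample(n))
    for n in range(int(FS))
)
print("largest difference between the two sequences:", max_diff)

# The magnitude of a 12.5 Hz sine envelope swells and fades twice per cycle.
beat_rate_hz = 2 * DELTA
print("amplitude swells per second:", beat_rate_hz)
```

The two sequences agree to within floating-point rounding, which matches what the plot looks like: sample values flipping sign every sample while their size rises and falls.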

At increasingly higher sampling rates this is less and less of a problem and eventually the tone sounds consistent.
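A quick way to put numbers on this, as a sketch only: for several sampling rates, split the sampled 5500 Hz sine into short windows of about four tone periods and record the largest sample magnitude in each window. The smallest of those per-window peaks shows how deep the dips in the raw sample values get. The helper name `worst_local_peak`, the window length, the 0.2 s duration, and the list of rates are all my own choices for illustration, and this only looks at raw sample values, not at what plays back.

```python
import math


def worst_local_peak(fs, f_tone=5500.0, periods=4, duration_s=0.2):
    """Smallest per-window peak of |sample| for a sine at f_tone sampled at fs.

    A value near 1 means every short window contains a sample close to full
    amplitude; a value near 0 means the raw samples dip almost to silence.
    """
    n_total = int(fs * duration_s)
    samples = [abs(math.sin(2 * math.pi * f_tone * n / fs)) for n in range(n_total)]
    window = max(2, round(periods * fs / f_tone))  # about `periods` tone cycles
    peaks = [
        max(samples[i:i + window])
        for i in range(0, n_total - window + 1, window)
    ]
    return min(peaks)


for rate in (11025.0, 22050.0, 44100.0, 96000.0):
    print(f"{rate:8.0f} Hz -> worst local peak {worst_local_peak(rate):.3f}")
```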

This conflicts with what I have learned: that a sampling rate of 2f can exactly represent a signal containing frequencies between 0 and f. My intuition told me there would be problems with signals near f, and sure enough, playing around with signal generators seems to confirm it.

So now I'm in conflict. I understand why the amplitude oscillations occur but I don't understand how the claim of the sampling theorem can be upheld when such problems exist within the range of sample-able frequencies.
