- #1

Phy73

Hi,

I need some direction on a problem that has been bothering me quite a lot; even a few links or a short explanation would help. Thank you in advance!

I have a huge (unknown) dataset Ω of experimental values {[itex]e_i[/itex]} that should approximate an unknown curve f, with noise in both the value and the variable, under the condition that f is non-decreasing.

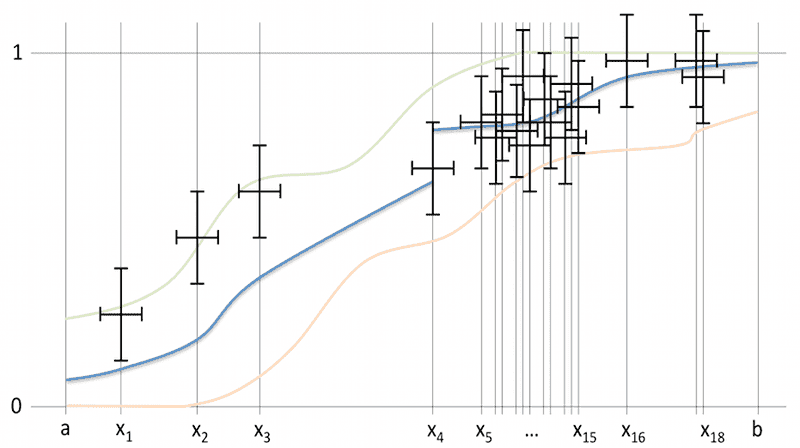

I get a subset of Ω, called "training subset", that is randomly chosen, so that should reflect the same characteristics of Ω in distribution and other geometrical evaluators. In the following image the subset is Ω' = {[itex]x_1,...,x_{18}[/itex]}, just to explain it with an intuitive drawing:

Now, what I would like is to find the "best" curve fitting the data and, if possible, two confidence curves, like the orange and the green ones in the previous image, expressing some percentile confidence that the true curve lies between them.

I see several issues:

- what does "best" curve mean? Well, if we have many data points whose confidence intervals in the variable overlap (like between [itex]x_5[/itex] and [itex]x_{15}[/itex]), it means the most probable value; the more points I add to Ω', the more "precise" I expect the estimated curve to be;

- because there are errors in the variable, does it make sense to evaluate [itex]f[/itex] only at a finite number of points with some algorithm?

- where points are dense, I expect almost to see the distribution of the errors, and the two confidence curves to lie closer to the "best" curve, while where points are sparse the curve should be highly imprecise (confidence curves far apart); should these two cases be treated separately?

- the monotonicity condition on f implies some limitation on the confidence curves even in the absence of non-dense intervals, as I expect the confidence curves to be monotonic too (am I right?); but what does this mean in terms of constructing the "best" curve fitting the data?

- the errors in the data can be considered random Gaussian errors, with σ to be determined; while the error distribution in the value can be estimated within a dense interval, how can the error distribution in the variable be estimated?

I would really like to understand how to address this kind of problem, even using some computational algorithm, but I have difficulty finding the proper terms to search for, or a hint showing me where to start.

Thank you in advance!
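To make the question more concrete, here is a rough sketch of one way the monotone fit and the two confidence curves described above might be computed. It is not a settled method. It uses a least-squares non-decreasing step fit (the pool-adjacent-violators scheme, usually filed under "isotonic regression") and a bootstrap over the points to get pointwise percentile bands. Everything in it is an assumption made for illustration: the function names `pava`, `step_eval` and `bootstrap_bands`, the synthetic data, and the 5%/95% levels. It also treats the noise as being in the value only, so the errors in the variable are ignored.

```python
import bisect
import random

def pava(y):
    """Least-squares non-decreasing fit to y (y already ordered by x)."""
    blocks = []  # each block is [mean, number of points]
    for yi in y:
        blocks.append([yi, 1])
        # Pool adjacent blocks while they violate monotonicity.
        while len(blocks) > 1 and blocks[-2][0] > blocks[-1][0]:
            m2, n2 = blocks.pop()
            m1, n1 = blocks.pop()
            blocks.append([(m1 * n1 + m2 * n2) / (n1 + n2), n1 + n2])
    fit = []
    for mean, n in blocks:
        fit.extend([mean] * n)
    return fit

def step_eval(xs, fit, g):
    """Evaluate the fitted step function at g (xs sorted ascending)."""
    i = bisect.bisect_right(xs, g) - 1
    return fit[max(i, 0)]  # left of all data: use the first fitted value

def bootstrap_bands(x, y, grid, n_boot=500, lo=0.05, hi=0.95, seed=0):
    """Pointwise percentile bands from refitting on resampled points."""
    rng = random.Random(seed)
    n = len(x)
    curves = []
    for _ in range(n_boot):
        # Resample points with replacement, then order them by x.
        # Ties in x are left in arbitrary order (a simplification).
        idx = sorted((rng.randrange(n) for _ in range(n)), key=lambda i: x[i])
        xs = [x[i] for i in idx]
        fit = pava([y[i] for i in idx])
        curves.append([step_eval(xs, fit, g) for g in grid])
    lower, upper = [], []
    for k in range(len(grid)):
        col = sorted(c[k] for c in curves)
        lower.append(col[int(lo * (n_boot - 1))])
        upper.append(col[int(hi * (n_boot - 1))])
    return lower, upper

# Synthetic, purely illustrative data: a rising trend plus Gaussian noise
# in the value only (no noise in x, unlike the real problem).
rng = random.Random(1)
x = sorted(rng.uniform(0, 10) for _ in range(60))
y = [0.5 * xi + rng.gauss(0, 0.8) for xi in x]

fit = pava(y)                       # "best" non-decreasing step curve
grid = [0.5 * i for i in range(21)]
lower, upper = bootstrap_bands(x, y, grid, n_boot=200)
```

Since every resampled fit is non-decreasing, the pointwise percentiles are non-decreasing too, so the two bands come out monotonic. Where few points fall, the resampled fits differ more from one another, which should widen the gap between `lower` and `upper`. Handling noise in the variable would need something beyond this sketch.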