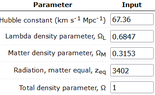

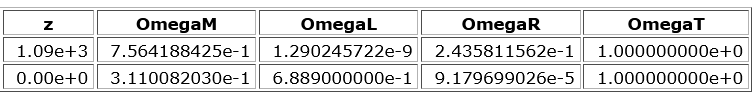

JimJCW said:

> I think if at input Ωm,0 = 0.3111 but at output it becomes Ωm,0 = 0.3110082030, it is a discrepancy.

You are right, but an output of ΩT,0 > 1 would also be a discrepancy, because, without giving the value explicitly, the collaboration states the spatial flatness in words, if I read the paper correctly.

So maybe we are missing something in the equations, but as a non-cosmologist, I can't figure out what.

Our previous versions of Lightcone8 suffered the same problem.

I'm also still perplexed as to why the CSV output option does not work in the Trial Version when selected. I did upload it with the OuputCSV.js file (a new file that was not there in earlier versions), and it shows on my fork.