Myslius

Suppose an object very far from the Earth falls freely in the Earth's gravitational field. By the time it reaches the Earth's surface, it has gained kinetic energy E_1.

Suppose an electron far from a proton falls freely in the proton's electric field. By the time it reaches the Bohr radius, it has gained kinetic energy E_2.

Conversely, if an object at rest on the Earth's surface is given energy E_1, it escapes to infinity.

Here is what I don't understand. The falling electron has gained E_2 = 13.6 eV when its distance from the nucleus is twice the Bohr radius.

13.6 eV is the energy required to strip the electron from a hydrogen nucleus (confirmed experimentally).

At the Bohr radius itself, the electron has gained 27.2 eV. Where does this factor of 2 come from?
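As a cross-check that does not depend on the simulation: falling from rest at infinity to a distance r turns a potential-energy drop of C/r into kinetic energy, with C = G*M*m for gravity and C = k_e*e^2 for the electron-proton pair (treating the heavier body as fixed). A minimal sketch using CODATA 2018 constants; the 1 kg test mass and the mean Earth radius are illustrative assumptions, and the function names are hypothetical:

```python
# Energy gained by falling from rest at infinity to distance r: K = C / r.
# Assumes the heavier body stays fixed (no recoil) and no air drag.

G_M_EARTH = 3.986004418e14    # Earth's gravitational parameter G*M, m^3 s^-2
R_EARTH = 6.371e6             # mean Earth radius, m (assumed value)
K_E = 8.9875517923e9          # Coulomb constant, N m^2 C^-2
Q_E = 1.602176634e-19         # elementary charge, C (also J per eV)
A0 = 5.29177210903e-11        # Bohr radius, m


def gravity_fall_energy(mass_kg, r):
    """E_1: kinetic energy (J) gained by mass_kg falling from infinity to radius r."""
    return G_M_EARTH * mass_kg / r


def coulomb_fall_energy_ev(r):
    """E_2: kinetic energy (eV) gained by an electron falling from infinity to r."""
    return K_E * Q_E**2 / r / Q_E  # joules, then divide by e to get eV


e1 = gravity_fall_energy(1.0, R_EARTH)  # hypothetical 1 kg test mass
print(f"E_1 for 1 kg at Earth's surface: {e1:.4e} J")
print(f"E_2 at a0:   {coulomb_fall_energy_ev(A0):.3f} eV")
print(f"E_2 at 2*a0: {coulomb_fall_energy_ev(2 * A0):.3f} eV")
```

The last two lines come out at roughly 27.2 eV and 13.6 eV, the same pair of numbers as above, so the simulation agrees with the plain C/r formula.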

The numbers come from a small simulation program I wrote:

For gravity, the program integrates Newton's law of gravitation.

For electromagnetism, the program integrates Coulomb's law.
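The program itself isn't shown. The following is a hypothetical sketch of the same idea, not the code that produced the output below: an electron and a proton are released from rest at an assumed separation r0 = 1e-7 m (instead of infinity), both particles are free to move, and the 1-D Coulomb attraction is integrated with velocity Verlet using an assumed adaptive step (a fraction `eta` of the local dynamical time) until the separation first reaches the Bohr radius. The name `coulomb_fall` and all of its parameters are illustrative.

```python
import math

# CODATA 2018 values, SI units
K_E = 8.9875517923e9          # Coulomb constant, N m^2 C^-2
Q_E = 1.602176634e-19         # elementary charge, C (also J per eV)
M_E = 9.1093837015e-31        # electron mass, kg
M_P = 1.67262192369e-27       # proton mass, kg
A0 = 5.29177210903e-11        # Bohr radius, m
MU = M_E * M_P / (M_E + M_P)  # reduced mass, kg


def coulomb_fall(r0=1e-7, r_stop=A0, eta=1e-3):
    """Hypothetical sketch: release an electron and a proton from rest at
    separation r0 and integrate their 1-D Coulomb attraction with velocity
    Verlet until the separation first drops to r_stop or below.
    Returns (time, separation, KE_e, KE_p, p_e, p_p) in SI units."""
    x_e, x_p = r0, 0.0      # electron on the right, proton at the origin
    v_e, v_p = 0.0, 0.0
    t = 0.0

    def force_on_electron(sep):
        # Attraction pulls the electron toward -x; the proton gets the same
        # magnitude in the opposite direction (Newton's third law).
        return -K_E * Q_E**2 / sep**2

    f = force_on_electron(r0)
    while x_e - x_p > r_stop:
        sep = x_e - x_p
        # Assumed adaptive step: a fraction eta of the local dynamical time.
        dt = eta * math.sqrt(MU * sep**3 / (K_E * Q_E**2))
        v_e += 0.5 * dt * f / M_E   # half kick
        v_p -= 0.5 * dt * f / M_P
        x_e += dt * v_e             # drift
        x_p += dt * v_p
        f = force_on_electron(x_e - x_p)
        v_e += 0.5 * dt * f / M_E   # second half kick with the new force
        v_p -= 0.5 * dt * f / M_P
        t += dt

    ke_e = 0.5 * M_E * v_e**2
    ke_p = 0.5 * M_P * v_p**2
    return t, x_e - x_p, ke_e, ke_p, M_E * v_e, M_P * v_p


if __name__ == "__main__":
    r0 = 1e-7
    t, sep, ke_e, ke_p, p_e, p_p = coulomb_fall(r0)
    drop = K_E * Q_E**2 * (1 / sep - 1 / r0)  # exact potential-energy drop, J
    print(f"Total time: {t:.4e} s, distance: {sep:.6e} m")
    print(f"Electron: {ke_e / Q_E:.4f} eV, proton: {ke_p / Q_E:.6f} eV")
    print(f"|p_e| = {abs(p_e):.6e}, |p_p| = {abs(p_p):.6e} kg m/s")
    print(f"KE total {(ke_e + ke_p) / Q_E:.4f} eV vs potential drop {drop / Q_E:.4f} eV")
```

Because this run starts at a finite r0 and stops one step past a0, it compares the total kinetic energy with the exact drop k_e*e^2*(1/r - 1/r0) at the separation actually reached rather than with a textbook value at a0; the electron's share should still come out close to 27.2 eV.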

Everything looks fine for gravity, but for electromagnetism my program outputs:

V1: 3093155.3510288

V2: 1684.5850519587

A1: 9.047093214817E+22

A2: 4.9272009529973E+19

M1: 2.8176738503187E-24

M2: 2.8176738503187E-24

Total Time: 0.06977473942106

Distance: 5.290930084121E-11

Energy1: 4.3577514737836E-18, eV: 27.201084699357

Energy2: 2.3733056247709E-21, eV: 0.01481417370982

V (velocity, m/s) looks fine.

A (acceleration, m/s^2) looks fine.

M (momentum of the proton and of the electron, kg·m/s) looks fine.
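Equal and opposite forces act for the same time, so the two momenta have equal magnitude, and that also fixes how the kinetic energy is shared. A short worked check against the numbers above (e is the elementary charge, r the final separation, E_e and E_p the electron and proton kinetic energies):

$$
|F_1| = |F_2| = \frac{k_e e^2}{r^2}
\quad\Rightarrow\quad
|p_e| = |p_p| = p
\quad\Rightarrow\quad
\frac{E_p}{E_e} = \frac{p^2/(2 m_p)}{p^2/(2 m_e)} = \frac{m_e}{m_p} \approx 5.45 \times 10^{-4}
$$

With the output above, 0.01481 eV / 27.201 eV ≈ 5.45 × 10^-4, matching the mass ratio, and E_e + E_p ≈ 27.22 eV is close to k_e e^2 / r evaluated at the printed distance.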

The energy and the distance are off by a strange factor of 2. Why?

My initial thought was that the Lorentz force is the sum of both forces between the electron and the proton, but that doesn't seem to be the case.

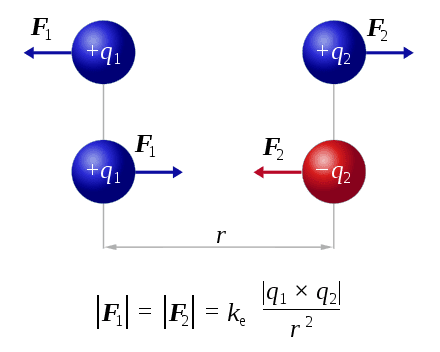

F1 equals F2, and both equal k_e * q1 * q2 / (r*r)

and not

F1 + F2 = k_e * q1 * q2 / (r*r)

What is wrong here?
