- #1

Math Amateur


I am reading Matej Bresar's book, "Introduction to Noncommutative Algebra" and am currently focussed on Chapter 1: Finite Dimensional Division Algebras ... ...

I need help with another aspect of the proof of Lemma 1.1 ... ...

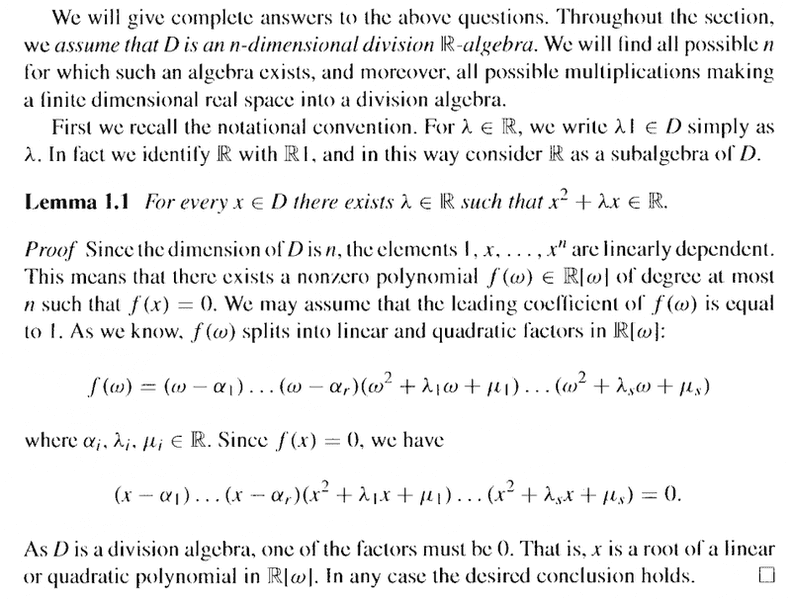

Lemma 1.1 reads as follows:

My questions regarding Bresar's proof above are as follows:

Question 1

In the above text from Bresar, in the proof of Lemma 1.1 we read the following:

"... ... As we know, ##f( \omega )## splits into linear and quadratic factors in ##\mathbb{R} [ \omega ]## ... ..."

My question is ... how exactly do we know that ##f( \omega )## splits into linear and quadratic factors in ##\mathbb{R} [ \omega ]## ... can someone please explain this fact ... ...
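My tentative guess at the argument, which I would like confirmed, is that it rests on the Fundamental Theorem of Algebra (this is my assumption; Bresar does not say so in the quoted text). Over ##\mathbb{C}## the polynomial splits completely, and since ##f## has real coefficients, ##f( \overline{z} ) = \overline{ f(z) }##, so the non-real roots occur in conjugate pairs ##z, \overline{z}##. Each such pair multiplies out to a quadratic with real coefficients:

##( \omega - z )( \omega - \overline{z} ) = \omega^2 - 2 \, \text{Re} (z) \, \omega + |z|^2##

Writing ##\lambda = -2 \, \text{Re} (z)## and ##\mu = |z|^2## (my own labels for this sketch), the discriminant is ##\lambda^2 - 4 \mu = -4 \, ( \text{Im} (z) )^2 < 0##, so this factor has no real roots. The real roots would then supply the linear factors ##( \omega - \alpha_i )## ... but I am not certain this is the reasoning Bresar intends.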

Question 2

In the above text from Bresar, in the proof of Lemma 1.1 we read the following:

" ... ... Since ##f(x) = 0## we have

##( x - \alpha_1 ) \ ... \ ... \ ( x - \alpha_r )( x^2 + \lambda_1 x + \mu_1 ) \ ... \ ... \ ( x^2 + \lambda_s x + \mu_s ) = 0##

As ##D## is a division algebra, one of the factors must be ##0##. ... ... "

My question is ... why does ##D## being a division algebra mean that one of the factors must be zero ...?
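Again, my tentative reading, offered as a sketch rather than as Bresar's own argument: in a division algebra every nonzero element has an inverse, so there are no zero divisors. If ##ab = 0## and ##a \neq 0##, then

##b = ( a^{-1} a ) b = a^{-1} ( ab ) = a^{-1} \cdot 0 = 0##

Applying this repeatedly to the product of the ##r + s## factors above would force at least one factor to be ##0##. I also assume that substituting ##x## for ##\omega## in the factorization is legitimate because ##x## commutes with the real scalars and with its own powers ... please correct me if either step is wrong.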

Help with questions 1 and 2 above will be appreciated .. ...

Peter

==============================================================================

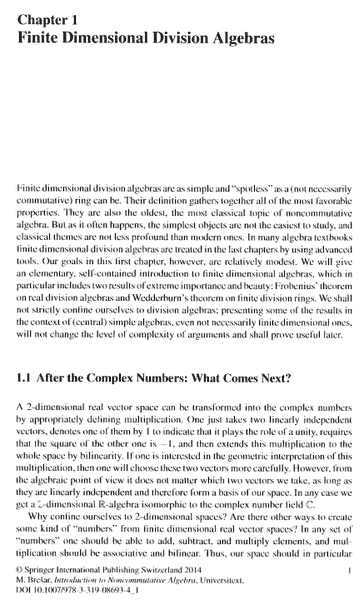

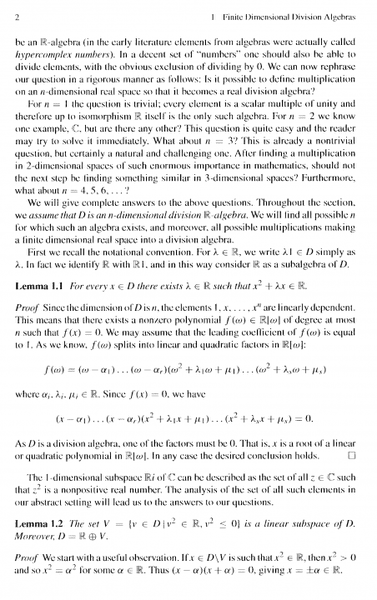

In order for readers of the above post to appreciate the context of the post I am providing pages 1-2 of Bresar ... as follows ...
