GravityX

- Homework Statement

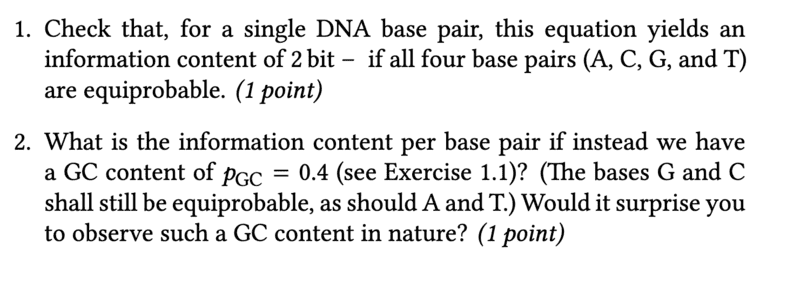

- Calculate the information content of a DNA base pair

- Relevant Equations

- ##I(A)=-\sum\limits_{x=A}P_x\log_2(P_x)##

Unfortunately, I am having problems with the following task.

For task 1, I proceeded as follows. Since the four bases are equally probable, each has probability ##P=\frac{1}{4}##. I then simply inserted this probability into the formula for the Shannon entropy:

$$I=-\frac{1}{4}\log_2\left(\frac{1}{4}\right)-\frac{1}{4}\log_2\left(\frac{1}{4}\right)-\frac{1}{4}\log_2\left(\frac{1}{4}\right)-\frac{1}{4}\log_2\left(\frac{1}{4}\right)=2$$
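The task 1 result can be checked numerically. The following is a minimal Python sketch; the function name `shannon_entropy` is chosen here for illustration and does not come from the task, and the four bases are assumed to be equally likely, as stated above.

```python
import math

def shannon_entropy(probs):
    """Return the Shannon entropy in bits of a discrete distribution."""
    # Terms with p == 0 contribute nothing, so they are skipped.
    return -sum(p * math.log2(p) for p in probs if p > 0)

# Assumption from the post: A, T, G and C each occur with probability 1/4.
uniform_bases = [0.25, 0.25, 0.25, 0.25]
entropy_uniform = shannon_entropy(uniform_bases)
print(entropy_uniform)  # 2.0
```

This agrees with the hand calculation of 2 bits per base.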

Unfortunately, I am not quite sure about task 2. The GC content indicates the proportion of G and C bases in the DNA, so AT must account for 60 % and GC for 40 %. Is the calculation then as follows?

$$I=-0.4\log_2(0.4)-0.4\log_2(0.4)-0.6\log_2(0.6)-0.6\log_2(0.6)=1.94$$
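One thing worth checking in the sum above is normalization: the four weights 0.4, 0.4, 0.6 and 0.6 add up to 2, whereas the probabilities in the Shannon formula should add up to 1. The sketch below shows one possible reading, under the assumption (not stated in the task) that the 40 % GC content is split equally between G and C and the remaining 60 % equally between A and T. The names `entropy_bits` and `gc_content` are illustrative only.

```python
import math

def entropy_bits(probs):
    """Shannon entropy in bits; rejects inputs that are not normalized."""
    if not math.isclose(sum(probs), 1.0):
        raise ValueError(f"probabilities sum to {sum(probs)}, expected 1")
    return -sum(p * math.log2(p) for p in probs if p > 0)

gc_content = 0.4  # value taken from the post

# Assumption: G and C share the GC fraction equally, A and T share the rest.
p_g = p_c = gc_content / 2        # 0.2 each
p_a = p_t = (1 - gc_content) / 2  # 0.3 each

entropy_gc = entropy_bits([p_a, p_t, p_g, p_c])
print(round(entropy_gc, 3))
```

Under this assumption the entropy comes out at about 1.97 bits per base, slightly below the 2 bits of the uniform case. Passing the weights `[0.4, 0.4, 0.6, 0.6]` directly raises a `ValueError`, because they do not form a probability distribution.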
