jaumzaum

Hello!

I was studying the odds ratio and its relation to relative risk. From what I understood, the statistic that really matters to us, and that has a nice interpretation in context, is the relative risk (I was also wondering whether the odds ratio has any interpretation). But relative risk is sometimes difficult or expensive to calculate, because we need the prevalence of the disease, and for rare diseases this means a big sample.

So what many studies do is calculate the odds ratio and interpret it as approximately the relative risk. And that's valid for rare diseases.
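To spell out the rare-disease approximation as I understand it, here is a short sketch. The cell labels are my own notation, not taken from the Wikipedia example: a and b are the exposed people with and without the disease, c and d are the non-exposed people with and without the disease.

$$
RR = \frac{a/(a+b)}{c/(c+d)}, \qquad OR = \frac{a/b}{c/d}
$$

If the disease is rare in both groups, then $a \ll b$ and $c \ll d$, so $a + b \approx b$ and $c + d \approx d$, which gives

$$
RR = \frac{a/(a+b)}{c/(c+d)} \approx \frac{a/b}{c/d} = OR
$$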

There are two things I didn't understand, considering this example from Wikipedia:

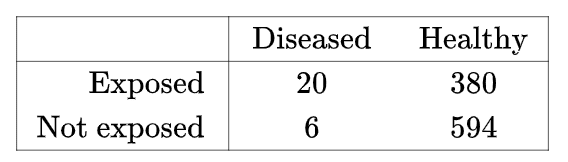

This is a village of 1000 people, and we want to study whether a radiation leak increased the incidence of a disease.

RR = (20/400)/(6/600)=5

OR = (20/380)/(6/594)=5.2
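In case anyone wants to check the arithmetic, here is a minimal Python sketch of the two population calculations. The function and variable names are my own, chosen just for illustration. The counts are the ones from the village example: 20 diseased out of 400 exposed, 6 diseased out of 600 non-exposed.

```python
def relative_risk(exposed_cases, exposed_total, unexposed_cases, unexposed_total):
    """Risk of disease among the exposed divided by risk among the non-exposed."""
    return (exposed_cases / exposed_total) / (unexposed_cases / unexposed_total)


def odds_ratio(exposed_cases, exposed_healthy, unexposed_cases, unexposed_healthy):
    """Odds of disease among the exposed divided by odds among the non-exposed."""
    return (exposed_cases / exposed_healthy) / (unexposed_cases / unexposed_healthy)


# Whole village: 400 exposed (20 diseased, 380 healthy),
# 600 non-exposed (6 diseased, 594 healthy).
rr_population = relative_risk(20, 400, 6, 600)
or_population = odds_ratio(20, 380, 6, 594)

print(round(rr_population, 2), round(or_population, 2))  # prints: 5.0 5.21
```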

I understand how these values were calculated.

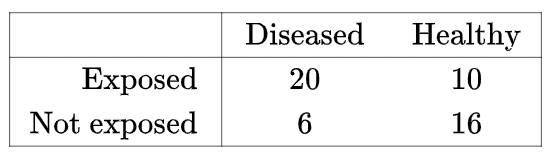

Then they say that, in many studies, we rarely have information about the whole population. So they take a sample of 52 people: the 26 who have the disease (20 exposed to the radiation leak, 6 not exposed) and 26 who don't (10 exposed, 16 not exposed).

And they calculate the OR = (20/10)/(6/16)=5.3

They say the OR of the sample is a good estimate of the OR of the population and then, using the rare disease assumption, that it is also a good estimate of the relative risk of the population.

But when we try to calculate the relative risk for the sample, we have some problems:

RR = (20/30)/(6/22)=2.4
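To make the mismatch concrete, here is a small Python sketch that computes both ratios from the sample counts used in the two calculations above (20 diseased and 10 healthy among the exposed, 6 diseased and 16 healthy among the non-exposed). The dictionary layout and function names are just my own illustration.

```python
# 2x2 table of the sample, taken from the two calculations above.
sample = {
    "exposed": {"diseased": 20, "healthy": 10},
    "unexposed": {"diseased": 6, "healthy": 16},
}


def odds_ratio(table):
    """Odds of disease among the exposed divided by odds among the non-exposed."""
    odds_exposed = table["exposed"]["diseased"] / table["exposed"]["healthy"]
    odds_unexposed = table["unexposed"]["diseased"] / table["unexposed"]["healthy"]
    return odds_exposed / odds_unexposed


def naive_relative_risk(table):
    """Relative risk computed as if the sample rows were whole exposure groups."""
    exposed_total = sum(table["exposed"].values())
    unexposed_total = sum(table["unexposed"].values())
    risk_exposed = table["exposed"]["diseased"] / exposed_total
    risk_unexposed = table["unexposed"]["diseased"] / unexposed_total
    return risk_exposed / risk_unexposed


or_sample = odds_ratio(sample)
rr_sample = naive_relative_risk(sample)

print(round(or_sample, 2), round(rr_sample, 2))  # prints: 5.33 2.44
```

So the sample OR (about 5.3) stays close to the population OR (5.2), while the RR computed this way (about 2.4) is far from the population RR of 5.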

My question is, why is the OR of the sample a good estimate of the OR of the population, but not the RR?

And also, is there any interpretation for the OR?

Thanks!
