andrewkirk said:

Hello Peter.

Broadly you have the correct idea but your approach relies on two implicit assumptions that do not hold in the general case.

Firstly, by writing ##\langle \alpha|## and ##|\alpha\rangle## as each having three components, you are assuming the vector space is finite-dimensional. Dirac's notation is most often used in quantum mechanics, where the vector spaces are usually infinite-dimensional, so a representation as a finite set of components is not available.

Secondly, you replace the inner product ##\langle\alpha|A\rangle## by a simple multiplication of a row vector ##\langle\alpha|## by a column vector ##|A\rangle##. That is only correct when those vectors are representations in an orthonormal basis. From your first embedded image, that seems to be the case here, but take care, because it will not always be the case. The more general formula requires use of a metric tensor, so that ##\langle\alpha|A\rangle = \langle\alpha| \times M \times |A\rangle##, where ##M## is the representation of the metric tensor in the given basis. For an orthonormal basis ##M## will be the identity matrix (with an infinite number of rows and columns if the space is infinite-dimensional!) so the calculation collapses to look like what you have shown.
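As a rough numerical illustration of the point about the metric, here is a minimal sketch in a real 3-dimensional space. The basis `E`, the component values and the use of NumPy are all assumptions made up for this example; none of them come from Neuenschwander or from your post.

```python
import numpy as np

# Hypothetical NON-orthonormal basis of R^3: each column is one basis
# vector, written out in the standard (orthonormal) basis.
E = np.array([[1.0, 1.0, 0.0],
              [0.0, 1.0, 1.0],
              [0.0, 0.0, 1.0]])

# Representation of the metric in this basis: M[i, j] = <e_i | e_j>.
M = E.T @ E

# Made-up components of two vectors relative to the basis E.
alpha_comp = np.array([1.0, 2.0, 3.0])
A_comp = np.array([4.0, 5.0, 6.0])

# The same two vectors expressed in the standard basis.
alpha_vec = E @ alpha_comp
A_vec = E @ A_comp

true_inner = alpha_vec @ A_vec           # inner product of the actual vectors
naive = alpha_comp @ A_comp              # row times column, ignoring the metric
with_metric = alpha_comp @ M @ A_comp    # row times M times column

print(true_inner, naive, with_metric)
```

In this sketch `with_metric` agrees with `true_inner`, while `naive` does not. If the columns of `E` were orthonormal, `M` would be the identity and all three numbers would coincide.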

Lastly, to avoid confusion, avoid writing things like

$$\vert A \rangle = \begin{bmatrix} A^1 \\ A^2 \\ A^3 \end{bmatrix}$$

The left-hand side is a vector in the space. The right-hand side is a representation of that vector in a particular basis. The two sides are not the same sort of object and should not be connected by an equals sign.

This can seem confusing if you've been introduced to linear algebra through vectors and matrices that are rows, columns or rectangles of numbers. Vector spaces are abstract objects, of which tuples of numbers are only one type.

Hello Andrew,

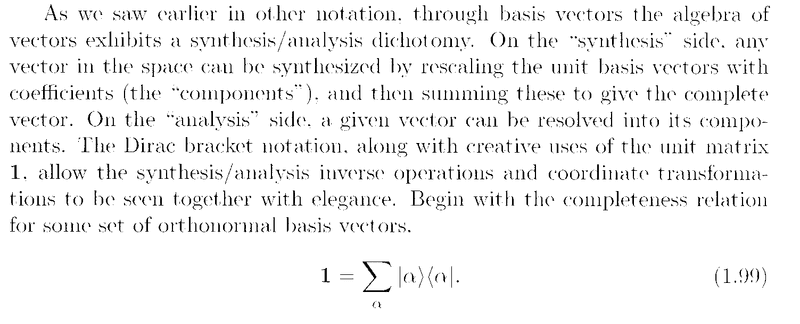

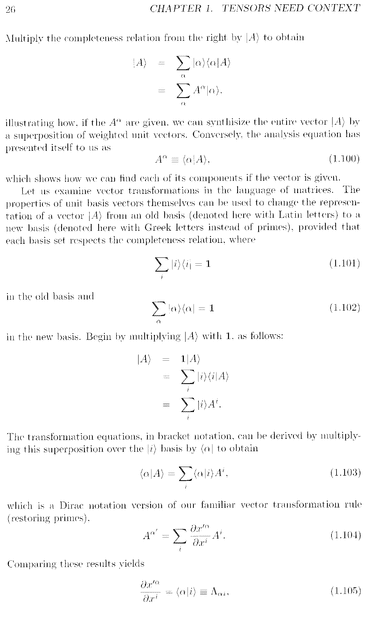

Thanks again for your help with Neuenschwander Section 1.9 ... {Note ... A scan of Neuenschwander Section 1.9 from the start to page 26 is available below ...}

I am hoping you can clarify some issues for me ...

You write:

" ... ... Lastly, to avoid confusion, avoid writing things like

## \vert A \rangle = \begin{bmatrix} A^1 \\ A^2 \\ A^3 \end{bmatrix} ##

The left-hand side is a vector in the space. The right-hand side is a representation of that vector in a particular basis. The two sides are not the same sort of object and should not be connected by an equals sign.

... ... ... ... "

I note that the author tends to interpret the situation in 3 dimensions ... so for convenience I did the same ...

The author also says the basis ## \alpha ## is orthonormal ( see note directly before equation (1.99) [see scanned text below ... page 25 ...] )

Given the context of a 3-dimensional space and an orthonormal basis ... and further given the fact that we have a given basis ## \alpha ## ... am I justified in writing something like

## \vert A \rangle = \begin{bmatrix} A^1 \\ A^2 \\ A^3 \end{bmatrix} ##

... see also the author's equation (1.86) which has an expression similar to mine above ...

[ see scanned notes below ...]

... on another issue I am completely stumped by the equations directly following equation ( 1.99 ) [ see scanned notes below ... top of page 26 ... ] ... ... that is ... ## \vert A \rangle = \sum_{ \alpha } \vert \alpha \rangle \langle \alpha \vert A \rangle ## ... ... ... (*)

In (*) all I can think in terms of spelling out the meaning is to take

## \vert A \rangle = \begin{bmatrix} A^1 \\ A^2 \\ A^3 \end{bmatrix} ##

and

## | \alpha \rangle = \begin{bmatrix} \alpha^1 \\ \alpha^2 \\ \alpha^3 \end{bmatrix} ##

and

## \langle \alpha \vert A \rangle = \begin{bmatrix} \alpha^1 & \alpha^2 & \alpha^3 \end{bmatrix} \begin{bmatrix} A^1 \\ A^2 \\ A^3 \end{bmatrix} = \alpha^1 A^1 + \alpha^2 A^2 + \alpha^3 A^3 ##

so ...

## \vert \alpha \rangle \langle \alpha \vert A \rangle = \begin{bmatrix} \alpha^1 \\ \alpha^2 \\ \alpha^3 \end{bmatrix} [ \alpha^1 A^1 + \alpha^2 A^2 + \alpha^3 A^3 ] = \begin{bmatrix} (\alpha^1)^2 A^1 + \alpha^1 \alpha^2 A^2 + \alpha^1 \alpha^3 A^3 \\ \alpha^2 \alpha^1 A^1 + (\alpha^2)^2 A^2 + \alpha^2 \alpha^3 A^3 \\ \alpha^3 \alpha^1 A^1 + \alpha^3 \alpha^2 A^2 + (\alpha^3)^2 A^3 \end{bmatrix} ##

... however ... why do we need the ## \sum_{ \alpha } ## in ## \sum_{ \alpha } \vert \alpha \rangle \langle \alpha \vert A \rangle ## ... and further ... how should I interpret ## \sum_{ \alpha } A^{ \alpha} \vert \alpha \rangle ## ... Is the above making sense ... ? Is the above a reasonable interpretation of Neuenschwander ... ??
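For what it is worth, here is a small numerical check of (*) under one possible reading, namely that ## \alpha ## is a label running over the three orthonormal basis vectors rather than a single vector with components ## \alpha^1, \alpha^2, \alpha^3 ##. That reading is an assumption on my part, and the basis `Q`, the vector `A` and the use of NumPy are made up for the sketch; they are not taken from the text.

```python
import numpy as np

# Build a hypothetical orthonormal basis of R^3: the columns of Q from a
# QR factorisation of an arbitrary invertible matrix are orthonormal.
Q, _ = np.linalg.qr(np.array([[1.0, 1.0, 0.0],
                              [0.0, 1.0, 1.0],
                              [1.0, 0.0, 1.0]]))

# A made-up vector |A>, written in the standard basis.
A = np.array([2.0, -1.0, 3.0])

# One number <alpha|A> for each basis vector |alpha> = Q[:, k].
components = [Q[:, k] @ A for k in range(3)]

# A single term |alpha><alpha|A> is only the part of |A> along that |alpha>.
single_term = components[0] * Q[:, 0]

# Summing the terms over all three basis vectors rebuilds |A>.
reconstructed = sum(c * Q[:, k] for k, c in enumerate(components))

print(single_term)
print(reconstructed)
```

Under this reading, one term on its own gives only a projection of ## \vert A \rangle ##, and it is the sum over all the basis vectors that returns ## \vert A \rangle ## itself, with the numbers ## \langle \alpha \vert A \rangle ## playing the role of the components ## A^{\alpha} ##.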

Hope you can help ... ?

Peter

It will be helpful if you have access to Neuenschwander Section 1.9, so I have scanned the relevant pages of Section 1.9 ... and they read as follows ...