Hamiltonian

I was following David Tong's notes on GR. Right after deriving the Euler-Lagrange equation, he writes down the Lagrangian of a free particle and applies the EL equation to it. He brings in curved spaces by specifying the infinitesimal distance between any two nearby points, ##x^i## and ##x^i + dx^i##, through the line element ##ds^2 = g_{ij}(x)dx^i dx^j##,

and the Lagrangian for the free particle as:

$$\mathcal{L} = \frac{1}{2}m g_{ij}(x)\dot{x}^i\dot{x}^j$$

I don't understand why he has introduced the metric tensor here. He doesn't really explain where the above equations come from, and I feel a bit lost.
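One sanity check that may help here, as a sketch that assumes only the familiar flat-space kinetic energy ##\frac{1}{2}m\dot{\mathbf{x}}^2##: in Cartesian coordinates the metric is just ##\delta_{ij}##, and rewriting the same kinetic energy in plane polar coordinates ##(r, \theta)## already produces a non-trivial ##g_{ij}##:

$$\mathcal{L} = \frac{1}{2}m\,\delta_{ij}\dot{x}^i\dot{x}^j = \frac{1}{2}m\left(\dot{r}^2 + r^2\dot{\theta}^2\right) \quad\Rightarrow\quad g_{rr} = 1,\quad g_{\theta\theta} = r^2,\quad g_{r\theta} = 0$$

This matches the line element ##ds^2 = dr^2 + r^2 d\theta^2##. Read this way, the Lagrangian is ##\frac{1}{2}m\,(ds/dt)^2##, and ##g_{ij}## simply records how distances are measured in the chosen coordinates. The polar example is my own illustration, not taken from the notes.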

His handouts state clearly that you don't need previous experience with GR to follow them. Am I missing something obvious?

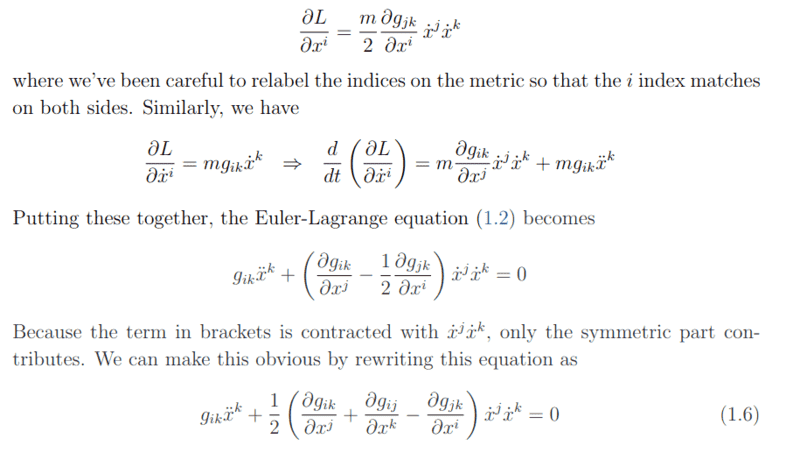

I also don't understand how he takes the derivatives of the Lagrangian and then puts everything together to get the geodesic equation.
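For reference, here is a sketch of the steps in question as I would expect them to go, assuming the standard Euler-Lagrange equation ##\frac{d}{dt}\left(\frac{\partial \mathcal{L}}{\partial \dot{x}^k}\right) - \frac{\partial \mathcal{L}}{\partial x^k} = 0## and a symmetric metric ##g_{ij} = g_{ji}##. The index names and the ordering of the steps are my own choices and may differ from the notes:

$$\begin{aligned}
\frac{\partial \mathcal{L}}{\partial x^k} &= \frac{1}{2}m\,\frac{\partial g_{ij}}{\partial x^k}\dot{x}^i\dot{x}^j \\
\frac{\partial \mathcal{L}}{\partial \dot{x}^k} &= m\,g_{kj}\dot{x}^j \\
\frac{d}{dt}\left(\frac{\partial \mathcal{L}}{\partial \dot{x}^k}\right) &= m\,g_{kj}\ddot{x}^j + m\,\frac{\partial g_{kj}}{\partial x^i}\dot{x}^i\dot{x}^j
\end{aligned}$$

Substituting into the EL equation, dividing by ##m##, and symmetrising the middle term over ##i## and ##j## (allowed because it is contracted with the symmetric product ##\dot{x}^i\dot{x}^j##) gives

$$g_{kj}\ddot{x}^j + \frac{1}{2}\left(\frac{\partial g_{kj}}{\partial x^i} + \frac{\partial g_{ki}}{\partial x^j} - \frac{\partial g_{ij}}{\partial x^k}\right)\dot{x}^i\dot{x}^j = 0$$

Contracting with the inverse metric ##g^{lk}## then yields the geodesic equation:

$$\ddot{x}^l + \Gamma^l_{ij}\dot{x}^i\dot{x}^j = 0, \qquad \Gamma^l_{ij} = \frac{1}{2}g^{lk}\left(\frac{\partial g_{kj}}{\partial x^i} + \frac{\partial g_{ki}}{\partial x^j} - \frac{\partial g_{ij}}{\partial x^k}\right)$$

The factor of ##\frac{1}{2}## in the first line disappears in the second because ##\dot{x}^k## appears twice in ##g_{ij}\dot{x}^i\dot{x}^j##, once through each index.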
