- #1

Math Amateur


I am reading Paul E. Bland's book: Rings and Their Modules and am currently focused on Section 4.2 Noetherian and Artinian Modules ... ...

I need help with fully understanding Example 6 "Right Artinian but not Left Artinian" ... in Section 4.2 ... ...

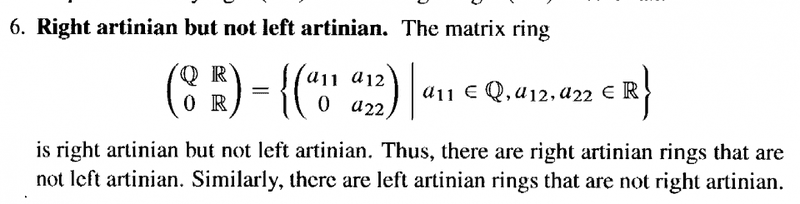

Example 6 reads as follows:

My problem is how to prove, explicitly and formally, that the matrix ring ##\begin{pmatrix} \mathbb{Q} & \mathbb{R} \\ 0 & \mathbb{R} \end{pmatrix}## is right Artinian but not left Artinian.

Also, a related problem is that I am trying to explicitly determine/calculate the form of all the ideals of the above matrix ring ... but without success ... help with this issue would be appreciated as well ...
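As a possible starting point on the ideals, here is a tentative sketch of the multiplication rule in this ring and what it seems to force for two-sided ideals. The labels ##T##, ##e_{11}##, ##e_{22}##, ##I_1##, ##I_2## and ##N## are my own notation for illustration and are not taken from Bland.

```latex
% Assumed notation: T is the matrix ring of Example 6.
T = \begin{pmatrix} \mathbb{Q} & \mathbb{R} \\ 0 & \mathbb{R} \end{pmatrix},
\qquad
\begin{pmatrix} a & b \\ 0 & c \end{pmatrix}
\begin{pmatrix} a' & b' \\ 0 & c' \end{pmatrix}
=
\begin{pmatrix} aa' & ab' + bc' \\ 0 & cc' \end{pmatrix},
\qquad a, a' \in \mathbb{Q},\quad b, b', c, c' \in \mathbb{R}.

% With e_{11}, e_{22} the diagonal matrix units, e_{22} x e_{11} = 0 for all x in T, so
x = e_{11} x e_{11} + e_{11} x e_{22} + e_{22} x e_{22},
% and each summand lies in a two-sided ideal I whenever x does. Hence
I = \begin{pmatrix} I_1 & N \\ 0 & I_2 \end{pmatrix},
\qquad I_1 \in \{0, \mathbb{Q}\},\quad I_2 \in \{0, \mathbb{R}\},\quad N \in \{0, \mathbb{R}\},
\qquad I_1 \mathbb{R} + \mathbb{R} I_2 \subseteq N.

% The condition on N leaves five two-sided ideals:
0,\quad
\begin{pmatrix} 0 & \mathbb{R} \\ 0 & 0 \end{pmatrix},\quad
\begin{pmatrix} \mathbb{Q} & \mathbb{R} \\ 0 & 0 \end{pmatrix},\quad
\begin{pmatrix} 0 & \mathbb{R} \\ 0 & \mathbb{R} \end{pmatrix},\quad
T.
```

One-sided ideals need not have this block shape. For instance, the set of all ##\begin{pmatrix} 0 & t \\ 0 & t \end{pmatrix}## with ##t \in \mathbb{R}## is closed under right multiplication by ##T##, so it is a right ideal, and the Artinian question is about one-sided ideals rather than the five listed above.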

Reading around this problem leads me to believe that showing the above matrix ring to be right Artinian but not left Artinian would involve showing that every descending chain of right ideals terminates ... but that this does not hold for descending chains of left ideals ... ...
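A sketch of how the chain argument might go, assuming the standard fact that ##\pi## is transcendental, so that ##1, \pi, \pi^2, \dots## are linearly independent over ##\mathbb{Q}##. The names ##L(V)##, ##V_n## and ##L_n## are introduced here only for illustration and are not Bland's.

```latex
% For a subset V of \mathbb{R}, write L(V) for the matrices with v in V
% in the top-right corner and zeros elsewhere. Then
\begin{pmatrix} a & b \\ 0 & c \end{pmatrix}
\begin{pmatrix} 0 & v \\ 0 & 0 \end{pmatrix}
= \begin{pmatrix} 0 & av \\ 0 & 0 \end{pmatrix},
\qquad
\begin{pmatrix} 0 & v \\ 0 & 0 \end{pmatrix}
\begin{pmatrix} a & b \\ 0 & c \end{pmatrix}
= \begin{pmatrix} 0 & vc \\ 0 & 0 \end{pmatrix},
\qquad a \in \mathbb{Q},\quad b, c \in \mathbb{R}.
% So L(V) is a left ideal iff V is a \mathbb{Q}-subspace of \mathbb{R},
% and a right ideal iff V is an \mathbb{R}-subspace, i.e. V = 0 or V = \mathbb{R}.

V_n = \operatorname{span}_{\mathbb{Q}} \{ \pi^k : k \ge n \},
\qquad
L_n = L(V_n) = \begin{pmatrix} 0 & V_n \\ 0 & 0 \end{pmatrix},
\qquad
L_1 \supsetneq L_2 \supsetneq L_3 \supsetneq \cdots
% Each inclusion is strict because \pi^n \in V_n but \pi^n \notin V_{n+1}.
```

This chain of left ideals never terminates, so the ring is not left Artinian. On the right side, one possible route is the chain of right ideals ##0 \subset \begin{pmatrix} 0 & \mathbb{R} \\ 0 & 0 \end{pmatrix} \subset \begin{pmatrix} \mathbb{Q} & \mathbb{R} \\ 0 & 0 \end{pmatrix} \subset \begin{pmatrix} \mathbb{Q} & \mathbb{R} \\ 0 & \mathbb{R} \end{pmatrix}##. Its three factors are acted on through ##c \in \mathbb{R}##, ##a \in \mathbb{Q}## and ##c \in \mathbb{R}## respectively and are one-dimensional over those fields, so they appear to be simple right modules. If so, this is a composition series of length 3, and a module with a composition series satisfies the descending chain condition.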

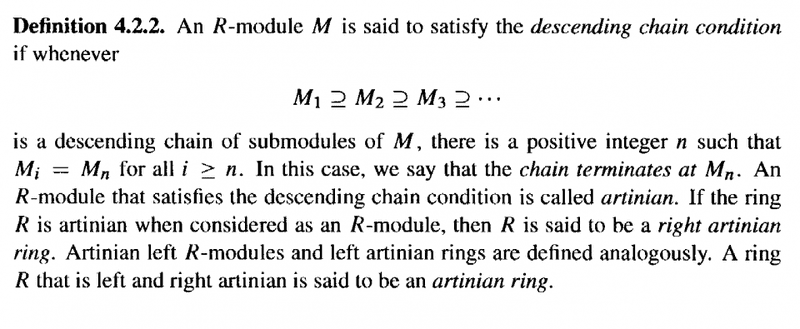

Note that the conditions regarding descending chains of right (left) ideals for right (left) Artinian rings are not given in the definition of right (left) Artinian rings by Bland, but I believe Bland's definition implies them ... is that correct? ... see Bland's definition of Artinian rings below ...

Bland's definition of Artinian rings and modules is as follows:
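On the question of whether the definition implies the chain conditions on one-sided ideals, here is a short sketch. It is not a quotation of Bland: it assumes his definition is the usual one, namely that a module is Artinian when every descending chain of submodules terminates, and that a ring ##R## is right Artinian when ##R_R## (the ring viewed as a right module over itself) is an Artinian module.

```latex
% Submodules of R_R are exactly the right ideals of R:
I \le R_R
\iff
(I, +) \le (R, +) \ \text{ and } \ xr \in I \ \text{ for all } x \in I,\ r \in R
\iff
I \ \text{is a right ideal of } R.

% Hence, under the assumed definition,
R \ \text{right Artinian}
\iff
\text{every chain } I_1 \supseteq I_2 \supseteq I_3 \supseteq \cdots
\text{ of right ideals of } R \text{ terminates.}
```

The same reasoning with ##{}_R R## in place of ##R_R## would give the corresponding statement for left ideals and left Artinian rings.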

Hope someone can help,

Peter
