fresh_42 said: The maximal values in my example are ##w_1=w_x=0.3\, , \,w_2=w_y=0.3\, , \,w_3=w_z=0.8## and the unique solution is ##(0.1\, , \,0.1\, , \,0.8).## Your algorithm doesn't find that.

My question in the previous post was not about the algorithm, as I already agreed that it does not work in the general case. Rather, I am trying to make sense of the diagram in post #26. That diagram does not seem to conform to the second point of post #18. Is that condition also not applicable to the original question? I ask because if a worker accepts a partition based only on the workload assigned to him and does not bother about the workloads of others, then ##(0.1, 0.1, 0.8)## will be a solution but not a unique one. Instead, all points in the pink-colored region of the diagram in my previous post will be acceptable to all 3 workers. However, your diagram shows two unconnected green triangles, suggesting that worker 2 accepts a workload of 0.25 (or any value in ##[0,0.3]## for that matter) only in a more restricted sense. For example, the point ##(0.75, 0.25, 0)## lies in the bottom green triangle in your diagram (the region labeled X_y), but ##(0, 0.25, 0.75)## appears to belong only to X_z (grey region) and is not indicated as also belonging to X_y.
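A minimal sketch of the own-workload-only reading described above, in which each worker checks only his own coordinate against his maximum (0.3, 0.3 and 0.8 from the example). The grid step of 0.05 and the helper name `acceptable_partitions` are assumptions made for illustration and do not come from the thread. Under that reading many partitions pass, so ##(0.1, 0.1, 0.8)## is acceptable but not unique.

```python
from fractions import Fraction

# Per-worker maximal workloads from the example: w_x = 0.3, w_y = 0.3, w_z = 0.8.
CAPS = (Fraction(3, 10), Fraction(3, 10), Fraction(8, 10))
STEP = Fraction(1, 20)  # grid resolution 0.05 (arbitrary choice for illustration)


def acceptable_partitions(caps=CAPS, step=STEP):
    """List grid partitions (x, y, z) with x + y + z = 1 where every
    worker's own share is at most his own cap (hypothetical helper)."""
    n = int(1 / step)
    points = []
    for i in range(n + 1):
        for j in range(n + 1 - i):
            # Exact rational arithmetic avoids floating-point edge cases.
            p = (i * step, j * step, 1 - (i + j) * step)
            if all(part <= cap for part, cap in zip(p, caps)):
                points.append(p)
    return points


points = acceptable_partitions()
target = (Fraction(1, 10), Fraction(1, 10), Fraction(4, 5))
print(len(points), target in points)
```

A unique common point can only arise if each worker's feasible set depends on more than his own coordinate, which is the question raised in the next reply.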

fresh_42 said: Every worker has his own set of feasible points regardless of the others. These sets don't have to be convex or even connected. The crucial point is that are part of these sets of feasible partitions.

Does this imply that in a 3-worker case (for example), it is possible and valid for worker 1 to accept the partition ##(a, b, c)## without necessarily accepting the partition ##(a, c, b)## (where ##a,b,c \in [0,1]## and ##a+b+c=1##) even though his workload is the same in both partitions? In other words, the feasible points of a worker are not determined by a single axis? If the answer is yes, then that would resolve my confusion regarding your diagram and it then becomes clear why ##(0.1, 0.1, 0.8)## is the unique solution for partitions acceptable to all three workers.

fresh_42 said: Extra: (open) Prove that both sequences converge to ##0##

Does "both sequences" here refer to (1) ##(x_n)_{n \in \mathbb{N}}## and ##(x_{n^2})_{n \in \mathbb{N}}##, or to (2) ##(\frac{x_n}{n})_{n \in \mathbb{N}}## and ##(\frac{x_{n^2}}{n})_{n \in \mathbb{N}}##?

fresh_42 said: I am not sure whether there is an easy one since the shapes can be rather wild, especially in higher dimensions

True. Definitely not easy for me at least. Even with just 3 dimensions, I can imagine shapes that seem wild! Jigsaw puzzle piece shapes, for instance (wild in a fun way).

Not anonymous said: Does "both sequences" here refer to (1) ##(x_n)_{n \in \mathbb{N}}## and ##(x_{n^2})_{n \in \mathbb{N}}##, or to (2) ##(\frac{x_n}{n})_{n \in \mathbb{N}}## and ##(\frac{x_{n^2}}{n})_{n \in \mathbb{N}}##?

Show that the sequence ##\dfrac{x_n}{n}## converges to zero.

Not anonymous said: True. Definitely not easy for me at least. Even with just 3 dimensions, I can imagine shapes that seem wild! Jigsaw puzzle piece shapes, for instance (wild in a fun way)

Yes. My method uses Brouwer's fixed point theorem and the trick is to create a situation where it can be applied.

fresh_42 said: Show that the sequence ##\dfrac{x_n}{n}## converges to zero.

Not anonymous said: Since it is given that ##\sum\limits_{i=1}^{n} \dfrac{x_{i^2}}{i} \leq 1## for all ##n \in \mathbb{N}## and that all ##x_{i}##'s are positive values, it must be obvious that ##x_1 < 1## (since otherwise, we will have the sum start to exceed 1 either from ##n=1##, if ##x_1 > 1##, or from ##n=2##, if ##x_1 = 1##). And since it is given that ##x_1 > x_2 > x_3 > ...##, it follows that ##(\dfrac{x_n}{n})_{n \in \mathbb{N}}## also form a strictly decreasing sequence and that ##\dfrac{x_n}{n} < 1## for all ##n##. (1)

Suppose the sequence ##\left(\dfrac{x_n}{n}\right)_{n \in \mathbb{N}}## does not converge to 0. Then there must be some ##0 < \delta < 1## such that ##\dfrac{x_n}{n} > \delta## for all ##n \in \mathbb{N}##. (2)

Let ##K \equiv \left\lceil\dfrac{1}{\delta}\right\rceil##. Now consider the value of ##\dfrac{x_{(K+1)n}}{(K+1)n}##, an element which belongs to the same sequence.

Since ##(K+1)n > n## we must have ##x_{(K+1)n} < x_n##. Therefore, ##\dfrac{x_{(K+1)n}}{(K+1)n} < \dfrac{x_n}{(K+1)n} < \dfrac{1}{K} \dfrac{x_n}{n}## (3)

By definition of ##K##, ##\dfrac{1}{K} \leq \delta##. And from (1), we know that ##\dfrac{x_n}{n} < 1##. Therefore, ##\dfrac{1}{K} \dfrac{x_n}{n} < \delta##. Applying this in (3) gives ##\dfrac{x_{(K+1)n}}{(K+1)n} < \delta##, but this contradicts (2). Therefore the assumption that the sequence does not converge to 0 must be wrong, proving that it must converge to 0.

Two issues. A minor one: you may only assume ##\dfrac{x_n}{n}>\delta ## for all ##n\geq N## for some ##N\in \mathbb{N},## but this is only a technical point. Your argument remains valid if the inequality does not hold for small ##n.## Another question is: Why does the sequence converge at all? (Heuristic is sufficient.)

fresh_42 said: Two issues. A minor one: you may only assume ##\dfrac{x_n}{n}>\delta ## for all ##n\geq N## for some ##N\in \mathbb{N},## but this is only a technical point. Your argument remains valid if the inequality does not hold for small ##n.## Another question is: Why does the sequence converge at all? (Heuristic is sufficient.)

Not anonymous said: Why does the sequence converge at all? I believe it must converge because the sequence is strictly decreasing and contains only positive values.

When a sequence is strictly decreasing, its successive elements must either decrease without bound (and so tend to ##-\infty##) or decrease toward some finite bound. Since the sequence contains only positive values, it cannot touch or drop below zero, so 0 is a lower bound for the sequence and therefore for the limit toward which it tends.
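The monotone-and-bounded heuristic above can be checked numerically on a sample sequence. The choice ##x_n = \frac{1}{2n}## is purely a hypothetical example (it is not from the thread); it is positive, strictly decreasing, and its partial sums ##\sum \frac{x_{i^2}}{i}## stay below 1, so it satisfies the stated hypotheses. The sketch only illustrates the behavior and proves nothing.

```python
def x(n):
    """Hypothetical sample sequence x_n = 1 / (2n): positive, strictly decreasing."""
    return 1.0 / (2 * n)


def partial_sum(n):
    """Partial sum of x_{i^2} / i for i = 1..n, the quantity bounded by 1 in the problem."""
    return sum(x(i * i) / i for i in range(1, n + 1))


# Ratios x_n / n for n = 1 .. 10000.
ratios = [x(n) / n for n in range(1, 10001)]

# The hypothesis holds for this sample: the partial sums stay at or below 1.
hypothesis_ok = all(partial_sum(n) <= 1 for n in (1, 10, 100, 1000))

# The ratios are positive and strictly decreasing, hence bounded below by 0.
decreasing = all(a > b for a, b in zip(ratios, ratios[1:]))
positive = all(r > 0 for r in ratios)

# Since x_n <= x_1, every ratio is also squeezed below x_1 / n.
squeezed = all(r <= x(1) / n for n, r in enumerate(ratios, start=1))

print(hypothesis_ok, decreasing, positive, squeezed, ratios[-1])
```

The last check uses only ##x_n \leq x_1##, which holds for any decreasing sequence, so the bound ##\frac{x_n}{n} \leq \frac{x_1}{n}## is not specific to the sample.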

fresh_42 said: Extra: (open)

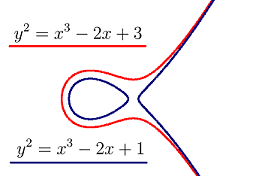

Consider the two elliptic curves and observe that one has two connected components and the other one has only one. Determine the constant ##c \in [1,3]## in ##y^2 = x^3 - 2x + c## at which this behavior changes. What is the left-most point of this curve?
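One possible way to approach the question above, sketched under the assumption that the transition happens exactly where the cubic ##x^3 - 2x + c## acquires a double root, that is, where its discriminant ##-4p^3 - 27c^2## (with ##p = -2##) vanishes. The function names are hypothetical, and this is an illustration rather than the intended solution of the thread.

```python
import math


def critical_c(p=-2.0):
    """Positive c at which x^3 + p*x + c has a double root,
    from the discriminant condition -4*p^3 - 27*c^2 = 0."""
    return math.sqrt(-4 * p**3 / 27)


def leftmost_x(p=-2.0):
    """Simple root of the cubic at the critical c. The double root is
    a = sqrt(-p/3), and the roots sum to zero, so the third root is -2a."""
    return -2 * math.sqrt(-p / 3)


c = critical_c()
x_left = leftmost_x()

# Residual of the cubic at the claimed left-most point (should be about 0).
residual = x_left**3 - 2 * x_left + c

print(c, x_left, residual)
```

For ##c## below this value the cubic has three real roots and the real curve splits into an oval and an unbounded branch; above it there is a single real root and a single branch. At the critical value itself the curve is singular, and its left-most point lies on the ##x##-axis at the simple root.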