ashah99

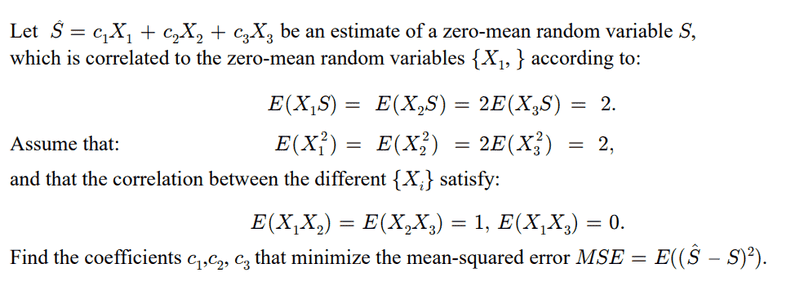

- Homework Statement

- Please see below: finding an MSE estimate for random variables

- Relevant Equations

- Expectation formula, $\mathrm{MSE} = E\big[(\hat{S} - S)^2\big]$

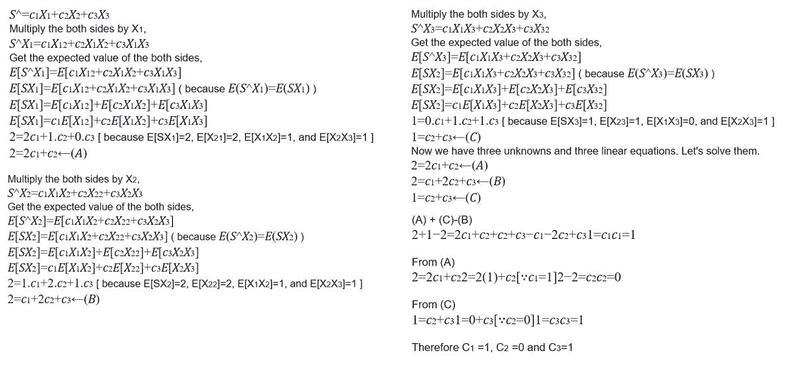

Hello all, I am wondering whether my approach is correct for the following problem on MSE estimation/linear prediction of a zero-mean random variable. My final answer is c1 = 1, c2 = 0, and c3 = 1. If my approach is incorrect, I would certainly appreciate some guidance on the problem. Thank you.
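For anyone who wants to sanity-check a candidate set of coefficients numerically, here is a minimal sketch. It assumes zero-mean variables and a linear estimate S_hat = sum of c_i X_i, so that MSE = E[S^2] - 2 c^T r + c^T R c with R = E[X X^T] and r = E[X S]. The helper names (`linear_mse`, `orthogonality_residual`) and the two-observation toy model are made up for illustration and are not the posted problem; you would need to substitute the second moments from the actual problem statement.

```python
def linear_mse(c, R, r, var_s):
    """MSE of S_hat = sum_i c[i] * X[i] for zero-mean S and X.

    R[i][j] = E[X_i X_j], r[i] = E[X_i S], var_s = E[S^2].
    """
    n = len(c)
    cross = sum(c[i] * r[i] for i in range(n))
    quad = sum(c[i] * R[i][j] * c[j] for i in range(n) for j in range(n))
    return var_s - 2.0 * cross + quad


def orthogonality_residual(c, R, r):
    """E[(S_hat - S) X_i] for each i; all entries are zero at the MMSE coefficients."""
    n = len(c)
    return [sum(R[i][j] * c[j] for j in range(n)) - r[i] for i in range(n)]


# Hypothetical toy model (NOT the posted problem):
# X1 = S + W1, X2 = S + W2, with S, W1, W2 zero-mean, uncorrelated, unit variance.
R = [[2.0, 1.0], [1.0, 2.0]]   # E[X_i X_j]
r = [1.0, 1.0]                 # E[X_i S]
var_s = 1.0                    # E[S^2]

c_opt = [1.0 / 3.0, 1.0 / 3.0]  # solves R c = r for this toy model
c_other = [0.5, 0.5]            # some other candidate, for comparison

mse_opt = linear_mse(c_opt, R, r, var_s)
mse_other = linear_mse(c_other, R, r, var_s)
residual_opt = orthogonality_residual(c_opt, R, r)

print(mse_opt, mse_other, residual_opt)
```

If a candidate is truly the linear MMSE solution, the residuals should all vanish (the orthogonality principle), and any other coefficient vector should give an MSE at least as large.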

Problem

Approach:
