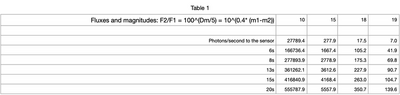

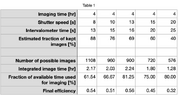

I'm hoping there's a reasonable answer to this. To summarize, data I acquired when imaging a particular target shows that I can retain 75% of my images for stacking at 10s exposure times, but only 50% of the images taken with 15s exposures. The difference is entirely due to tracking error and no, I am not going to get an autotracker.

Possibly the most important metric (for me) is 'efficiency': what fraction of the total time I spend on any particular night, from setup to takedown, consists of 'stackable integration time'. Factoring in everything, 10s exposures result in an efficiency of 50% (e.g. 4 hours outside = 2 hours integration time), while 15s exposures give me a final efficiency of 40%.

It's not clear what's best: more images decrease the noise, but longer exposure times increase the signal per frame so I can image fainter objects (let's assume I never saturate the detector). More images mean I can generate final images in fewer nights, while longer exposures mean I have fewer images to process to get the same total integration time.

For what it's worth, in my example I would obtain 480 10s images or 303 15s images per night. My final stacked image would likely consist of a few thousand individual images, obtained over a few weeks.
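One way to put rough numbers on this trade-off is the usual per-pixel noise model for a mean stack of equal sub-exposures, where shot noise from the target and sky adds in quadrature with read noise in every frame. The sketch below is only illustrative: the function name `stacked_snr` and the target rate, sky rate, and read noise values are assumptions made up for the example, not measurements from my setup. Only the frame counts (480 and 303) and exposure times come from the numbers above.

```python
import math


def stacked_snr(n_frames, exposure_s, signal_rate, sky_rate, read_noise):
    """Per-pixel SNR of a mean stack of n_frames equal sub-exposures.

    signal_rate and sky_rate are in electrons/second/pixel,
    read_noise is in electrons RMS per frame (all assumed values).
    """
    signal = signal_rate * exposure_s   # target electrons collected per frame
    sky = sky_rate * exposure_s         # sky background electrons per frame
    # Per-frame noise: shot noise of target and sky plus read noise, in quadrature.
    noise = math.sqrt(signal + sky + read_noise ** 2)
    # Averaging n_frames frames improves SNR by sqrt(n_frames).
    return math.sqrt(n_frames) * signal / noise


# Hypothetical sensor and sky values, chosen only to illustrate the comparison.
SIGNAL_RATE = 0.5   # e-/s/pixel from a faint target (assumed)
SKY_RATE = 2.0      # e-/s/pixel from sky background (assumed)
READ_NOISE = 3.0    # e- RMS per frame (assumed)

snr_10s = stacked_snr(480, 10, SIGNAL_RATE, SKY_RATE, READ_NOISE)
snr_15s = stacked_snr(303, 15, SIGNAL_RATE, SKY_RATE, READ_NOISE)
print(f"480 x 10s: SNR = {snr_10s:.2f}")
print(f"303 x 15s: SNR = {snr_15s:.2f}")
```

In this model, if read noise were zero the stacked SNR would depend only on total integration time, so the option with more stackable seconds per night would win. Read noise is what favours longer sub-exposures, and how much it matters depends on how bright the sky background is relative to the read noise of the camera.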

I haven't seen a quantitative argument supporting one or the other. Thoughts?
