mohamed_a


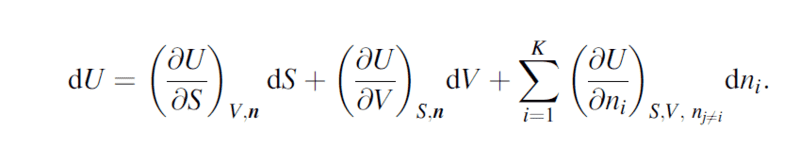

I was reading about thermodynamics postulates when i came over the differnetial fundamental equation:
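The image presumably shows the standard form of the fundamental relation. This reconstruction is an assumption based on how its terms are described below. Here T is the temperature, S the entropy, P the pressure, V the volume, and μ_i and N_i are the chemical potential and particle number of species i:

$$dU = T\,dS - P\,dV + \sum_i \mu_i\,dN_i$$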

I understand that the second term is just pressure and the last term is chemical energy. The problem is that I don't understand what entropy is used for or how it contributes to a system's energy.

For example, suppose I have a container of gas and decide to increase its entropy. I can just pump out some of the gas, and the entropy increases because fewer molecules have more space to move around in. But isn't this just equivalent to the change in the number of molecules that left (the chemical-energy term) plus the change in pressure?
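To make the pumping-out example concrete, here is a minimal sketch using the Sackur–Tetrode equation. It assumes a monatomic ideal gas in a rigid container held at constant temperature. The choice of argon, the particle numbers, and the helper name `sackur_tetrode` are all hypothetical. The sketch compares the entropy per atom with the total entropy of the gas before and after half of it is removed.

```python
import math

# SI constants: k_B and h are exact in the 2019 SI; AMU is the CODATA 2018 value
K_B = 1.380649e-23       # Boltzmann constant, J/K
H = 6.62607015e-34       # Planck constant, J*s
AMU = 1.66053906660e-27  # atomic mass unit, kg

def sackur_tetrode(n: float, volume: float, temperature: float, mass: float) -> float:
    """Entropy (J/K) of n atoms of a monatomic ideal gas (Sackur-Tetrode equation)."""
    # Thermal de Broglie wavelength: lambda = h / sqrt(2 pi m k_B T)
    lam = H / math.sqrt(2.0 * math.pi * mass * K_B * temperature)
    return n * K_B * (math.log(volume / (n * lam**3)) + 2.5)

# Hypothetical setup: argon in a rigid 1-litre container held at 300 K.
# Half of the atoms are pumped out while V and T stay fixed.
m_ar = 39.948 * AMU    # mass of one argon atom, kg
V, T = 1.0e-3, 300.0   # m^3, K
n_before = 2.4e22
n_after = n_before / 2

s_before = sackur_tetrode(n_before, V, T, m_ar)
s_after = sackur_tetrode(n_after, V, T, m_ar)

print(f"total entropy:    {s_before:.3f} J/K -> {s_after:.3f} J/K")
print(f"entropy per atom: {s_before / n_before:.3e} J/K -> {s_after / n_after:.3e} J/K")
```

Under these assumptions, the entropy per atom rises by exactly k_B ln 2, while the total entropy of the gas left in the container falls.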

Another example: if I exclude the last two terms and ask how I could increase the energy of a system without changing the pressure or the chemical energy, I am left with temperature. But changing the temperature also increases the pressure and the energy of the chemical constituents. So why would I need a third term (entropy) if I could describe the system with only two?
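The heating case can be sketched numerically. This assumes a monatomic ideal gas in a rigid, closed container, and the particle number and the helper name `isochoric_heating` are hypothetical. At fixed volume V and particle number N, dV = 0 and dN = 0, so the −P dV and μ dN terms vanish even though the pressure P = N k_B T / V rises. The sketch checks that the whole energy change ΔU then equals the accumulated ∫T dS.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant, J/K (exact in the 2019 SI)

def isochoric_heating(n: float, t1: float, t2: float, steps: int = 100_000):
    """Heat a monatomic ideal gas at fixed V and N from t1 to t2 (K).

    Returns (delta_U, integral of T dS), both in joules.
    """
    c_v = 1.5 * n * K_B        # constant-volume heat capacity, J/K
    delta_u = c_v * (t2 - t1)  # U = (3/2) N k_B T for a monatomic ideal gas
    # With dV = 0 and dN = 0, only the T dS term can change U.
    # Sum T * dS over small temperature steps, using the exact dS = C_V ln(T_hi/T_lo)
    # per step and the midpoint temperature of that step.
    integral = 0.0
    dt = (t2 - t1) / steps
    for i in range(steps):
        t_lo = t1 + i * dt
        t_hi = t_lo + dt
        d_s = c_v * math.log(t_hi / t_lo)
        integral += 0.5 * (t_lo + t_hi) * d_s
    return delta_u, integral

du, tds = isochoric_heating(2.4e22, 300.0, 400.0)
print(f"delta U = {du:.4f} J, integral of T dS = {tds:.4f} J")  # both about 49.7 J
```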

I think entropy is an inverse measure of the intermolecular energy (hydrogen bonds, etc.). Pressure is a measure of the extramolecular energy (generated by breaking the intermolecular constraints), and chemical energy is the enthalpy of the compounds (intramolecular energy). Is my analysis right? In other words, is the kinetic energy of the compounds and elements not counted in the third term but included in the entropy term instead?

And if this is correct, why isn't nuclear energy (##E=mc^2##) added to the equation as a fourth term (intramolecular, intranuclear energy)?
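For a sense of scale, here is a small sketch that converts a typical chemical energy change into its mass equivalent via ##E=mc^2##. The reaction, its approximate energy release, the approximate reactant mass, and the helper name `mass_equivalent` are illustrative assumptions.

```python
C = 299_792_458.0  # speed of light in vacuum, m/s (exact in SI)

def mass_equivalent(delta_e: float) -> float:
    """Mass change (kg) corresponding to an energy change delta_e (J), via E = m c^2."""
    return delta_e / C**2

# Illustrative scale only: burning one mole of methane releases roughly 890 kJ.
delta_e = 890e3                     # J (approximate)
delta_m = mass_equivalent(delta_e)  # kg
reactant_mass = 0.080               # kg, about 1 mol CH4 + 2 mol O2
print(f"mass change: {delta_m:.2e} kg ({delta_m / reactant_mass:.1e} of the reactant mass)")
```

Under these assumptions, the mass change is about one part in 10^10 of the reactant mass.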


Last edited: