Albertgauss


TL;DR Summary: I need help applying "Propagation of Errors" to the error analysis of an Ohm's Law experiment.

Hello,

I want to make sure I am applying instrumentation error analysis correctly in an experiment that verifies Ohm’s Law for a simple circuit. I have a few questions below. I determined the error on the voltage two different ways and did not get the same result from both methods, so I need help understanding what, if anything, I did wrong.

First question: is “Propagation of Errors” the best choice of error analysis when the measurement errors come from the measuring devices themselves? In this example, I use multimeters to measure the current through and the voltage across a resistor.

Ohm’s Law for the simple circuit below is V = IR. I predict what the voltage across the resistor should be from the measured current and the resistor’s value, and then measure that voltage directly for comparison.

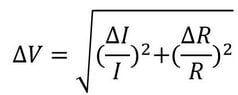

Since V = IR is a product of the current and the resistance, I believe the error on the voltage across the resistor, propagated from the errors on the current and resistance, should be:

Is this correct?
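For reference, the standard propagation-of-errors rule for a product such as V = IR, assuming the errors on I and R are independent and random, adds the fractional uncertainties in quadrature:

$$\frac{\delta V}{V} = \sqrt{\left(\frac{\delta I}{I}\right)^2 + \left(\frac{\delta R}{R}\right)^2}
\quad\Longrightarrow\quad
\delta V = IR\,\sqrt{\left(\frac{\delta I}{I}\right)^2 + \left(\frac{\delta R}{R}\right)^2}$$

When the errors cannot be assumed independent, the simple sum $\delta V/V = \delta I/I + \delta R/R$ is often used instead as a more conservative, worst-case bound.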

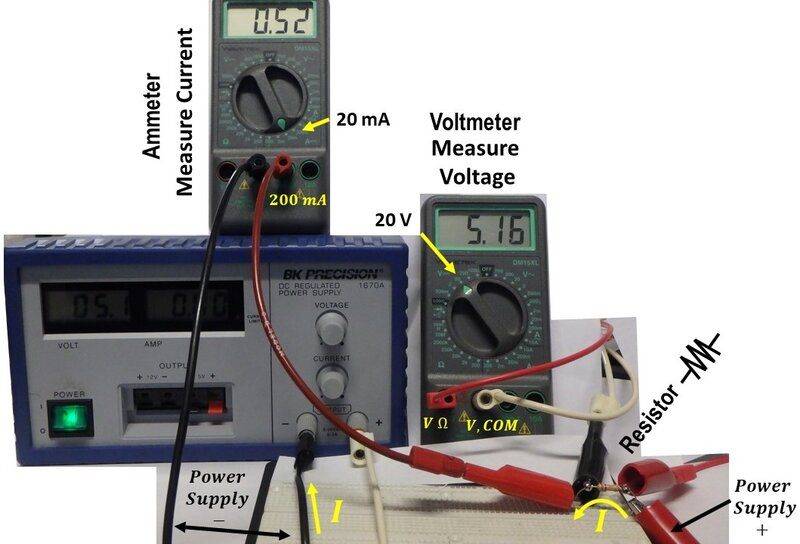

In the picture below, you can see some of my measurements and my setup.

The resistor in the bottom right of the image is a 10 kΩ resistor with a 5% tolerance, so the uncertainty on the resistance is 500 Ω.


I measured a current of 0.52 mA through the resistor. According to the manual for this multimeter (Wavetek DM15XL), the accuracy on the 20 mA range is:

- 15XL: ±(1.2% Rdg + 1 dgt)
- 10/15/16XL: ±(1% Rdg + 1 dgt)

Am I interpreting this correctly if I say the error on the 20 mA setting is 1% of 0.52 mA, or 0.0052 mA? I’m not sure what the “+ 1 dgt” means. My guess is that it has something to do with “1 digit”, but which digit?
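One common reading of a ±(x% rdg + n dgt) spec, sketched below in Python, is: take x% of the displayed value and add n counts of the least significant digit on the range in use. The function name `spec_uncertainty` is hypothetical, and the display resolution is an assumption (a 3½-digit, 2000-count display, which is consistent with the 0.52 mA reading showing two decimal places); the manual should be checked for the actual count. The sketch uses the 1% figure from the question; the spec line quoted above lists 1.2% for the 15XL, so `pct_of_reading` may need to be 1.2.

```python
def spec_uncertainty(reading, pct_of_reading, digits, resolution):
    """Uncertainty from a multimeter spec of the form +/-(x% rdg + n dgt).

    reading        -- displayed value
    pct_of_reading -- the percentage term, e.g. 1.0 for 1%
    digits         -- number of counts of the least significant digit ("dgt")
    resolution     -- value of one count on the selected range
    """
    return reading * pct_of_reading / 100.0 + digits * resolution


# Current: 0.52 mA on the 20 mA range, +/-(1% rdg + 1 dgt).
# ASSUMPTION: 3 1/2-digit display, so the 20 mA range reads up to 19.99 mA
# and one count is 0.01 mA.
dI = spec_uncertainty(0.52, 1.0, 1, 0.01)   # mA
print(f"dI = {dI:.4f} mA")  # prints dI = 0.0152 mA (0.0052 + 0.0100)

# Voltage: ASSUMPTION of a 5.16 V reading (0.0258 V is 0.5% of 5.16 V)
# on a 20 V range with 0.01 V per count.
dV = spec_uncertainty(5.16, 0.5, 1, 0.01)   # V
print(f"dV = {dV:.4f} V")   # prints dV = 0.0358 V (0.0258 + 0.0100)
```

Under these assumptions the digit term (0.01 mA) is larger than the 1% term (0.0052 mA) for such a small reading, so it is not negligible.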

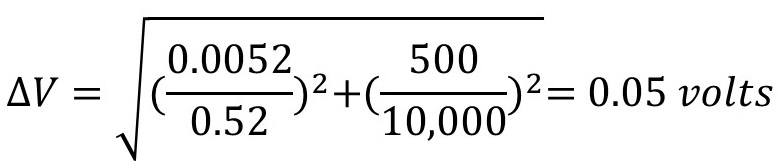

Putting these values in, the error on my voltage, as propagated from the resistance and current, would be:

Now, this is about twice as uncertain as the voltage uncertainty quoted in the Wavetek manual, ±(0.5% rdg + 1 dgt), which would be 0.0258 Volts.

Which error should I use as the actual error on the voltage: the error derived from the R and I measurements (0.05 Volts), or the error the manual gives for measuring the voltage directly (0.0258 Volts)?
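The prediction-versus-measurement comparison described above can be sketched in Python, assuming the quadrature rule for a product and, as in the question, using only the percentage terms of the specs. The helper `product_uncertainty` is hypothetical and written only for illustration; the 5.16 V direct reading is an assumption inferred from 0.0258 V being 0.5% of 5.16 V. The final check treats the two values as consistent when their difference is smaller than their combined uncertainty, which is one common criterion rather than the only one.

```python
import math


def product_uncertainty(I, dI, R, dR):
    """Propagate independent uncertainties through V = I * R.

    For a product, fractional uncertainties add in quadrature.
    Returns (V, dV, dV/V).
    """
    V = I * R
    frac = math.sqrt((dI / I) ** 2 + (dR / R) ** 2)
    return V, V * frac, frac


# Values from the post (percentage terms only; "+1 dgt" is ignored here).
I = 0.52e-3      # current in A (0.52 mA)
dI = 0.01 * I    # 1% of reading
R = 10e3         # nominal resistance in Ohm
dR = 0.05 * R    # 5% tolerance -> 500 Ohm

V_pred, dV_pred, frac = product_uncertainty(I, dI, R, dR)

# ASSUMPTION: direct reading of 5.16 V, inferred from 0.0258 V = 0.5% of 5.16 V.
V_meas = 5.16
dV_meas = 0.005 * V_meas

# The uncertainties of the two independent values also combine in quadrature.
diff = abs(V_pred - V_meas)
dDiff = math.sqrt(dV_pred ** 2 + dV_meas ** 2)

print(f"predicted: {V_pred:.2f} +/- {dV_pred:.3f} V (dV/V = {frac:.3f})")
print(f"measured:  {V_meas:.2f} +/- {dV_meas:.4f} V")
print(f"difference {diff:.2f} V vs combined uncertainty {dDiff:.3f} V ->",
      "consistent" if diff < dDiff else "inconsistent")
```

With these inputs the fractional uncertainty dV/V comes out near 0.051, which is dimensionless; multiplied by the predicted 5.2 V it corresponds to an absolute uncertainty of roughly 0.27 V.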
