Hall


- Homework Statement

- ##T## is the transformation that rotates every vector in 2D by a fixed angle ##\phi##. Prove that ##T## is a linear transformation.

- Relevant Equations

- ##T(\mathbf{v_1} + \mathbf{v_2}) = T( \mathbf{v_1}) + T (\mathbf{v_2})##

##T(c\mathbf{v_1}) = c T (\mathbf{v_1})##

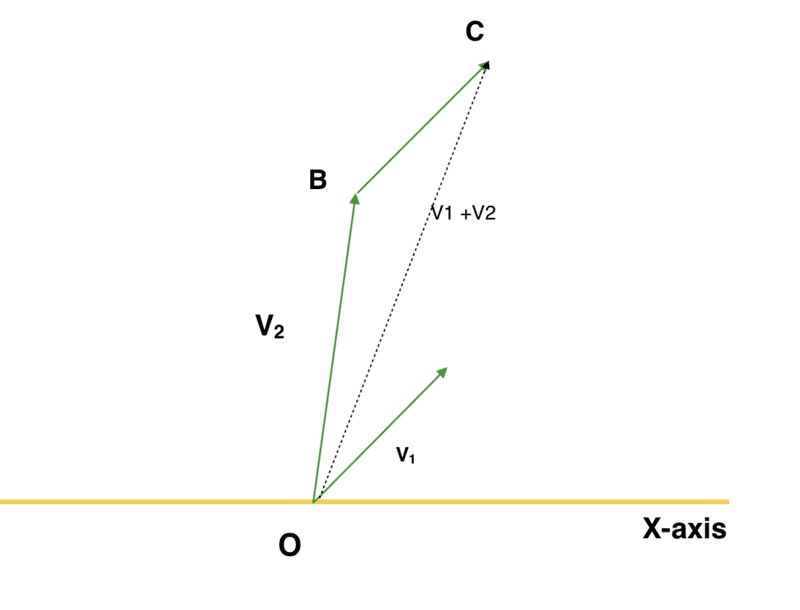

We have two vectors ##\mathbf{v_1}## and ##\mathbf{v_2}##; their sum, drawn geometrically, is the triangle in the figure above.

Now, let us rotate the whole triangle by the angle ##\phi##. (Is this kind of thing allowed in mathematics?)

Side OC was rotated by ##\phi##, so ##\mathbf{OC'} = T(\mathbf{v_1} + \mathbf{v_2})##; similarly, ##\mathbf{OB'} = T(\mathbf{v_2})##. The little arrow on its own at the right corner (labeled ##\mathbf{v_1}## in the previous diagram) was also rotated by ##\phi##, so it now represents ##T(\mathbf{v_1})##; that is, ##\mathbf{B'C'} = T(\mathbf{v_1})##. So, by the triangle law of vector addition:

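$$\mathbf{OB'} + \mathbf{B'C'} = \mathbf{OC'}$$

$$T(\mathbf{v_2}) + T(\mathbf{v_1}) = T(\mathbf{v_1} + \mathbf{v_2})$$

Since vector addition is commutative, this is ##T(\mathbf{v_1}) + T(\mathbf{v_2}) = T(\mathbf{v_1} + \mathbf{v_2})##.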
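As a quick numerical sanity check of the additivity argument (a check on sample vectors, not a proof), the sketch below rotates the vertices of the triangle from the figure and compares the rotated side ##\mathbf{B'C'}## with ##T(\mathbf{v_1})##. The names `rotate`, `add` and `close`, the angle `phi = 0.7` and the sample vectors are illustrative assumptions; `rotate` uses the same polar form as the post, ##(r\cos(\theta + \phi), r\sin(\theta + \phi))##.

```python
import math

def rotate(v, phi):
    """Rotate the 2D vector v = (x, y) by phi radians using polar form:
    (r cos(theta + phi), r sin(theta + phi))."""
    x, y = v
    r = math.hypot(x, y)        # length of v
    theta = math.atan2(y, x)    # angle of v
    return (r * math.cos(theta + phi), r * math.sin(theta + phi))

def add(u, w):
    """Componentwise vector sum."""
    return (u[0] + w[0], u[1] + w[1])

def close(u, w, tol=1e-9):
    """True if two vectors agree up to floating-point tolerance."""
    return all(abs(a - b) <= tol for a, b in zip(u, w))

phi = 0.7          # assumed sample rotation angle (radians)
v1 = (3.0, 1.0)    # assumed sample vectors
v2 = (-1.0, 2.0)

# Triangle from the figure: OB = v2, BC = v1, OC = v1 + v2
B = v2
C = add(v1, v2)

# Rotate the vertices B and C about the origin O
B_rot = rotate(B, phi)   # OB' = T(v2)
C_rot = rotate(C, phi)   # OC' = T(v1 + v2)

# Side B'C' of the rotated triangle, as a vector
BC_rot = (C_rot[0] - B_rot[0], C_rot[1] - B_rot[1])

print(close(BC_rot, rotate(v1, phi)))                       # B'C' = T(v1)
print(close(add(rotate(v1, phi), rotate(v2, phi)), C_rot))   # T(v1) + T(v2) = T(v1 + v2)
```

Changing `phi`, `v1` or `v2` is an easy way to probe other cases.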

AND, for scalar multiplication, writing the vector in polar form:

##T( c(r \cos \theta, r \sin \theta) ) = (rc \cos (\theta + \phi) , rc \sin (\theta + \phi) )##

##cT(r\cos\theta, r\sin\theta) = c\bigl(r\cos(\theta + \phi), r\sin(\theta + \phi)\bigr) = \bigl(rc\cos(\theta + \phi), rc\sin(\theta + \phi)\bigr) = T\bigl(c(r\cos\theta, r\sin\theta)\bigr)##
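A similar numerical sanity check for the scalar-multiplication property compares ##T(c\mathbf{v})## with ##cT(\mathbf{v})## for a few values of ##c##. The sample values, including ##c = 0## and a negative ##c##, are assumptions chosen for illustration. For negative ##c##, the vector ##c\mathbf{v}## has polar radius ##|c|r## and angle ##\theta + \pi##; the sketch handles this because `math.atan2` recovers the actual angle of ##c\mathbf{v}##.

```python
import math

def rotate(v, phi):
    """Rotate the 2D vector v = (x, y) by phi radians using polar form."""
    x, y = v
    r = math.hypot(x, y)
    theta = math.atan2(y, x)
    return (r * math.cos(theta + phi), r * math.sin(theta + phi))

def scale(c, v):
    """Multiply the vector v by the scalar c."""
    return (c * v[0], c * v[1])

def close(u, w, tol=1e-9):
    """True if two vectors agree up to floating-point tolerance."""
    return all(abs(a - b) <= tol for a, b in zip(u, w))

phi = 0.7                                        # assumed sample angle
v = (2.0 * math.cos(0.4), 2.0 * math.sin(0.4))   # assumed sample: r = 2, theta = 0.4

results = {}
for c in (2.5, 1.0, 0.0, -1.5):
    lhs = rotate(scale(c, v), phi)   # T(c v)
    rhs = scale(c, rotate(v, phi))   # c T(v)
    results[c] = close(lhs, rhs)

print(results)
```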

We're done, finally.

The big question: Am I right?

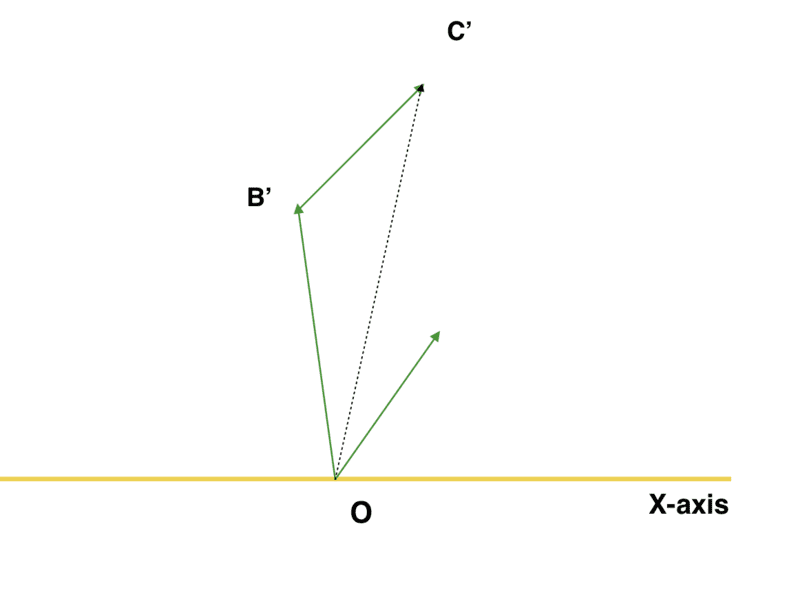
