jv07cs said:

So the summation representation in the direct sum is just a way to represent the tuples, but the addition of elements still works in the component-wise manner of the tuple representation, where we only add elements of the same vector space, since we can't add tensors of different type, right?

Not sure what you mean. We can add tensors of different types formally: ##(a)+ (u\otimes v)=a+u\otimes v.## Whether you write them as tuples or as sums doesn't make a difference. But since we are dealing with vector spaces, sums are the preferred choice. Here are two examples written both ways:

\begin{align*}
(a,u,v\otimes w)+(b,u',v'\otimes w')&=(a+b,u+u',v\otimes w+v'\otimes w')\\
a+u+v\otimes w + b+u'+v'\otimes w'&=(a+b)+(u+u')+(v\otimes w+v'\otimes w')\\
(0,0,0,u\otimes v\otimes w) + (b,0,0,u\otimes v\otimes z)&=(b,0,0,u\otimes v\otimes (w+z))\\
u\otimes v\otimes w+b+u\otimes v\otimes z &=b+u\otimes v\otimes (w+z)
\end{align*}

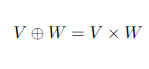

See, there is another reason to use sums. You do not expect sums to be infinitely long, but the tuples that I wrote as finite tuples should be filled up with infinitely many zeros. If you write them as infinite sums, then you must add that only finitely many terms are nonzero. If you have a specific tensor, then you can just use finite sums. If you use finite tuples, then you always have to count the position, and that is a source of mistakes.
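To make the "finite formal sum" point concrete, here is a minimal Python sketch. It assumes a fixed basis ##e_0, e_1## of ##V## and stores a tensor as a dictionary from basis words (tuples of indices) to coefficients; the names `add`, `x`, `y` are only illustrative. The degree of a term is the length of its word, so nothing has to be padded with zeros and no position has to be counted.

```python
from collections import defaultdict

def add(s, t):
    """Formal sum: add coefficients of equal basis words and drop zero terms."""
    out = defaultdict(int)
    for tensor in (s, t):
        for word, coeff in tensor.items():
            out[word] += coeff
    return {word: coeff for word, coeff in out.items() if coeff != 0}

# Words: () is the scalar 1, (0,) is e0, (0, 1) is e0 ⊗ e1.
x = {(): 2, (0,): 1, (0, 1): 3}    # 2 + e0 + 3 e0⊗e1
y = {(): 5, (1,): 4, (0, 1): -3}   # 5 + 4 e1 - 3 e0⊗e1

total = add(x, y)                  # components are only combined degree by degree
print(total)
```

Terms of different degree simply sit next to each other in the sum, while terms with the same basis word are combined, which is the component-wise addition of the tuple picture.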

jv07cs said:

If so, I would then have just three more questions:

- In a way, would it be correct to state that the binary operation ##\cdot: T(V)\times T(V) \rightarrow T(V)## is not exactly the tensor product (as previously defined by a bilinear map that takes elements of a space of (p,q)-tensors and elements of a space of (m,r)-tensors and returns elements of a space of (p+m,q+r)-tensors), but is more specifically a binary operation that kind of distributes the already defined tensor product?

You shouldn't speak of ##(p,q)## tensors this way because it has a different meaning, especially for physicists and I am not sure which meaning you use here.

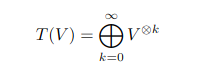

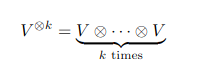

If we have a tensor of degree ##p## and a tensor of degree ##q##, then their multiplication is the tensor product. It has degree ##p+q.## This is the multiplication in ##T(V).## It has to obey the distributive law: the sums of tensors are formal sums, and the product of tensors is the tensor product of those tensors.
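As a rough illustration of this multiplication, the following Python sketch again assumes a basis ##e_0, e_1## of ##V## and represents tensors as dictionaries from basis words to coefficients (an illustrative encoding, not a standard library). The product of two basis words is their concatenation, and it is extended to sums by the distributive law.

```python
from collections import defaultdict

def add(s, t):
    """Formal sum of two tensors given as {basis word: coefficient}."""
    out = defaultdict(int)
    for tensor in (s, t):
        for word, coeff in tensor.items():
            out[word] += coeff
    return {word: coeff for word, coeff in out.items() if coeff != 0}

def multiply(s, t):
    """Tensor product on basis words (concatenation), extended bilinearly."""
    out = defaultdict(int)
    for w1, c1 in s.items():
        for w2, c2 in t.items():
            out[w1 + w2] += c1 * c2
    return {word: coeff for word, coeff in out.items() if coeff != 0}

def degrees(t):
    """Set of degrees (word lengths) that occur in t."""
    return {len(word) for word in t}

u = {(0,): 1, (1,): 2}     # e0 + 2 e1, degree 1
v = {(0, 1): 3}            # 3 e0⊗e1, degree 2
w = {(): 1, (1,): -2}      # 1 - 2 e1, mixed degrees 0 and 1

product = multiply(u, v)   # every term has degree 1 + 2 = 3
print(product, degrees(product))
```

With these definitions, `multiply(add(u, w), v)` and `add(multiply(u, v), multiply(w, v))` give the same dictionary, which is the distributive law in this small example.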

If you mean a ##(p,q)## tensor as physicists use it, namely as an element of the vector space

$$
\underbrace{V^*\otimes \ldots\otimes V^*}_{p-\text{times}} \otimes \underbrace{V\otimes \ldots\otimes V}_{q-\text{times}}
$$

then we are actually speaking about the tensor algebra ##T(V^*)\otimes T(V)\cong T(V^*\otimes V).## The multiplication of tensors of degree ##(p,q)## with tensors of degree ##(r,m)## is then again a tensor product, one of degree ##(p+r,q+m)##, with a rearrangement such that all vectors from ##V^*## are grouped on the left.
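The rearrangement can be sketched in Python as follows. This assumes a basis ##e_0, e_1## of ##V## and a dual basis ##f_0, f_1## of ##V^*##, and stores a basis element as a pair (covector word, vector word); the encoding and the names `multiply_mixed` and `bidegrees` are hypothetical choices for illustration only.

```python
def multiply_mixed(s, t):
    """Product in the mixed algebra: concatenate the covector words and the
    vector words separately, so all V* factors end up on the left."""
    out = {}
    for (cov1, vec1), c1 in s.items():
        for (cov2, vec2), c2 in t.items():
            key = (cov1 + cov2, vec1 + vec2)
            out[key] = out.get(key, 0) + c1 * c2
    return {key: coeff for key, coeff in out.items() if coeff != 0}

def bidegrees(t):
    """Set of bidegrees (number of V* factors, number of V factors) in t."""
    return {(len(cov), len(vec)) for cov, vec in t}

a = {((0,), (1, 1)): 2}    # 2 f0 ⊗ e1 ⊗ e1, degree (1, 2)
b = {((1, 0), (0,)): 5}    # 5 f1 ⊗ f0 ⊗ e0, degree (2, 1)

ab = multiply_mixed(a, b)  # 10 f0⊗f1⊗f0 ⊗ e1⊗e1⊗e0, degree (3, 3)
print(ab, bidegrees(ab))
```

A plain concatenation would give the order ##f_0, e_1, e_1, f_1, f_0, e_0##; keeping the two words separate is what moves the ##V^*## factors to the left.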

jv07cs said:

- Are the elements of ##T(V)## tensors?

Yes.

jv07cs said:

- And what is the need in defining the vector space ##T(V)##? Is it just a way to kind of reunite in a single set all tensors?

For one, yes. It contains all multiples and (formal) sums of pure tensors ##v_1\otimes \ldots\otimes v_n.##

The sense is what I described above. Physicists encounter tensors from the tensor algebra ##T(V^*)\otimes T(V)##, e.g. curvature tensors, and also Graßmann and Lie algebras that are obtained by the procedure I described in post #4 via the ideals. The universality of the tensor algebra grants many applications with different sets of additional rules expressed in the vectors that span the ideal ##\mathcal{I}(V)## I spoke of in post #4.

The word tensor is normally used very sloppily. People often mean pure tensors like ##a\otimes b## and forget that ##a\otimes b +c\otimes d## is also a tensor of the same degree. Then they say tensor when they mean a general tensor from ##T(V)## with multiple components of different degrees and sums thereof, or when they mean a tensor from ##T(V^*)\otimes T(V).## They always say tensor.

jv07cs said:

- The algebra ##T(V)## only deals with contravariant tensors and we could define a ##T(V^*)## algebra that deals with covariant tensors. Is there an algebra that deals with both?

Yes, see above, ##T(V^*)\otimes T(V)\cong T(V^*\otimes V).## Maybe I confused the order when I wrote about a ##(p,q)## tensor. I am not sure what you call covariant and what contravariant, or which comes on the left and which on the right side. That's a physical thing. There is no mathematical necessity to name them. And if that wasn't already confusing, the terms co- and contravariant are used differently in mathematics. It is a historical issue.