Joe1998

Misplaced Homework Thread

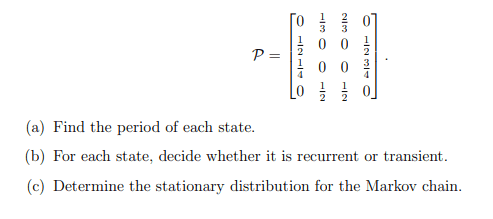

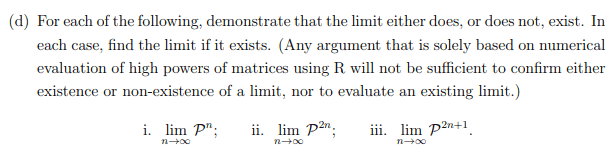

Consider a Markov chain with state space {1, 2, 3, 4} and transition matrix P given below:

I have already worked out the solutions for parts (a), (b), and (c), but I don't know how to go about solving part (d). The question says we can't use higher powers of the matrix to justify our answer, so what else can we use to prove that the limit of that transition matrix exists?

Any help would be appreciated, thanks.
