fog37

Hello,

I understand that a dataset containing time ##T## as a variable, along with other variables ##Variable1##, ##Variable2##, ##Variable3##, represents a multivariate time series, i.e. we can plot each of ##Variable1##, ##Variable2##, ##Variable3## against time ##T##.

When dealing with regular cross-sectional datasets (no time involved), we can use statistical models like linear regression, logistic regression, random forest, decision trees, etc. to perform classification and regression...

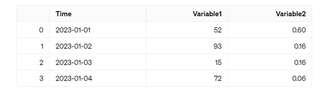

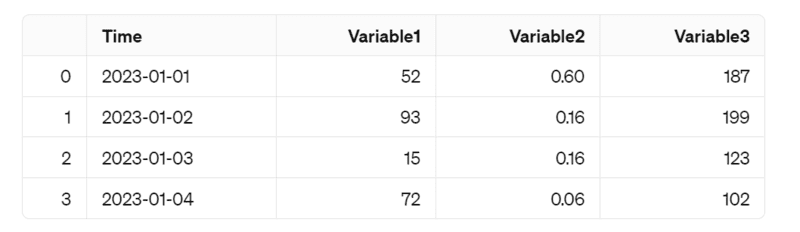

Can we use these same models if the dataset has the time variable ##T##? I don't think so. For example, let's consider the fictional dataset:

Or do we need to use specialized time series models like ARMA, SARIMA, Vector Autoregression (VAR), Long Short-Term Memory Networks (LSTMs), Convolutional Neural Networks (CNNs), etc.?
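To make one of the listed options concrete, here is a minimal sketch of a first-order Vector Autoregression fitted by ordinary least squares. It assumes synthetic, zero-mean data with three variables; the matrix `A_true`, the sample size, and the noise are hypothetical choices for illustration, not values from the dataset above, and a dedicated time series library would normally handle lag selection and diagnostics.

```python
import numpy as np

rng = np.random.default_rng(1)

# Hypothetical coefficient matrix for a stable VAR(1); chosen only for this demo.
A_true = np.array([[0.5, 0.1, 0.0],
                   [0.0, 0.3, 0.2],
                   [0.1, 0.0, 0.4]])

# Simulate y_t = A_true @ y_{t-1} + noise for three variables.
n = 2000
Y = np.zeros((n, 3))
for t in range(1, n):
    Y[t] = A_true @ Y[t - 1] + rng.normal(size=3)

# Regress each row on the previous row: present ≈ past @ B, so A_hat = B.T.
past, present = Y[:-1], Y[1:]
B, *_ = np.linalg.lstsq(past, present, rcond=None)
A_hat = B.T

print(np.round(A_hat, 2))
```

The point of the sketch is that each variable is predicted from the previous values of all variables, which is the feature that separates this kind of model from a cross-sectional regression.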

I don't think it would make sense to create a linear regression model like ## \text{Variable3} = a \cdot \text{Variable1} + b \cdot \text{Variable2} + c \cdot T ##... or does it?
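For reference, the regression written above can at least be fitted mechanically. The sketch below uses synthetic data (the coefficients `a_true`, `b_true`, `c_true` and the noise level are assumptions made up for the demo, not the fictional dataset) and also computes the lag-1 autocorrelation of the residuals, since ordinary least squares inference assumes uncorrelated errors and that is one thing worth checking when the rows are ordered in time.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic, purely illustrative data (not the dataset from the post).
n = 200
T = np.arange(n, dtype=float)
variable1 = rng.normal(size=n)
variable2 = rng.normal(size=n)

# Assumed "true" coefficients, chosen only for this demo.
a_true, b_true, c_true = 2.0, -1.0, 0.05
variable3 = (a_true * variable1 + b_true * variable2 + c_true * T
             + rng.normal(scale=0.1, size=n))

# Design matrix with columns Variable1, Variable2, T (no intercept, as in the formula).
X = np.column_stack([variable1, variable2, T])
coef, _, rank, _ = np.linalg.lstsq(X, variable3, rcond=None)
a_hat, b_hat, c_hat = coef

# Lag-1 autocorrelation of the residuals; values far from 0 suggest
# leftover time dependence that this regression does not capture.
resid = variable3 - X @ coef
lag1_autocorr = np.corrcoef(resid[:-1], resid[1:])[0, 1]

print("a, b, c estimates:", np.round(coef, 3))
print("lag-1 residual autocorrelation:", round(float(lag1_autocorr), 3))
```

Here the noise is independent by construction, so the residual autocorrelation should be near zero; with real time-ordered data that need not be the case.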

What kind of model would I use with a dataset like that?

Thank you!
