- #1

FluidStu


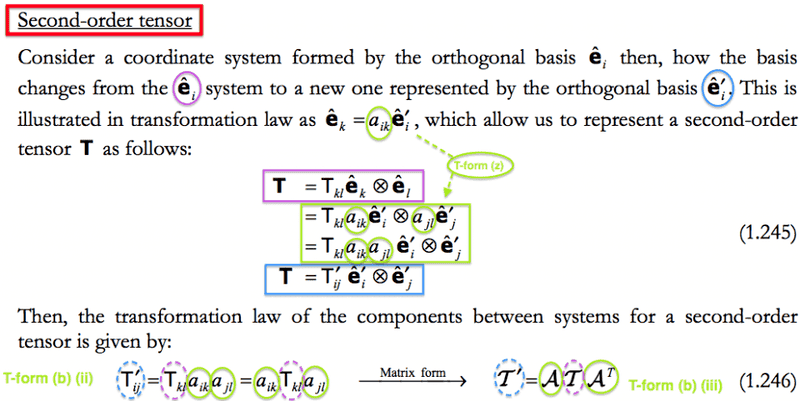

In the transformation of tensor components when changing the co-ordinate system, can someone explain the following:

Firstly, what is the point in re-writing the indicial form (on the left) as $a_{ik} T_{kl} a_{jl}$? Since we're representing the components in a matrix, and the transformation matrix is also a matrix, aren't we violating the non-commutativity of matrix multiplication ($AB \neq BA$)?

Secondly, how does this mean $A T A^{T}$ in matrix form? Why are we transposing the matrix?

Thanks in advance
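For anyone who wants to poke at this numerically, here is a minimal sketch. It assumes the indicial form in the image is $T'_{ij} = a_{ik} a_{jl} T_{kl}$ with summation over $k$ and $l$ (the image is not reproduced in text, so that is an assumption). The rotation matrix `A`, the sample tensor `T`, and all helper names are hypothetical and chosen only for illustration. The idea: in index notation every $a_{ik}$, $T_{kl}$, $a_{jl}$ is just a number, so the factors can be written in any order. The matrix order is fixed only by which summed indices sit next to each other, and in $a_{jl}$ the summed index $l$ comes second, which makes it the $(l, j)$ entry of $A^{T}$.

```python
import math

def transform_indicial(a, T):
    """T'_ij = sum over k, l of a_ik * a_jl * T_kl (assumed form of the rule)."""
    n = len(T)
    return [[sum(a[i][k] * a[j][l] * T[k][l]
                 for k in range(n) for l in range(n))
             for j in range(n)]
            for i in range(n)]

def matmul(X, Y):
    """Ordinary matrix product, (XY)_ij = sum over k of X_ik * Y_kj."""
    n = len(X)
    return [[sum(X[i][k] * Y[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]

def transpose(X):
    return [list(row) for row in zip(*X)]

def close(X, Y, tol=1e-9):
    """Element-wise comparison with a small floating-point tolerance."""
    return all(abs(x - y) <= tol
               for row_x, row_y in zip(X, Y)
               for x, y in zip(row_x, row_y))

# Hypothetical example: rotation by 30 degrees about the z-axis
c, s = math.cos(math.radians(30)), math.sin(math.radians(30))
A = [[c, s, 0.0], [-s, c, 0.0], [0.0, 0.0, 1.0]]
T = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0], [7.0, 8.0, 9.0]]

T_indicial = transform_indicial(A, T)               # index sum, factor order irrelevant
T_matrix = matmul(matmul(A, T), transpose(A))       # A T A^T
T_no_transpose = matmul(matmul(A, T), A)            # A T A, for comparison

print("index sum equals A T A^T:", close(T_indicial, T_matrix))
print("index sum equals A T A:  ", close(T_indicial, T_no_transpose))
```

Swapping the order of the three factors inside `transform_indicial` changes nothing, because they are scalars being multiplied inside a sum. Dropping the transpose in the matrix version does change the result for a non-symmetric `A`.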
