srnixo

- Homework Statement

- I'm confused about how to calculate the uncertainties. I have thought of several possible methods, so please help me! I need a clear answer.

- Relevant Equations

- .

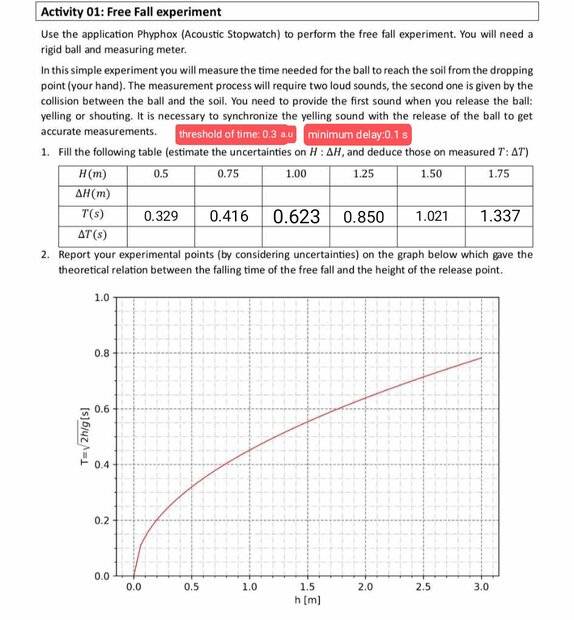

As you can see in the image, I recorded the times in the time column (s) of the table after doing the experiment at home with the phyphox app.

And now, I have some questions to fill in the remaining gaps:

The first question: about ΔH (m):

- Should I set it equal to zero [ΔH = 0] because I didn't calculate it and the values were given directly in the exercise?

- Or should it be at least 0.1 [ΔH = 0.1] to account for a possible small error in the measuring tool that was used?

- Or must I calculate it after all, as follows? [I don't know whether this is correct; it is the only method I know]:

----------------------------------------------------------------------------------------------------------------------------

The second question: about ΔT (s):

First of all, the phyphox tool I used to measure T offers two settings, a threshold and a minimum delay. I set them as follows:

The threshold: 0.3 a.u. (I don't know what a.u. means or which unit it is, so I can't finish the calculations.)

The minimum delay: 0.1 s

- So, should I assume that the threshold corresponds to a time interval, convert it to seconds (giving 0.003 s), and then compute ΔT = minimum delay + time interval, i.e. 0.1 + 0.003 = 0.103 s for all of them?

- Or should I use the formula ΔT = threshold × measured time + minimum delay, keeping the threshold as 0.3?

- Or should I calculate it the same way as before and write the same result in all the gaps?

Or is there another correct way? Please help!
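To make the arithmetic of the two candidate ΔT formulas concrete, here is a minimal Python sketch that uses only the phyphox settings quoted in this post. It takes the conversion of the threshold to 0.003 s at face value and does not claim that either formula is a valid uncertainty rule. The function names and the 0.5 s measured time in the example are hypothetical, since the actual times appear only in the table image.

```python
# Sketch of the two candidate ΔT formulas proposed in the post.
# Assumption: these are the poster's guesses, not an established rule.

THRESHOLD = 0.3    # phyphox threshold, in a.u. (unit unclear, as noted above)
MIN_DELAY_S = 0.1  # phyphox minimum delay, in seconds


def delta_t_option_1(interval_s: float = 0.003,
                     min_delay_s: float = MIN_DELAY_S) -> float:
    """Option 1: minimum delay + threshold read as a time interval (0.003 s).

    Does not depend on the measured time, so every row gets the same ΔT.
    """
    return min_delay_s + interval_s


def delta_t_option_2(measured_t_s: float,
                     threshold: float = THRESHOLD,
                     min_delay_s: float = MIN_DELAY_S) -> float:
    """Option 2: threshold * measured time + minimum delay (grows with T)."""
    return threshold * measured_t_s + min_delay_s


# Example: the 0.5 s measured time is hypothetical, not a value from the table.
print(round(delta_t_option_1(), 3))
print(round(delta_t_option_2(0.5), 3))
```

The sketch shows the practical difference between the two options: option 1 writes the same ΔT (0.103 s) in every row, while option 2 gives a different ΔT for each measured time.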
