ognik

- 643

- 2

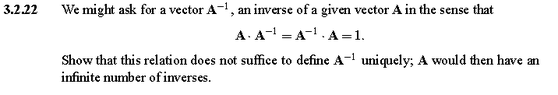

I have an exercise that asks me to show that, for vectors, $A \cdot A^{-1} = A^{-1} \cdot A = I$ does NOT define $A^{-1}$ uniquely.
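
A worked example of the claim in the exercise. It assumes that "$\cdot$" here is the ordinary dot product and that $I$ is read as the scalar $1$. For any nonzero vector $A$ and any vector $v$ with $A \cdot v = 0$:

$$
A \cdot \left( \frac{A}{|A|^2} + v \right) = \frac{A \cdot A}{|A|^2} + A \cdot v = 1 + 0 = 1 .
$$

Because the dot product is commutative, every such $B = A/|A|^2 + v$ satisfies $A \cdot B = B \cdot A = 1$. In two or more dimensions there are nonzero choices of $v$. For example, with $A = (1,0,0)$, both $B = (1,0,0)$ and $C = (1,1,0)$ work.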

But suppose there are at least two such inverses, $A^{-1} = B$ and $A^{-1} = C$.

Then $A \cdot B = I = A \cdot C$. Multiplying on the left by $B$ gives $BAB = BAC$, and since $BA = I$, $\therefore B = C$. Therefore $A^{-1}$ is unique? (I got lazy with the dots.)
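
Here is a quick numerical check of the step $BAB = BAC \Rightarrow B = C$. It again assumes that "$\cdot$" is the ordinary dot product of 3-vectors and that $I$ is the scalar $1$. The helper names `dot` and `scale` and the particular vectors are hypothetical choices made for illustration. The sketch shows that $B(A \cdot C)$ and $(B \cdot A)C$ are different vectors. This means the left-hand product cannot simply be regrouped as $(BA)B = (BA)C$ when the product is a dot product.

```python
# Sketch, assuming "·" is the ordinary dot product of 3-vectors and that
# "I" is read as the scalar 1. The vectors below are hypothetical examples.

def dot(u, v):
    """Dot product of two equal-length vectors given as tuples."""
    return sum(a * b for a, b in zip(u, v))

def scale(s, v):
    """Multiply the vector v by the scalar s."""
    return tuple(s * x for x in v)

A = (1, 0, 0)
B = (1, 0, 0)  # one candidate "inverse" of A
C = (1, 1, 0)  # a second, different candidate

# Both candidates satisfy A·B = B·A = 1 and A·C = C·A = 1.
print(dot(A, B), dot(B, A), dot(A, C), dot(C, A))

# B(A·C) is a scalar multiple of B, while (B·A)C is a scalar multiple of C,
# so the two groupings of "BAC" give different vectors.
print(scale(dot(A, C), B), scale(dot(B, A), C))
print(B == C)
```

The first line prints `1 1 1 1`, the second prints `(1, 0, 0) (1, 1, 0)`, and the last prints `False`.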