WMDhamnekar

- Homework Statement

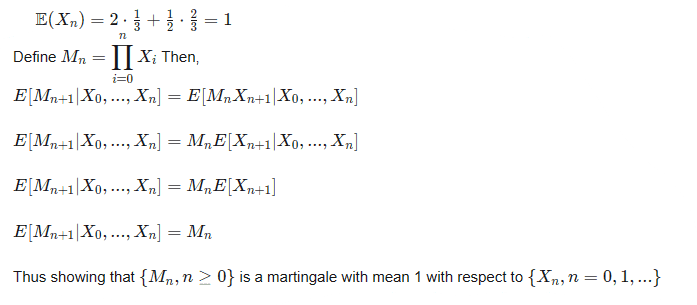

- Let ##X_1, X_2, \ldots## be independent, identically distributed random variables with ##\mathbb{P}\{X_j = 2\} = \frac13, \ \mathbb{P}\{X_j = \frac12\} = \frac23.##

Let ##M_0 = 1## and, for ##n \geq 1,## ##M_n = X_1 X_2 \cdots X_n.##

1. Show that ##M_n## is a martingale.

2. Explain why ##M_n## satisfies the conditions of the martingale convergence theorem.

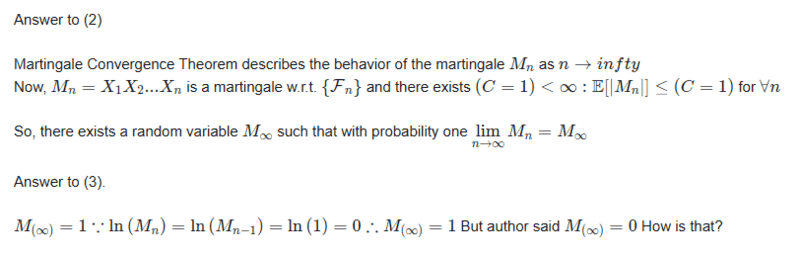

3. Let ##M_{\infty}= \lim\limits_{n\to\infty} M_n.## Explain why ##M_{\infty}=0## (Hint: there are at least two ways to show this. One is to consider ##\log M_n ## and use the law of large numbers. Another is to note that with probability one ##M_{n+1}/M_n## does not converge.)

4. Use the optional sampling theorem to determine the probability that ## M_n## ever attains a value as large as 64.

5. Does there exist a ##C < \infty## such that ##\mathbb{E}[M_n^2] \leq C## for all ##n##?

- Relevant Equations

- No relevant equations

Answer to 1.

Answer to 2.

How would you answer the remaining questions, 4 and 5?
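For question 5, one possible route (a sketch, not necessarily the intended textbook solution) is to compute the second moment directly. It uses only the independence of the ##X_j## and the distribution given in the problem statement:

```latex
\begin{align*}
\mathbb{E}[X_j^2] &= 2^2 \cdot \tfrac13 + \left(\tfrac12\right)^2 \cdot \tfrac23
                   = \tfrac43 + \tfrac16 = \tfrac32, \\
\mathbb{E}[M_n^2] &= \mathbb{E}\!\left[X_1^2 X_2^2 \cdots X_n^2\right]
                   = \prod_{j=1}^{n} \mathbb{E}[X_j^2]
                   = \left(\tfrac32\right)^{n} \xrightarrow[n\to\infty]{} \infty .
\end{align*}
```

Under this computation no finite ##C## can bound ##\mathbb{E}[M_n^2]## for all ##n.##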

The answers to 3 and 4 are wrong. The answer to 4 is ##\frac{1}{2^6}.##
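The value ##\frac{1}{2^6} = \frac{1}{64}## is consistent with the optional sampling argument: stopping at the first time ##M_n = 64## keeps the stopped martingale bounded by 64, its expectation stays equal to ##M_0 = 1,## and since ##M_n \to 0## on the event that 64 is never reached, ##64 \cdot \mathbb{P}(\text{hit } 64) = 1.## As a rough numerical sanity check, here is a minimal Monte Carlo sketch. The function names, the seed, and the cutoff `floor_exp` are illustrative assumptions and not part of the problem; the cutoff treats a path as "never reaching 64" once ##M_n## falls to ##2^{-20},## which introduces a small, negligible bias.

```python
import random


def hits_target(rng, target_exp=6, floor_exp=-20):
    """Simulate one path of log2(M_n) and report whether it reaches target_exp.

    log2(M_n) is a random walk: +1 with probability 1/3 (X_j = 2),
    -1 with probability 2/3 (X_j = 1/2). The walk is abandoned once it
    drops to floor_exp (an assumed cutoff, not part of the problem).
    """
    k = 0  # log2(M_0) = 0
    while floor_exp < k < target_exp:
        k += 1 if rng.random() < 1 / 3 else -1
    return k >= target_exp


def estimate_hit_probability(trials=50_000, seed=0):
    """Estimate P(M_n ever reaches 2**6 = 64) by simulation."""
    rng = random.Random(seed)
    hits = sum(hits_target(rng) for _ in range(trials))
    return hits / trials


if __name__ == "__main__":
    print("estimate:", estimate_hit_probability())
    print("1/64    :", 1 / 64)
```

The estimate fluctuates with the seed and the number of trials, so it should only be read as agreeing with ##\frac{1}{64}## up to sampling error.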
