- #1

fab13

- TL;DR Summary

- I would like to better understand how the contours are plotted and what the diagonal means, i.e. whether or not it corresponds to the posterior distribution.

I am currently working on forecasts in cosmology, and there are several details I haven't fully grasped.

With the Fisher formalism, a forecast allows one to compute constraints on cosmological parameters.

There are two points I don't understand:

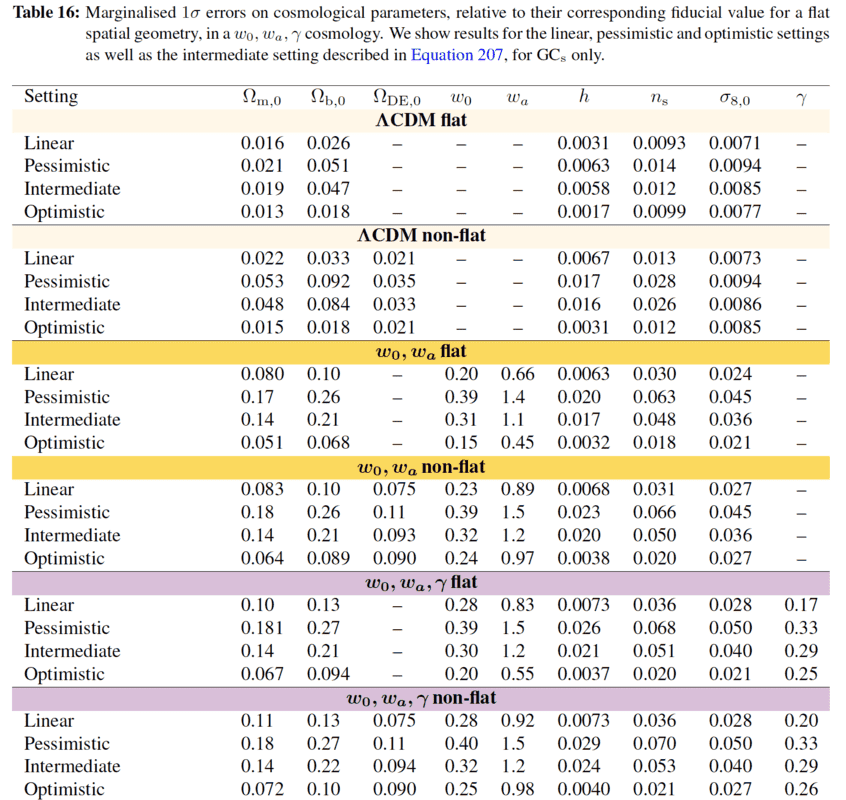

1) Below is a table containing all the errors estimated on these parameters, for different cases:

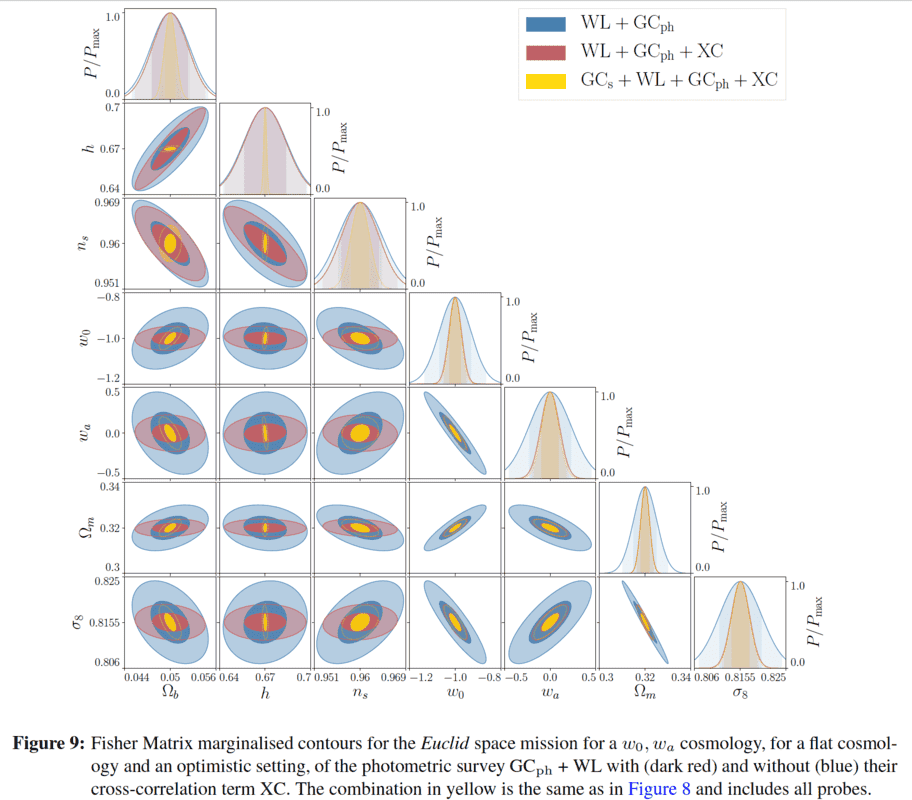

In the caption of Table 16, I don't understand what the term "Marginalized ##1\sigma## errors" means. Why do they say "marginalized"? Couldn't it simply be phrased as "the constraints obtained at the ##1\sigma## confidence level" or "errors at the ##1\sigma## C.L. (68% probability of lying in the interval of values)"?

If it had been written "marginalized ##2\sigma## errors", would the first value in Table 16 have been ##(\Omega_{m,0})_{2\sigma} = 2 \times (\Omega_{m,0})_{1\sigma} = 2 \times 0.016 = 0.032##?

I would like to understand this vocabulary, which is very specific to the forecast field.

2.1) Below is a figure representing the correlations between the different cosmological parameters, drawn as contours at the ##1\sigma## and ##2\sigma## confidence levels (C.L.):

As I understand it, the panels on the diagonal (with Gaussian shapes) represent the posterior distribution, i.e. ##\text{Probability(parameters|data)}##, or the probability of finding each parameter in a given interval of values, given the data.

But how can I justify that these posterior distributions are what appears on this descending diagonal?

I know the relation ##\text{posterior}= \dfrac{\text{likelihood}\,\times\,\text{prior}}{\text{evidence}}## or, equivalently, ##p(\theta|d)={\dfrac{p(d|\theta)p(\theta)}{p(d)}}##, with ##\theta## the parameters and ##d## the data.

We can use the Fisher formalism assuming the likelihood is Gaussian, and the covariance matrix of the posterior is then obtained by inverting the Fisher matrix.

So I wonder what the other panels (those off the diagonal) show, and above all what they represent in the formula above, in particular in relation to the posterior distribution: ##\text{posterior}= \dfrac{\text{likelihood}\,\times\,\text{prior}}{\text{evidence}}##

All these panels look like "joint distributions", but I can't recall what a joint distribution corresponds to here, or how it is linked to the posterior distribution.

2.2) Finally, a last question: the caption of Figure 9 also mentions "marginalized contours". There too, why use the term "marginalized"?

Any help is welcome; I would be very grateful.

If someone thinks this post should be moved to another forum, don't hesitate to do so. I posted here since there is a physical context, but I may be wrong.

Regards

PS: GC stands for the galaxy clustering probe, WL for weak lensing, GC##_{\text{ph}}## for the photometric probe, and XC for the cross-correlations.
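To make question 1 concrete, here is a minimal sketch of how I currently understand the link between a Fisher matrix and the quoted errors. The 2x2 matrix and its values are made up for illustration (they are not the numbers behind Table 16), the two parameters are hypothetical, and the whole thing assumes a Gaussian posterior, so please correct me if the logic is wrong.

```python
import numpy as np

# Toy 2x2 Fisher matrix for two hypothetical parameters (theta_1, theta_2).
# ASSUMPTION: these values are invented for illustration only.
F = np.array([[4.0e4, 1.5e4],
              [1.5e4, 1.0e4]])

# Under the Gaussian approximation, the parameter covariance is the inverse
# of the Fisher matrix.
cov = np.linalg.inv(F)

# "Marginalized" 1-sigma error on each parameter: the other parameter is
# integrated out, which amounts to reading the diagonal of the inverse.
sigma_marg = np.sqrt(np.diag(cov))

# "Unmarginalized" (conditional) 1-sigma error: the other parameter is held
# fixed at its fiducial value, so only the diagonal of F itself is used.
sigma_cond = 1.0 / np.sqrt(np.diag(F))

# Correlation coefficient between the two parameters; it sets the tilt of
# the ellipse in the off-diagonal panel of a contour plot.
rho = cov[0, 1] / (sigma_marg[0] * sigma_marg[1])

# For the 1D Gaussian of a single parameter, the 2-sigma half-width is
# simply twice the 1-sigma one.
two_sigma_marg = 2.0 * sigma_marg

print("marginalized 1-sigma  :", sigma_marg)
print("unmarginalized 1-sigma:", sigma_cond)
print("correlation           :", rho)
print("marginalized 2-sigma  :", two_sigma_marg)
```

In this toy case the marginalized errors come out larger than the unmarginalized ones, because the two parameters are correlated. Is that difference what the word "marginalized" in the captions refers to?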
