- #1

sponsoredwalk


*Bit of reading involved here, worth it if you have any interest in, or knowledge of, differential forms*.

It took me quite a while to find a good explanation of differential forms & I finally found something that made sense, in a sense. Most of what I've written below is just asking you to judge its general correctness, the notation etc., as some of it is based on intuition from the material I read. At the bottom you'll see I have an issue with projections & a concern about throwing around minus signs. Also I can't find a second source that describes forms this way, so hopefully someone will learn something. If anyone finds a source with a comparable explanation, please let me know.

A single variable differential 1-form is a map of the form:

[itex] f(x) \ dx \ : \ [a,b] \rightarrow \ \int_a^b \ f(x) \ dx[/itex]

When you take the constant 1-form it becomes clearer:

[itex] dx \ : \ [a,b] \rightarrow \ \int_a^b \ \ dx \ = \ \Delta x \ = \ b \ - \ a[/itex]
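A minimal numerical sketch of this reading, in Python. The helper name `one_form` and the midpoint-rule quadrature are illustrative choices of mine, not anything taken from the book; the only point is that a 1-form is treated as a function that eats a directed interval and returns a number.

```python
def one_form(f, steps=10_000):
    """Return the map (a, b) -> integral of f(x) dx over [a, b] (midpoint rule)."""
    def evaluate(a, b):
        h = (b - a) / steps
        return sum(f(a + (i + 0.5) * h) for i in range(steps)) * h
    return evaluate

dx = one_form(lambda x: 1.0)          # the constant 1-form dx
two_x_dx = one_form(lambda x: 2 * x)  # the 1-form 2x dx

print(dx(1.0, 4.0))        # approximately  3 = b - a
print(dx(4.0, 1.0))        # approximately -3, the interval is directed
print(two_x_dx(0.0, 3.0))  # approximately  9
```

Feeding the interval in backwards flips the sign, which is the one-dimensional version of the orientation issue that shows up later for triangles.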

Okay, I didn't know that's what a form was. Beautiful stuff! In my favourite kind of notation too!

This looks an awful lot like the linear algebra idea of a linear functional in

a vector space (V,F,σ,I):

[itex] f \ : \ V \ \rightarrow \ F [/itex]

where you satisfy the linearity property.

In more than one variable you can have:

[itex] dx \ : \ [a_1,b_1] \rightarrow \ \int_{(a_1,a_2)}^{(b_1,b_2)} \ \ dx \ = \ b_1 \ - \ a_1[/itex]

[itex] dy \ : \ [a_2,b_2] \rightarrow \ \int_{(a_1,a_2)}^{(b_1,b_2)} \ \ dy \ = \ b_2 \ - \ a_2[/itex]

which leads me to think that the following notation makes sense:

[itex] dx \ + \ dy \ : \ [a_1,b_1]\times [a_2,b_2] \rightarrow \ \int_{(a_1,a_2)}^{(b_1,b_2)} \ \ dx \ + \ dy \ = \ \int_{(a_1,a_2)}^{(b_1,b_2)} \ \ dx \ + \ \int_{(a_1,a_2)}^{(b_1,b_2)} \ dy = \ (b_1 \ - \ a_1) \ + \ (b_2 \ - \ a_2) \ = \ \Delta x \ + \ \Delta y[/itex]

The pull back stuff just "pulls" an integral in x variables "back" to 1

parametrized variable as far as I can see.

If [itex] \overline{a} \ =\ (a_1,a_2)[/itex] & [itex] \overline{b} \ =\ (b_1,b_2)[/itex] then:

[itex] \lambda_1 dx \ + \ \lambda_2 dy \ : \ [a_1,b_1]\times [a_2,b_2] \rightarrow \ \int_{ \overline{a}}^{ \overline{b}} \ \ \lambda_1 dx \ + \ \lambda_2 dy \ = \ \lambda_1 \int_{(a_1,a_2)}^{(b_1,b_2)} dx \ + \ \lambda_2 \int_{(a_1,a_2)}^{(b_1,b_2)} dy = \ \lambda_1 (b_1 \ - \ a_1) \ + \ \lambda_2 (b_2 \ - \ a_2) \ = \ \lambda_1 \Delta x \ + \ \lambda_2 \ \Delta y[/itex]
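The constant-coefficient case can be sketched in a few lines of Python. The function name `constant_one_form` is hypothetical, and I am assuming the form acts on the directed segment from a to b (rather than on the rectangle), which is how the book quote further down describes 1-forms.

```python
def constant_one_form(l1, l2):
    """Return the map (a, b) -> l1*(b1 - a1) + l2*(b2 - a2) for the directed segment a -> b."""
    def evaluate(a, b):
        return l1 * (b[0] - a[0]) + l2 * (b[1] - a[1])
    return evaluate

a, b = (1.0, 2.0), (4.0, 6.0)
dx = constant_one_form(1, 0)
dy = constant_one_form(0, 1)
omega = constant_one_form(2, 3)  # 2 dx + 3 dy

print(dx(a, b), dy(a, b))  # 3.0 4.0
print(omega(a, b))         # 18.0
print(omega(b, a))         # -18.0
```

Read this way, the value is just the dot product of the constant vector (λ1, λ2) with the displacement b − a, which is the Work parallel for a constant force.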

That's the notable stuff for 1-forms, also that they can be extended to

n dimensions very explicitly with this notation & things don't have to be

constant. The vector parallels (notably Work!) are just jumping out

already!

I'd like to quote the book now:

"Differential 1-forms are mappings from directed line segments to

the real numbers. Differential 2-forms are mappings from oriented

triangles to the real numbers".

So, by this comment what we're doing with a differential 2-form is finding the area in a triangle. What do you do when you find areas? Use the cross product! How does the cross product work? It works by finding the area contained within (n - 1) vectors & expresses it via a vector in n-space! Furthermore, from what I gather the whole theory is integration via simplices - p-dimensional triangles, or at least the general idea is.

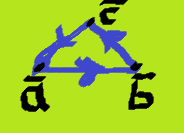

So if we have a positively oriented triangle:

Which we denote by [itex] T \ = \ [ \overline{a},\overline{b},\overline{c}][/itex] (This is all done in ℝ² for now)

what is the area of the triangle?

[itex] A \ = \ \frac{1}{2} \cdot b \cdot h \ = \ \frac{1}{2} \cdot ( ( \overline{c} \ - \ \overline{a}) \times ( \overline{b} \ - \ \overline{a}))[/itex]

Just extend it to 3 dimensions for the calculation & you get the result.

If you go from [itex] \overline{a}[/itex] to [itex] \overline{b}[/itex] to [itex] \overline{c}[/itex] you have

[itex] T \ = \ [ \overline{a},\overline{b},\overline{c}][/itex]

which is defined as a positive orientation & if you go from [itex] \overline{a}[/itex] to [itex] \overline{c}[/itex] to [itex] \overline{b}[/itex] you have

[itex] T \ = \ [ \overline{a},\overline{c},\overline{b}][/itex]

which is defined as a negative orientation.

[itex] dx \ dy \ : \ [ \overline{a},\overline{b},\overline{c}] \ \rightarrow \ 6[/itex]

[itex] dx \ dy \ : \ [ \overline{a},\overline{c},\overline{b}] \ \rightarrow \ - 6[/itex]

This is made clearer with the notation:

[itex] dx \ dy \ : \ T \ \rightarrow \ \int_T \ dx \ dy \ = \ \ \frac{1}{2} \cdot ( ( \overline{c} \ - \ \overline{a}) \times ( \overline{b} \ - \ \overline{a}))[/itex]
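Here is a small Python check of the ±6 behaviour, using the cross-product formula exactly as written above. The triangle is an arbitrary example I picked so that the numbers come out to 6, and the names `cross2` and `dx_dy` are illustrative.

```python
def cross2(u, v):
    """z-component of the cross product of two vectors in the plane."""
    return u[0] * v[1] - u[1] * v[0]

def dx_dy(a, b, c):
    """Half of (c - a) x (b - a) for the oriented triangle [a, b, c]."""
    u = (c[0] - a[0], c[1] - a[1])
    v = (b[0] - a[0], b[1] - a[1])
    return 0.5 * cross2(u, v)

a, b, c = (0, 0), (0, 3), (4, 0)
print(dx_dy(a, b, c))  # 6.0
print(dx_dy(a, c, b))  # -6.0
```

Which direction of travel comes out positive depends on the order of the two factors. With the order written above, this triangle is traversed clockwise from a to b to c and gives +6; the more common convention, ½ (b − a) × (c − a), makes counterclockwise positive instead.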

All of this I think I understand.

The next issue is defining 2-forms in 3 dimensional space. There is

talk of projections and such, I don't quite understand what's going on

though.

The projection of a point (x,y,z) onto the x-y plane is (x,y,0).

The projection of the triangle

[itex] T \ = \ [ \overline{a},\overline{b},\overline{c}] \ = \ [(a_1,a_2,a_3),(b_1,b_2,b_3),(c_1,c_2,c_3)][/itex]

onto the x-y plane is

[itex] T \ = \ [ \overline{a},\overline{b},\overline{c}] \ = \ [(a_1,a_2,0),(b_1,b_2,0),(c_1,c_2,0)][/itex].

They say that they will define the differential form [itex]dx \ dy[/itex] to be the mapping from the oriented triangle [itex]T[/itex] to the signed area of its projection onto the x-y plane, which is the z coordinate of [itex] \frac{1}{2} \cdot ( ( \overline{c} \ - \ \overline{a}) \times ( \overline{b} \ - \ \overline{a}))[/itex]

That doesn't make much sense, but I read on & see that

[itex] \frac{1}{2} \cdot ( ( \overline{c} \ - \ \overline{a}) \times ( \overline{b} \ - \ \overline{a})) \ = \ ( \int_T dy \ dz, \int_T dz \ dx, \int_T dx \ dy)[/itex]

Now, this makes sense in that what is orthogonal to dx dy is something

in the z coordinate, and what is orthogonal to dy dz is in the x

coordinate etc... But what does that justification paragraph actually say?

I get the feeling it's an insight I should know about, I don't understand

what's going on with the projections. I think that if I did I would have

predicted the integrals in the coordinates the way it's set up there!
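One way to see what the projections are doing is to compute both sides numerically. The sketch below (Python, with hypothetical helper names and an arbitrary triangle) compares each component of the half cross product with the signed area of the triangle after dropping one coordinate. The reason they agree is that the z component of a cross product only ever uses the x and y components of the two vectors, so it is exactly the planar cross product of the projected edges, and similarly for the other two components.

```python
def cross3(u, v):
    """Cross product of two vectors in 3-space."""
    return (u[1] * v[2] - u[2] * v[1],
            u[2] * v[0] - u[0] * v[2],
            u[0] * v[1] - u[1] * v[0])

def half_cross(a, b, c):
    """Half of (c - a) x (b - a), the vector from the formula above."""
    u = tuple(ci - ai for ci, ai in zip(c, a))
    v = tuple(bi - ai for bi, ai in zip(b, a))
    return tuple(0.5 * n for n in cross3(u, v))

def projected_area(a, b, c, i, j):
    """Signed area of [a, b, c] after keeping only coordinates i and j, in that order."""
    u = (c[i] - a[i], c[j] - a[j])
    v = (b[i] - a[i], b[j] - a[j])
    return 0.5 * (u[0] * v[1] - u[1] * v[0])

X, Y, Z = 0, 1, 2
a, b, c = (1, 0, 2), (3, 1, 0), (0, 4, 1)

n = half_cross(a, b, c)
areas = (projected_area(a, b, c, Y, Z),   # dy dz
         projected_area(a, b, c, Z, X),   # dz dx
         projected_area(a, b, c, X, Y))   # dx dy
print(n)      # (-3.5, -2.0, -4.5)
print(areas)  # (-3.5, -2.0, -4.5)
```

Note the cyclic order (y,z), (z,x), (x,y) of the coordinate pairs; listing a pair the other way round would flip the sign of that entry.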

Let me quote the actual paragraph in its entirety just in case:

We define the differential 2-form [itex]dx \ dy[/itex] in 2 dimensional space to be the mapping from an oriented triangle [itex] T \ = \ [ \overline{a},\overline{b},\overline{c}][/itex] to the signed area of its projection onto the x,y plane, which is the z coordinate of [itex] \frac{1}{2} \cdot ( ( \overline{c} \ - \ \overline{a}) \times ( \overline{b} \ - \ \overline{a}))[/itex].

Similarly, [itex]dz \ dx [/itex] maps this triangle to the signed area of its projection onto the z,x plane, which is the y coordinate of [itex] \frac{1}{2} \cdot ( ( \overline{c} \ - \ \overline{a}) \times ( \overline{b} \ - \ \overline{a}))[/itex].

The 2-form [itex]dy \ dz [/itex] maps this triangle to the signed area of its projection onto the y,z plane, the x coordinate of [itex] \frac{1}{2} \cdot ( ( \overline{c} \ - \ \overline{a}) \times ( \overline{b} \ - \ \overline{a}))[/itex].

"Second Year Calculus - D. Bressoud".

Also, based on all of that writing I still don't know why dx dy = - dy dx. What I mean by this is that after all that good work he just defines dx dy to be the area of that triangle. I think you can see my problem lies with the projection issues; I think if I understand what's going on with the projections I'll get it. Please don't just resort to telling me that

[itex] \hat{k} \ \times \ \hat{j} \ = \ - \hat{i}[/itex]

I mean I can justify things as they stand in a sense because I know dy dx

goes to the z dimension with the negative of what happens when dx dy goes

to the z-axis (from the algebra involved in the cross product derivation

via the orthogonality of the dot product) but I still feel like something

is missing or suspect. I don't feel very confident about this because of orientation:

[itex] dx \ dy \ : \ [ \overline{a},\overline{b},\overline{c}] \ \rightarrow \ 6[/itex]

[itex] dx \ dy * \ : \ [ \overline{a},\overline{c},\overline{b}] \ \rightarrow \ - 6[/itex]

I mean dx dy = 6 = - (-6) = - dx dy *, I just feel a little iffy about

throwing out minus signs to justify anti-commutativity issues! I don't

think that dx dy = 6 = - dy dx = dx dy* = - (-6), but that could just be

confusion.
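To keep the two kinds of minus sign apart, here is a hedged Python sketch. It assumes that dy dx is read as the same projection recipe with the two coordinates listed in the other order, which is my reading rather than a statement from the book; the names `signed_area`, `dx_dy` and `dy_dx` are illustrative.

```python
def signed_area(a, b, c, i, j):
    """Half of (c - a) x (b - a), using coordinate i first and coordinate j second."""
    u = (c[i] - a[i], c[j] - a[j])
    v = (b[i] - a[i], b[j] - a[j])
    return 0.5 * (u[0] * v[1] - u[1] * v[0])

X, Y = 0, 1

def dx_dy(a, b, c):
    return signed_area(a, b, c, X, Y)

def dy_dx(a, b, c):
    return signed_area(a, b, c, Y, X)

a, b, c = (0, 0), (0, 3), (4, 0)
print(dx_dy(a, b, c))  # 6.0
print(dx_dy(a, c, b))  # -6.0, same form, triangle reversed
print(dy_dx(a, b, c))  # -6.0, same triangle, coordinates swapped
print(dy_dx(a, c, b))  # 6.0, both swaps together cancel
```

Under that assumption, reversing the triangle and swapping the coordinates are two separate operations that each contribute a factor of −1: dx dy = − dy dx is a statement about the form on a fixed triangle, while 6 versus −6 above is a statement about one form on two different orientations.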

So, the question is just about the general correctness of what I wrote & then the issue of projections. I couldn't just post a question about projections because I'm not 100% sure my take on the theory that leads up to this is accurate (I think it is though!). To be quite honest, I have spent ages trying to find someone who would explain the theory in this way & have been unable to, which makes me think very few people view this subject this way. As such I would love to see how different it is for someone who takes the axiomatic, anti-commutative definitions that are found in nearly all of the books on Google. Whether or not this is new to you, please let me know anyway (and help if possible)!