NoahsArk

- TL;DR Summary

- What unique contributions to math did linear algebra make?

I've been struggling to understand what key insight or insights linear algebra brought to math, or what problems it made solvable that couldn't be solved before. To make a comparison with calculus, I understand that calculus' two key insights were finding a method to determine the slope at a point on a curve, and finding a way to calculate the area under a curve. With linear algebra it's much more vague to me what the contributions to mathematical knowledge were. Also, calculus has two founders, Newton and Leibniz, whose work in discovering calculus is preserved, and it's clear what they discovered (derivatives and integrals as described above). I don't know who, if anyone, is the founder of linear algebra or what their key ideas were in developing it.

First, you have the ancient Chinese manuscript from around 2,000 years ago describing how to solve a system of linear equations; this is used as an early example of linear algebra. Solving systems of linear equations, though, is a subject taught in regular algebra books and doesn't seem to be a unique contribution of linear algebra. Then there's the idea of matrices and vectors and how to multiply and perform other operations with them. While matrices and vectors are interesting to me as a unique way to symbolize groups of numbers, nothing jumps out at me about how or why this was the key insight of linear algebra, if it was the key insight at all.

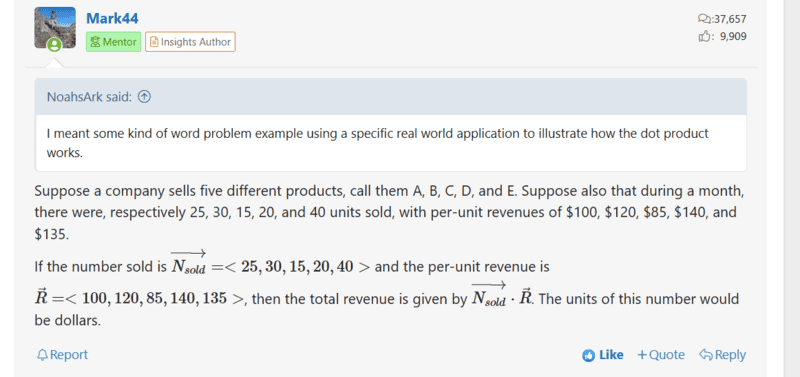

For example, @Mark44, I previously asked for a practical example of how the dot product is useful, and you gave an example:

I've been meaning to ask about this for a while: when you multiply these two vectors, the result is $15,575 (25x100 + 30x120 + 15x85 + 20x140 + 40x135). This is a problem, though, that can easily be figured out without linear algebra and without using vectors. It seems the only unique thing here mathematically is the use of vectors as symbols (assuming those are even unique to linear algebra and weren't already used in other areas of math) to represent repeated multiplication and addition. I don't see where the teeth are, though, in using the vector symbols in the operation as opposed to just multiplying each of the store's products by its price and adding all the dollar amounts together.
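To make the comparison concrete, here is a minimal Python sketch of the same calculation done both ways. The names `quantities` and `prices` are my own assumed labels (the example only gives the numbers, and which vector holds which is a guess), and `dot` is just an illustrative helper, not anything from a particular library.

```python
# Assumed labels: the thread only gives the numbers, so which list is
# "quantities" and which is "prices" is a guess made for readability.
quantities = [25, 30, 15, 20, 40]
prices = [100, 120, 85, 140, 135]


def dot(u, v):
    """Dot product: multiply matching entries, then add the products."""
    if len(u) != len(v):
        raise ValueError("vectors must have the same length")
    return sum(a * b for a, b in zip(u, v))


# Vector form: one operation on two lists.
total_dot = dot(quantities, prices)

# "By hand" form: the same products written out one at a time.
total_manual = 25 * 100 + 30 * 120 + 15 * 85 + 20 * 140 + 40 * 135

print(total_dot, total_manual)
```

Both lines compute the same number, which is exactly the point of the question: the vector form is a compact way of writing the same multiplications and additions.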

Similarly, with matrix multiplication, here we are also just multiplying and adding groups of numbers which we could've done without the language of matrices. Although the idea of a linear transformation, which can be done through matrix multiplication, is also interesting, it seems like this is again just repeated multiplying and adding of numbers.
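A small sketch of what I mean, assuming a made-up 2x2 matrix `A` and made-up vectors `u` and `v` (none of these values come from the thread, and `mat_vec` is a hypothetical helper). Each entry of the output is one dot product of a row with the input vector, and the last lines check the defining property of a linear transformation, A(u + v) = Au + Av, for these particular values.

```python
def mat_vec(matrix, vector):
    """Matrix-vector product: one dot product per row of the matrix."""
    return [sum(a * x for a, x in zip(row, vector)) for row in matrix]


# Hypothetical values chosen only for illustration.
A = [[2, 0],
     [1, 3]]
u = [1, 2]
v = [3, -1]

Au = mat_vec(A, u)
Av = mat_vec(A, v)

# Transform the sum, then compare with the sum of the transforms.
u_plus_v = [a + b for a, b in zip(u, v)]
A_of_sum = mat_vec(A, u_plus_v)
sum_of_A = [a + b for a, b in zip(Au, Av)]

print(A_of_sum, sum_of_A)
```

The arithmetic inside `mat_vec` is indeed nothing but multiplying and adding; the only extra thing the sketch shows is that the two results on the last line agree.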

Thanks
