member 731016

- Homework Statement

- Please see below

- Relevant Equations

- Please see below

For this,

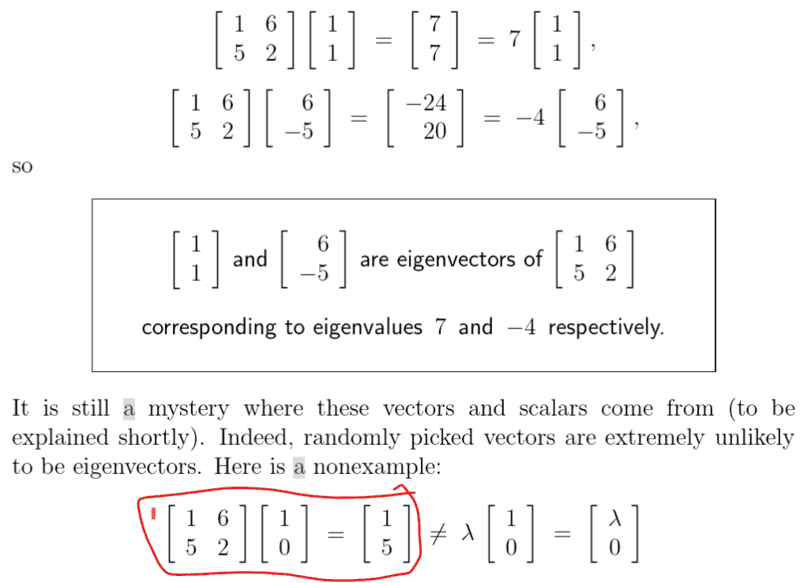

Does anybody please know why we cannot say ##\lambda = 1##, so that ##1## would be an eigenvalue of the matrix?

Many thanks!
