- #36

JimJCW


Jorrie said: Here is a patched version of Lightcone7, dated 2002-05-14, which numerically corrects for the offset in the low-z regime.

First, the output z range is still different from the input z range:

I did the following experiment:

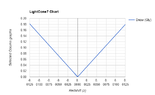

If I calculate Dnow using Gnedin's calculator and your 2022 calculator and plot 'DGnedin - DJorrie' vs z, I expect to see points fluctuating around the DGnedin - DJorrie = 0 line. But I got the following result:

I wonder what clues we can get out of this.
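
For anyone who wants to repeat this kind of check independently, here is a minimal sketch of how the comparison could be set up. It is not the code of either calculator. The function names (`hubble_ratio`, `d_now_mpc`, `residuals`) are made up for illustration, the default parameters (H0 = 67.74 km/s/Mpc, Ωm = 0.3089, ΩΛ = 0.6911) are assumed values that may not match the settings used in either calculator, and radiation is neglected, which should matter little at low z. Dnow is computed as the line-of-sight comoving distance by Simpson's rule.

```python
import math

C_KM_S = 299792.458        # speed of light, km/s
GLY_PER_MPC = 3.26156e-3   # 1 Mpc = 3.26156 million light years


def hubble_ratio(z, omega_m, omega_l):
    """E(z) = H(z)/H0 for matter + Lambda (+ curvature). Radiation is neglected."""
    omega_k = 1.0 - omega_m - omega_l
    zp1 = 1.0 + z
    return math.sqrt(omega_m * zp1**3 + omega_k * zp1**2 + omega_l)


def d_now_mpc(z, h0=67.74, omega_m=0.3089, omega_l=0.6911, steps=1000):
    """Line-of-sight comoving distance (Dnow) in Mpc, composite Simpson's rule.

    The default parameters are assumptions for illustration only.
    """
    if z == 0:
        return 0.0
    if steps % 2:              # Simpson's rule needs an even number of intervals
        steps += 1
    h = z / steps
    total = 1.0 / hubble_ratio(0.0, omega_m, omega_l)
    total += 1.0 / hubble_ratio(z, omega_m, omega_l)
    for i in range(1, steps):
        weight = 4 if i % 2 else 2
        total += weight / hubble_ratio(i * h, omega_m, omega_l)
    return (C_KM_S / h0) * total * h / 3.0


def residuals(z_values, d_a, d_b):
    """Return (z, d_a - d_b) pairs, e.g. for two calculators' Dnow columns."""
    if not (len(z_values) == len(d_a) == len(d_b)):
        raise ValueError("all three lists must have the same length")
    return [(z, a - b) for z, a, b in zip(z_values, d_a, d_b)]


if __name__ == "__main__":
    z_values = [0.01, 0.02, 0.05, 0.1]
    reference = [d_now_mpc(z) * GLY_PER_MPC for z in z_values]
    for z, d in zip(z_values, reference):
        print(f"z = {z:5.2f}  Dnow = {d:.6f} Gly")
```

To use it, paste each calculator's Dnow column into a list and pass both lists to `residuals`, together with the z values. Pure rounding noise should scatter around zero. A residual that drifts smoothly with z would more likely point to a difference in input parameters, in unit conversion, or in the integration scheme, though this sketch alone cannot tell which.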