falyusuf

- Homework Statement

- Remove the overfitting in the following example.

- Relevant Equations

- -

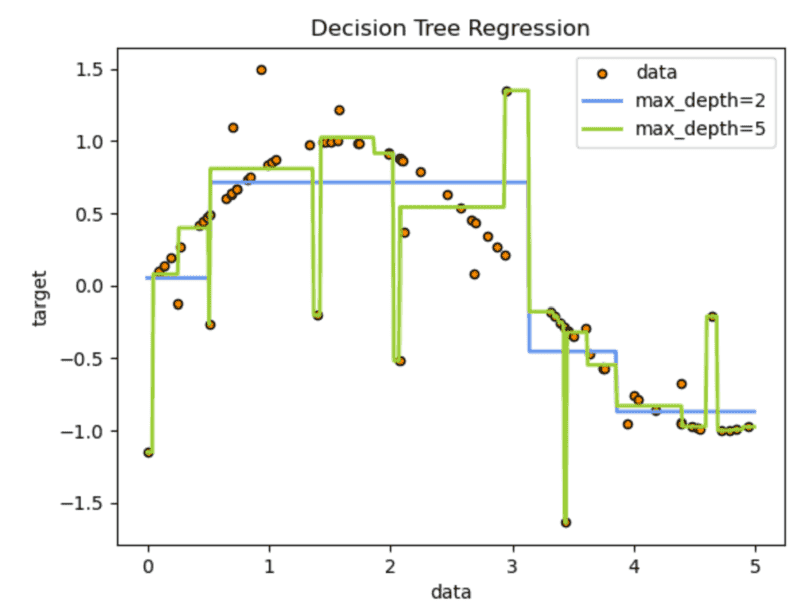

The decision tree in the following curve is too fine details of the training data and learn from the noise, (overfitting).

Ref: https://scikit-learn.org/stable/aut...lr-auto-examples-tree-plot-tree-regression-py
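To see the overfitting numerically rather than only in the plot, here is a minimal sketch that compares training and held-out error for trees of different depths. The data (80 noisy samples of sin(x), with every fifth target strongly perturbed) is an assumption modeled on the referenced scikit-learn tree regression example. The train/test split, the depths compared, and the helper `train_test_mse` are illustrative choices, not part of the original exercise.

```python
import numpy as np
from sklearn.tree import DecisionTreeRegressor
from sklearn.model_selection import train_test_split
from sklearn.metrics import mean_squared_error

# Assumed setup: noisy 1-D sine data similar to the scikit-learn example
rng = np.random.RandomState(1)
X = np.sort(5 * rng.rand(80, 1), axis=0)
y = np.sin(X).ravel()
y[::5] += 3 * (0.5 - rng.rand(16))  # add large noise to every 5th target

# Hold out 25% of the points to measure generalization
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0
)

def train_test_mse(max_depth):
    """Hypothetical helper: fit a depth-limited tree, return (train MSE, test MSE)."""
    tree = DecisionTreeRegressor(max_depth=max_depth, random_state=0)
    tree.fit(X_train, y_train)
    return (
        mean_squared_error(y_train, tree.predict(X_train)),
        mean_squared_error(y_test, tree.predict(X_test)),
    )

# max_depth=None lets the tree grow until every leaf is pure
results = {depth: train_test_mse(depth) for depth in (2, 5, None)}
for depth, (train_mse, test_mse) in results.items():
    print(f"max_depth={depth}: train MSE={train_mse:.3f}, test MSE={test_mse:.3f}")
```

An unrestricted tree drives the training error to zero by giving each noisy point its own leaf, which is the memorization visible in the curve. A large gap between training and test error is the usual numerical symptom of overfitting.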

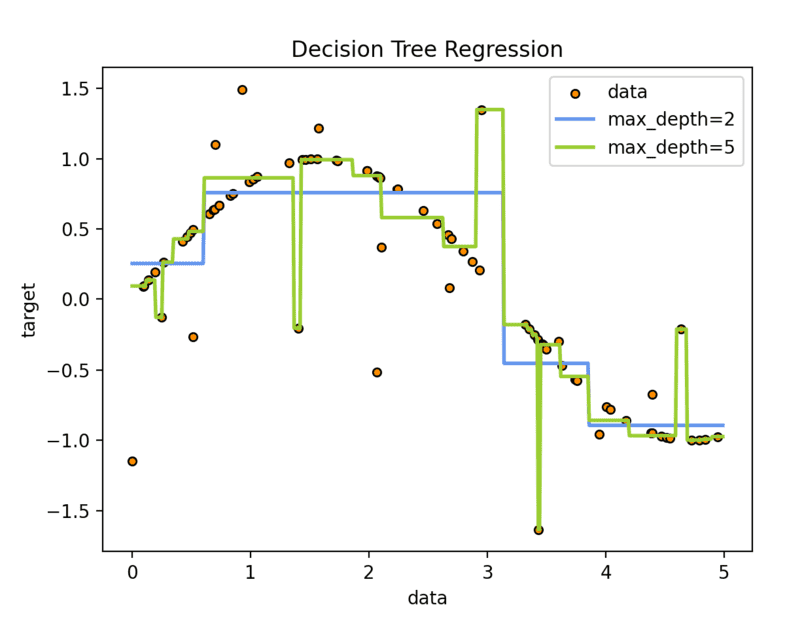

I tried to remove the overfitting but not sure about the result, can someone confirm my answer? Here's what I got:
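One way to check whether a fix actually removes the overfitting, rather than judging the curve by eye, is to choose the tree's complexity by cross-validation. The sketch below does this with scikit-learn's `GridSearchCV` over `max_depth` and `min_samples_leaf`. The parameter grid, the 5-fold shuffled split, and the noisy-sine data are all assumptions for illustration.

```python
import numpy as np
from sklearn.tree import DecisionTreeRegressor
from sklearn.model_selection import GridSearchCV, KFold

# Assumed setup: same style of noisy sine data as the scikit-learn example
rng = np.random.RandomState(1)
X = np.sort(5 * rng.rand(80, 1), axis=0)
y = np.sin(X).ravel()
y[::5] += 3 * (0.5 - rng.rand(16))

# Search over settings that limit tree complexity (grid is an assumption)
search = GridSearchCV(
    DecisionTreeRegressor(random_state=0),
    param_grid={
        "max_depth": list(range(1, 11)),
        "min_samples_leaf": [1, 3, 5, 10],
    },
    scoring="neg_mean_squared_error",
    # Shuffle because X is sorted; unshuffled folds would hold out contiguous x-ranges
    cv=KFold(n_splits=5, shuffle=True, random_state=0),
)
search.fit(X, y)

# best_estimator_ is refit on all the data with the best parameters
best_tree = search.best_estimator_
print(search.best_params_, "CV MSE:", -search.best_score_)
```

If the settings you picked by hand give a cross-validated error close to the best one found here, that is reasonable evidence the overfitting has been reduced. Cost-complexity pruning (the `ccp_alpha` parameter of `DecisionTreeRegressor`) is another way to limit tree size.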