
Math Amateur

I am reading Matej Bresar's book, "Introduction to Noncommutative Algebra" and am currently focussed on Chapter 1: Finite Dimensional Division Algebras ... ...

I need help with the proof of Lemma 1.25 ...

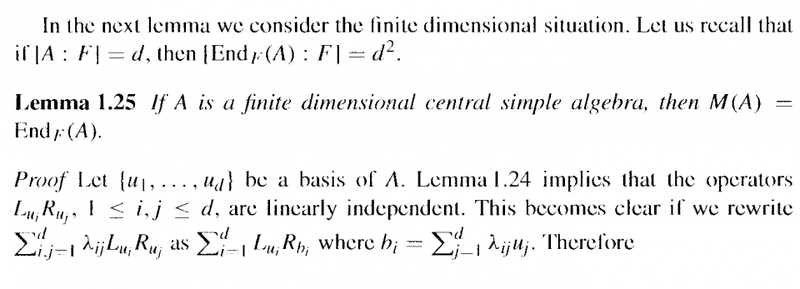

Lemma 1.25 reads as follows:

My questions on the proof of Lemma 1.25 are as follows:Question 1

In the above text from Bresar we read the following:

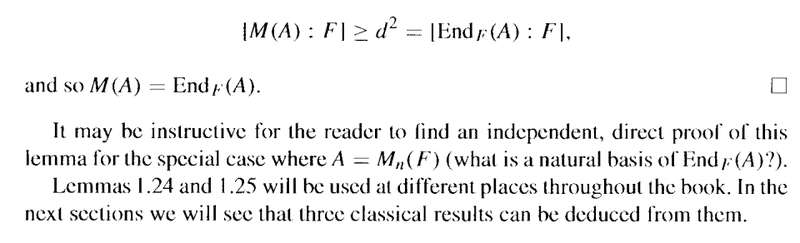

" ... ... Therefore ##[ M(A) \ : \ F ] \ge d^2 = [ \text{ End}_F (A) \ : \ F ]## ... ... "Can someone please explain exactly why Bresar is concluding that ##[ M(A) \ : \ F ] \ge d^2## ... ... ?Question 2

In the above text from Bresar we read the following:

" ... ... Therefore ##[ M(A) \ : \ F ] \ge d^2 = [ \text{End}_F (A) \ : \ F ]##

and so ##M(A) = \text{End}_F (A)##. ... ... "

Can someone please explain exactly why ##[ M(A) \ : \ F ] \ge d^2 = [ \text{End}_F (A) \ : \ F ]## ... ...

... implies that ... ##M(A) = \text{End}_F (A)## ...
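A small sanity check of the dimension count may help here. The sketch below assumes that ##A## is a finite-dimensional central simple algebra over ##F##, with ##[A : F] = d##, and that ##M(A)## is the subalgebra of ##\text{End}_F(A)## generated by the multiplications ##L_a : x \mapsto ax## and ##R_b : x \mapsto xb##. It takes ##A = M_2(\mathbb{Q})## with the matrix units as basis ##u_1, \dots, u_4## (so ##d = 4##), builds the ##d^2 = 16## operators ##L_{u_i}R_{u_j}##, and computes the dimension of their span exactly over ##\mathbb{Q}##. As a contrast it repeats the count for ##\mathbb{Q}(i)##, which is simple but not central over ##\mathbb{Q}##. All function names are hypothetical. This is a numerical illustration of the two steps in one example, not Bresar's proof.

```python
from fractions import Fraction
from itertools import product

# Hypothetical helpers for illustration only; not taken from Bresar's book.

def rank(rows):
    """Rank of a list of equal-length vectors, by exact Gauss-Jordan elimination over Q."""
    m = [[Fraction(v) for v in row] for row in rows]
    r = 0
    ncols = len(m[0]) if m else 0
    for c in range(ncols):
        pivot = next((i for i in range(r, len(m)) if m[i][c] != 0), None)
        if pivot is None:
            continue
        m[r], m[pivot] = m[pivot], m[r]
        for i in range(len(m)):
            if i != r and m[i][c] != 0:
                f = m[i][c] / m[r][c]
                m[i] = [a - f * b for a, b in zip(m[i], m[r])]
        r += 1
    return r


def multiplication_operator_rank(basis, mul, coords):
    """Dimension of span{ L_{u_i} R_{u_j} } inside End_F(A), where d = len(basis).

    L_a R_b is the map x -> a x b. Each operator is recorded as its d x d matrix
    (column k = coordinates of a * u_k * b), flattened into a vector of length d^2.
    """
    ops = []
    for a, b in product(basis, repeat=2):
        columns = [coords(mul(mul(a, x), b)) for x in basis]
        ops.append([entry for col in columns for entry in col])
    return rank(ops)


# Example 1: A = M_2(Q), a central simple Q-algebra with d = 4.
def mat_mul(p, q):
    return tuple(
        tuple(sum(p[i][k] * q[k][j] for k in range(2)) for j in range(2))
        for i in range(2)
    )

def mat_coords(p):
    return [p[0][0], p[0][1], p[1][0], p[1][1]]

matrix_units = [
    ((1, 0), (0, 0)), ((0, 1), (0, 0)),
    ((0, 0), (1, 0)), ((0, 0), (0, 1)),
]

# Example 2 (contrast): A = Q(i) with basis 1, i. It is a field, hence simple,
# but commutative, so its center is all of A and it is not central over Q (d = 2).
def gauss_mul(p, q):
    (a, b), (c, d) = p, q
    return (a * c - b * d, a * d + b * c)

gauss_basis = [(1, 0), (0, 1)]

if __name__ == "__main__":
    d = len(matrix_units)
    print(multiplication_operator_rank(matrix_units, mat_mul, mat_coords), d * d)  # 16 16
    d = len(gauss_basis)
    print(multiplication_operator_rank(gauss_basis, gauss_mul, list), d * d)  # 2 4
```

For ##M_2(\mathbb{Q})## the 16 operators ##L_{u_i}R_{u_j}## turn out to be linearly independent, so their span already has dimension ##d^2##; linear independence of these ##d^2## elements of ##M(A)## is the kind of fact that yields ##[M(A) : F] \ge d^2##. Since ##M(A) \subseteq \text{End}_F(A)## and ##[\text{End}_F(A) : F] = d^2##, a subspace of a finite-dimensional space whose dimension is at least that of the whole space must equal it, giving ##M(A) = \text{End}_F(A)##. For ##\mathbb{Q}(i)## every ##L_a R_b## equals ##L_{ab}##, so the span has dimension only ##2 < 4##, which suggests why the central hypothesis matters.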

Hope someone can help ...

Peter

===========================================================*** NOTE ***

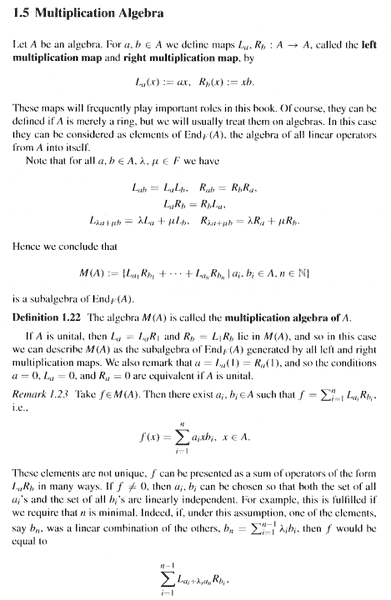

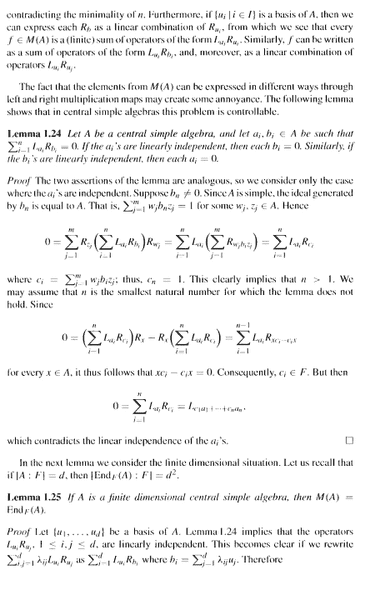

So that readers of the above post will be able to understand the context and notation of the post ... I am providing Bresar's first two pages on Multiplication Algebras ... ... as follows:
