Leo Liu

Homework Statement: see the problem image below.

Relevant Equations: ##\nabla f=\lambda\nabla g##

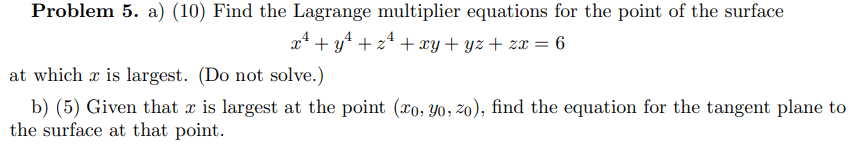

Problem:

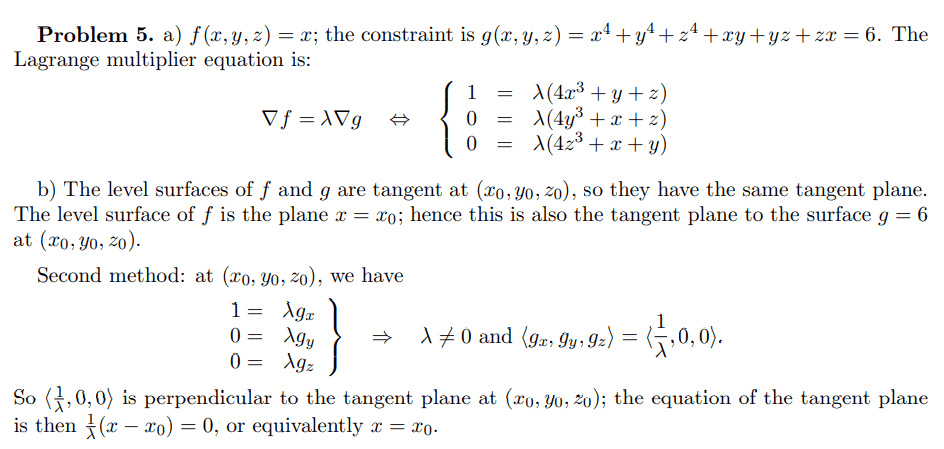

Solution:

My question:

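My reasoning was that if x attains its maximum at a point on the constraint surface ##g(x,y,z)=6##, then the gradient vector of g at that point has only an x-component; that is, ##g_y=0## and ##g_z=0##. Since ##g_y=4y^3+x+z## and ##g_z=4z^3+x+y##, this gives: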

$$\begin{cases}
4y^3+x+z=0\\
4z^3+x+y=0\\
\underbrace{x^4+y^4+z^4+xy+yz+zx=6}_\text{constraint equation}
\end{cases}$$

which yields the same solutions as the system of equations in the official answer.
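To double-check that this system really has solutions, here is a minimal pure-Python sketch (my own check, not part of the official answer) that runs Newton's method on the three equations. The function names and the starting guess ##(1.6,-0.6,-0.6)## are my own choices. Newton's method only finds the solution near that guess, so it gives one candidate point, not the maximum by itself.

```python
"""Newton's method on the 3x3 system above (pure Python, no SciPy).

Unknowns p = (x, y, z). The three equations are
    g_y = 4y^3 + x + z = 0
    g_z = 4z^3 + x + y = 0
    g - 6 = x^4 + y^4 + z^4 + xy + yz + zx - 6 = 0
"""


def residuals(p):
    """Left-hand sides of the three equations at p = (x, y, z)."""
    x, y, z = p
    return [
        4 * y**3 + x + z,
        4 * z**3 + x + y,
        x**4 + y**4 + z**4 + x * y + y * z + z * x - 6,
    ]


def jacobian(p):
    """Partial derivatives of residuals(p) w.r.t. x, y, z (one row per equation)."""
    x, y, z = p
    return [
        [1.0, 12 * y**2, 1.0],
        [1.0, 1.0, 12 * z**2],
        [4 * x**3 + y + z, 4 * y**3 + x + z, 4 * z**3 + x + y],
    ]


def det3(m):
    """Determinant of a 3x3 matrix by cofactor expansion along the first row."""
    return (
        m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
        - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
        + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0])
    )


def solve3(a, b):
    """Solve a @ v = b for a 3x3 matrix a using Cramer's rule."""
    d = det3(a)
    if d == 0:
        raise ZeroDivisionError("singular Jacobian")
    v = []
    for col in range(3):
        m = [row[:] for row in a]  # copy a, then replace one column by b
        for r in range(3):
            m[r][col] = b[r]
        v.append(det3(m) / d)
    return v


def newton_solve(start, tol=1e-12, max_iter=50):
    """Iterate p <- p + delta, where J(p) delta = -F(p), until |F| < tol."""
    p = list(start)
    for _ in range(max_iter):
        f = residuals(p)
        if max(abs(v) for v in f) < tol:
            return tuple(p)
        delta = solve3(jacobian(p), [-v for v in f])
        p = [pi + di for pi, di in zip(p, delta)]
    raise RuntimeError("Newton's method did not converge")


if __name__ == "__main__":
    # Hypothetical starting guess, picked by hand near a solution with y = z.
    point = newton_solve((1.6, -0.6, -0.6))
    print("candidate (x, y, z):", point)
    print("residuals:", residuals(point))
```

From that guess it should converge to a point with ##y=z##, roughly ##(1.647,-0.633,-0.633)##. Other starting guesses are needed to find the remaining solutions; the largest x among all of them is the maximum.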

What puzzles me is why the answer sets ##f(x,y,z)=x##. I understand that the gradient vector should be parallel to ##\langle 1,0,0\rangle##, and that taking ##f=x## gives exactly ##\nabla f=\langle 1,0,0\rangle##. But that step looks reverse-engineered to me. Can someone please explain where ##f(x,y,z)=x## comes from?
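For reference, here is how the relevant equation reduces when ##f(x,y,z)=x## is taken as the function to maximize, assuming (as in my system) ##g(x,y,z)=x^4+y^4+z^4+xy+yz+zx## with the constraint ##g=6##:

$$\nabla f=\lambda\nabla g
\;\Longrightarrow\;
\begin{cases}
1=\lambda\,(4x^3+y+z)\\
0=\lambda\,(4y^3+x+z)\\
0=\lambda\,(4z^3+x+y)\\
x^4+y^4+z^4+xy+yz+zx=6
\end{cases}$$

The first line forces ##\lambda\neq0##, so the next two reduce to ##g_y=0## and ##g_z=0##. Conversely, ##\nabla g=0## can never happen on ##g=6##: since ##xg_x+yg_y+zg_z=4(x^4+y^4+z^4)+2(xy+yz+zx)##, it would force ##x^4+y^4+z^4=-6##. So ##g_x\neq0## at every solution of my system, ##\lambda=1/g_x## is always defined, and the two systems have the same solutions in ##(x,y,z)##.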

Many thanks.
