brotherbobby

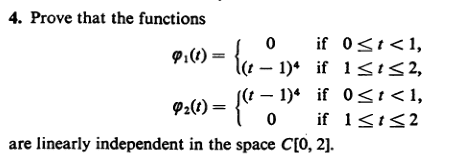

Summary: I attach a picture of the given problem, followed by my attempt to solve it.

We are required to show that ##\alpha_1 \varphi_1(t) + \alpha_2 \varphi_2(t) = 0## for all ##t \in [0,2]##, with ##\alpha_1, \alpha_2 \in \mathbb{R}##, is only possible when ##\alpha_1 = \alpha_2 = 0##.
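Spelling out the quantifiers may help, because the pair ##(\alpha_1, \alpha_2)## is chosen once and must then work at every point. This is the standard definition, not something taken from the attached problem:

$$\varphi_1, \varphi_2 \text{ linearly dependent on } [0,2] \iff \exists\, (\alpha_1, \alpha_2) \neq (0,0) \text{ such that } \alpha_1 \varphi_1(t) + \alpha_2 \varphi_2(t) = 0 \ \text{ for all } t \in [0,2].$$

Linear independence is the negation: for every ##(\alpha_1, \alpha_2) \neq (0,0)## there is at least one ##t \in [0,2]## at which ##\alpha_1 \varphi_1(t) + \alpha_2 \varphi_2(t) \neq 0##.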

I don't know how to proceed from here. Surely there are infinitely many real points in the interval ##[0,2]##, so it seems impossible to verify the condition at every single point.
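A minimal sketch of one standard way around the "infinitely many points" issue: any relation ##\alpha_1 \varphi_1 + \alpha_2 \varphi_2 = 0## that holds on all of ##[0,2]## also holds at any finite set of sample points. Stacking the sampled values into a matrix, full column rank means only ##\alpha_1 = \alpha_2 = 0## fits every sample, so the functions are independent; a lower rank is inconclusive. The definitions of `phi1` and `phi2` are an assumption (hypothetical, since the problem statement is only in the picture), chosen to match the values used at ##t = 1/2##: ##\varphi_1 = 0## and ##\varphi_2 = (t-1)^4## on ##[0,1]##, with the roles swapped on ##[1,2]##. The helpers `sample_matrix` and `rank` are likewise illustrative names.

```python
from fractions import Fraction

# ASSUMED piecewise definitions (hypothetical; the real ones are in the image):
# phi1 vanishes on [0, 1] and equals (t - 1)^4 on [1, 2];
# phi2 equals (t - 1)^4 on [0, 1] and vanishes on [1, 2].
def phi1(t):
    return Fraction(0) if t <= 1 else (t - 1) ** 4

def phi2(t):
    return (t - 1) ** 4 if t <= 1 else Fraction(0)

def sample_matrix(funcs, points):
    """Row i holds the value of every function at points[i]."""
    return [[f(t) for f in funcs] for t in points]

def rank(matrix):
    """Rank by Gaussian elimination over exact fractions (no rounding)."""
    m = [row[:] for row in matrix]
    r = 0
    ncols = len(m[0]) if m else 0
    for c in range(ncols):
        # Find a row at or below r with a nonzero entry in column c.
        pivot = next((i for i in range(r, len(m)) if m[i][c] != 0), None)
        if pivot is None:
            continue
        m[r], m[pivot] = m[pivot], m[r]
        # Clear column c in every other row.
        for i in range(len(m)):
            if i != r and m[i][c] != 0:
                factor = m[i][c] / m[r][c]
                m[i] = [a - factor * b for a, b in zip(m[i], m[r])]
        r += 1
    return r

# Sample at the midpoints of [0, 1] and [1, 2].
points = [Fraction(1, 2), Fraction(3, 2)]
A = sample_matrix([phi1, phi2], points)
print(rank(A))  # prints 2
```

Each row of `A` is one equation ##\alpha_1 \varphi_1(t_i) + \alpha_2 \varphi_2(t_i) = 0##, so the rank check asks whether a single nonzero pair can satisfy all sampled equations at once. Exact `Fraction` arithmetic is used so that the zero test in the elimination is not affected by floating-point rounding.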

Let me make an attempt with two points of interest, one in the middle of ##[0,1]## and the other in the middle of ##[1,2]##.

At ##t = 1/2##:

We have ##\alpha_1\times 0 + \alpha_2 (1/2 - 1)^4 = 0##, which holds only if ##\alpha_2 = 0##, but ##\alpha_1## does not have to be zero.

Likewise, at ##t = 3/2##, ##\alpha_2 \neq 0## is admissible.

Hence, the two functions are linearly dependent by my reasoning!

Any help would be welcome.

[Moderator's note: Moved from a technical forum and thus no template.]
