bremenfallturm


TL;DR Summary: I know that the error of Runge-Kutta 4 (and many other methods) can be estimated from ##\text{values at step length } h - \text{values at step length } 2h## (Def. 1). With my numerical approach, however, the number of steps taken with ##h## is not twice the number taken with ##2h##, i.e. ##N_h = 2N_{2h}## (Eqn. 1) does not hold. I think this is because I change the step length near zero crossings. I need help working out how to estimate the error.

Hello! I'm currently working on a problem that models the motion of a bouncing ball:

$$\begin{aligned} m \ddot{x} & =-k_x \dot{x} \sqrt{\dot{x}^2+\dot{y}^2} \\ m \ddot{y} & =-k_y \dot{y} \sqrt{\dot{x}^2+\dot{y}^2}-m g \end{aligned}$$

Given that ##k_x, k_y=0.005##, ##m=0.01## and ##g=9.81## and when ##y## crosses zero, ##\dot y## should change sign.

The initial conditions are ##y(-1.2)=0.3##, ##\dot y(-1.2)=0##, ##\dot x(-1.2)=4##

I stated the equation above for context, but I don't think that it is the core of my question.

More about the problem:

I'm new to this forum and have used Matlab to solve the problem, but it seems to me like the Matlab category is more for the coding aspect of things. This question is more algorithm-oriented and specifically about error approximation. I apologize if I put it in the wrong place.

My current algorithm to solve this differential equation is:

I will refer to "Runge-Kutta 4" as RK4 below.

5. To find the value of ##x=x_0##, pick the ##n+1## ##x##-values around ##x_0## and interpolate. I calculate the error by using

##|P_{n+1}-P_{n}(x_0)|##, where ##P_n## gives the ##y##-value of the interpolated polynomial with degree ##n## at ##x_0##.

Here, also given the conditional that ##|P_{n+1}-P_{n}(x_0)|<\mathcal O##

______

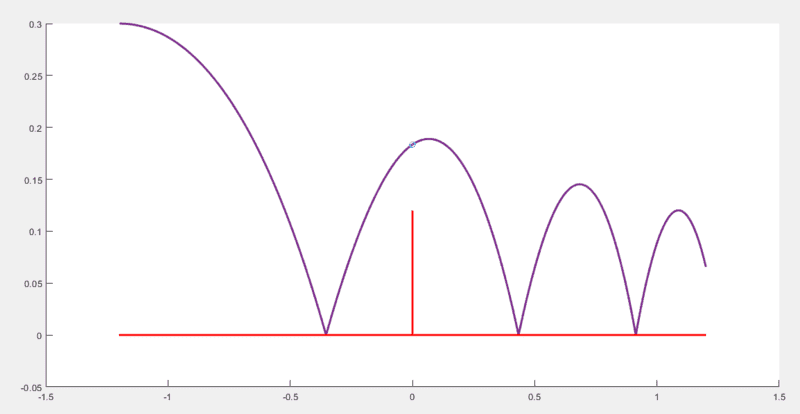

The solution is plotted below

The thing I'm having problems with is the error calculation of the main RK4 process. From above I know that:

$$\begin{cases}

E_{k\text{:th zero crossing}} = |x_{\text{zero crossing}}(h)-x_{\text{zero crossing}}(2h)| \\

E_{\text{interpolation}} = |P_{n+1}-P_{n}(x_0)|

\end{cases}$$

I have, however, used RK4 for the whole calculations, just not the zero crossings.

TL:DR this is my statement of the problem:

My problem is that I know that the absolute error (for Runge-Kutta 4, and many other methods) is defined as ##\text{values at step length } h - \text{values at step length } 2h## (Def. 1). When I try to run my code with step size ##h##, I don't get the length of ##\text{length } \text{values at step length } h = \text{length } \text{values at step length } 2h \cdot 2## (Eqn. 1). I think this has to do with the fact that I'm changing the step length near zero crossings (see 2. in my algorithm).

Hence, the question: how should I figure out the error for the main Runge-Kutta 4 iterations in this case?

The problem is that vectors do not line up in size, and even if Eqn. 1 would be true, it feels kind of funky to take "every second element of the vector with the finer step length" subtracted with the previous iteration to calculate the error.

I have learnt about Richardson's extrapolation and thought that it might be viable here. But what should I Richardson extrapolate? Every value calculated with Runge-Kutta 4? The points included at the interpolation near ##x = x_0##?

I hope I've outlined my problem and approach clearly enough and that it can be understood.

If not, please let me know what's missing! (Let's hope I didn't forget anything critical, heh)

$$\begin{aligned} m \ddot{x} & =-k_x \dot{x} \sqrt{\dot{x}^2+\dot{y}^2} \\ m \ddot{y} & =-k_y \dot{y} \sqrt{\dot{x}^2+\dot{y}^2}-m g \end{aligned}$$

Here ##k_x = k_y = 0.005##, ##m = 0.01## and ##g = 9.81##, and whenever ##y## crosses zero, ##\dot y## changes sign (the ball bounces).

The initial conditions, at the start point ##x=-1.2##, are ##y=0.3##, ##\dot y=0## and ##\dot x=4##.
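Written as a first-order system with state ##\vec u = (x, y, \dot x, \dot y)##, the right-hand side could look like the Python sketch below. It assumes time is the independent variable; the names `ball_rhs` and `U0` are hypothetical, and the bounce (sign flip of ##\dot y##) is deliberately left out because it is handled by the integration loop, not by the ODE itself.

```python
import math

# Parameters from the problem statement
K_X = K_Y = 0.005
M = 0.01
G = 9.81


def ball_rhs(u):
    """Return u' for the state u = (x, y, vx, vy), with vx = x-dot, vy = y-dot."""
    vx, vy = u[2], u[3]
    speed = math.hypot(vx, vy)              # sqrt(vx^2 + vy^2)
    ax = -K_X * vx * speed / M
    ay = -K_Y * vy * speed / M - G
    return (vx, vy, ax, ay)


# Initial state (assumed ordering): x = -1.2, y = 0.3, x-dot = 4, y-dot = 0
U0 = (-1.2, 0.3, 4.0, 0.0)
```

At ##\vec u_0## the horizontal deceleration is ##(k_x/m)\,\dot x\,|\vec v| = 0.5 \cdot 4 \cdot 4 = 8##, and the vertical acceleration is just ##-g## since ##\dot y = 0##.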

I state the equations above for context, but I don't think they are the core of my question.

More about the problem:

- The system models a ball bouncing on a flat surface, starting at ##x=-1.2## and ending at ##x=1.2##. The latter is my stop condition (see below).

- The task is to find the value of ##y## at a given point ##x_0## with an error smaller than a tolerance ##\mathcal O##.

I'm new to this forum and have used MATLAB to solve the problem, but it seems to me that the MATLAB category is more about the coding side of things. This question is more algorithm-oriented, specifically about error estimation, so I apologize if I've put it in the wrong place.

My current algorithm for solving this system is:

I will refer to "Runge-Kutta 4" as RK4 below.

1. I have rewritten the system as a set of first-order ODEs, with state vector ##\vec u = (x, y, \dot x, \dot y)##.

2. Using RK4 with step length ##h##, integrate until ##y<0##. When that happens, go back to the previous step (the last one with ##y \ge 0##) and rerun RK4 from there with a finer step length. The step length is halved until ##|y_{\text{zero crossing}}| < \mathcal O## and ##|x_{\text{zero crossing}}(h)-x_{\text{zero crossing}}(2h)| < \mathcal O##, where ##\mathcal O## is the error tolerance. This locates the zero crossings precisely. Running with a fine step length over the whole interval takes many minutes, whereas this approach takes a couple of seconds.

3. Once the zero crossing is known precisely, store the ##\vec u## values computed with the fine step length in step 2. Flip the sign of ##\dot y## in ##\vec u_{\text{zero crossing}}##, then restart RK4 from ##\vec u_{\text{zero crossing}}## with the flipped velocity.

4. Continue until ##x \ge 1.2##.

5. To find ##y## at ##x=x_0##, pick the ##n+1## computed points whose ##x##-values are closest to ##x_0## and interpolate. I estimate the interpolation error as ##|P_{n+1}(x_0)-P_{n}(x_0)|##, where ##P_n(x_0)## is the value at ##x_0## of the interpolating polynomial of degree ##n##, and I require ##|P_{n+1}(x_0)-P_{n}(x_0)|<\mathcal O##.
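Steps 2 to 4 can be sketched in Python as below. This is a minimal sketch under stated assumptions, not the MATLAB code from this post: time is the independent variable, the state is `u = (x, y, vx, vy)`, `f(u)` returns ##\vec u'##, and the names `rk4_step`, `locate_crossing` and `simulate` are hypothetical. The refinement restarts from the last state with ##y \ge 0## and halves the step until ##|y| < \mathcal O## at the first negative point and the crossing ##x## changes by less than ##\mathcal O## between step lengths ##h_k## and ##2h_k##.

```python
import math


def rk4_step(f, u, h):
    """One classical RK4 step for the autonomous system u' = f(u)."""
    k1 = f(u)
    k2 = f(tuple(ui + 0.5 * h * ki for ui, ki in zip(u, k1)))
    k3 = f(tuple(ui + 0.5 * h * ki for ui, ki in zip(u, k2)))
    k4 = f(tuple(ui + h * ki for ui, ki in zip(u, k3)))
    return tuple(ui + h / 6.0 * (a + 2 * b + 2 * c + d)
                 for ui, a, b, c, d in zip(u, k1, k2, k3, k4))


def locate_crossing(f, u_prev, h, tol, max_halvings=40):
    """Step 2: refine the first state with y < 0 by halving the step.

    u_prev is the last state with y >= 0. Returns the fine-step states up
    to and including the first one with y < 0, once |y| < tol there and
    its x differs by less than tol from the estimate with twice the step.
    """
    x_prev = rk4_step(f, u_prev, h)[0]      # crossing estimate at step h
    hk = h
    for _ in range(max_halvings):
        hk /= 2
        path, u = [], u_prev
        # Guard: the crossing should occur within about one coarse step.
        for _ in range(2 * math.ceil(h / hk) + 1):
            u = rk4_step(f, u, hk)
            path.append(u)
            if u[1] < 0:
                break
        else:
            raise RuntimeError("no zero crossing found with step %g" % hk)
        if abs(u[1]) < tol and abs(u[0] - x_prev) < tol:
            return path
        x_prev = u[0]
    raise RuntimeError("zero crossing not resolved to tolerance")


def simulate(f, u0, h, tol, x_end=1.2):
    """Steps 2-4: fixed-step RK4 with refined, reflected bounces."""
    traj, bounces, u = [u0], [], u0
    while u[0] < x_end:
        new = rk4_step(f, u, h)
        if new[1] < 0:                      # y went negative: refine
            path = locate_crossing(f, u, h, tol)
            x, y, vx, vy = path[-1]
            u = (x, y, vx, -vy)             # step 3: flip the sign of y-dot
            traj.extend(path[:-1] + [u])    # keep the fine-step values
            bounces.append(x)
        else:
            u = new
            traj.append(u)
    return traj, bounces
```

Drag-free free fall is a convenient check, since RK4 reproduces its quadratic trajectory exactly: `simulate(lambda u: (u[2], u[3], 0.0, -9.81), (-1.2, 0.3, 4.0, 0.0), 0.01, 1e-5)` should report a single bounce near ##x = -1.2 + 4\sqrt{0.6/9.81} \approx -0.2108##. Note that storing the fine-step path makes the trajectory list grow considerably at each bounce.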

______

The solution is plotted below

What I'm struggling with is estimating the error of the main RK4 integration. From the steps above I already have these error estimates:

$$\begin{cases}
E_{k\text{th zero crossing}} = |x_{\text{zero crossing}}(h)-x_{\text{zero crossing}}(2h)| \\
E_{\text{interpolation}} = |P_{n+1}(x_0)-P_{n}(x_0)|
\end{cases}$$
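The interpolation estimate ##E_{\text{interpolation}}## from step 5 can be sketched in Python as follows (the helper names `lagrange_eval` and `interp_with_error` are hypothetical). It evaluates the interpolating polynomial in Lagrange form directly at ##x_0##, first with the ##n+1## stored points closest to ##x_0## and then with ##n+2##. It assumes distinct ##x##-values and that all chosen points lie between the same two bounces, since ##y(x)## has a kink at each bounce.

```python
def lagrange_eval(xs, ys, x0):
    """Value at x0 of the polynomial through the points (xs[i], ys[i])."""
    total = 0.0
    for i, (xi, yi) in enumerate(zip(xs, ys)):
        weight = 1.0
        for j, xj in enumerate(xs):
            if j != i:
                weight *= (x0 - xj) / (xi - xj)
        total += yi * weight
    return total


def interp_with_error(xs, ys, x0, n):
    """Return P_n(x0) and the estimate |P_{n+1}(x0) - P_n(x0)|.

    P_n uses the n+1 samples whose x-values are closest to x0 and
    P_{n+1} the n+2 closest; the x-values must be distinct.
    """
    nearest = sorted(range(len(xs)), key=lambda i: abs(xs[i] - x0))

    def p(degree):
        idx = nearest[:degree + 1]
        return lagrange_eval([xs[i] for i in idx], [ys[i] for i in idx], x0)

    p_n = p(n)
    return p_n, abs(p(n + 1) - p_n)
```

For samples of a cubic, `n = 3` already reproduces the function, so the estimate is essentially zero; with `n = 2` it equals the true quadratic interpolation error.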

However, RK4 is used for the whole computation, not just for the zero crossings, and I have no error estimate for the rest of it.

TL;DR, here is my statement of the problem:

I know that the error of Runge-Kutta 4 (and many other methods) can be estimated from ##\text{values at step length } h - \text{values at step length } 2h## (Def. 1). But when I run my code with step lengths ##h## and ##2h##, the number of steps taken with ##h## is not twice the number taken with ##2h##, i.e. ##N_h = 2N_{2h}## (Eqn. 1) does not hold. I think this is because I change the step length near zero crossings (see step 2 of my algorithm).

Hence my question: how should I estimate the error of the main RK4 iterations in this case?

The problem is that the solution vectors don't have matching lengths. And even if Eqn. 1 held, it feels kind of funky to compute the error by subtracting the coarse solution from every second element of the fine-step solution.
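On a purely fixed-step grid, Def. 1 does line up: ##N## steps of length ##2h## give ##N+1## points, the ##2N## steps of length ##h## give ##2N+1## points, and fine point ##2i## lies at the same time as coarse point ##i##. Taking every second fine value is therefore a legitimate way to apply Def. 1 in that case. A minimal sketch (Python, hypothetical name `step_doubling_errors`), which also divides by ##2^p-1## as in Richardson's error estimate for a method of order ##p##:

```python
def step_doubling_errors(y_h, y_2h, p=4):
    """Pointwise Def. 1 error estimates on the coarse (step 2h) grid.

    Assumes both runs used fixed steps over the same interval, so the fine
    run has 2 * len(y_2h) - 1 points and y_h[2 * i] is at the same time as
    y_2h[i]. The factor 1 / (2**p - 1) is Richardson's scaling for a method
    of order p (p = 4 for RK4).
    """
    if len(y_h) != 2 * len(y_2h) - 1:
        raise ValueError("grids do not line up (variable step lengths?)")
    return [(y_h[2 * i] - y_c) / (2**p - 1) for i, y_c in enumerate(y_2h)]
```

With the refined steps near the bounces, the length check fails, which is exactly the mismatch described in the question.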

I have learnt about Richardson extrapolation and thought it might be viable here. But what should I apply it to? Every value computed with RK4? Only the points used in the interpolation near ##x = x_0##?
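One possible way to use Richardson extrapolation here, offered as a sketch rather than a settled answer, is to apply it to a single scalar output such as the final ##y(x_0)##, computed once by the whole pipeline with base step ##h## and once with ##2h##, so that no vectors need to line up. For a method of order ##p##, the error of the step-##h## result ##Q_h## is approximately ##(Q_h - Q_{2h})/(2^p-1)##, i.e. a division by 15 for RK4. The helper names below are hypothetical, and the toy problem ##y' = y## only demonstrates the estimate; applying it to the ball problem assumes the refinement near the bounces does not spoil the ##h^4## behaviour of the whole pipeline, which would need checking.

```python
import math


def richardson(q_h, q_2h, p=4):
    """Step-doubling estimate for a method of order p.

    Returns (estimated error of q_h, extrapolated value q_h + error).
    """
    err = (q_h - q_2h) / (2**p - 1)
    return err, q_h + err


def rk4_solve(f, y0, t_end, n_steps):
    """Integrate the scalar ODE y' = f(t, y) from t = 0 to t_end with RK4."""
    h = t_end / n_steps
    t, y = 0.0, y0
    for _ in range(n_steps):
        k1 = f(t, y)
        k2 = f(t + h / 2, y + h / 2 * k1)
        k3 = f(t + h / 2, y + h / 2 * k2)
        k4 = f(t + h, y + h * k3)
        y += h / 6 * (k1 + 2 * k2 + 2 * k3 + k4)
        t += h
    return y


# Toy check on y' = y, y(0) = 1, whose exact value at t = 1 is e.
q_h = rk4_solve(lambda t, y: y, 1.0, 1.0, 10)   # step h = 0.1
q_2h = rk4_solve(lambda t, y: y, 1.0, 1.0, 5)   # step 2h = 0.2
est, extrapolated = richardson(q_h, q_2h)
print(est, math.e - q_h)  # estimated vs. actual error of q_h
```

Here the estimate comes out at roughly ##1.9 \cdot 10^{-6}## against an actual error of roughly ##2.1 \cdot 10^{-6}##, and the extrapolated value is closer to ##e## than ##Q_h## itself.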

I hope I've outlined my problem and approach clearly enough to be understood.

If not, please let me know what's missing! (Let's hope I didn't forget anything critical, heh)


Don't have MATLAB, so I downloaded Octave and fought my way in. Both horrible and fascinating at the same time (for a 1980s Fortran 77 'expert'). The results are identical to what I already had in all digits, including the error estimates and the bouncing points.
