The new August 10, 2023 paper and its abstract:

The new paper doesn't delve in depth into the theoretical prediction issues even to the level addressed in today's live streamed presentation. It says only:

A comprehensive prediction for the Standard Model value of the muon magnetic anomaly was compiled most recently by the Muon g−2 Theory Initiative in 2020 [20], using results from [21–31]. The leading order hadronic contribution, known as hadronic vacuum polarization (HVP), was taken from e+e−→hadrons cross section measurements performed by multiple experiments. However, a recent lattice calculation of HVP by the BMW collaboration [30] shows significant tension with the e+e− data. Also, a new preliminary measurement of the e+e−→π+π− cross section from the CMD-3 experiment [32] disagrees significantly with all other e+e− data. There are ongoing efforts to clarify the current theoretical situation [33]. While a comparison between the Fermilab result from Run-1/2/3 presented here, aµ(FNAL), and the 2020 prediction yields a discrepancy of 5.0σ, an updated prediction considering all available data will likely yield a smaller and less significant discrepancy.

The CMD-3 paper is:

F.V. Ignatov et al. (CMD-3 Collaboration), Measurement of the e+e−→π+π− cross section from threshold to 1.2 GeV with the CMD-3 detector (2023), arXiv:2302.08834.

The new experimental result is 5 sigma from the partially data-based 2020 White Paper Standard Model prediction. It is much closer to (consistent at the 2 sigma level with) the 2020 BMW lattice QCD based prediction, which has been corroborated by essentially all other partial lattice QCD calculations since the last announcement. It is also much closer to a prediction made using the subset of the data in the partially data-based prediction that is closest to the experimental result.

The 2020 White Paper is:

T. Aoyama et al., The anomalous magnetic moment of the muon in the Standard Model, Phys. Rep. 887, 1 (2020).

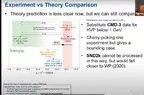

This is shown in the YouTube screen shot from their presentation this morning (below):

As the screenshot makes visually very clear, there is now much more uncertainty in the theoretically calculated Standard Model predicted value of muon g-2 than there is in the experimental measurement itself.

For those of you who aren't visual learners (values of aµ in units of 10^-11, with the uncertainty in the final digits shown in parentheses):

World Experimental Average (2023): 116,592,059(22)

Fermilab Run 1+2+3 data (2023): 116,592,055(24)

Fermilab Run 2+3 data (2023): 116,592,057(25)

Combined measurement (2021): 116,592,061(41)

Fermilab Run 1 data (2021): 116,592,040(54)

Brookhaven's E821 (2006): 116,592,089(63)

Theory Initiative calculation: 116,591,810(43)
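The "sigma" figures quoted above can be roughly reproduced from this list. The following is a minimal sketch, assuming the experimental and theoretical uncertainties are independent and can be combined in quadrature; the function name `tension_sigma` is made up for illustration, and the inputs are the rounded values listed above.

```python
import math

# (central value, uncertainty) in units of 1e-11, copied from the list above.
measurements = {
    "World Experimental Average (2023)": (116_592_059, 22),
    "Fermilab Run 1+2+3 data (2023)": (116_592_055, 24),
}
theory = (116_591_810, 43)  # Theory Initiative calculation (2020 White Paper)


def tension_sigma(a, b):
    """Difference between two results in units of their combined uncertainty.

    Assumes the two uncertainties are independent (quadrature sum).
    """
    diff = a[0] - b[0]
    combined = math.hypot(a[1], b[1])
    return diff / combined


for label, value in measurements.items():
    print(f"{label}: {tension_sigma(value, theory):.2f} sigma")
```

With these rounded inputs the Fermilab Run 1+2+3 value comes out at about 5.0 sigma from the Theory Initiative calculation, in line with the figure quoted from the paper, and the world average comes out slightly higher.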

It is likely that the stated uncertainty in the 2020 White Paper result is too low, quite possibly because of understated systematic error in some of the underlying electron-positron collision data upon which it relies.

In short, there is no reason to doubt that the Fermilab measurement of muon g-2 is every bit as solid as claimed, but the various calculations of the predicted Standard Model value of the QCD part of muon g-2 are in strong tension with each other.

It appears that the correct Standard Model prediction calculation is closer to the experimental result than the 2020 White Paper calculation (which mixed lattice QCD for parts of the calculation and experimental data in lieu of QCD calculations for other parts of the calculation), although the exact source of the issue is only starting to be pinned down.

Side Point: The Hadronic Light By Light Calculation

The hadronic QCD component is the sum of two parts, the hadronic vacuum polarization (HVP) and the hadronic light by light (HLbL) components. In the Theory Initiative analysis the QCD amount is 6937(44) × 10^-11, which is broken out as HVP = 6845(40), a 0.6% relative error, and HLbL = 92(18), a 20% relative error.
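As a quick arithmetic check on this breakdown, here is a small sketch that adds the two components and combines their uncertainties in quadrature. It assumes the two uncertainties are independent and uses HLbL = 92(18), the value that matches the 6937 total and the 92(18) figure quoted later in this section.

```python
import math

# (central value, uncertainty) in units of 1e-11, Theory Initiative values.
hvp = (6845, 40)   # hadronic vacuum polarization
hlbl = (92, 18)    # hadronic light by light

# Central values add; independent uncertainties add in quadrature (assumption).
total_central = hvp[0] + hlbl[0]
total_uncertainty = math.hypot(hvp[1], hlbl[1])

# Relative error of each component.
rel_hvp = hvp[1] / hvp[0]
rel_hlbl = hlbl[1] / hlbl[0]

print(f"QCD total: {total_central}({total_uncertainty:.0f})")
print(f"HVP relative error:  {rel_hvp:.1%}")
print(f"HLbL relative error: {rel_hlbl:.1%}")
```

The sum reproduces 6937(44), and the relative errors come out at roughly 0.6% and 20%. HLbL is by far the less precisely known piece in relative terms, but HVP dominates the absolute uncertainty.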

In turn, the e+e−→π+π− cross section portion of the HVP contribution to muon g-2, which is the main piece for which the Theory Initiative relied upon experimental data rather than first-principles calculations, accounts for 5060(34) × 10^-11 out of the total leading-order value aµ(HVP) = 6931(40) × 10^-11, and is the source of most of the uncertainty in the Theory Initiative prediction.
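To put numbers on "most of the uncertainty", the sketch below compares the π+π− channel with the leading-order HVP total. Treating the π+π− uncertainty as independent of the uncertainty in the remaining channels is a simplifying assumption made here for illustration; the actual analysis includes correlations.

```python
import math

# (central value, uncertainty) in units of 1e-11, as quoted above.
pipi = (5060, 34)     # e+e- -> pi+pi- channel
lo_hvp = (6931, 40)   # leading-order HVP total

# Fraction of the central value that comes from the pi+pi- channel.
value_share = pipi[0] / lo_hvp[0]

# Fraction of the variance (uncertainty squared) from the pi+pi- channel,
# assuming it is uncorrelated with the other channels.
variance_share = pipi[1] ** 2 / lo_hvp[1] ** 2

# Uncertainty left over for everything else under the same assumption.
other_uncertainty = math.sqrt(lo_hvp[1] ** 2 - pipi[1] ** 2)

print(f"pi+pi- share of the value:    {value_share:.0%}")
print(f"pi+pi- share of the variance: {variance_share:.0%}")
print(f"uncertainty from other channels: {other_uncertainty:.0f}")
```

Under that assumption the π+π− channel supplies a bit under three quarters of both the value and the variance of the leading-order HVP term.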

The presentation doesn't note it, but there was also an adjustment to the hadronic light-by-light calculation, announced on the same day as the previous data announcement, that brings the prediction closer to the experimental result. (HLbL is the smaller of the two QCD contributions to the total value of muon g-2 and wasn't included in the BMW calculation.) The new calculation increases the contribution from that component from 92(18) × 10^-11 to 106.8(14.7) × 10^-11.

As the precision of the measurements and the calculations of the Standard Model prediction improves, a 14.8 × 10^-11 discrepancy in the hadronic light by light portion of the calculation becomes more material.
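For a sense of scale, this sketch compares the size of the HLbL shift with the uncertainties quoted earlier for the world experimental average, 22, and the Theory Initiative calculation, 43, all in units of 10^-11. The variable names are illustrative only.

```python
# Values in units of 1e-11, taken from the text above.
hlbl_old = 92.0          # earlier HLbL central value
hlbl_new = 106.8         # newer HLbL central value
experiment_unc = 22.0    # uncertainty of the 2023 world experimental average
theory_unc = 43.0        # uncertainty of the Theory Initiative calculation

# Size of the shift (rounded to avoid floating point noise in the printout).
shift = round(hlbl_new - hlbl_old, 1)

# Express the shift as a fraction of each uncertainty.
shift_vs_experiment = shift / experiment_unc
shift_vs_theory = shift / theory_unc

print(f"HLbL shift: {shift} x 10^-11")
print(f"shift / experimental uncertainty: {shift_vs_experiment:.0%}")
print(f"shift / theory uncertainty:       {shift_vs_theory:.0%}")
```

The shift is about two thirds of the current experimental uncertainty and about a third of the Theory Initiative uncertainty, so it is no longer negligible at this level of precision.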

Why Care?

Muon g-2 is an experimental observable that implicates all three Standard Model forces and serves as a global test of the consistency of the Standard Model with experiment.

If there really were a five sigma discrepancy between the Standard Model prediction and the experimental result, this would imply new physics at fairly modest energies that could probably be reached at next generation colliders (since muon g-2 is an observable that is more sensitive to low energy new physics than high energy new physics).

On the other hand, if the Standard Model prediction and the experimental result are actually consistent with each other, then low energy new non-gravitational physics is strongly disfavored at foreseeable new high energy physics experiments, except in very specific forms that cancel out in a muon g-2 calculation.