Linear algebra Definition and 999 Threads

-

Linear Algebra - LU Factorization

Hello all, I have a problem related to LU Factorization with my work following it. Would anyone be willing to provide feedback on if my work is a correct approach/answer and help if it needs more work? Thanks in advance. Problem: Work:- ashah99

- Thread

- Replies: 1

- Forum: Calculus and Beyond Homework Help

-

Proving statements about matrices | Linear Algebra

Hi guys! :) I was solving some linear algebra true/false (i.e. prove the statement or provide a counterexample) questions and got stuck in the following a) There is no ##A \in \Bbb R^{3 \times 3}## such that ##A^2 = -\Bbb I_3## (typo corrected) I think this one is true, as there is no squared...- JD_PM

- Thread

- Replies: 25

- Forum: Calculus and Beyond Homework Help

-

Lecture 5 - Science, Toys, and the PCA

We open this lecture with a discussion of how advancements in science and technology come from a consumer demand for better toys. We also give an introduction to Principal Component Analysis (PCA). We talk about how to arrange data, shift it, and then find the principal components of our dataset.- AcademicOverAnalysis

- Media item

- Data science Linear algebra Pca

- Comments: 0

- Category: Misc Math

-

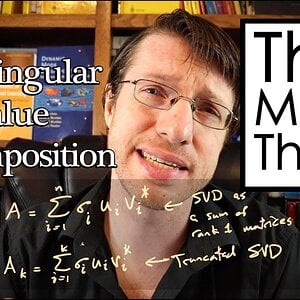

Lecture 3 - How SVDs are used in Facial Recognition Software

This video builds on the SVD concepts of the previous videos, where I talk about the algorithm from the paper Eigenfaces for Recognition. These tools are used everywhere from law enforcement (such as tracking down the rioters at the Capitol) to unlocking your cell phone.- AcademicOverAnalysis

- Media item

- Data science Linear algebra Svd

- Comments: 0

- Category: Misc Math

-

Lecture 2 - Understanding Everything from Data - The SVD

In this video I give an introduction to the singular value decomposition, one of the key tools for learning from data. The SVD allows us to assemble data into a matrix, and then to find the key or "principal" components of the data, which will allow us to represent the entire data set with only a few- AcademicOverAnalysis

- Media item

- Data science Linear algebra Pca Svd

- Comments: 0

- Category: Misc Math

-

Riesz Basis Problem: Definition & Problem Statement

The reference definition and problem statement are shown below with my work shown following right after. I would like to know if I am approaching this correctly, and if not, could guidance be provided? Not very sure. I'm not proficient at formatting equations, so I'm providing snippets, my...- ashah99

- Thread

- Replies: 10

- Forum: Calculus and Beyond Homework Help

-

Orthogonal Projection Problems?

Summary:: Hello all, I am hoping for guidance on these linear algebra problems. For the first one, I'm having issues starting...does the orthogonality principle apply here? For the second one, is the intent to find v such that v(transpose)u = 0? So, could v = [3, 1, 0](transpose) work?- ashah99

- Thread

- Replies: 5

- Forum: Calculus and Beyond Homework Help

-

I How can the Lp Norm be used to prove inequalities?

- ashah99

- Thread

-

- Tags

- Linear algebra Norm Proof Proofs

- Replies: 9

- Forum: Linear and Abstract Algebra

-

Determining value of r that makes the matrix linearly dependent

For problem (a), all real numbers of value r will make the system linearly independent, as the system contains more vectors than entries, simply by inspection. As for problem (b), no value of r can make the system linearly dependent, by inspection. I tried reducing the matrix into reduced echelon...- Sunwoo Bae

- Thread

- Replies: 4

- Forum: Calculus and Beyond Homework Help

-

Diagonalizing a matrix given the eigenvalues

The following matrix is given. Since the diagonal matrix can be written as C= PDP^-1, I need to determine P, D, and P^-1. The answer sheet reads that the diagonal matrix D is as follows: I understand that a diagonal matrix contains the eigenvalues in its diagonal orientation and that there must...- Sunwoo Bae

- Thread

- Replies: 8

- Forum: Calculus and Beyond Homework Help

-

Linear Algebra What are good books for a third course in Linear Algebra?

What are suitable books in linear algebra for a third course for self-study after reading Linear Algebra Done Right by Axler and Algebra by Artin?- fxdung

- Thread

- Replies: 26

- Forum: Science and Math Textbooks

-

Linear Algebra uniqueness of solution

My guess is that since there are no rows of the form [0 0 0 0 b], the system is consistent (the system has a solution). As the first column is all 0s, x1 would be a free variable. Because a system with a free variable has infinitely many solutions, the solution is not unique. In this way, the matrix is...- Sunwoo Bae

- Thread

- Replies: 6

- Forum: Calculus and Beyond Homework Help

-

Calculus What are some affordable textbooks for learning math concepts for physics?

Hey guys, so I was on this thread on tips for self-studying physics as a high schooler with the aim to become a theoretical (quantum) physicist in the future. I myself am a 15 year old who wants to become a theoretical physicist in the future. A lot of people in the thread were saying that...- AdvaitDhingra

- Thread

- Replies: 8

- Forum: Science and Math Textbooks

-

Linear Algebra What are good second course books in linear algebra for self-study?

What are the best second-course (undergraduate) books in linear algebra for self-study? I have already read Introduction to Linear Algebra by Lang.- fxdung

- Thread

- Replies: 1

- Forum: Science and Math Textbooks

-

I Normalization of an Eigenvector in a Matrix

- Dwye

- Thread

- Replies: 3

- Forum: Quantum Physics

-

Help with linear algebra: vectorspace and subspace

So the reason why I'm struggling with both of the problems is because I find vector spaces and subspaces hard to understand. I have read a lot, but I'm still confused about these tasks. 1. So for problem 1, I can first tell you what I know about subspaces. I understand that a subspace is a...- appletree23

- Thread

- Replies: 15

- Forum: Calculus and Beyond Homework Help

-

Subspace Help: Properties & Verifying Examples

Summary:: Properties of subspaces and verifying examples Hi, My textbook gives some examples relating to subspaces but I am having trouble intuiting them. Could someone please help me understand the five points they are attempting to convey here (see screenshot).- glauss

- Thread

-

- Tags

- Linear algebra Subspaces

- Replies: 35

- Forum: Precalculus Mathematics Homework Help

-

Prerequisites for the textbook "Linear Algebra" (2nd Edition)?

Summary:: What prerequisites are required in order to learn from the textbook "Linear Algebra" (2nd Edition) by Kenneth M. Hoffman and Ray Kunze? Sorry if this is the wrong section to ask what the title and subject state. I read some of chapter 1 already, and that all...- DartomicTech

- Thread

- Replies: 4

- Forum: Science and Math Textbooks

-

System of equations and solving for an unknown

The first thing I do is make the augmented matrix: Then I try to rearrange it into row echelon form. But maybe that's what confuses me the most. I have tried different ways of doing it, for example changing the order of the equations. I always end up with a ##k+number## expression in...- Kolika28

- Thread

- Replies: 7

- Forum: Calculus and Beyond Homework Help

-

Linear algebra projections commutativity

Textbook answer: "If P1P2 = P2P1 then S is contained in T or T is contained in S." My query: If ##P_1 = \begin{pmatrix} 1 & 0 & 0 \\ 0 & 0 & 0 \\ 0 & 0 & 0 \end{pmatrix}## and ##P_2 = \begin{pmatrix} 0 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 0 \end{pmatrix}## as far as I...- Appleton2

- Thread

- Replies: 8

- Forum: Calculus and Beyond Homework Help
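
The two matrices in the query can be checked directly. The following is a minimal sketch, assuming S and T denote the ranges of P1 and P2 (the snippet is truncated, so that reading is an assumption); the helper names `matmul` and `matvec` are hypothetical.

```python
def matmul(a, b):
    """Multiply two square matrices given as lists of rows."""
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


def matvec(a, v):
    """Apply a square matrix (list of rows) to a vector (list)."""
    return [sum(row[k] * v[k] for k in range(len(v))) for row in a]


P1 = [[1, 0, 0], [0, 0, 0], [0, 0, 0]]  # projection onto span{e1}
P2 = [[0, 0, 0], [0, 1, 0], [0, 0, 0]]  # projection onto span{e2}

P1P2 = matmul(P1, P2)
P2P1 = matmul(P2, P1)
commute = P1P2 == P2P1

e1 = [1, 0, 0]  # spans the range of P1
e2 = [0, 1, 0]  # spans the range of P2

# range(P1) lies inside range(P2) only if P2 fixes e1, and vice versa.
S_inside_T = matvec(P2, e1) == e1
T_inside_S = matvec(P1, e2) == e2

print(commute, S_inside_T, T_inside_S)
```

Under that assumption, both products are the zero matrix, so the projections commute even though neither range contains the other.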

-

Am I using quotient spaces correctly in this linear algebra proof?

Assume that ##X/Y## is defined. Since ##\dim Y = \dim X##, it follows that ##\dim {X/Y}=0## and that ##X/Y=\{0\}##. Suppose that ##Y## is a proper subspace of ##X##. Then there is an ##x\in X## such that ##x\notin Y##. Let us consider the equivalence class: ##\{x\}_Y=\{x_0\in...- Eclair_de_XII

- Thread

- Replies: 2

- Forum: Calculus and Beyond Homework Help

-

Confused with this proof for the Cauchy Schwarz inequality

I'm confused, as finding the minimum value of lambda is an important part of the proof, but it isn't clear to me that the critical point is a minimum.- jaded2112

- Thread

- Replies: 11

- Forum: Introductory Physics Homework Help

-

Linear Algebra I need textbook recommendations to learn linear algebra by myself

Hi PF community, recently I learned about calculus in one and several variables, so now I'd like to study linear algebra by myself at an undergraduate level. In order to do that, I need some textbook recommendations. I'll be waiting for your recommendations :).- Santiago24

- Thread

- Replies: 11

- Forum: Science and Math Textbooks

-

Change of basis to express a matrix relative to a set of basis matrices

Hello, I am studying change of basis in linear algebra and I have trouble figuring what my result should look like. From what I understand, I need to express the "coordinates" of matrix ##A## with respect to the basis given in ##S##, and I can easily see that ##A = -A_1 + A_2 - A_3 + 3A_4##...- fatpotato

- Thread

- Replies: 4

- Forum: Calculus and Beyond Homework Help

-

I Invertible Polynomials: P2 (R) → P2 (R)

Let T: P2 (R) → P2 (R) be the linear map defined by T(p(x)) = p''(x) - 5p'(x). Is T invertible? P2 (R) is the vector space of polynomials of degree 2 or less.- username123456789

- Thread

- Replies: 1

- Forum: Linear and Abstract Algebra
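
One way to explore the question is to apply T to the monomial basis 1, x, x^2. This is a minimal sketch, assuming polynomials are stored as coefficient lists [c0, c1, c2]; the function names are hypothetical and this is not the thread's own solution.

```python
def derivative(coeffs):
    """Differentiate c0 + c1*x + c2*x^2, keeping the list at length 3."""
    d = [k * coeffs[k] for k in range(1, len(coeffs))]
    return d + [0] * (len(coeffs) - len(d))


def T(coeffs):
    """T(p) = p'' - 5 p' on coefficient lists."""
    first = derivative(coeffs)
    second = derivative(first)
    return [s - 5 * f for s, f in zip(second, first)]


basis = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]  # 1, x, x^2
images = [T(b) for b in basis]

# A nonzero polynomial mapped to zero means T is not injective.
kernel_is_nontrivial = any(img == [0, 0, 0] for img in images)

print(images, kernel_is_nontrivial)
```

The constant polynomial 1 is sent to 0, so T has a nontrivial kernel and cannot be invertible; equivalently, the matrix whose columns are `images` has a zero column.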

-

How Do Vector Spaces of Linear Maps Differ from Standard Vector Spaces?

Solution 1. Based on my analysis, an element of ##V## is a map from the set of numbers ##\{1, 2, ..., n\}## to, say, the real numbers (assuming ##F = \mathbb{R}##), so that an example element of ##F## is ##x(1)##. An example element of the vector space ##F^n## is ##(x_1, x_2, ..., x_n)##. From...- shinobi20

- Thread

- Replies: 9

- Forum: Calculus and Beyond Homework Help

-

I Zero-point energy of the harmonic oscillator

First time posting in this part of the website, I apologize in advance if my formatting is off. This isn't quite a homework question so much as me trying to reason through the work in a way that quickly makes sense in my head. I am posting in hopes that someone can tell me if my reasoning is...- JTFreitas

- Thread

- Replies: 9

- Forum: Quantum Physics

-

Linear algebra inner products, self adjoint operator,unitary operation

b) c and d): In c) I say that ##L_h## is only self adjoint if the imaginary part of h is 0, is this correct? e) Here I could only come up with eigenvalues when h is some constant, say C; then C is an eigenvalue. But I can't find two. Otherwise, does b-d above look correct? Thanks in advance!- Karl Karlsson

- Thread

- Replies: 9

- Forum: Calculus and Beyond Homework Help

-

I Proving linear independence of two functions in a vector space

Hello, I am doing a vector space exercise involving functions using the free linear algebra book from Jim Hefferon (available for free at http://joshua.smcvt.edu/linearalgebra/book.pdf) and I have trouble with the author's solution for problem II.1.24 (a) of page 117, which goes like this ...- fatpotato

- Thread

- Replies: 5

- Forum: Linear and Abstract Algebra

-

Show that V is an internal direct sum of the eigenspaces

In an earlier problem I was tasked to do the same but with V = ##M_{2,2}(\mathbb R)##. Then I represented each matrix in V as a vector ##(a_{11}, a_{12}, a_{21}, a_{22})## and the operation ##L(A)## could be represented as ##L(A) = (a_{11}, a_{21}, a_{12}, a_{22})##. This method doesn't really...- Karl Karlsson

- Thread

- Replies: 3

- Forum: Calculus and Beyond Homework Help

-

What can we say about the eigenvalues if ##L^2=I##?

This was a problem that came up in my linear algebra course so I assume the operation L is linear. Or maybe that could be derived from given information. I don't know how though. I don't quite understand how L could be represented by anything except a scalar multiplication if L...- Karl Karlsson

- Thread

- Replies: 12

- Forum: Calculus and Beyond Homework Help

-

Linear algebra invertible transformation of coordinates

##A^{x'} = T(A^{x})##, where T is a linear transformation. In that way maybe I could express the transformation as a change-of-basis matrix from x to x': ##A^{x} = T_{mn}(A^{x'})##. Under such conditions, I could say ##\det T_{mn} \neq 0##. But how to deal with, for example, ##(x,y) \to (e^x,e^y)##?- LCSphysicist

- Thread

- Replies: 2

- Forum: Calculus and Beyond Homework Help

-

I Show that ##\mathbb{C}## can be obtained as 2 × 2 matrices

I have this problem in my book: Show that ##\mathbb{C}## can be obtained as 2 × 2 matrices with coefficients in ##\mathbb{R}## using an arbitrary 2 × 2 matrix ##J## with a characteristic polynomial that does not contain real zeros. In the picture below is the given solution for this: I...- Karl Karlsson

- Thread

- Replies: 14

- Forum: Linear and Abstract Algebra

-

I Finite fields, irreducible polynomial and minimal polynomial theorem

I thought I understood the theorem below: i) If A is a matrix in ##M_n(k)## and the minimal polynomial of A is irreducible, then ##K = \{p(A): p (x) \in k [x]\}## is a finite field. Then this example came up: The polynomial ##q(x) = x^2 + 1## is irreducible over the real numbers and the matrix...- Karl Karlsson

- Thread

- Replies: 6

- Forum: Linear and Abstract Algebra

-

MHB Resource for learning linear algebra

I want to take some courses that involve heavy math, so I have been learning maths on the Khan Academy site: precalculus, calculus, statistics etc. But one fundamental area of maths the Khan Academy site doesn't have is a course on linear algebra. I really need to learn and use linear algebra in...- Emekadavid

- Thread

- Replies: 5

- Forum: Linear and Abstract Algebra

-

I Why does A squared not equal A times A when k = Z2?

In my book no explanation for this concept is given and I can't find anything about it when I am searching. One example that was given was: Let $$A=\begin{pmatrix} 1 & 1 \\ 1 & 0 \end{pmatrix}$$ with ##k=\mathbb{Z}_2## I think k is the set of scalars for a vector that can be multiplied with...- Karl Karlsson

- Thread

-

- Tags

- Linear algebra Matrices

- Replies: 12

- Forum: Linear and Abstract Algebra
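
For readers unfamiliar with matrices over ##\mathbb{Z}_2##, here is a minimal sketch of how the ordinary matrix product of the example matrix behaves when every entry is reduced mod 2. It only illustrates the arithmetic; since the snippet is truncated, it makes no claim about what the book's notation means. The helper name `matmul_mod` is hypothetical.

```python
def matmul_mod(a, b, p):
    """Multiply two square matrices and reduce every entry modulo p."""
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) % p for j in range(n)]
            for i in range(n)]


A = [[1, 1], [1, 0]]

A_times_A = matmul_mod(A, A, 2)          # entries computed in Z_2
A_cubed = matmul_mod(A_times_A, A, 2)

print(A_times_A)
print(A_cubed)
```

Over the integers the top-left entry of the product would be 2; in ##\mathbb{Z}_2## it becomes 0, and multiplying by A once more gives the identity matrix.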

-

I Trying to get a better understanding of the quotient V/U in linear algebra

Hi! I want to check if I have understood concepts regarding the quotient V/U correctly or not. I have read definitions that ##V/U = \{v + U : v ∈ V\}##. U is a subspace of V. But v + U is also defined as the set ##\{v + u : u ∈ U\}##. So V/U is a set of sets; is this the correct understanding...- Karl Karlsson

- Thread

- Replies: 10

- Forum: Linear and Abstract Algebra

-

Linear algebra, find a basis for the quotient space

Let V = C[x] be the vector space of all polynomials in x with complex coefficients and let ##W = \{p(x) ∈ V: p (1) = p (−1) = 0\}##. Determine a basis for V/W. The solution of this problem that I found did the following: Why do they choose the basis to be {1+W, x + W} at the end? I mean since...- Karl Karlsson

- Thread

- Replies: 10

- Forum: Calculus and Beyond Homework Help

-

I Properties of a unitary matrix

So let's say that we have some unitary matrix, ##S##. Let that unitary matrix be the scattering matrix in quantum mechanics or the "S-matrix". Now we all know that it can be defined in the following way: $$\psi(x) = Ae^{ipx} + Be^{-ipx}, x \ll 0$$ and $$ \psi(x) = Ce^{ipx} + De^{-ipx}$$. Now, A and...- JHansen

- Thread

- Replies: 3

- Forum: Quantum Physics

-

MHB Connecting linear algebra concepts to groups

The options are: rank(B)+null(B)=n; tr(ABA^{−1})=tr(B); det(AB)=det(A)det(B). I'm thinking that since it's invertible, I would focus on the determinant =/= 0. I believe the first option is out, because null (B) would be 0 which won't be helpful. The second option makes the point that AA^{−1} is I...- lemonthree

- Thread

- Replies: 6

- Forum: Linear and Abstract Algebra

-

My first proof ever - Linear algebra

First, a little context. It's been a while since I last posted here. I am a chemical engineer who is currently preparing for grad school, and I've been reviewing linear algebra and multivariable calculus for the last couple of months. I have always been successful at math (at least in the...- MexChemE

- Thread

- Replies: 4

- Forum: Precalculus Mathematics Homework Help

-

Other Which Introductory Linear Algebra Book Is Best for Aspiring Engineers?

Hello I am looking for an introductory linear algebra book. I attend university next year so I want to prepare and I want to become an engineer. I have a good background in the prerequisites, except I don't know anything about matrices or determinants. I am looking for the more application side...- user10921

- Thread

- Replies: 5

- Forum: Science and Math Textbooks

-

Introductory Linear Algebra Texts

I am currently enrolled in Multivariate Calculus and am looking to build up a solid base of mathematics for the undergraduate physics curriculum. I am looking for a linear algebra book that will aid me in my quest. I currently own Axler's Linear Algebra Done Right, but I fear it is too...- Demandish

- Thread

- Replies: 1

- Forum: Science and Math Textbooks

-

Setting Free variables when finding eigenvectors

Upon finding the eigenvalues and setting up the equations for eigenvectors, I set up the following equations. So I took b as a free variable to solve the equation in the following way. But I also realized that it would be possible to take a as a free variable, so I tried taking a as a free...- Sunwoo Bae

- Thread

- Replies: 1

- Forum: Calculus and Beyond Homework Help

-

Solving a Problem in My Assignment: X1, X2, and X3

This is just a small part of a question I have in my assignment and I'm not sure how to solve it, nothing in my eBook or our presentation slides hints at a similar problem, what I tried was I noticed that X1 and X2 have the difference of (3,3,3) and I assume either X3 = (3,3,3) or X3 = (7,8,9)...- Soma

- Thread

-

- Tags

- Assignment Linear algebra

- Replies: 2

- Forum: Precalculus Mathematics Homework Help

-

Matrix concept Questions (invertibility, det, linear dependence, span)

I have trouble showing proofs for matrix problems. I would like to know how "A is invertible -> det(A) is not 0 -> the columns of A are linearly independent -> the columns of A span the space" holds for a square matrix A. It would be great if you can show how one leads to another with examples! :) Thanks for helping...- Sunwoo Bae

- Thread

- Replies: 4

- Forum: Calculus and Beyond Homework Help

-

Introduction to Linear Algebra: Solving Real-World Problems

Summary:: Linear algebra 1. Let a be a fixed vector of the Euclidean space E and d a fixed real number. Is the set of all vectors x from E for which (x, a) = d a linear subspace of E? 2. Let A be an n×n matrix that is not degenerate. Prove that the characteristic polynomials f (λ) of the matrix A...- xidios

- Thread

- Replies: 10

- Forum: Calculus and Beyond Homework Help

-

I Explanation of a Line of a proof in Axler Linear Algebra Done Right 3r

Was wondering if anyone here could help me with an explanation as to how Axler arrived at a particular step in a proof. These are the relevant definitions listed in the book: Definition of Matrix of a Linear Map, M(T): Suppose ##T∈L(V,W)## and ##v_1,...,v_n## is a basis of V and ##w_1...- MidgetDwarf

- Thread

- Replies: 4

- Forum: Linear and Abstract Algebra

-

MHB Is $2 + 8\sqrt{-5}$ a Unit or Irreducible in $\mathbb{Z} + \mathbb{Z}\sqrt{-5}$?

Determine whether $2+8{\sqrt{-5}}$ is a unit, and whether it is irreducible, in $\mathbb Z+\mathbb Z{\sqrt{-5}}$.- abs1

- Thread

- Replies: 5

- Forum: Linear and Abstract Algebra
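
A common tool for questions like this one is the norm $N(a+b\sqrt{-5}) = a^2 + 5b^2$, which is multiplicative and equals 1 exactly on the units $\pm 1$. The following is a minimal sketch, assuming elements are stored as integer pairs (a, b); the helper names and the particular factorization tried are illustrative and not taken from the thread.

```python
def norm(x):
    """Norm of a + b*sqrt(-5), stored as the pair (a, b)."""
    a, b = x
    return a * a + 5 * b * b


def mul(x, y):
    """Product of two elements of Z + Z*sqrt(-5) stored as pairs."""
    a, b = x
    c, d = y
    return (a * c - 5 * b * d, a * d + b * c)


alpha = (2, 8)      # 2 + 8*sqrt(-5)
factor1 = (2, 0)    # 2
factor2 = (1, 4)    # 1 + 4*sqrt(-5)

is_unit = norm(alpha) == 1
# A product of two non-units (norm greater than 1) shows alpha is reducible.
is_reducible = (mul(factor1, factor2) == alpha
                and norm(factor1) > 1 and norm(factor2) > 1)

print(norm(alpha), is_unit, is_reducible)
```

Here the norm is 324, so the element is not a unit, and the factorization 2 · (1 + 4√-5) into two non-units shows it is not irreducible either.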

-

MHB Introduction to linear algebra

Prove that U(Z + Zw) = {+1, -1, +w, -w, +w^2, -w^2}.- abs1

- Thread

- Replies: 3

- Forum: General Math