Devin-M


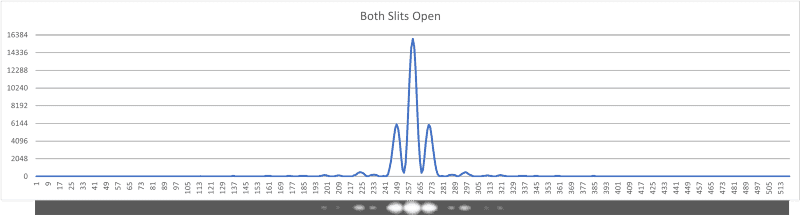

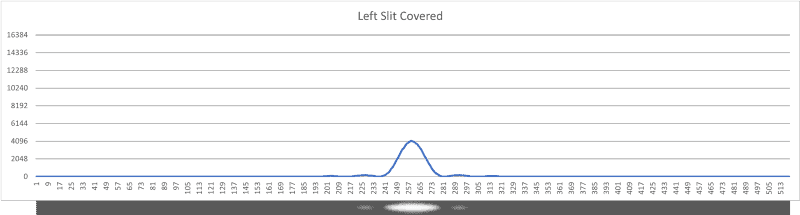

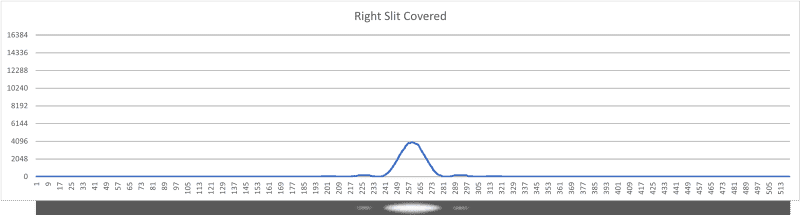

collinsmark said: Here are the resulting plots:

Figure 8: Both slits open plot.

Figure 9: Left slit covered plot.

Figure 10: Right slit covered plot.

The shapes of the diffraction/interference patterns also agree with theory.

Notice the central peak of the both slits open plot is interestingly 4 times as high as either single slit plot.

So adding the second slit doubles the total number of photon detections, with roughly 4x the intensity in certain places and significantly reduced intensity in others. That's consistent with conservation of energy, but how do you rule out destruction of energy in some places and creation of energy in others, proportional to the square of the change in field strength (i.e., 2 overlapping constructive waves but 2^2 detected photons in those regions)?
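To put numbers on the "double the total, 4x at the peak" bookkeeping, here is a rough sketch using the textbook idealized far-field (Fraunhofer) pattern rather than collinsmark's measured data. The slit spacing ratio `RATIO`, the integration range `U_MAX`, and the helper names are all my own assumptions for illustration only.

```python
import math

def single_slit(u):
    """Idealized single-slit intensity, peak normalized to 1.
    u = pi * a * sin(theta) / wavelength, with a the slit width."""
    return 1.0 if u == 0 else (math.sin(u) / u) ** 2

def double_slit(u, ratio):
    """Two identical slits with separation d = ratio * a.
    The two fields are added first and then squared, which gives
    4 * (single-slit envelope) * cos^2 fringes."""
    return 4.0 * single_slit(u) * math.cos(ratio * u) ** 2

def total(f, lo, hi, n=200_001):
    """Trapezoid-rule integral of f over [lo, hi] (total detected light)."""
    h = (hi - lo) / (n - 1)
    s = 0.5 * (f(lo) + f(hi))
    for i in range(1, n - 1):
        s += f(lo + i * h)
    return s * h

RATIO = 5.0            # assumed d / a, not taken from the experiment
U_MAX = 40 * math.pi   # assumed integration range across the screen

one = total(single_slit, -U_MAX, U_MAX)
two = total(lambda u: double_slit(u, RATIO), -U_MAX, U_MAX)

peak_ratio = double_slit(0.0, RATIO) / single_slit(0.0)
total_ratio = two / one
print("peak ratio:", peak_ratio)
print("total ratio:", total_ratio)
```

In this idealized model the central peak ratio is 4 while the ratio of the integrated totals comes out close to 2, because the cos^2 fringes average to 1/2 across the screen. That only shows the redistribution picture is self-consistent; it doesn't by itself answer the question of how to rule out the alternative.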

To further illustrate the question posed, at 2:38 in the video below, when he uncovers one half of the interferometer, doubling the light, the detection screen goes completely dark: